In iOS 13, Apple applies Machine Learning to find cats and dogs

In perhaps the cutest application of machine learning yet, Apple has included algorithms in its latest computer vision framework to enable iPhone and iPad apps to detect your furry friends in images.

VNAnimalDetectorDog knows this is a good doggo

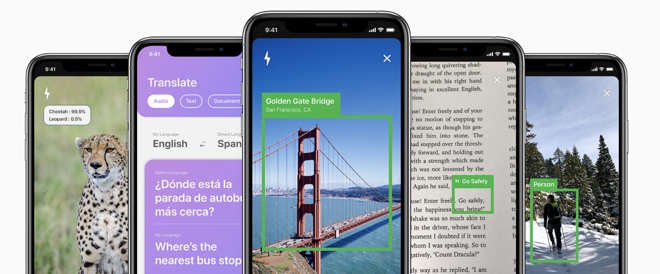

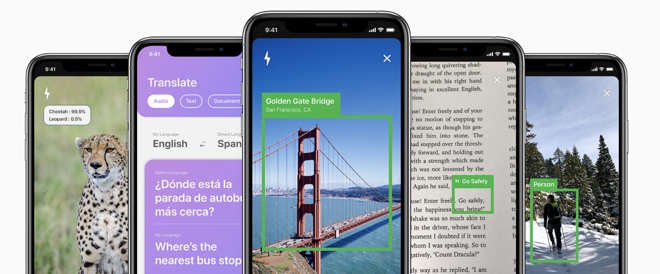

Apple's Vision framework provides a series of Machine Learning computer vision algorithms that can analyze input images and video. These functions can be used to detect objects such as faces; "face landmarks" such as eyes, noses, and mouths; text and barcodes; rectangular regions for identifying documents or signs; and the horizon. They can even perform a "saliency analysis," which creates a heat map depicting the areas of an image most likely to draw human attention.

Vision analysis can also make use of custom Core ML models for tasks like classification or object detection, including the ability to recognize humans or animals in an image. It even includes, as noticed by developer Frank A. Krueger on Twitter, specific animal detectors for identifying cats and dogs. In the language of Apple's APIs, that's VNAnimalDetectorCat and VNAnimalDetectorDog, both new in the betas of iOS 13, iPadOS 13, tvOS 13 and macOS Catalina.
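For developers curious what this looks like in practice, here is a minimal sketch of running the animal recognizer on a still image. It assumes the shipping SDK, where the constants spotted in the betas appear to be exposed as `VNAnimalIdentifier.cat` and `VNAnimalIdentifier.dog` and are used with `VNRecognizeAnimalsRequest`. The `DetectedAnimal` type, the `detectAnimals` and `isDog` helpers, and the 0.5 confidence threshold are hypothetical names and values chosen for illustration, not part of Apple's API.

```swift
import Vision
import CoreGraphics

/// Hypothetical container for one detection: label, confidence, and location.
struct DetectedAnimal {
    let label: String        // identifier string reported by Vision
    let confidence: Float    // 0.0 ... 1.0
    let boundingBox: CGRect  // normalized coordinates reported by Vision
}

/// Runs Vision's animal recognizer on an image and keeps the best label
/// for each detected animal, dropping anything below `minimumConfidence`.
func detectAnimals(in image: CGImage,
                   minimumConfidence: Float = 0.5) throws -> [DetectedAnimal] {
    let request = VNRecognizeAnimalsRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    let observations = (request.results as? [VNRecognizedObjectObservation]) ?? []
    return observations.compactMap { observation in
        // Pick the highest-confidence label without assuming any ordering.
        guard let best = observation.labels.max(by: { $0.confidence < $1.confidence }),
              best.confidence >= minimumConfidence else { return nil }
        return DetectedAnimal(label: best.identifier,
                              confidence: best.confidence,
                              boundingBox: observation.boundingBox)
    }
}

/// True when the detection's label matches Vision's dog identifier.
func isDog(_ animal: DetectedAnimal) -> Bool {
    return animal.label == VNAnimalIdentifier.dog.rawValue
}
```

An app could call `detectAnimals(in:)` on a photo's `CGImage` and use `isDog` to decide whether to show the good-doggo badge. Doing the work off the main thread is left out here to keep the sketch short.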

Apple has used machine learning internally for years in its own Photos app, which can find a wide variety of objects in your photo library and tag them as identified people, objects, and animals. It even uses artificial intelligence to work out what's happening in an image, identifying pictures of summer, dining, concerts, night clubs, trips, birthdays, theme parks, museum visits, and weddings, among many others.

As noted in our hands-on first look at the macOS Catalina public beta, Apple has exposed many of its internal Machine Learning features to third-party developers in new and expanded OS development frameworks including Vision, Natural Language, and Speech, enabling them to make their own applications as intelligent as Apple's.

Mac as a Machine Learning creator

Using Apple's Xcode 11 development tools running on macOS Catalina, developers can now use the new Create ML tool introduced at WWDC19 to develop and train their ML models using libraries of sample data right on their machine, without needing to rely on a model training server. ML training can even take advantage of the external GPU support Apple recently delivered for new Macs, leveraging powerful eGPU hardware to crunch through computationally complex ML training sessions.

In parallel with ARKit and Reality Composer for building Augmented Reality content, Create ML is building out an entirely new creative purpose for Macs: developing both intelligent Mac titles and iOS apps whose intelligence grows and develops over time. In Catalina, Apple is working to make these tools both easy to use and powerful.
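The local training described in this section can also be scripted on a Mac. The sketch below assumes the CreateML framework's `MLImageClassifier` rather than the Create ML app's drag-and-drop interface, and it assumes a training folder with one subfolder of images per label. The function name `trainPetClassifier` and its parameters are hypothetical, chosen only for illustration.

```swift
import CreateML
import Foundation

/// Trains an image classifier from folders of labeled images and saves it
/// as a Core ML model file. Returns the classification error measured on
/// the training data.
func trainPetClassifier(from trainingDirectory: URL,
                        savingTo outputURL: URL) throws -> Double {
    // Each subfolder name (for example "Cat" or "Dog") becomes the label
    // for the images stored inside it.
    let classifier = try MLImageClassifier(
        trainingData: .labeledDirectories(at: trainingDirectory))

    // Write a .mlmodel file that can be added to an Xcode project.
    try classifier.write(to: outputURL)

    return classifier.trainingMetrics.classificationError
}
```

Calling this from a macOS playground or command-line tool with a folder of pet photos would train entirely on the local machine, with no training server involved.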

Create ML builds models that can be used to develop apps as intelligent as Apple's own

In iOS 13 and iPadOS 13, devices are now capable of not only performing local ML right on the device, but even personalizing ML models so that your apps can learn your preferences and routines and adjust intelligently in a way that's specific to you. This leverages the same type of individual learning that lets Face ID adapt to your appearance as you change your hair, glasses and clothing.

This local learning is entirely private to you, so you don't have to worry about apps building detailed dossiers about you that somehow end up on some server that Facebook or Yahoo decided to leave out in the open and totally unencrypted.

Apple itself is making use of advanced ML within Siri Intelligence, Photos, and new media apps including Podcasts, where the machine learning addition helps users discover topics. A variety of other apps also use ML, including the new Reminders in iOS 13 and Catalina, which suggests items to remember and contacts to attach to a reminder, and will pop up reminders attached to a person while you're chatting with them in Messages.

Read more about Catalina's use of ML in our extensive hands-on.

Comments

Scruffy's data is not stored on Apple servers.

There are already Shared Albums. Why on Earth would anyone want to share their entire Library?