Apple is working on virtual assistants to help users navigate AR and VR

Augmented and virtual reality are new frontiers for Apple's larger customer base, and the company is researching a computer-generated assistant to help users navigate the new space and the new interfaces it will require.

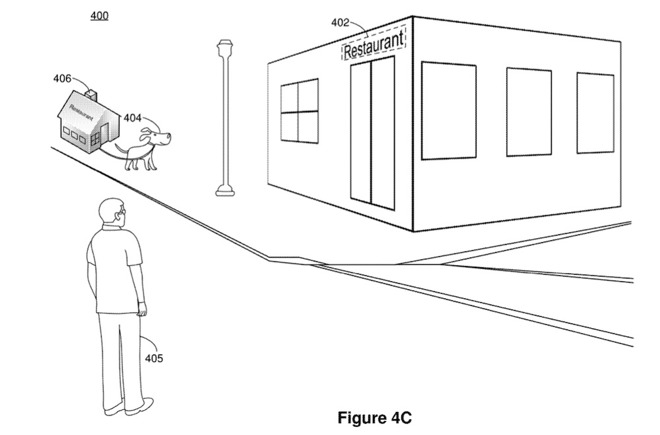

In one implementation, a virtual dog could "fetch" information about a restaurant for users.

At this point, it's no secret that Apple is working on augmented (AR) and virtual reality (VR) systems. It's been steadily increasing the capabilities of its ARKit developer platform, and some type of head-mounted mixed reality (MR) device is likely just over the horizon.

In a new patent application published Thursday, titled "Contextual Computer-Generated Reality (CGR) Digital Assistants," Apple notes that the amount of information given in these VR or AR environments could quickly become "overwhelming" to a user.

To help counter that, Apple is working on virtual assistants -- which appear as computer-generated characters or images -- that could outline useful information or offer tips to a user. These characters would be contextual, meaning that they'd only appear during certain events or after certain triggers.

Apple gives a few specific examples based on a user's knowledge and experience with specific animals, symbols or other potential characters.

"For example, knowing a dog's ability to run fast and fetch items, when the user notices a restaurant (real-world or CGR), a computer-generated dog can be used as a visual representation of a contextual CGR digital assistant to quickly fetch restaurant information for the user," the patent reads.

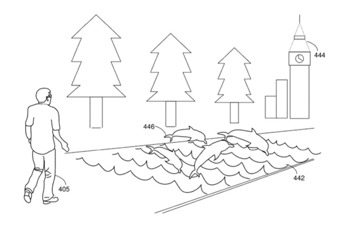

Another example suggests a pod of dolphins could be used for AR navigation.

Other characters described in the patent include a virtual cat that guides users to an interesting place or a pod of digital dolphins that shows users the way to a location. Apple notes that users may also be able to experience CGR assistants through other senses, such as hearing.

Such a virtual assistant would have obvious benefits to users of an AR or MR headset, since it could quickly point out useful information directly in front of their eyes. But there's a good chance that Apple is exploring ways to bring similar CGR assistants to existing devices, like iPhones or iPads.

In the patent, Apple also gives non-animal examples, like a balloon, offering at least one tie to rumors of a potential Apple Ultra Wideband (UWB) tracking tag. Information found in early iOS 13 beta builds suggests that the Find My app could display a balloon to help users find missing objects.

This is far from the first AR or VR patent that Apple has applied for. Past patent applications have described various AR mechanisms for accurate handling of real or virtual objects, using ultrasonic positioning, or displaying high-definition AR images.

It isn't clear when a potential Apple AR/VR headset could launch, but past rumors have suggested a timeline as soon as 2021. Apple's potential AR-leveraging "AirTags" could launch as soon as summer 2020.

The patent's inventors are listed as Avi Bar-Zeev, Golnaz Abdollahian, Devin William Chalmers and David H. Y. Huang. Of the inventors here, Abdollahian previously worked on several Apple patents related to user inputs, including both touch-based and touch-free gestures.

Comments

The cat would never guide anybody anywhere!

Is Clippy mentioned?