Apple expected to replace Touch ID with two-step facial, fingerprint bio-recognition tech

Apple is developing advanced biometric security technologies, including facial recognition and optical fingerprint sensing designs, to replace the vaunted Touch ID module implemented in all iPhones and iPads since the release of iPhone 5s.

In a note sent out to investors on Friday, and subsequently obtained by AppleInsider, well-connected KGI analyst Ming-Chi Kuo says he believes Apple is developing a new class of bio-recognition technologies that play nice with "full-face," or zero-bezel, displays. Specifically, Kuo foresees Apple replacing existing Touch ID technology with optical fingerprint readers, a change that could arrive as soon as this year, as Apple is widely rumored to introduce a full-screen OLED iPhone model this fall.

Introduced with iPhone 5s, Touch ID is a capacitive type fingerprint sensing module based on technology acquired through Apple's purchase of biometric security specialist AuthenTec in 2012. Initial iterations of the system, built into iPhone and iPad home buttons, incorporated a 500ppi sensor capable of scanning sub-dermal layers of skin to obtain a three-dimensional map of a fingerprint.

Available on iOS devices since 2013, the technology most recently made its way to Mac with the MacBook Pro with Touch Bar models in October.

A capacitive solution, Touch ID sends a small electrical charge through a user's finger by way of a stainless steel metal ring. While the fingerprint sensing module is an "under glass" design, the ring must be accessible to the user at all times, making the solution unsuitable for inclusion in devices with full-face screens.

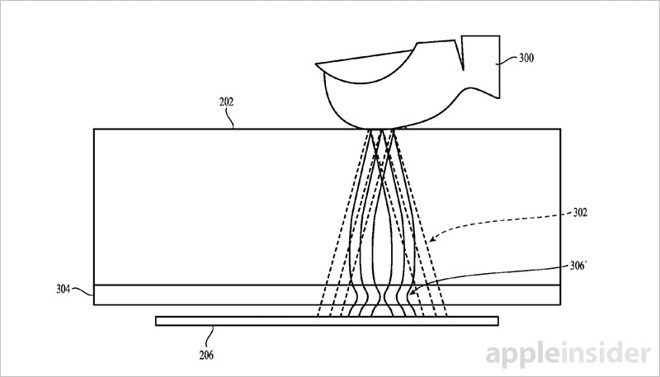

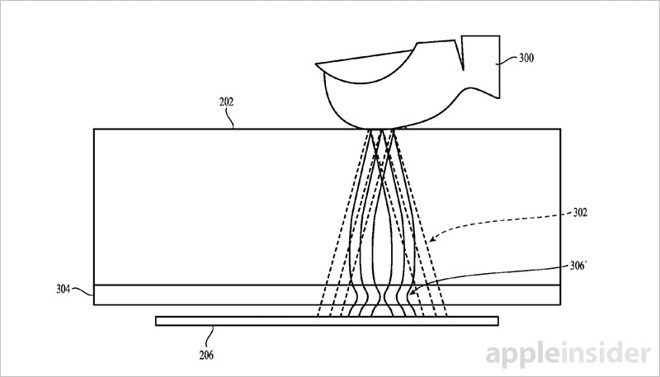

Moving forward, Kuo predicts Apple will turn to optical type fingerprint sensing technology capable of accepting readings through OLED panels without need for capacitive charge components. These "under panel" systems allow for a completely uniform screen surface, an aesthetic toward which the smartphone industry is trending.

Apple has, in fact, been working on fingerprint sensors that work through displays, as evidenced by recent patent filings. The IP, as well as the current state of the art, suggests optical fingerprint modules are most likely to see inclusion under flexible OLED panels as compared to rigid OLED or TFT-LCD screens.

Flexible OLED displays feature less signal interference, lower pixel densities and a thinner form factor than competing technologies, Kuo notes. As optical fingerprint sensor development is still in its infancy, however, OLED display manufacturers are under increased pressure to provide customized designs capable of incorporating the tech.

While the barriers to entry are high, companies like Apple and Samsung are among the few that have the bargaining power to implement such designs, Kuo says.

As for alternative bio-recognition technologies, Kuo believes Apple is looking to completely replace fingerprint sensors with facial or iris recognition systems. Of the two, the analyst predicts facial recognition to win out, citing a growing stack of patent filings for such solutions, many of which AppleInsider uncovered over the past few years (1, 2, 3, 4, 5). The company is also rumored to have eye scanning technology in development, but Kuo sees Apple leaning toward facial recognition for both hardware security and mobile transaction authentication services.

Being a hands-off, no-touch security solution, facial recognition is preferable to technology that requires user interaction, like fingerprint sensors. However, Kuo points out that certain barriers stand in the way of implementation, such as software design, hardware component development and the creation of a verification database, among other backend bottlenecks.

Considering the onerous task of deploying a standalone face-scanning solution, Kuo suggests Apple might first deploy a hybrid two-step bio-recognition system that requires a user to verify their identity with both a fingerprint and a facial scan.

It is unclear when Apple might first integrate facial recognition hardware in its expansive product lineup, but optical fingerprint modules are ripe for inclusion in an OLED iPhone rumored to launch later this year. According to recent rumblings, Apple's first OLED iPhone model will sport a stainless steel "glass sandwich" design and incorporate advanced features like wireless charging. Kuo himself added to the "iPhone 8" rumor pile this week, saying Apple is primed to transition to next-generation 3D Touch tech with the forthcoming handset.

Comments

2) No physical Home Button indentation makes this rumour sound fake to me.

for example: http://www.face-rec.org/interesting-papers/

Introduce Face Recognition as another layer, and now you have to hold the phone so it can check your face, wave the phone at the sensor, and touch your finger to the Touch ID sensor. If it wants the Face Recognition while you're on the PayWave sensor, then that's going to be awkward in situations where the sensor is on a low counter. Or do you manually initiate Apple Pay (e.g. double tap the home button, virtual or otherwise), scan your face and finger, then wave it on the PayWave sensor?

Basically, I can't see a way to introduce Face Recognition specifically without making Apple Pay harder to use. Without a damn good security reason, and a good implementation, I don't see this as anything more than an experiment. If anyone can make it work, it would be Apple, but I don't think it's likely this year, if at all.

Similar to the patent from many years ago about getting rid of the front camera and replacing it with a field array sensor that focuses in software.

Holes in the flexible OLED panels could act like an array of pinhole cameras for the field array.

A field array would also let them create basic 3D models for face detection checking, so it can't be fooled by a photo.

If they get it close to the centre of the screen, you could use FaceTime in landscape or portrait and look the other person in the eyes.

The gist of it seems to be that Apple is trying to put in actual two-factor authentication (e.g. finger + face), since the finger alone has a few weaknesses (I'm not sure if the "gummy finger" works on the iPhone, but that's how optical fingerprint readers are defeated). The face alone is also weak, since you can only use parts of the face that don't change with age, which gives you two problems:

1) Glasses/Contacts - A "retina scan" has to actually look at the Retina, which means that a scan would have to be taken at very close range, an iris scan would be defeated by contact lenses, which leaves a face scan. Typically what you would have is a prompt like "make a smile" and the phone would check that the 60 or so points on a face that correspond to your smile match. That makes it more difficult to defeat than a simple "photo" based match.

2) Makeup/complexion/tattoos/piercings: if someone breaks out with acne, or has applied makeup to cover moles, then the detection mechanism no longer has the right reference points, especially if the recognition works by looking for unique identifying marks.

To some extent people with long hair would also confuse face recognition since some people change their hair frequently.

As far as identifying characteristics go, however, the retina and the iris are the ones that can't be changed or fooled, but since they are biological markers, it is possible for those parts of an eye to be damaged through surgery or accident, thus locking one out of the device. There are several DNA markers that are responsible for the color and unique patterns in the iris (would an identical twin be able to fool it?).

What I think is going to happen, assuming they remove the physical home button (I wouldn't expect this to happen, because it makes it harder to orient the phone for everything from taking pictures and movies to visually-impaired users using Siri, who could no longer find the part of the screen to double-tap), is that there will still be a part of the screen that has a dedicated space for the fingerprint sensor. Perhaps the screen will "wrap" around this sensor, and software that isn't aware of this wrap will just assume the previous screen size and aspect ratio, whereas sensor-aware apps will be able to say "place finger here to pay."

I'm not sure the technology behind it can be used in a device as small as an iPhone.

someone once posted a photo of him on Facebook and he freaked out.

- The user is on the ski slopes and wearing a Ski-Mask

- The user is a Motorcyclist and is wearing a crash helmet

- The user is a Muslim woman who is wearing a niqab

- The user is a Doctor wearing a scrubs mask.

etc

etc

Will it work without re-calibration if you shave your thick bushy beard off?

There are good reasons why this will only ever be a niche solution.

I can't see it ever replacing Touch ID.