Apple investigating AR solution capable of moving, removing objects in real time

The U.S. Patent and Trademark Office on Thursday published an Apple patent application detailing a method of inserting virtual objects into a real environment, and relocating or removing real objects from the same, using advanced hardware and complex algorithms.

Source: USPTO

The invention described in Apple's "Method and device for illustrating a virtual object in a real environment" is less a holistic AR system than a piece of the puzzle that lays the groundwork for such solutions.

An extension of a patent granted to German AR specialist Metaio shortly before Apple acquired the firm in 2015, today's application was first filed in Germany in 2007. Metaio was granted a U.S. patent for the invention in 2010, which was transferred to Apple last November.

In particular, the patent application illustrates techniques for faithfully representing a digital object in a real environment. As such, the patent focuses on merging computer-generated virtual objects with images of the real world, a technical hurdle that remains a significant stumbling block in delivering believable AR experiences.

For example, superimposing a virtual chair onto a live video feed of a given environment requires highly accurate positioning data, the creation of boundaries, image scaling and other technical considerations. Without the proper hardware running complex software algorithms, the image of said chair might run into collision, perspective and geometry issues, or otherwise appear out of place.

Apple's solution uses assets like high-definition cameras, powerful onboard image processors and advanced positioning and location hardware. Most of the required tools have just recently reached the consumer marketplace thanks to iPhone.

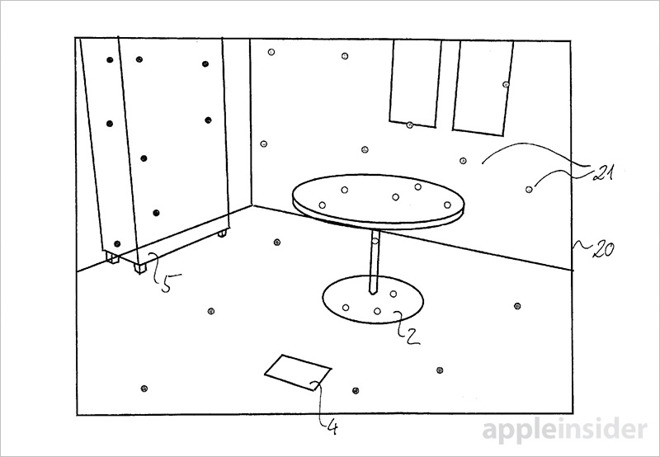

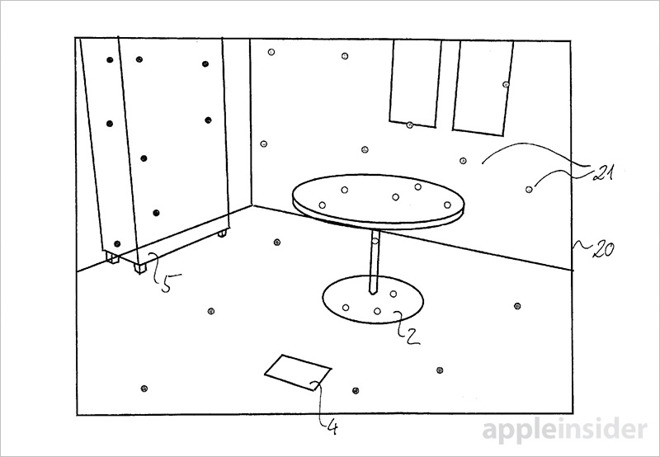

Specifically, the invention calls for a camera or device to capture a two-dimensional image of a real environment and ascertain its position relative to at least one object or in-image component. From there, the device gathers three-dimensional image information or spatial information, including the relative positioning of floor and wall planes, of its surroundings using depth mapping, radar, stereo cameras or other techniques.
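As a rough illustration of how a depth measurement becomes spatial information, the sketch below back-projects a single pixel into a 3D point using the standard pinhole camera model. It is not taken from the application; the function name and the intrinsics `fx`, `fy`, `cx`, `cy` (focal lengths and principal point, in pixels) are assumptions made for the example.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with a known depth to a 3D point in the camera frame.

    Hypothetical helper: assumes an ideal pinhole camera with no lens
    distortion, focal lengths fx, fy and principal point (cx, cy) in pixels.
    """
    x = (u - cx) * depth_m / fx  # metres to the right of the optical axis
    y = (v - cy) * depth_m / fy  # metres below the optical axis
    return (x, y, depth_m)


# A pixel 500 px right of the image centre, seen 2 m away through a
# 500 px focal length, lies 2 m to the right of the camera axis.
point = backproject(820.0, 240.0, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

Points recovered this way for many pixels are the kind of raw data from which floor and wall planes could then be estimated.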

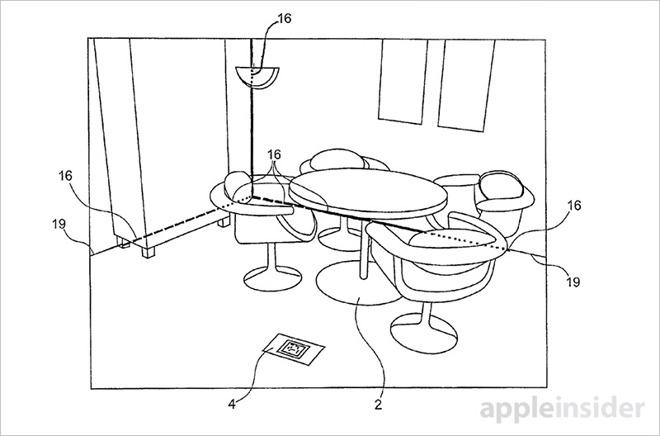

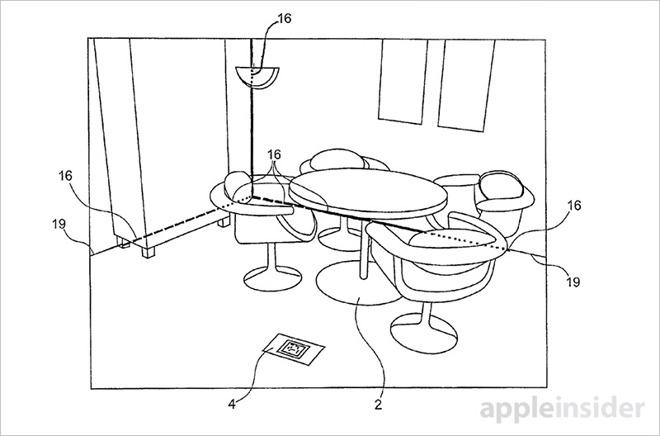

Next, the system segments, or specifies, a given area of the two-dimensional environment. This segmentation data is used to merge a virtual object onto the displayed image, while at the same time removing selected portions of the real environment. The method permits user selection of objects and allows for the realistic presentation of collisions between virtual and real image assets.
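The merge-and-remove step can be pictured as a per-pixel decision. The following is a minimal sketch under stated assumptions, not the method claimed in the application: the `composite` function, its arguments, and the idea of supplying a precomputed `fill` image for erased regions are all hypothetical. Erased pixels are treated as infinitely far away, a simplification that lets any virtual object appear in front of them.

```python
def composite(real, real_depth, virtual, virtual_depth, remove_mask, fill):
    """Merge a virtual layer into a real frame, pixel by pixel.

    All arguments are 2D grids (lists of rows) of equal size:
      real, fill      pixel values of the camera frame and of the
                      background estimate used where objects are erased
      real_depth      distance of each real pixel, in metres
      virtual         pixel value of the virtual object, or None where absent
      virtual_depth   distance of the virtual object, or None where absent
      remove_mask     True where a segmented real object should be erased
    """
    out = []
    for y, row in enumerate(real):
        out_row = []
        for x, pixel in enumerate(row):
            scene_pixel, scene_depth = pixel, real_depth[y][x]
            if remove_mask[y][x]:
                # Erased real object: show the fill instead and treat the
                # region as far background (assumption of this sketch).
                scene_pixel, scene_depth = fill[y][x], float("inf")
            v = virtual[y][x]
            if v is not None and virtual_depth[y][x] < scene_depth:
                out_row.append(v)  # virtual object is in front
            else:
                out_row.append(scene_pixel)  # real scene occludes it
        out.append(out_row)
    return out


# One-row example: the virtual object wins at pixel 0, is hidden behind a
# nearer real object at pixel 1, and pixel 2 is an erased real object.
frame = composite(
    real=[["R", "R", "R"]],
    real_depth=[[2.0, 1.0, 2.0]],
    virtual=[["V", "V", None]],
    virtual_depth=[[1.5, 1.5, None]],
    remove_mask=[[False, False, True]],
    fill=[["F", "F", "F"]],
)
```

The depth comparison is what keeps a virtual chair from being drawn over a real table that stands in front of it.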

The technology is ideal for sales, service and maintenance, production and other related industries, the application says. For example, users can use this kind of AR to position or move furnishings in a room without physically interacting with the objects. Alternatively, virtual objects like sofas and tables might be placed in an environment, while real objects might be completely removed from the scene.

Erasing objects from a two-dimensional representation is a challenging feat. Such operations require highly accurate segmentation based on scene depth, geometry, surrounding textures and other factors.

Images can be displayed on a smartphone screen, as is the case with current AR apps like Pokemon Go and Snap filters, though the application also notes compatibility with semitransparent displays. Goggle-type devices like Microsoft's HoloLens leverage similar technology to facilitate mixed reality applications.

The document notes sofas and tables are only the tip of the iceberg. In particular, Metaio envisioned its technology applied to AR car navigation, for example as a heads-up display package.

Though the application does not elaborate on the subject, the technology presents the means to effectively render an in-car environment, or parts of that environment, invisible, offering drivers a clear view of the road. Alternatively, some scenarios could find the large infotainment displays available in most modern vehicles superimposed as virtual objects on an image of a car's interior.

Perhaps not coincidentally, Apple is reportedly developing AR-based navigation systems as part of efforts to create self-driving vehicle software and hardware systems.

Last year, a report claimed the company poached a number of engineers from BlackBerry's QNX project to work on an AR heads-up display capable of supporting mobile apps, Siri and other hands-off technologies. Apple is supposedly testing its solution on virtual reality rigs -- likely head-mounted goggles like the Oculus Rift or HTC Vive -- behind closed doors.

The document goes on to detail various image processing algorithms capable of handling the proposed AR applications.

Whether Apple has plans to integrate the invention into a shipping consumer product is unknown, but rumors suggest the company is working to release some type of AR system in the near future. The dual-sensor iPhone 7 Plus camera is likely the key to Apple's AR aspirations. In its current form, the dual-lens array provides a platform for depth sensing operations like Portrait Mode in Apple's Camera app.

Apple's AR patent application was filed in October 2015 and credits Peter Meier and Stefan Holzer as its inventors.

Comments

I bet this is one reason Apple wants to design their own GPU.

AAPL should consider buying TRMB

https://www.capturingreality.com/Showcase

Microsoft used a different time-of-flight sensor in the Xbox One Kinect from the following company:

http://www.st.com/content/st_com/en/about/media-center/press-item.html/p3775.html

"The VL53L0 is able to perform a full measurement operation in one image frame, typically less than 30ms, at distances beyond 2m. With such performance levels the camera system can achieve instant focus in both video and burst modes, even in low-light or low-contrast scenes, which are especially challenging for camera-systems not equipped with FlightSense™ technology."

There's another sensor here:

http://www.cnx-software.com/2016/09/26/softkinetic-announces-depthsense-544-tof-camera-for-mobile-devices/

Those sensors measure how long light takes to bounce off distant objects so they can determine the depth of the objects from the camera. They know the speed of light so they send light bursts out (infrared means that it's invisible) and the delay in the sensor receiving the light gives the distance per pixel.
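The arithmetic is simple enough to sketch. This is only an illustration of the round-trip idea, not how any particular sensor is implemented (real parts often measure phase shift rather than timing a single pulse directly), and the function names are made up for the example:

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s):
    """Distance to the surface seen by one pixel, from the round-trip delay."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels out and back, so the distance is half the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def depth_map_m(delays_s):
    """Convert a 2D grid of per-pixel delays (seconds) into distances (metres)."""
    return [[tof_distance_m(t) for t in row] for row in delays_s]


# Light reflected from an object 2 m away returns after 4 m of travel.
delay = 4.0 / SPEED_OF_LIGHT_M_PER_S
distance = tof_distance_m(delay)
```

That delay works out to roughly 13 nanoseconds for a 2 m distance, which is why these sensors need very fast timing electronics.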

https://en.wikipedia.org/wiki/Time-of-flight_camera

To put digital objects into a scene, there needs to be a fast rendering method. Unreal Engine 4 is one of the best real-time engines for quality and here's an example of AR rendering:

The engines mostly all use physically based rendering now so it's close to photoreal. The characters in that video show the problem with position tracking, as they are jittering in position relative to the background; watch the paper on the table. That example probably uses the paper for tracking. Apple's Motion software and 3rd party tools have the ability to do this kind of thing:

This tech can be used for a number of different things, it can be used for some types of gaming and fitness (yoga, dancing) on the Apple TV, it can be used for close range things on the iPhone. If you held up the phone to a mirror, it should be able to scan your body size so it can tell about health, let you try on virtual clothes, measure your proportions to get the right clothing sizes, it can measure your food intake by just taking a picture of it, it can scan objects in 3D, it can help blind people see in front of them.

For navigation it can overlay maps and directions into a scene but the depth sensor can only illuminate so far so it would need to use near objects for stability and have some object recognition and 3D reconstruction/projection on top. It can improve Apple's portrait mode by not just relying on the dual imaging cameras so you'd get proper depth of field blurring. It could even do relighting to an extent so if indoor lighting didn't look right in a picture, it could illuminate objects differently, the manual way of doing it with masking is slow (12:25 for result):

It's quite a lot of data to store for video (maybe 1/3 of HD uncompressed) but it's very useful and even high-end filmmakers can use it alongside footage shot on standard cameras.

On the front, a depth sensor can be used for face recognition, including in the dark as other demos have shown. It would make FaceTime tracking more accurate. There seems to be a trend of people doing those face swaps and face filters/lenses on Snapchat:

Not that there should be features to encourage this but they're doing it anyway and the sensors would improve the quality.