Why Apple is unlikely to ditch Touch ID for facial recognition with 'iPhone 8'

Apple is rumored to integrate face-scanning cameras in its upcoming "iPhone 8" handset this fall, sparking speculation that facial recognition technology will replace the company's Touch ID authentication system. AppleInsider explains why such a move is unlikely, at least this year.

'iPhone 8' concept rendering by Marek Weidlich.

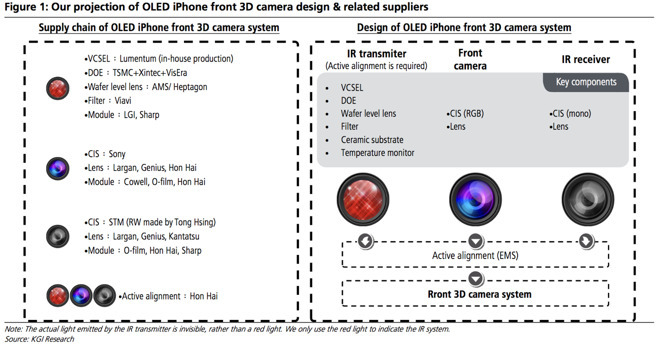

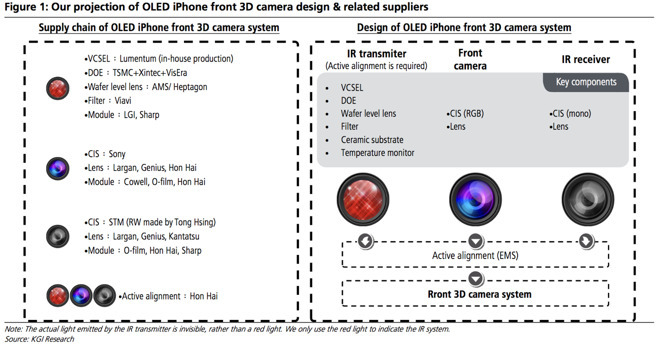

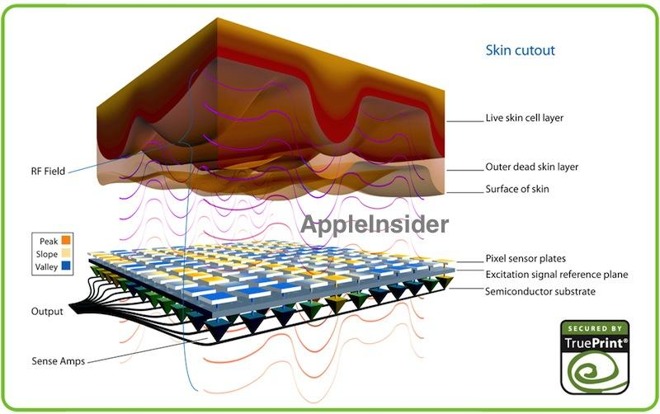

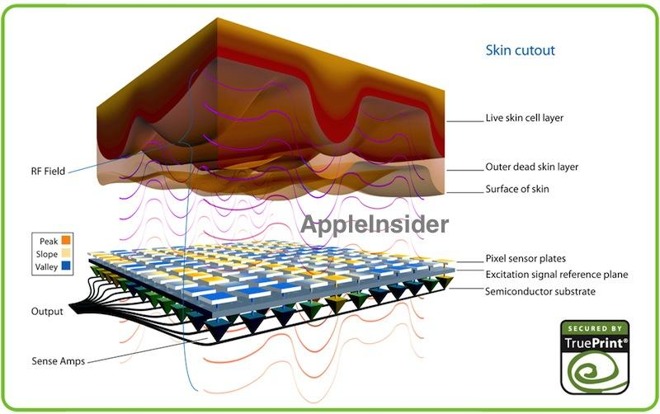

For 2017, Apple is widely rumored to launch a high-end iPhone that comes packing a litany of exotic technologies, including an OLED display, wireless charging, glass-sandwich design, in-screen Touch ID and more. The device is expected to sell as a standalone model priced above "iPhone 7s" and "iPhone 7s Plus" refreshes.

As Apple's traditional fall launch timeline approaches, analysts and industry insiders are coming out of the woodwork to offer their predictions on what advanced technology Apple plans to incorporate in the next-generation handset. Being the 10th anniversary of iPhone's initial release in 2007, expectations are high, now bordering on mythical.

Most recently, KGI analyst Ming-Chi Kuo last month said checks with supply chain sources suggest Apple is looking to integrate a "revolutionary" front-facing camera system capable of 3D sensing. By adding an infrared transmitter and receiver to the usual FaceTime camera, the company will be able to conduct accurate depth mapping functions for 3D modeling, perfect for creating gaming avatars and capturing 3D selfies.

Though Kuo did not explicitly say the camera system would be used for bio-recognition -- face detection -- operations, the analyst in January said Apple is developing facial and iris recognition technologies in hopes of one day replacing Touch ID. Whether those systems will be ready in time for inclusion in "iPhone 8" remains unclear, but a 3D FaceTime camera is a huge step toward that goal.

Since Kuo's February note hit media outlets, analysts and pundits have glommed on to the idea of a face-detecting iPhone. A hands-off, no-touch security solution like facial recognition is preferable to contact technology like fingerprint sensors, which require user interaction. A slew of hurdles stand in the way of implementation, however, including software design, hardware certification and the creation of a verification database, among other backend assets.

Even if a 3D camera is unveiled as part of "iPhone 8's" arsenal of new features, it is unlikely to serve as a complete replacement of Touch ID.

State of the art

As it stands, facial recognition in its current form is simply not as secure as fingerprint-based authentication methods. It boils down to 2D imaging versus 3D imaging -- 2D is used for fingerprint scanning, while facial recognition is now in the realm of 3D.

At the heart of modern 3D imaging is depth mapping, a technology that creates a topographic model of an object or environment using specialized equipment. For example, light detection and ranging (LIDAR) uses pulses of laser light, time-of-flight calculations, positioning equipment and other assets to generate highly accurate 3D scene composites.
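The time-of-flight calculation at the core of LIDAR-style depth mapping reduces to simple arithmetic: a light pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The following is a minimal Python sketch under idealized assumptions (no sensor noise, no calibration). The function names and sample timings are hypothetical and are not drawn from any Apple or LIDAR vendor implementation.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def depth_map(round_trip_times):
    """Convert a 2D grid of round-trip times into a grid of distances."""
    return [[distance_from_round_trip(t) for t in row] for row in round_trip_times]


if __name__ == "__main__":
    # Hypothetical 2x2 grid of timings in seconds (illustrative values only).
    timings = [[2.0e-9, 2.1e-9], [3.3e-9, 3.4e-9]]
    for row in depth_map(timings):
        print(["%.3f m" % d for d in row])
```

A round trip of 2 nanoseconds works out to roughly 0.3 meters, which illustrates why depth sensing at arm's length demands extremely fine timing resolution.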

Alternative methods replace lasers with patterned infrared light, while others rely on known optical distortions combined with complex computational algorithms. A variation of the latter technique is used to accomplish Portrait Mode on iPhone 7 Plus.

Previously reserved for military or industrial applications, depth mapping is finding its way into the commercial market thanks to component amortization, miniaturization and development by tech companies, Apple included. PrimeSense, the Israeli company purchased by Apple in 2013, developed patterned light technology that made its way into Microsoft's Xbox Kinect sensor, for example.

Depth mapping for mobile devices is coming along nicely, but companies have yet to demonstrate a system that functions as accurately as, and with the consistency of, Touch ID.

Investments and patents

Beyond technical challenges, Apple has a significant stake in its Touch ID solution. All fingerprint sensors used in iPhones, iPads and now MacBook Pro computers are based on technology developed by AuthenTec, a security technology company specializing in fingerprint sensing hardware and supporting software, which Apple purchased for $356 million in 2012.

More recent Apple buys hint at where the company is headed. In February, nearly seven years after Apple bought Swedish facial recognition firm Polar Rose, the company reportedly acquired Israeli firm RealFace for $2 million. Last year, Apple snapped up facial recognition and expression analysis firm Emotient, following the 2015 buy of motion capture specialist Faceshift.

The acquisitions, along with the individual hires of computer vision and machine learning experts, strongly hint at work on face-based bio-recognition systems.

That Apple is working on next-gen Touch ID technology, and perhaps a replacement, is clear. The company already owns a trove of patents covering advanced fingerprint reading methods, from minor tweaks to existing capacitive techniques to ultrasonic sensing.

In-screen or under-screen fingerprint scanners are rumored for inclusion in "iPhone 8." Perhaps not coincidentally, Apple has IP for that, too.

As explained in a recent patent grant, in-screen systems place a sensing component beneath, or within, a smartphone's display. The invention was reassigned to Apple from LuxVue, a micro-LED maker the company purchased in 2014.

In some cases, transmission and reception components are disposed below the cover glass, touch film, LCD, polarizer, filter, backlight and any other layers that comprise the display stack. Apple's iPhone 6s and 7 models incorporate a large capacitive sensing array to facilitate 3D Touch, for example. Other methods integrate those same sensors into one or more layers, like infrared diodes disposed throughout an RGB micro-LED array.

The goal is to create a fingerprint sensing system that requires no single anchor point like a home button, meaning a host smartphone can employ a true, unbroken edge-to-edge display. There are pitfalls to such designs, however, including the problem of indicating where a user needs to place their finger for authentication. Apple is already looking into these problems, as evidenced by an invention that allows for an iPhone's entire screen to serve as its fingerprint sensor.

Maybe next time

With Apple still investing significant man hours in the investigation of future Touch ID iterations -- fingerprint patents are still being churned out on a regular basis -- it seems the company is loath to abandon the technology altogether. That said, Apple is also pouring assets into the development of facial recognition solutions, and more recently capital into small specialist firms. A two-pronged approach is not unusual for a tech company, especially one as large as Apple.

Considering Apple's continued work on Touch ID, and the lack of an accurate and reliable commercial facial recognition system, it is likely that a near-future iPhone will incorporate a hybrid bio-recognition solution. Taking Kuo's analysis into account, it seems that "iPhone 8" will relocate Touch ID beneath the screen, while keeping 3D imaging as a secondary feature. It is possible that the supposed 3D FaceTime camera might not be used for authentication at all, instead serving as a method of gesture input.

In any case, Apple is highly unlikely to abandon Touch ID for untested in-house facial recognition technology on what is to be its flagship product. Circumstantial evidence suggests the company is moving in the direction of face-based authentication, but the technology is simply not mature enough to stand on its own. For now.

Comments

How many angles do you have to hold your iPhone when making payment? Try doing that and getting a face read at the same time.

With TouchID, you hold your phone near the reader and tap.

Using facial recognition, you will have to hold up the phone to get the picture, then move the phone to the NFC reader. That is not an improvement over TouchID.

Apple would keep a screen-integrated Touch ID sensor, if only for Apple Pay; they would not break the authentication process with a facial scan.

A realistic implementation of the technologies would use facial scanning for general authentication, with Touch ID providing higher-security verification.

Assuming Apple even cares at this point about security use, a far more plausible use for this tech is camera integration. Integrating this tech into either camera, or both, yields greater benefit to Apple's ecosystem. The augmented reality, camera effects and App Store APIs are an exponentially greater return on investment.

Additionally, no one has a clue as to the possible resolution of said theoretical security system. PrimeSense had some cutting edge tech when acquired, so I wouldn't be surprised if Apple developed micrometer face scanning tech. It's realistic to assume high resolution imaging at less than 0.5 meters, although analysis and comparison to the secure enclave could require a redesign. Anyone have any stats on the bandwidth and RAM available to the secure enclave as it stands?

just my two cents

Also mentioned in the article is the term 'face detection.' Again, while this is an important technology on its own, it's not all that is required for biometric identification, though it's often a subset of the process. Face detection is simply the process of identifying, and sometimes tracking, a human face within a scene. It typically uses Fourier transforms and blurring algorithms to detect patterns of eyes, nose, mouth, even at somewhat oblique angles. But face detection also doesn't specifically identify an individual. That's the job of...

Face Recognition. Face recognition technology often begins with face detection algorithms, so that any faces that appear in a scene, in the view of a video camera, can be detected and captured. Face recognition might then also employ facial recognition algorithms (but not necessarily) to identify specific facial expressions on the detected faces. But then Face Recognition adds a further, crucial step, applying algorithms not needed by Face Detection or Facial Recognition systems to match specific aspects and calculations made against each face against results stored in a database. This allows the face recognition system to perform its primary task, that of biometric identification, a task that is specifically NOT part of the definition of Face Detection or of Facial Recognition.

See why I've been nitpicking the terminology all these past months?

As for acquisitions, Apple rarely buys a company to integrate or resell its products whole cloth. Emotient, Faceshift and other firms likely own or have developed underlying technology, patents on said tech or personnel of interest to one of Apple's many teams. Take the LuxVue IP as an example.

- Gaming

- Augmented Reality

- Facetime

- ... etc.

That is (as @radarthekat mentioned), use cases where the device must detect the EXISTENCE of a face, but not necessarily know the IDENTITY of that face.

There are hundreds of other applications for better 3D cameras that have NOTHING to do with authentication. This is what Apple is going for.

How will the new method overcome people who

- Wear Crash Helmets when on their bikes

- Wear Ski Masks when on the slopes

- Muslim women who wear a niqab or burqa.

- Hospital Staff who are 'gowned up'

and a lot more besides.

ATM, I can remove my glove and either use Touch ID or enter my passcode while still wearing my crash helmet.

Also, I would expect that the TLAs would love to be able to stick your phone up in front of you and gain access. You wouldn't be required to do anything. You could not stop them gaining full access to your device.

I really hope that this sort of feature is not implemented EVER. Does not matter if it is optional. IMHO, it should just not be there period.

As I said at the start, this is a big IF.

the fact that it worked two thirds of the time was an accomplishment, but obviously, that's not nearly enough. It needs to work 99.9% of the time. No doubt it is getting better. But commercial and military systems have very expensive, and big, mechanisms. They also control the lighting. And you're expected to appear the same each time you use it.

It's also clumsy.

Thanks for responding. Not enough authors do that. I think that too much is being made of the idea of this being used for unlocking. Maybe someday. But from my knowledge of this field, that day isn't here yet. While no one outside Apple and its critical suppliers of a technology knows what they're doing, and just maybe, there's been some breakthrough we don't know about, this is too problematic to be used for security purposes on a phone at this point in time.

and as I mentioned in my last post, it's really clumsy. There are too many variables in daily life for this to be useful generally. Carrying a package with one hand in the winter with a scarf around the bottom of your face, for example. How does that work easily? Biometrical gloves work for Touch ID.

I wish we would see an article explaining how difficult this is, and how unlikely it also is. We seem to have far out features added to these phones, and when the real ones come out, people are disappointed because these wacky features aren't there. Then the stock drops. Oh well.

"Most recently, KGI analyst Ming-Chi Kuo last month said checks with supply chain sources suggest Apple is looking to integrate a "revolutionary" front-facing camera system capable of 3D sensing."

I believe the above. However I believe the 3D sensing will be (initially anyway) only/mainly for Augmented Reality.

Now the way I think the Augmented Reality functionality will work is to have facial TRACKING capability on the front of the device. This will allow the machine to display a "seamless" version of reality on the screen (actually the bezel will be the "seam", that is why they are reducing it as much as possible) as it can work out exactly where the viewer's eyes are all the time.

The iPhone/iPad in AR mode could be more or less invisible to the guy holding it ... he will only see the bezel. Of course an "invisible" iPhone is not much use. But quite simple to superimpose ... text, for example ... on the screen. This would enable people to check their iMessages while walking for example ... obviously not the main point of this functionality, just an unintended consequence (which I don't approve of 100% ... by the way).

A more difficult thing to do would be to have text on the screen giving information about some building/car/breakfast-cereal/person visible on the screen. This would be full Augmented Reality. It would have similar possibilities to Google Glass. Only the part of your field of view covered by the screen will be available for AR though. Other people will not be freaked out* by it as they were by Google Glass.

Of course as time goes on Apple will consider incorporating things like facial recognition, iris recognition, creating gaming avatars and capturing 3D selfies.

* Though maybe if you held up your AR iPhone or AR iPad so they could not see your eyes they will start to get freaked out ... imagining that you are getting data on them or taking their photograph.