Apple's iOS 13 beta 3 FaceTime gaze magic is a triumph of tech evolution

Apple's subtly flattering new FaceTime feature in iOS 13 beta 3 corrects the apparent direction of your gaze so that you seem focused on your caller -- as if staring straight into the camera -- even when you're looking at the screen. The magic behind it developed incrementally over years of software and hardware advances, offering some interesting insight into how Apple uniquely charts out the future with its products.
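The effect is needed because the camera and your caller's face aren't in the same place. The lens sits above the display, so looking at the person on screen points your eyes a few degrees away from the camera. Apple hasn't detailed how Attention Correction adjusts the image. This short Python sketch only works through that geometry, and the camera offset and viewing distance are assumed figures.

```python
import math


def gaze_offset_degrees(camera_offset_cm: float, viewing_distance_cm: float) -> float:
    """Angle between 'looking at the lens' and 'looking at the caller on screen'.

    camera_offset_cm: distance across the device face from the camera to the
        point on screen you are actually looking at (an assumed figure).
    viewing_distance_cm: distance from your eyes to the device (also assumed).
    """
    if viewing_distance_cm <= 0:
        raise ValueError("viewing distance must be positive")
    return math.degrees(math.atan2(camera_offset_cm, viewing_distance_cm))


# Illustrative numbers only: the caller's eyes appear about 5 cm below the
# camera, and the phone is held about 35 cm from your face.
angle = gaze_offset_degrees(5.0, 35.0)
print(f"Apparent gaze offset: {angle:.1f} degrees")  # prints: Apparent gaze offset: 8.1 degrees
```

Shrinking the offset or holding the device farther away reduces the angle. It never reaches zero, though, as long as you're looking at the screen rather than the lens.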

But rather than being showcased as a "revolutionary" advance in video chat that will require everyone to buy a new device, Apple's new FaceTime feature builds on a series of technologies the company has incrementally rolled out over the past several years.

The feature currently works in iOS 13 betas on A12 Bionic iPhone XS models and the latest iPad Pro with A12X Bionic. Because the image adjustment occurs locally on your phone, your gaze will appear more naturally focused to whomever is on the other end, regardless of what iOS version, A-chip, or FaceTime capable Mac the recipient is using.

It's the perfect example of a practical application that leverages many of the various technologies Apple has introduced over the past few years. And it highlights how all of the OS, app, silicon and advanced sensor work that Apple is performing -- and uniquely deploying globally at incredible scale -- is so different from commodity phones that knock off the basic outlines of Apple's designs without duplicating the core of its innovations.

It also allows Apple to share its technologies with third party developers in the form of public APIs (Application Programming Interfaces). This leverages the coding talent outside of Apple to find even more uses for its foundational technologies, including Depth imaging, Machine Learning and Augmented Reality. A big part of Apple's annual Worldwide Developer Conference involves introducing developers to new and advanced tools Apple has created for building powerful third-party software for its platforms.

Apple paired a standard and zoom lens together with the ability to shoot simultaneously

At WWDC17, Apple's Etienne Guerard presented "Image Editing with Depth," which detailed how iPhone 7 Plus-- introduced the previous year with a new Portrait mode -- captured differential depth data using its dual rear cameras.

He detailed how Apple's Camera app used this data to create flattering Portrait shots that focused on the subject and blurred out the background. Starting in iOS 11, Apple enabled third party developers to access this same depth data to build their own imaging tools using Apple's Depth APIs. A notable example is Focos, a clever app that provides precise control over depth of field and other effects.

In parallel, Apple also introduced a variety of other technologies including its new Vision framework, CoreML and ARKit for handling all the heavy lifting in building Augmented Reality graphics that appear anchored in the real world. It wasn't entirely clear how all those pieces would fit together, but later that fall Apple introduced iPhone 8 Plus with enhanced depth capture featuring Portrait Lighting.

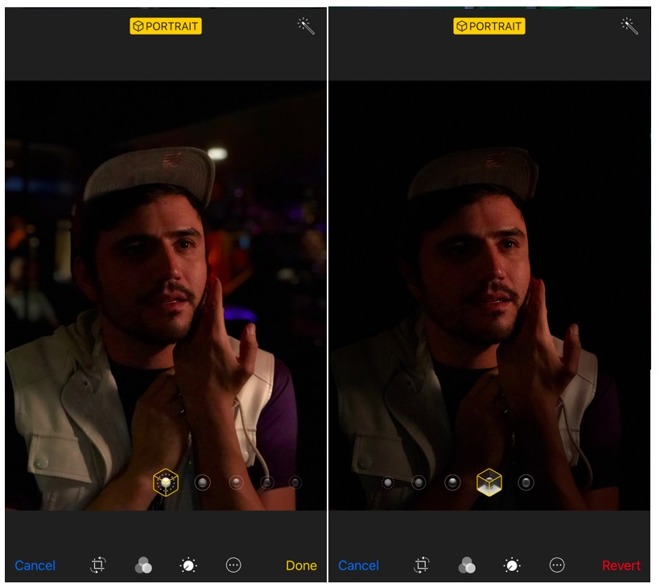

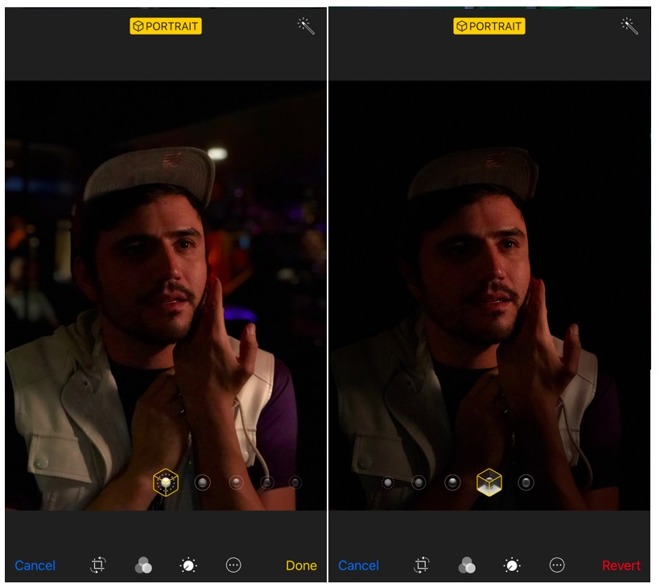

Portrait Lighting can take enhance a casual photo into a dramatic photograph

Portrait Lighting advanced the concept of capturing depth data entering the world of face-based AR: it created a realistic graphical overlay that simulated studio lighting, dramatic contour lighting or stage lighting that appeared to isolate the subject as if seated on a darkened stage. ARKit then effectively augmented users' reality by anchoring subtle graphics to a subject's face.

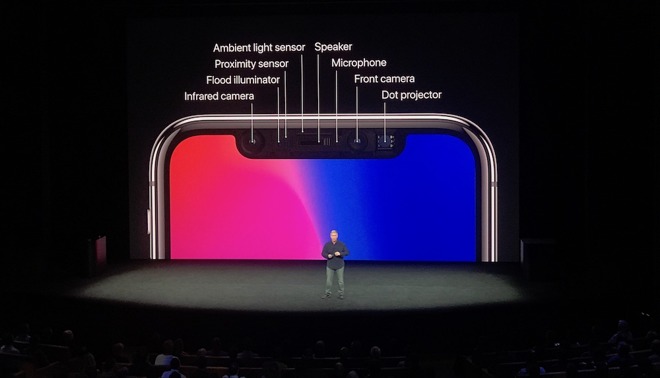

That feature was also expanded upon for the new iPhone X, which in addition to a dual rear cameras also supplied a new type of depth imaging using its front facing TrueDepth hardware. Rather than taking two images, it used a structure sensor to capture a depth map and color images separately.

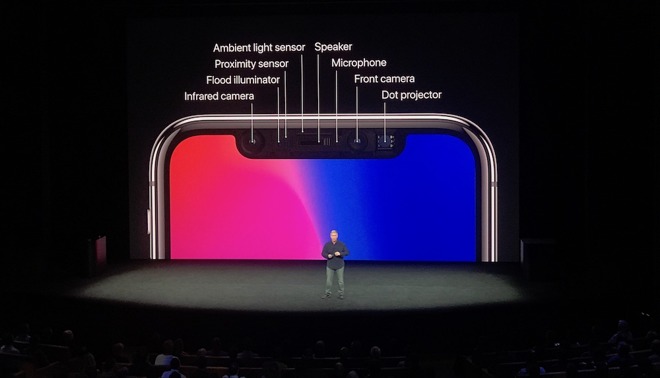

TrueDepth hardware enables a variety of new features for users and app developers

In addition to taking Portrait Lighting shots, it could also take Portrait Lighting selfies. And of course Apple's TrueDepth also introduced Face ID for fast, effortless authentication and Animoji, using ARKit's face tracking to completely overlay your face with an animated emoji avatar that picked up your subtle head and face movements to create another you.

Apple also demonstrated the power of ARKit in the hands of third parties, showing off advanced selfie filters by Snapchat that could realistically map all kinds of extravagant imagery on your face using TrueDepth hardware.

Apple has continued to advance its processing capacity and introduce new features, including an adjustable aperture setting for iPhone XR and iPhone XS models. At this year's WWDC19, Apple also revealed software advances under the hood of Vision and CoreML, including advanced recognition features and intelligent ML tools for analyzing the saliency or sentiment of images, text and other data -- intelligence shared across iOS 13, iPadOS, tvOS and macOS Catalina.

It also outlined a new kind of Machine Learning, where your phone itself can securely and privately learn and adapt to your behaviors. Apple had been internally using this to adapt to your evolving appearance in Face ID, but now third parties can build their own smart ML models that get even smarter in way that's personalized specifically to you. And again, it's private to you on a premium level because Apple is doing the processing locally using the advanced computing power of your iOS device, rather than shipping your data into the cloud, saving it and sharing it indiscriminately with "partners" the way Amazon, Facebook and Google have been.

Apple also makes use of TrueDepth attention sensing to know when you're looking at your phone and when you're not. It uses this to dim and lock your phone faster when you're not using it, or to remain lit up and active while you read a long article or watch a video, even when you're not touching the screen. These frameworks are also shared with developers so that they can build similar awareness into their apps.

Google has furiously copied Apple's high level features in Android and even added some of its own, including dark image capturing on its own Pixel phones. But because it has been unable to sell very many Pixel phones, this is a money-losing effort that isn't sustainable. Further, developers can't justify targeting features unique to Pixel. Google also isn't doing much of the work to open up its technologies to allow third parties to this the way Apple has been.

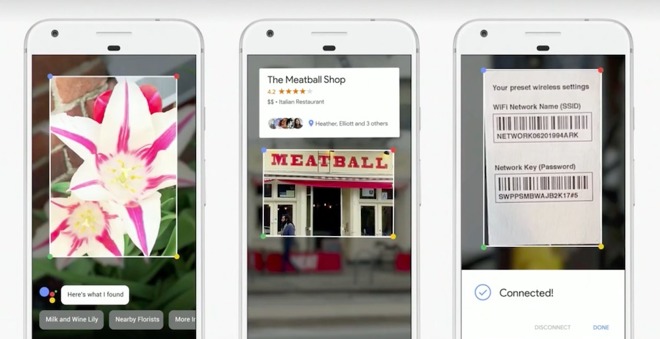

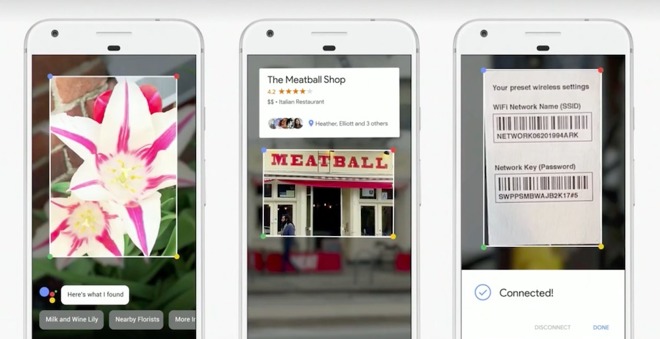

Google has shown advanced ML tech, but mostly in apps designed to collect your data

Both the Pixel's camera features and the advanced ML image and barcode recognition features of the Google Lens app are proprietary. Google hasn't opened up a platform for either. And while it tried to equate its own version of Portrait capture, which used ML rather than dual camera or structure sensor depth capture, with iPhone X, the reality is that it's limited to the features Google created and lacks an optical zoom and a front facing 3D imaging system capable of supporting anything like Portrait Lighting, Face ID or Animoji.

Android licensees have also been hesitant to build advanced sensors like TrueDepth or powerful processors capable of advanced imaging and neural net calculations into very many of their phones, given that most Androids sell at an average of $250 or less. So despite shipping hundreds of millions of total handsets, there are far fewer high end Androids in total than there are modern iPhones. So just as with Windows Phone, developers are doing their best and most interesting work on iOS, not Android.

Both Microsoft and Google began working in depth imaging well ahead of Apple, but Microsoft didn't move past its Xbox Kinect body controller, and Google's Tango similarly expected to use depth imaging on the backside of devices like a rear camera. Apple determined that it would be a lot smarter and more powerful to point its depth sensor at the user, enabling all of the advanced features it has shown off to date, including the latest FaceTime Attention Correction.

Attention Correction in FaceTime

Discussing the "Attention Correction" feature, developer Mike Rundle tweeted, "This is insane. This is some next-century sh*t." Users on Reddit howled "Absolutely incredible" and "the future is now!"

But rather than being showcased as a "revolutionary" advance in video chat that will require everyone to buy a new device, Apple's new FaceTime feature builds on a series of technologies the company has incrementally rolled out over the past several years.

The feature currently works in iOS 13 betas on A12 Bionic iPhone XS models and the latest iPad Pro with A12X Bionic. Because the image adjustment occurs locally on your device, your gaze will appear more naturally focused to whoever is on the other end, regardless of which iOS version, A-series chip, or FaceTime-capable Mac the recipient is using.

It's the perfect example of a practical application that leverages many of the technologies Apple has introduced over the past few years. And it highlights how different all of the OS, app, silicon and advanced sensor work Apple is performing -- and uniquely deploying globally at incredible scale -- is from commodity phones that knock off the basic outlines of Apple's designs without duplicating the core of its innovations.

Evolutionary, not revolutionary

It's common for tech writers to make a big deal about specific new features that can be easily copied by rivals. But much of the advanced work Apple is doing in imaging and elsewhere is not just a series of one-off features; it is built on top of a set of expanding frameworks and foundational technologies. This leads to an increasingly rapid pace of development.

It also allows Apple to share its technologies with third-party developers in the form of public APIs (Application Programming Interfaces). This leverages coding talent outside of Apple to find even more uses for its foundational technologies, including Depth imaging, Machine Learning and Augmented Reality. A big part of Apple's annual Worldwide Developers Conference involves introducing developers to new and advanced tools Apple has created for building powerful third-party software for its platforms.

Apple paired standard and zoom lenses with the ability to shoot with both simultaneously

At WWDC17, Apple's Etienne Guerard presented "Image Editing with Depth," which detailed how iPhone 7 Plus, introduced the previous year with a new Portrait mode, captured differential depth data using its dual rear cameras.
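The principle behind dual-camera depth is triangulation. A nearby object shifts more between the two lenses' views than a distant one does. For an idealized pair of identical, rectified cameras, depth is Z = f * B / d. Here f is the focal length in pixels, B is the distance between the lenses, and d is the shift (disparity) in pixels. This Python sketch applies that textbook relation with hypothetical numbers. iPhone 7 Plus pairs two different lenses, so Apple's real pipeline has to do more calibration and matching than this.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth in metres from the rectified-stereo relation Z = f * B / d.

    disparity_px: horizontal shift, in pixels, of the same point between the two views.
    focal_length_px: focal length expressed in pixels.
    baseline_m: distance between the two camera centres, in metres.
    """
    if disparity_px < 0:
        raise ValueError("disparity must be non-negative for a rectified pair")
    if disparity_px == 0:
        return float("inf")  # no shift at all: the point is effectively at infinity
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 2800 px focal length, lenses 1 cm apart.
for shift in (28, 14, 7):
    print(f"{shift:>2} px shift -> {depth_from_disparity(shift, 2800, 0.01):.1f} m")
```

Halving the shift doubles the estimated distance. That is also why depth precision falls off for faraway backgrounds.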

He showed how Apple's Camera app used this data to create flattering Portrait shots that kept the subject in focus and blurred the background. Starting in iOS 11, Apple enabled third-party developers to access this same depth data and build their own imaging tools using Apple's depth APIs. A notable example is Focos, a clever app that provides precise control over depth of field and other effects.
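Once each pixel has a depth, a Portrait-style effect can blur pixels according to how far they sit from the plane of focus. A common approximation from thin-lens optics is that blur grows with |1/focus - 1/depth|. This Python sketch applies that approximation to one row of grayscale pixels using a simple box blur. The strength constant and maximum radius are hypothetical tuning values, and this isn't Apple's Portrait mode algorithm.

```python
def blur_radius_px(depth_m: float, focus_depth_m: float,
                   strength: float = 20.0, max_radius_px: float = 25.0) -> float:
    """Approximate blur radius for a pixel at depth_m when focused at focus_depth_m.

    Thin-lens approximation: blur is proportional to |1/focus - 1/depth|.
    `strength` lumps aperture and focal length into one hypothetical constant.
    """
    radius = strength * abs(1.0 / focus_depth_m - 1.0 / depth_m)
    return min(radius, max_radius_px)


def portrait_blur_row(pixels: list[float], depths_m: list[float], focus_depth_m: float) -> list[float]:
    """Box-blur each pixel of one grayscale row with a radius driven by its depth."""
    out = []
    n = len(pixels)
    for i in range(n):
        r = int(round(blur_radius_px(depths_m[i], focus_depth_m)))
        lo, hi = max(0, i - r), min(n, i + r + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out


# A sharp-edged pattern: subject at 1 m (left half), background at 5 m (right half).
row = [0, 100] * 5
depths = [1.0] * 5 + [5.0] * 5
print(portrait_blur_row(row, depths, focus_depth_m=1.0))
# prints: [0.0, 100.0, 0.0, 100.0, 0.0, 50.0, 50.0, 50.0, 50.0, 50.0]
```

Because this simple version averages across the whole window, background pixels pick up some of the subject's color. Real depth-of-field renderers need extra steps to avoid that kind of halo.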

In parallel, Apple also introduced a variety of other technologies, including its new Vision framework, CoreML, and ARKit, which handles the heavy lifting of building Augmented Reality graphics that appear anchored in the real world. It wasn't entirely clear how all those pieces would fit together, but later that fall Apple introduced iPhone 8 Plus with enhanced depth capture featuring Portrait Lighting.

Portrait Lighting can take enhance a casual photo into a dramatic photograph

Portrait Lighting took depth capture into the world of face-based AR. It created a realistic graphical overlay that simulated studio lighting, dramatic contour lighting, or stage lighting that appeared to isolate the subject as if seated on a darkened stage. ARKit then effectively augmented users' reality by anchoring subtle graphics to a subject's face.
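Depth is also what lets an effect like Stage Light separate a subject from the room behind them. This Python sketch is a deliberately simplified, hypothetical version. It keeps pixels at or in front of an assumed subject depth at full brightness and fades everything behind that depth to black. Apple's real effect also takes the shape of the face into account, which this sketch doesn't attempt.

```python
def stage_light(pixels: list[float], depths_m: list[float],
                subject_max_depth_m: float, falloff_m: float = 0.5) -> list[float]:
    """Darken everything behind the subject, as if lit on a dark stage.

    pixels: grayscale intensities; depths_m: per-pixel depth in metres.
    Pixels at or in front of subject_max_depth_m keep full brightness;
    beyond it, brightness falls linearly to zero over falloff_m metres.
    """
    out = []
    for value, depth in zip(pixels, depths_m):
        if depth <= subject_max_depth_m:
            gain = 1.0
        else:
            gain = max(0.0, 1.0 - (depth - subject_max_depth_m) / falloff_m)
        out.append(value * gain)
    return out


# Subject at 0.8 m, something just behind the cut-off at 1.25 m, a wall at 3 m.
print(stage_light([200, 200, 200], [0.8, 1.25, 3.0], subject_max_depth_m=1.0))
# prints: [200.0, 100.0, 0.0]
```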

That feature was also expanded upon for the new iPhone X, which in addition to a dual rear cameras also supplied a new type of depth imaging using its front facing TrueDepth hardware. Rather than taking two images, it used a structure sensor to capture a depth map and color images separately.

TrueDepth hardware enables a variety of new features for users and app developers

In addition to taking Portrait Lighting shots, iPhone X could also take Portrait Lighting selfies. And of course TrueDepth also introduced Face ID for fast, effortless authentication, and Animoji, which used ARKit's face tracking to overlay your face with an animated emoji avatar that mirrored your subtle head and facial movements, creating another you.
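ARKit's face tracking reports expressions as blend-shape coefficients. These are values from 0.0 to 1.0 under names such as jawOpen and eyeBlinkLeft, and an avatar can be animated by feeding them into its own rig. Apple hasn't published how Animoji does this. This Python sketch is a hypothetical mapping with simple smoothing. It uses ARKit-style coefficient names as plain strings rather than calling ARKit itself.

```python
from typing import Optional

# Hypothetical rig: which avatar control each tracked coefficient drives.
# Keys reuse names from ARKit's blend-shape locations; the controls are made up.
AVATAR_RIG = {
    "jawOpen": "mouth_open",
    "eyeBlinkLeft": "left_eyelid_closed",
    "eyeBlinkRight": "right_eyelid_closed",
    "browInnerUp": "brows_raised",
}


def drive_avatar(blend_shapes: dict[str, float],
                 previous: Optional[dict[str, float]] = None,
                 smoothing: float = 0.5) -> dict[str, float]:
    """Turn one frame of face-tracking coefficients into avatar control values.

    Each value is clamped to 0.0-1.0, then blended with the previous frame
    (exponential smoothing) so small tracking jitter doesn't make the avatar twitch.
    """
    previous = previous or {}
    controls = {}
    for shape, control in AVATAR_RIG.items():
        target = min(1.0, max(0.0, blend_shapes.get(shape, 0.0)))
        prior = previous.get(control, target)  # first frame: no history, use target
        controls[control] = prior + smoothing * (target - prior)
    return controls


frame1 = drive_avatar({"jawOpen": 0.8, "eyeBlinkLeft": 1.0})
frame2 = drive_avatar({"jawOpen": 0.0}, previous=frame1)
print(frame1["mouth_open"], frame2["mouth_open"])  # prints: 0.8 0.4
```

Smoothing trades a little responsiveness for stability. A higher `smoothing` value makes the avatar follow the face more tightly.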

Apple also demonstrated the power of ARKit in the hands of third parties, showing off advanced Snapchat selfie filters that used TrueDepth hardware to realistically map all kinds of extravagant imagery onto your face.

Apple has continued to advance its processing capacity and introduce new features, including an adjustable aperture setting for iPhone XR and iPhone XS models. At WWDC19 this year, Apple also revealed software advances under the hood of Vision and CoreML, including advanced recognition features and intelligent ML tools for analyzing the saliency or sentiment of images, text and other data -- intelligence shared across iOS 13, iPadOS, tvOS and macOS Catalina.

It also outlined a new kind of Machine Learning in which your phone itself can securely and privately learn and adapt to your behaviors. Apple had been using this internally to adapt Face ID to your changing appearance, but now third parties can build their own ML models that get even smarter in ways personalized specifically to you. And again, it's private to you on a premium level because the processing happens locally on your iOS device's own hardware. Your data isn't shipped to the cloud, stored, and shared indiscriminately with "partners" the way Amazon, Facebook and Google have been doing.
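The privacy argument rests on where training happens. When the model is updated on the device, the examples it learns from don't need to leave it. Core ML 3 added support for updating models on-device. This Python sketch isn't that API. It is a minimal stand-in that shows the idea with a tiny logistic-regression model updated one example at a time. The class, features and learning rate are all hypothetical.

```python
import math


class OnDeviceModel:
    """Tiny logistic-regression model that learns in place from local examples.

    Illustrates on-device personalisation only: each example is used for one
    gradient step and is not stored or sent anywhere.
    """

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features: list[float]) -> float:
        """Probability (0-1) that this example belongs to the positive class."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features: list[float], label: int) -> None:
        """One stochastic-gradient step on the log loss for a single example (label 0 or 1)."""
        error = self.predict(features) - label
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias -= self.learning_rate * error


# Hypothetical personal signal: this user reacts to feature 0 and ignores feature 1.
model = OnDeviceModel(n_features=2)
for _ in range(200):
    model.update([1.0, 0.0], label=1)
    model.update([0.0, 1.0], label=0)
print(round(model.predict([1.0, 0.0]), 2), round(model.predict([0.0, 1.0]), 2))
```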

Apple also uses TrueDepth attention sensing to tell when you're looking at your phone and when you're not. It uses this to dim and lock your phone faster when you're not using it, or to keep it lit and active while you read a long article or watch a video, even when you're not touching the screen. These frameworks are also shared with developers so they can build similar awareness into their apps.
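The logic is easy to picture as an idle timer in which a detected gaze counts as activity, the same as a touch. While you're reading, the clock keeps resetting, so the timeout itself can afford to be short. This Python sketch is hypothetical. The thresholds are invented, and the `looking` flag stands in for whatever the attention sensor reports.

```python
from dataclasses import dataclass


@dataclass
class AttentionAwareDisplay:
    """Hypothetical idle timer where an attentive gaze counts like a touch."""
    dim_after_s: float = 10.0    # assumed: dim after this much idle time
    lock_after_s: float = 30.0   # assumed: lock after this much idle time
    last_activity_s: float = 0.0

    def tick(self, now_s: float, touched: bool = False, looking: bool = False) -> str:
        """Report the display state at time now_s given the latest touch/gaze readings."""
        if touched or looking:
            self.last_activity_s = now_s  # either signal resets the idle clock
        idle_s = now_s - self.last_activity_s
        if idle_s >= self.lock_after_s:
            return "locked"
        if idle_s >= self.dim_after_s:
            return "dimmed"
        return "on"


display = AttentionAwareDisplay()
print(display.tick(5.0))                 # on: only 5 s idle
print(display.tick(12.0, looking=True))  # on: reading resets the clock
print(display.tick(25.0))                # dimmed: 13 s since you last looked
print(display.tick(42.0))                # locked: 30 s since you last looked
```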

A hard act to follow

Other mobile platforms are increasingly having trouble keeping up with Apple because they haven't invested similar effort into OS, frameworks, and hardware integration. Microsoft famously dumped billions into generations of Windows Phone and Windows Mobile but couldn't attract a base of users capable of attracting a critical mass of development.Google has furiously copied Apple's high level features in Android and even added some of its own, including dark image capturing on its own Pixel phones. But because it has been unable to sell very many Pixel phones, this is a money-losing effort that isn't sustainable. Further, developers can't justify targeting features unique to Pixel. Google also isn't doing much of the work to open up its technologies to allow third parties to this the way Apple has been.

Google has shown advanced ML tech, but mostly in apps designed to collect your data

Both the Pixel's camera features and the advanced ML image and barcode recognition features of the Google Lens app are proprietary; Google hasn't opened up a platform for either. And while Google tried to equate its own version of Portrait capture, which used ML rather than dual-camera or structured-light depth capture, with iPhone X, the reality is that it's limited to the features Google created. The Pixel also lacks an optical zoom and a front-facing 3D imaging system capable of supporting anything like Portrait Lighting, Face ID or Animoji.

Android licensees have also been hesitant to build advanced sensors like TrueDepth, or processors powerful enough for advanced imaging and neural net calculations, into very many of their phones, given that the average Android phone sells for $250 or less. So despite shipping hundreds of millions of handsets in total, there are far fewer high-end Androids in use than there are modern iPhones. Just as with Windows Phone, developers are doing their best and most interesting work on iOS, not Android.

Both Microsoft and Google began working on depth imaging well ahead of Apple, but Microsoft didn't move past its Xbox Kinect body controller. Google's Tango similarly placed its depth sensor on the back of devices, like a rear camera. Apple determined that it would be a lot smarter and more powerful to point its depth sensor at the user, enabling all of the advanced features it has shown off to date, including the latest FaceTime Attention Correction.

Comments

I’m kidding, I love my job. I just don’t like meetings.

The more used to the anticipation of our actions these devices make us, the more stupid these devices seem when their actions are not a correct anticipation of our wants. As a result of more and more anticipation and interpretation, and the inevitable errors, devices are growing more irritating per revision as a result of the added artificial stupidity.

Fully manual devices waste less time than auto-everything because we can stop our own fat-finger typing mistakes in action before they turn into long words. Try to correct yourself with autocorrect and it will fall over and give up as soon as you backspace. But turn off autocorrect entirely and you’ll see how much of a disaster a touchscreen keyboard really is.

So when will that keyboard code evolve? It hasn’t evolved to handle fast and/or two-thumbed typing. Hell, it can’t even handle a left-handed person’s thumb as well as a right-handed person’s (the alignment is designed to handle only the right thumb).

And we humans who type fast don’t magically lose the letter t when we type the word “the” (and very many other close letter combos) very fast on a physical keyboard, like iOS does with its touchscreen keyboard. Oh gee, sorry; we didn’t know you didn’t mean to say “he chair by he door”...

It still has no context awareness for “its” and “it’s”, or anything else grammatical.

Why is this area not getting evolved?

WTF is an "Android killer"?

Apple is the one to kill in all these tech articles. It's like you made some crap up to seem like Apple is behind and reversed a common phrase to make you believe it.

Snapchat has got gender swop and other extreme filters - DED loses his shit because Apple can change your eyes.

And these lies: "Google has shown advanced ML tech, but mostly in apps designed to collect your data." What another joke. Google Cloud, Azure etc. all have these incredible image, video and cognition APIs - https://cloud.google.com/vision/. It can literally take you half an hour to do what Apple did with FaceTime.

lol.

You sound like the guys in the early PC days scoffing at computers and talking about how much more you can do on a terminal mainframe.

Also, I've written well over a thousand articles since 2012, when the article you're supposedly still butthurt about ran. But that article wasn't "about" StreetView being creepy; that was the headline put on it for SEO. In fact, the article I wrote doesn't say anything about being "creepy" at all. If you'd read it, you'd know that.

What I actually wrote was: "While not without controversy from privacy advocates (and with government stopping Google's work of StreetView mapping the world in countries like Australia, Germany and India), Google has effectively covered vast areas of Europe, Russia, North America, Brazil, Japan, Thailand, South Korea and South Africa with its camera cars."

Again, if you'd actually read the article rather than googling for a quick personal attack, you'd know that said "there are a variety of attractive features Google has bundled into its web and app-based Google Maps portfolio, providing a tall order for anyone hoping to replicate all those features. Apple certainly has big shoes to fill in the maps department."

It applauded Google for delivering the future, noting that "Conceptually, StreetView can be dated back to an ARPA project developed at MIT, which in 1978 began mapping the town of Aspen, Colorado, using photographs stored on interactive Laserdisc, allowing users to virtually walk down streets, and even bring up seasonal views of the town from multiple perspectives.

The Aspen Movie Map was one of the first advanced examples of hypermedia, and was described by a young Steve Jobs in a 1983 speech describing the future potential of personal computing.

"It's really amazing," Jobs said, describing the project in contrast with conventional, static forms of old media. "It's not incredibly useful," he added as the audience laughed, "but it points to some of the interactive nature of this new medium which is just starting to break out from movies, and will take another five to ten years to evolve."

So the phony here is you, falsely attacking me for something from 2012 in the most childish fashion possible.

You're utterly clueless. There's a reason why this requires the A12 processor: it's HIGHLY CPU intensive, and not in any way comparable to throwing on shitty Snapchat filters.

Unless I missed it, I didn't see anyone explain if this effect can work when there are multiple people in the image.

To make this believable, they need a pretty serious processor and AI smarts.

This is so obvious, now that I’ve seen it.

Still, I hope they don’t forget the off switch.