Apple working on gaze tracking to make AR & VR faster & more accurate

Apple's long-rumored augmented reality or virtual reality headset could offer eye tracking as part of its control system, with the iPhone maker coming up with a way to make such a gaze-tracking system as accurate as possible, even when the headset is in motion.

Facebook's Oculus Rift, a VR headset without eye tracking

The mouse and keyboard have been a staple control system for Macs and other computers for some time, with touch being the main way of commanding an iPhone or iPad. While voice has become a bigger interaction method in recent years, especially on mobile devices, it isn't quite accurate or flexible enough to work for all tasks, such as actions where a user selects a specific point on a display.

For head-mounted displays, the control systems for virtual reality largely consist of motion-tracked hand-held controllers that are mapped in relation to the headset's position, and are usually represented within the application by a cursor or a hand-like model.

Eye tracking could provide an alternate way for users to interact with a virtual reality environment, by detecting what the user is specifically focusing on within the headset's displays. This could provide a more natural and accurate interaction with a menu or other software system than using hands, as there is less awkwardness than grasping and selecting a virtual object in real-world space.

However, eye tracking is limited in its accuracy because the user's headset is constantly in motion. Even when fixed to the user's head, the headset will still shift around, with the distance between the user's eyes and the eye tracking system varying all the time, reducing how effective it could be.

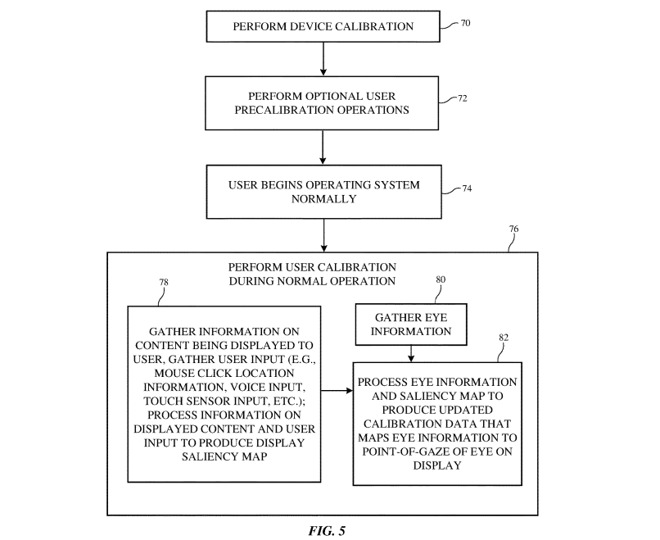

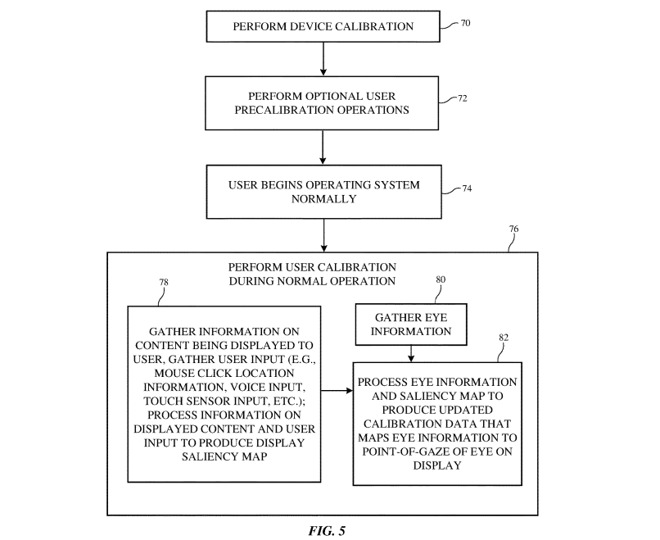

A patent granted to Apple by the U.S. Patent and Trademark Office on Tuesday, titled "Electronic device with gaze tracking system," aims to solve the drift issue using probability and scene detection. Rather than proposing an entirely new hardware solution, the patent effectively suggests pairing a software system with an established eye tracking system to enhance the ability to select items.

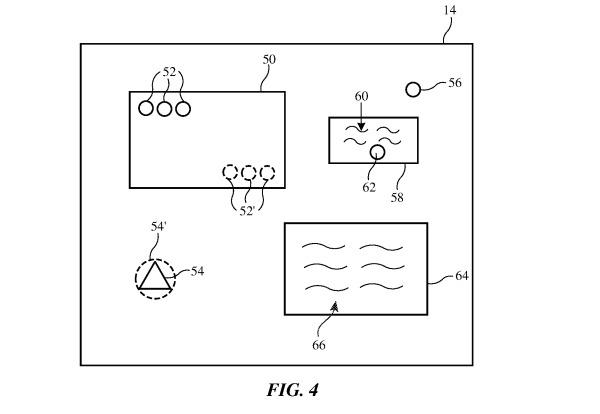

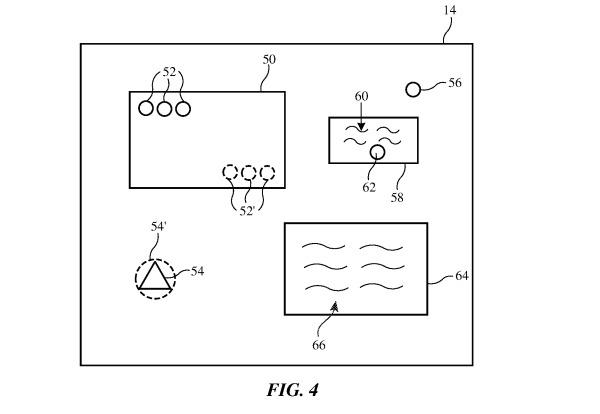

The patent describes how "control circuitry" in the device can produce a "saliency map," a probability density map for content being shown on the display, identifying points of interest that the user could interact with within their field of view. For example, this could include selectable buttons, text, or interactive elements within a scene.
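To make the idea of a probability density map concrete, here is a minimal sketch. It is an illustration only, not the method in Apple's patent: the function name `build_saliency_map`, the grid cell size, the Gaussian falloff, and the element weights are all assumptions chosen for the example.

```python
import math

def build_saliency_map(width, height, elements, cell=20, sigma=30.0):
    """Return a grid of probabilities (a list of rows) that sums to 1.

    `elements` is a list of (x, y, weight) tuples for hypothetical
    points of interest such as buttons or menu items. Each one adds a
    Gaussian bump of interest around its position.
    """
    cols, rows = width // cell, height // cell
    grid = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Centre of this grid cell in display coordinates.
            cx, cy = (c + 0.5) * cell, (r + 0.5) * cell
            for ex, ey, weight in elements:
                d2 = (cx - ex) ** 2 + (cy - ey) ** 2
                grid[r][c] += weight * math.exp(-d2 / (2 * sigma ** 2))
    total = sum(map(sum, grid))
    if total == 0:
        raise ValueError("no salient content on the display")
    # Normalise so the map behaves like a probability distribution.
    return [[v / total for v in row] for row in grid]

# Two made-up interface elements: a prominent button and a minor label.
elements = [(110, 90, 3.0), (310, 210, 1.0)]
saliency = build_saliency_map(400, 300, elements)

# Locate the most probable cell as (probability, row, column).
peak = max((v, r, c) for r, row in enumerate(saliency) for c, v in enumerate(row))
print("most salient cell (row, col):", peak[1], peak[2])
```

The heavier-weighted element dominates the map, which is the property a selection system would rely on when deciding what a user probably wants to look at.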

A simplified example of how users may look at specific points of a display for a saliency map complete with points of interest and menus.

In normal use, if a user wants to select something, the gaze detection system can determine where the user is looking and, using the saliency map, estimate what they are specifically looking at, with a separate controller used to confirm the selection.
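The selection step described above can be sketched as a simple probabilistic guess. This is an assumed formulation rather than anything specified in the patent: the `Target` class, the `pick_target` function, the Gaussian noise model, and the `sigma` value are all hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Target:
    name: str
    x: float          # display position in pixels
    y: float
    saliency: float   # prior probability that the user wants this item

def pick_target(gaze_x, gaze_y, targets, sigma=40.0):
    """Combine the saliency prior with distance from the measured gaze.

    Returns (best_name, posterior), where posterior maps each target
    name to its normalised probability.
    """
    scores = {}
    for t in targets:
        d2 = (t.x - gaze_x) ** 2 + (t.y - gaze_y) ** 2
        # Assumed Gaussian model of gaze measurement noise.
        likelihood = math.exp(-d2 / (2 * sigma ** 2))
        scores[t.name] = t.saliency * likelihood
    total = sum(scores.values())
    if total == 0:
        return None, {}
    posterior = {name: s / total for name, s in scores.items()}
    return max(posterior, key=posterior.get), posterior

targets = [
    Target("play_button", 300, 200, 0.6),
    Target("caption_text", 340, 260, 0.1),
    Target("menu_icon", 600, 50, 0.3),
]

# The measured gaze lands slightly nearer the caption than the button.
best, posterior = pick_target(325, 235, targets)
print(best)
```

In this example the measured gaze point is physically closer to the caption, but the button's higher saliency tips the estimate toward it. A separate controller input would then confirm the choice.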

As well as aiding item selection, the saliency map can also help make the eye tracking system more accurate. By comparing the measured gaze point against the areas of interest on the saliency map in the user's view, along with data from voice and other sources, the system could determine the headset's drift from its starting position.

This drift detection allows for real-time calibration of the eye-tracking system as a whole, making subsequent gaze detections more accurate.
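One way to picture this kind of running calibration is a smoothed offset estimate that is updated each time a selection is confirmed. The sketch below is an assumption for illustration and not the patent's algorithm: the `DriftCalibrator` class and its exponential smoothing factor are hypothetical.

```python
class DriftCalibrator:
    """Track a slowly changing offset between measured and true gaze."""

    def __init__(self, smoothing=0.2):
        if not 0.0 < smoothing <= 1.0:
            raise ValueError("smoothing must be in (0, 1]")
        self.smoothing = smoothing
        self.offset_x = 0.0
        self.offset_y = 0.0

    def correct(self, raw_x, raw_y):
        """Apply the current drift estimate to a raw gaze measurement."""
        return raw_x - self.offset_x, raw_y - self.offset_y

    def update(self, raw_x, raw_y, target_x, target_y):
        """Refine the drift estimate after the user confirms a target.

        The gap between the raw gaze point and the confirmed target is
        treated as one noisy sample of the current drift.
        """
        a = self.smoothing
        self.offset_x = (1 - a) * self.offset_x + a * (raw_x - target_x)
        self.offset_y = (1 - a) * self.offset_y + a * (raw_y - target_y)

calibrator = DriftCalibrator()

# Simulate a headset that has slipped, shifting readings by (12, -8) pixels.
for _ in range(50):
    calibrator.update(raw_x=212, raw_y=92, target_x=200, target_y=100)

corrected = calibrator.correct(512, 292)
print(corrected)
```

Exponential smoothing is used here so that a single odd selection does not swing the calibration, while a sustained shift is gradually absorbed.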

A flowchart describing repeated calibration of an eye tracking system during use

Apple files numerous patent applications with the USPTO on a weekly basis, and while there is no guarantee the concept will make its way into a future product or service, it does indicate areas of interest for Apple's research and development efforts.

Apple is widely believed to be working on AR and VR devices, and to have been doing so for a number of years, with AR-based smart glasses thought to be on the horizon for release sometime in 2020.

The company has amassed quite a few patents for related technologies, and has even made attempts to promote the use of its products for AR and VR, including demonstrating VR on macOS and introducing ARKit for iOS. In 2017, Apple also acquired SensoMotoric Instruments, a German firm specializing in eye-tracking technology.

In 2018, Apple filed a patent application for an eye-tracking system using a "hot mirror" to reflect infrared light into a user's eyes, one that could ultimately be mounted extremely close to the user's face to make the headset as comfortable as possible. A 2015 gaze-based eye tracking patent was granted for controlling an iOS or macOS device by looking at the screen, while also mitigating the issue of the Troxler Effect that can affect vision-based interactions.

One May 2019 patent application detailed how to determine which of a user's eyes is dominant, a data point that could further help eye tracking systems by weighting positioning data from the dominant eye more heavily than data from the weaker eye.
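Weighting by eye dominance can be illustrated with a simple weighted average of the two per-eye gaze estimates. The function name `fuse_gaze` and the 0.7 weight are assumptions for this sketch; the patent application's actual weighting scheme is not described here.

```python
def fuse_gaze(left, right, dominant="right", dominant_weight=0.7):
    """Blend per-eye gaze points, trusting the dominant eye more.

    `left` and `right` are (x, y) gaze estimates in display coordinates.
    `dominant_weight` is a hypothetical tuning value between 0.5 and 1.
    """
    if dominant not in ("left", "right"):
        raise ValueError("dominant must be 'left' or 'right'")
    if not 0.5 <= dominant_weight <= 1.0:
        raise ValueError("dominant_weight must be between 0.5 and 1.0")
    w_left = dominant_weight if dominant == "left" else 1.0 - dominant_weight
    w_right = 1.0 - w_left
    return (
        w_left * left[0] + w_right * right[0],
        w_left * left[1] + w_right * right[1],
    )

# The two eyes disagree by 10 pixels horizontally; the right eye is dominant.
fused = fuse_gaze((100.0, 200.0), (110.0, 200.0), dominant="right")
print(fused)
```

The fused point sits nearer the dominant eye's estimate, so errors from the weaker eye have less influence on the final gaze position.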

Comments

Even more interesting, when our eyes move, we go totally blind for a few milliseconds. This phenomenon is called saccadic masking. You can verify it experimentally in a mirror. No matter how hard you try, you will never be able to see your own eyes move, because they shut down while moving.

Taken together, these allow for something called foveated rendering, where the device tracks where the user's fovea is, and renders only that small patch with high detail. It then renders everything else much more coarsely. As long as the time to render a frame can be kept below the saccadic masking duration, rendering in this way is imperceptible to the user. This means it only needs to render around 3% of the screen, which would allow even a phone GPU to render VR/AR meeting today's standards for angular resolution.

This has even been done before in a series of experiments in the 70s. Subjects were presented with a block of text, which was blurred outside where their fovea was predicted to land. They had no idea this was happening.

That explains a lot - In California, I can hold my iPhone for navigation per the California supreme court ruling when driving - but it always feels dangerous when I track my eyes from and back to the road - that helps explain it.

With Apple inventing every damn user interface it makes sense Apple would find new ways to control new tech.

One concern I have about eye-tracking as a UI for AR glasses is that I have nystagmus (involuntary eye movement). While I can imagine that it might be possible to train a computer to infer the intent of my gaze by filtering out the "noise" of the nystagmus, I can also imagine that most companies wouldn't bother to do that (or even be aware of the issue). Apple is more focused on meeting the needs of people with a variety of physical eccentricities/impairments, so if anybody would attempt to deal with this, it would be Apple. But it's so unusual that I'm not sure even Apple would try to deal with it. I would just hate to miss out on what I suspect is going to be a very cool/compelling product.

It should be possible to differentiate between the involuntary sliding motion and the corrective saccades. Given infinite resolution, whatever is being shown to the user could slide around the view to match the involuntary sliding. The challenge would then be differentiating between saccades to correct the slide and saccades to intentionally move the view. Not sure how that would be done.