Holographic elements could give Apple AR headset an immersive experience

Apple's rumored augmented reality headset may use a holographic combiner to project light onto the wearer's eyes, while an eye-tracking system for monitoring the gaze of the user could be used to provide more information about an environment based on where the user is actively looking.

Apple's current AR offering revolves around ARKit in iOS devices

There have been many rumors about Apple's supposed AR headset or smart glasses, along with a number of regulatory filings indicating the company is exploring the possibilities of the device. While there are already many VR headsets and AR devices on the market, it seems Apple is still keen to iron out design issues to offer what could be a superior version once released.

The patent application for "Display Device," published by the US Patent and Trademark Office on Thursday, describes a complex system for displaying an image for the user in an AR headset. Rather than using a display showing a composite view of the environment and virtual content, Apple proposes the use of a "reflective holographic combiner" to serve the same purpose, reflecting light for the AR elements while at the same time passing through environmental light.

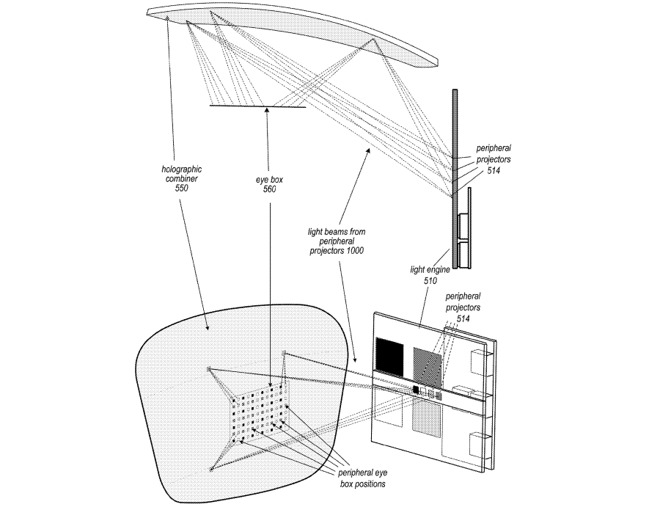

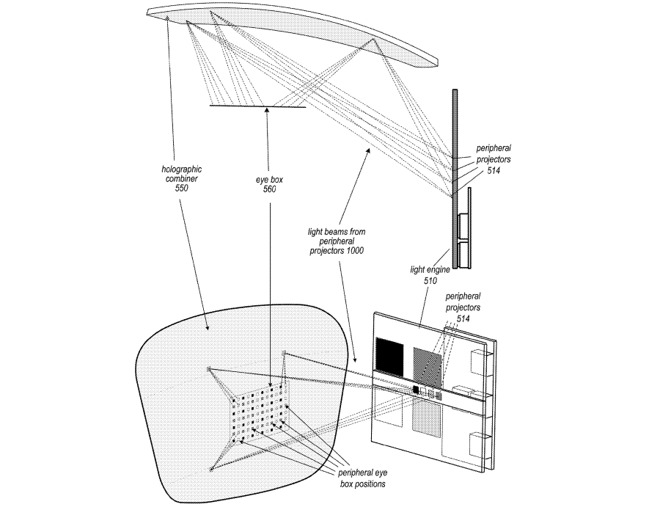

The holographic combiner is used with a light engine to project light into specific points of the combiner to create the representation of the scene. The light engine can consist of multiple image-projection systems, including laser diodes, LEDs, and other sources used as part of foveal and peripheral projectors.

An example of how the holographic combiner and different projectors could be used in an AR headset

The foveal projectors are intended to provide high-detail images for the foveal part of the retina, while the peripheral projectors can provide a lower-resolution image for other areas of the retina that cannot discern detail at the same level. The light engine can also include optical waveguides with holographic and diffractive gratings to generate beams of light at specific angles to scanning mirrors, which are then directed into other waveguides feeding them into the holographic combiner.

The key reason for the patent application's development is to eliminate "accommodation-convergence mismatch problems," where the virtual object is overlaid on the environment without taking into account the level of depth of field that the user expects it to have. This visual error can cause eyestrain, headaches, and nausea in some cases.
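To give a rough sense of the mismatch, the sketch below compares where the eyes converge with where they must focus. It is an illustration only and is not taken from the filing: the function names, the 63 mm interpupillary distance, and the fixed 2 m focal plane in the usage lines are all assumptions.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two eyes' lines of sight when fixating at distance_m.

    ipd_m is an assumed interpupillary distance (63 mm), not a value from the filing.
    """
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def focus_mismatch_diopters(virtual_distance_m, focal_plane_m):
    """Gap between the focus the eyes expect and the focus the optics demand.

    Focus demand in diopters is 1 / distance in meters.
    """
    return abs(1 / virtual_distance_m - 1 / focal_plane_m)


# Hypothetical case: a virtual object drawn 0.5 m away on optics focused at 2 m.
angle = vergence_angle_deg(0.5)
mismatch = focus_mismatch_diopters(0.5, 2.0)
print(f"vergence {angle:.2f} deg, focus mismatch {mismatch:.2f} D")
```

The larger the mismatch figure, the more the eyes are asked to converge at one distance while focusing at another, which is the conflict the filing aims to remove.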

By including projectors for foveal and peripheral areas of the user's vision, in tandem with an eye-tracking system to determine the gaze, Apple hopes to tackle the problem once and for all.

Apple also says that using the holographic combiner eliminates the need for extra moving parts or mechanical elements to compensate for the eye changing position, or for changing the optical power during a scan, which simplifies the system architecture. It also suggests the holographic combiner can be implemented using a relatively flat lens, rather than the curved reflective mirror used in direct retina projector systems.

A second patent application filing attempts to cover the gaze element, in a document titled "Image Enhancement Devices with Gaze Tracking." Rather than dealing with the hardware side, the filing largely covers the intention of making an AR scene fit the real-world environment being viewed.

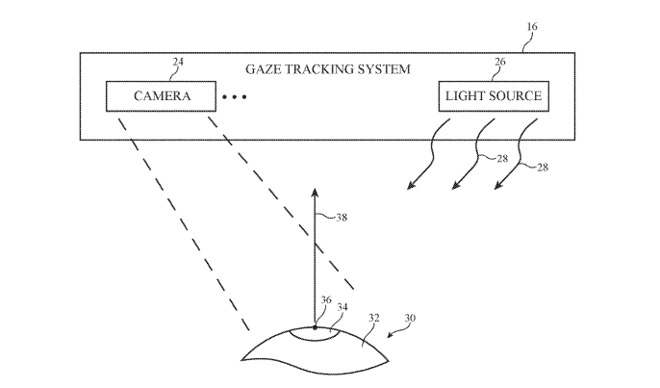

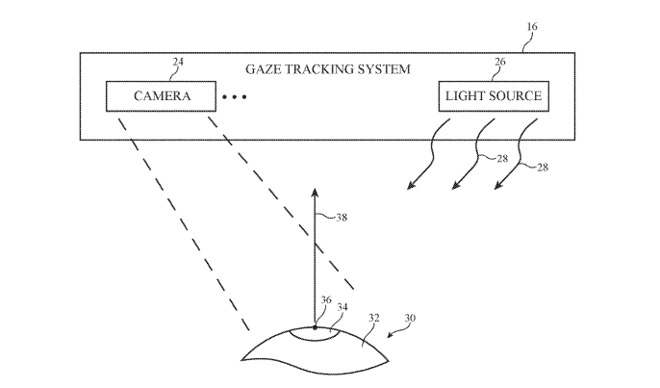

The system chiefly consists of a camera to capture the world, as well as a gaze tracking system, and a display. The gaze tracking system is configured to determine the point-of-gaze information, while control circuitry is configured to display a variety of content based on that data.

A simplified example of a gaze tracking system using light sources and cameras

This data can include "magnified supplemental content," such as a zoomed-in image of the real world, a computer-generated graphic, and other elements. The external camera is used to identify areas of the user's real-world vision that could be points of interest, and could have extra information added for the user's consumption, like face and object identification.

This could lead to features such as using optical character recognition to read text in a scene, then providing a more readable version to the user, or a version in a different language.

While the point-of-gaze could help inform what data to provide to the user, the filing also proposes the use of a "point-of-gaze gesture" to trigger such elements, along with using a "dwell time" to bring up data if the user's vision lingers in a specific point. Blinking could also be used, such as with repeated blinks in quick succession enabling or disabling a zoom function.
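As a minimal sketch of how a dwell-time trigger might work, the code below reports the first point where the gaze stays within a small radius for long enough. The `GazeSample` type, the `first_dwell` function, and the radius and duration values are hypothetical; the filing does not specify an algorithm or thresholds.

```python
from dataclasses import dataclass
from math import hypot
from typing import Iterable, Optional, Tuple


@dataclass
class GazeSample:
    t: float  # timestamp in seconds
    x: float  # normalized horizontal gaze position, 0..1
    y: float  # normalized vertical gaze position, 0..1


def first_dwell(
    samples: Iterable[GazeSample],
    radius: float = 0.05,   # assumed tolerance for small eye movements
    dwell_s: float = 0.8,   # assumed time the gaze must linger
) -> Optional[Tuple[float, float, float]]:
    """Return (x, y, trigger_time) for the first dwell, or None if there is none."""
    anchor = None
    for s in samples:
        if anchor is None or hypot(s.x - anchor.x, s.y - anchor.y) > radius:
            # Gaze moved away from the anchor: restart the timer here.
            anchor = s
        elif s.t - anchor.t >= dwell_s:
            # Gaze has stayed near the anchor for long enough.
            return (anchor.x, anchor.y, s.t)
    return None


# Hypothetical samples: the gaze lingers near the center of the view.
lingering = [
    GazeSample(0.0, 0.50, 0.50),
    GazeSample(0.3, 0.51, 0.50),
    GazeSample(0.6, 0.50, 0.51),
    GazeSample(0.9, 0.50, 0.50),
]
print(first_dwell(lingering))
```

A blink-based toggle could follow the same pattern, counting blinks inside a short time window instead of measuring how long the gaze stays still.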

Apple files numerous patent applications on a weekly basis, but while the existence of a patent application points to areas of interest for the iPhone maker, it doesn't guarantee the concepts will make their way into a future product or service.

A foveated display was raised as an idea in a June filing, combining highly detailed images where the user is actively looking with lower-resolution versions serving the periphery. Apple proposed the system could help provide higher refresh rates by reducing the amount of data the headset display needs to update.
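The saving from a foveated display can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope illustration under assumed numbers: the function name, the 2000 x 2000 panel, the 500 x 500 foveal region, and the 4x peripheral downscale are all hypothetical and do not come from Apple's filings.

```python
def foveated_pixel_budget(width, height, fovea_w, fovea_h, divisor):
    """Estimate pixels updated per frame with a full-resolution foveal region
    and a periphery rendered at 1/divisor resolution along each axis."""
    full = width * height
    fovea = fovea_w * fovea_h
    # Each peripheral "pixel" covers divisor x divisor panel pixels.
    periphery = (full - fovea) // (divisor ** 2)
    total = fovea + periphery
    return total, total / full


# Hypothetical panel and region sizes.
total, ratio = foveated_pixel_budget(2000, 2000, 500, 500, 4)
print(f"{total} pixels per frame, {ratio:.1%} of the full-resolution load")
```

Under these assumed figures, each frame needs roughly an eighth of the data of a full-resolution update, which is the kind of headroom that could go toward a higher refresh rate.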

Gaze tracking can also help VR systems become more accurate. An August filing suggested head-mounted displays could use it to reduce the "drift issue" by continually correcting the display if the headset moves differently to the user's eyes during motion.

Outside of rumors and filings, Apple has recently included references to augmented reality glasses in internal betas of iOS 13, the Gold Master release, and a beta of Xcode, effectively confirming such a device is in development.

Comments

Sounds reasonable 🙄

IMO Robert Scoble and his shower post was the beginning of the "OMG a CAMERA!!" movement and subsequent banning of Google Glass by a high-profile but small handful of restaurants and it went downhill from there.

So today we all seem to be more at ease with the cameras around us, no longer automatically fearing them in our smart devices. Video doorbells, home security, SnapChat and Instagram...

The timing was wrong in 2012 when Google Glass was first revealed. With 7 years of improvements in hardware and technology, and 7 years for people to digest the fact that cameras can be helpful, useful and fun and not just intrusive, now might be an OK time to revisit a consumer version. If Apple decides to release one of their own I'd expect Google to watch the winds and, if they're blowing the right way, quickly release a revised consumer Glass.

“May God us keep

From single vision and Newton’s sleep.”

Lines meant for those who live in their left hemispheres, from William Blake in 1802. Apple’s mission to develop this new stereo visual technology, which is uniquely suited to the resources and talents of the company, will change the nature of human perception itself.

Since the invention of 2D media such as the phonetic alphabet and the printed book or page, not to mention 2D cinema and video screens, we’ve been unknowingly crippled by technologies that train our visual systems not to see in depth.

Wearable 3D vision amplifiers will be much more important than transportation, except now that I think of it, Apple’s version of the automobile will also be an exercise in depth vision and augmented realities.

This was all subliminal, and something which designers and engineers at Apple will see as aesthetic laws that must not be transgressed.

Again, that is. They blew it with the hockey puck mouse: no bilateral symmetry.

That's probably mostly changed now, and definitely would if Apple also got in the game. Like magic it would all be OK. Put a million of them out there and now it's the cool thing. A million buyers of an expensive headset ain't gonna fail to push back against FUD. So it has a camera. What, me worry? Who cares.