Researchers can hijack Siri with inaudible ultrasonic waves

Security researchers have discovered a way to covertly hijack Siri and other smartphone digital assistants using ultrasonic waves, which humans cannot normally hear.

The attack, inaudible to the human ear, can be used to read messages, make fraudulent phone calls or take pictures without a user's knowledge.

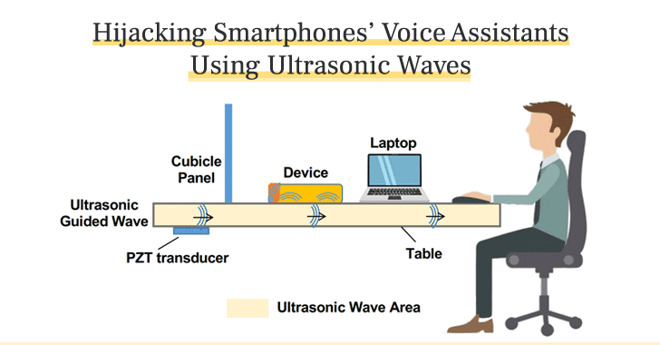

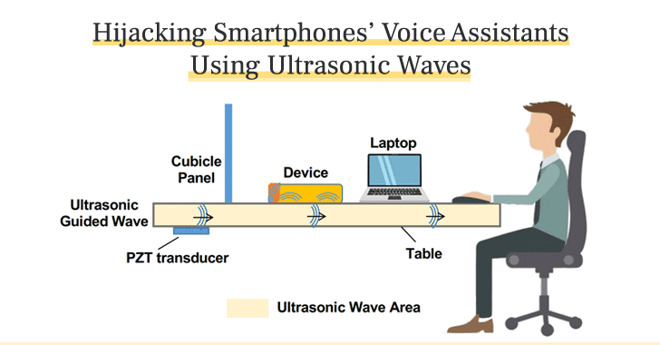

Dubbed SurfingAttack, the exploit uses high-frequency, inaudible sound waves to activate and interact with a device's digital assistant. While similar attacks have surfaced in the past, SurfingAttack focuses on the transmission of said waves through solid materials, like tables.

The researchers found that they could use a $5 piezoelectric transducer, attached to the underside of a table, to send these ultrasonic waves and activate a voice assistant without a user's knowledge.

Using these inaudible ultrasonic waves, the team was able to wake up voice assistants and issue commands to make phone calls, take pictures or read a message that contained a two-factor authentication passcode.

To further conceal the attack, the researchers first sent an inaudible command to lower a device's volume before recording the responses using another device hidden underneath a table.

SurfingAttack was tested on a total of 17 devices and found to be effective against most of them. Select Apple iPhones, Google Pixels and Samsung Galaxy devices are vulnerable to the attack, though the research didn't note which specific iPhone models were tested.

All digital assistants, including Siri, Google Assistant, and Bixby, are vulnerable.

Only the Huawei Mate 9 and the Samsung Galaxy Note 10+ were immune to the attack, though the researchers attribute that to the different sonic properties of their materials. They also noted the attack was less effective when used on tables covered by a tablecloth.

The technique relies on exploiting the nonlinearity of a device's MEMS microphone. These microphones are used in most voice-controlled devices and include a small diaphragm that can translate sound or light waves into usable commands.
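To illustrate why microphone nonlinearity matters, here is a minimal Python sketch. It is not the researchers' code. It assumes a simplified microphone model of the form `output = a1*x + a2*x^2`, and the sample rate, carrier frequency, command tone and gain values are all hypothetical numbers chosen for illustration. An audible 1 kHz tone (standing in for a voice command) is amplitude-modulated onto a 25 kHz carrier, so the transmitted signal contains no audible energy. The small quadratic term in the microphone model brings the 1 kHz tone back.

```python
import cmath
import math

SAMPLE_RATE = 200_000   # Hz, assumed; high enough to represent the carrier
NUM_SAMPLES = 2_000     # 10 ms of signal, so each tone lands on an exact DFT bin
CARRIER_HZ = 25_000     # assumed ultrasonic carrier, above the ~20 kHz hearing limit
COMMAND_HZ = 1_000      # assumed audible tone standing in for a voice command


def modulated_signal():
    """Amplitude-modulate the audible tone onto the ultrasonic carrier."""
    samples = []
    for n in range(NUM_SAMPLES):
        t = n / SAMPLE_RATE
        command = math.cos(2 * math.pi * COMMAND_HZ * t)
        carrier = math.cos(2 * math.pi * CARRIER_HZ * t)
        samples.append((1 + command) * carrier)
    return samples


def microphone(samples, linear_gain=1.0, quadratic_gain=0.0):
    """Hypothetical microphone model: output = a1*x + a2*x^2."""
    return [linear_gain * x + quadratic_gain * x * x for x in samples]


def amplitude_at(samples, freq_hz):
    """Amplitude of one frequency component, computed with a single-bin DFT."""
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / SAMPLE_RATE)
        for n, x in enumerate(samples)
    )
    return 2 * abs(acc) / len(samples)


transmitted = modulated_signal()
ideal = microphone(transmitted)                           # perfectly linear microphone
nonlinear = microphone(transmitted, quadratic_gain=0.1)   # slightly nonlinear microphone

print(amplitude_at(transmitted, COMMAND_HZ))  # essentially zero: nothing audible is sent
print(amplitude_at(ideal, COMMAND_HZ))        # essentially zero: a linear mic hears nothing
print(amplitude_at(nonlinear, COMMAND_HZ))    # about 0.1: the command tone reappears
```

In this toy model, squaring the modulated signal produces a low-frequency copy of the original tone, which is why a command can reach the assistant without ever being audible in the room. Real microphones and amplifier chains are more complicated than this two-term model.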

While effective against smartphones, the team discovered that SurfingAttack doesn't work on smart speakers like Amazon Echo or Google Home devices. The primary risk appears to be covert devices, hidden in advance underneath coffee shop tables, office desks, and other similar surfaces.

The research was published by a multinational team of researchers from Washington University in St. Louis, Michigan State University, the Chinese Academy of Sciences, and the University of Nebraska-Lincoln. It was first presented at the Network and Distributed System Security Symposium on Feb. 24 in San Diego.

SurfingAttack is far from the first attack to use inaudible sound waves to exploit vulnerabilities. The research builds on several previous projects, including the similarly named DolphinAttack.

Comments

I'm actually surprised that the microphones could transduce at those frequencies. Given the attenuation of ultrasonic waves at any air-solid interface, this attack would be limited to the example given above, where the phone is placed directly on a solid surface that conducts the sound waves. I also assume that many cases would effectively block the attack by reducing the transmission (the article implies this when it talks about the Mate 9 and Note 10+).

Presumably this could also be blocked universally by altering the phone software to recognize and block the frequencies used in the attack.

So, let me understand: they're not telling you which iPhone models or which versions of iOS were tested, even though later iOS versions can tell one individual's voice from another's. I thought that when independent research is done, the researchers provide their testing configurations to legitimize their conclusions.

If you don’t use WiFi. Disable it.

If you don’t use Siri. Disable it.

If I need any one of them turn them on... it only takes a second.

You never know when a new vulnerability will be found. There’s no reason to stress about it, but you might as well lock things down as much as possible.

Yes, a video demonstration is needed to back this up. I had the same experience. The audio of Siri's responses doesn't change when you ask her to change the volume to 0. Only media playback volume is adjusted. Even asking her to turn on Do Not Disturb mode gives audible feedback that it was done.

While maybe not entirely realistic, all research teaches us things we didn't know before, so it's all beneficial.

The attack used ultrasonic sound - an audio file won’t help you.

randominternetperson said: Yeah, except Siri gets activated on a regular basis by other people, the radio, etc, so any biometric identification used is pretty weak.

From a scientific standpoint it would probably be better if we define ultrasonic specifically from 20,000 Hertz to either infinity or some upper level where another term gets used. Note that medical ultrasounds usually operate at around 10,000 Hertz.

Yes, there is the "training" that one does when setting up a new phone, but from what I've seen, that just sets Siri's expectations for speech patterns, not the voice itself.