Apple Glass could communicate with other devices for accurate AR mapping

The long-rumored Apple Glass could take advantage of data from other units to accurately map an environment, with head-mounted displays capturing data on a scene and sharing it for a better augmented reality experience.

Augmented reality depends on accurately measuring the geometry of an environment, with imaging devices performing the task as their cameras move around an area. For a single headset, this can be a relatively slow process. Performing the same task with multiple devices can help, but it also creates its own problems.

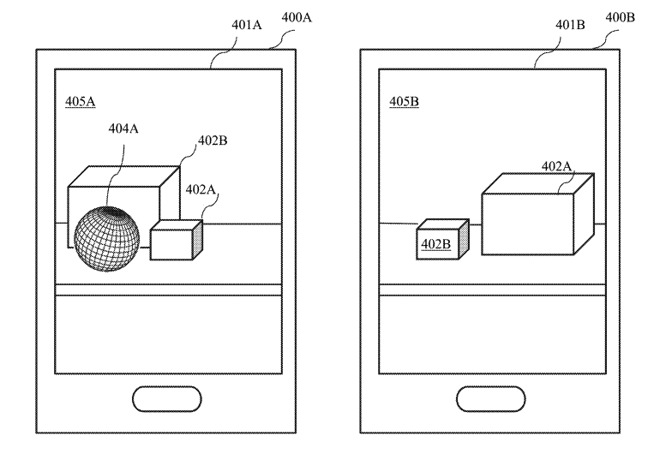

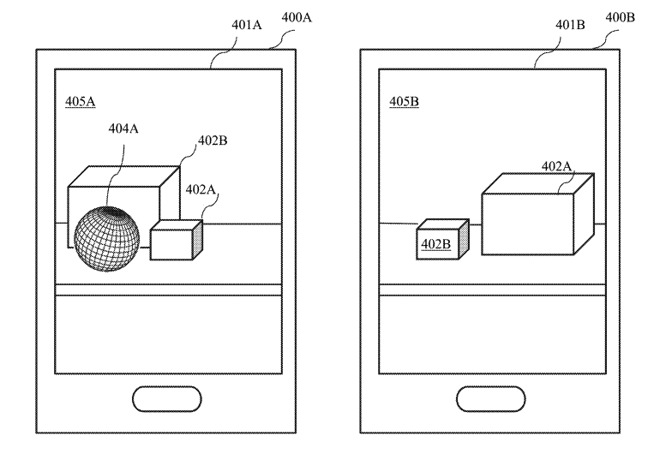

For a start, if multiple AR devices map an environment independently, the geometry in their respective maps may not match exactly. Two people viewing an area based on independently gathered data may therefore see the same virtual object in slightly different positions because of the variations between maps.

In a patent titled "Multiple user simultaneous localization and mapping (SLAM)," granted to Apple on Tuesday by the US Patent and Trademark Office, Apple suggests that multiple devices could share mapping data to create a more accurate map for all users.

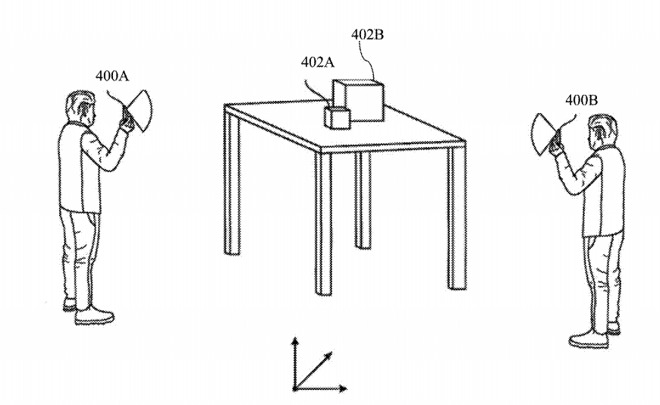

Multiple users at different viewpoints can help generate AR maps faster.

Sharing data helps in a few ways. Multiple devices can generate an initial map of an area faster than one device on its own, and one device can capture areas that a second device either can't see or has missed entirely. Duplicate data points for the same mapped area are also useful, as they can be used to correct errors in a generated map.
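
The patent summary doesn't say how duplicate observations are reconciled. As a minimal sketch, assuming both devices' estimates of the same map point are already in one shared coordinate frame and each carries a single (isotropic) variance, an inverse-variance weighted average is one standard way to combine them. `PointEstimate` and `fuse` are hypothetical names for illustration, not Apple's method.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PointEstimate:
    """One device's estimate of a 3D map point (hypothetical structure)."""
    x: float
    y: float
    z: float
    variance: float  # assumed isotropic positional uncertainty, in m^2


def fuse(estimates: List[PointEstimate]) -> PointEstimate:
    """Combine duplicate observations with an inverse-variance weighted mean.

    Assumes every estimate is already expressed in one shared coordinate
    frame. More certain observations (smaller variance) get more weight.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    x = sum(w * e.x for w, e in zip(weights, estimates)) / total
    y = sum(w * e.y for w, e in zip(weights, estimates)) / total
    z = sum(w * e.z for w, e in zip(weights, estimates)) / total
    # The fused variance is never larger than the smallest input variance.
    return PointEstimate(x, y, z, 1.0 / total)


# Two headsets observe the same corner; device B is twice as certain.
a = PointEstimate(0.0, 1.0, -2.0, variance=1.0)
b = PointEstimate(3.0, 1.0, -2.0, variance=0.5)
print(fuse([a, b]))
```

Here the fused x coordinate lands at 2.0, closer to the more certain device B, and the combined variance (1/3) is lower than either input, which is the sense in which redundant data can correct a map.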

A more accurate map allows virtual markers to be placed more precisely in the world, so digital objects and scenes appear in the same real-world location when viewed from multiple headsets or devices.

In Apple's description, imaging sensors on each device create keyframes from images of the environment, with each keyframe tied to that device's own coordinate system. A keyframe can consist of image data, additional data, and a representation of the device's pose, among other items. Each device then generates a map of relative locations.
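
To make the keyframe contents concrete, here is a minimal sketch of such a record, assuming the pose is stored as a 4x4 homogeneous transform (a common SLAM convention, not something the patent summary specifies). The `Keyframe` class and all of its field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class Keyframe:
    """Hypothetical keyframe record; field names are illustrative, not Apple's."""
    device_id: str        # which headset captured this keyframe
    timestamp: float      # capture time, in seconds
    image: bytes          # encoded image data
    # Device pose as a 4x4 homogeneous transform (rotation + translation),
    # expressed in this device's own coordinate system, not a shared one.
    pose: List[List[float]]
    extra: Dict[str, Any] = field(default_factory=dict)  # "additional data"

    def position(self) -> Tuple[float, float, float]:
        """Camera position: the translation column of the pose matrix."""
        return (self.pose[0][3], self.pose[1][3], self.pose[2][3])


# A keyframe captured 1.6 m up and 2 m along -z in device A's own frame.
kf = Keyframe(
    device_id="headset-A",
    timestamp=12.5,
    image=bytes(16),  # stand-in for real encoded image bytes
    pose=[[1.0, 0.0, 0.0, 0.0],
          [0.0, 1.0, 0.0, 1.6],
          [0.0, 0.0, 1.0, -2.0],
          [0.0, 0.0, 0.0, 1.0]],
    extra={"exposure_ms": 8.0},
)
print(kf.position())
```

Because the pose is only meaningful in the capturing device's frame, two headsets' keyframes cannot be compared directly until the relationship between their coordinate systems is worked out.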

The devices then exchange keyframes. Each device pairs the keyframes it receives with its own and uses them in calculations that generate additional map points. Anchor point coordinates are also shared between devices so that virtual objects are positioned consistently.
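
Sharing an anchor only helps if the receiving device can express it in its own coordinates. The summary doesn't describe how the relative transform between devices is computed, so this sketch simply assumes keyframe pairing has already produced a rigid transform (rotation `R`, translation `t`) from device A's frame to device B's, and applies it as p_B = R p_A + t. The function names and numeric values are hypothetical, and a y-up convention is assumed.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def yaw_rotation(theta: float) -> List[List[float]]:
    """3x3 rotation about the vertical y axis by theta radians (y-up assumed)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def to_other_frame(point: Vec3, rotation: Sequence[Sequence[float]],
                   translation: Vec3) -> Vec3:
    """Rigidly map a point from device A's frame into device B's: p_B = R p_A + t."""
    x, y, z = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


# Assume keyframe pairing produced this A-to-B transform: a 90-degree turn
# about the vertical axis plus a 2 m offset along x (hypothetical values).
R = yaw_rotation(math.pi / 2)
t = (2.0, 0.0, 0.0)

anchor_in_a = (1.0, 0.0, 0.0)   # virtual object placed by device A
anchor_in_b = to_other_frame(anchor_in_a, R, t)
print(anchor_in_b)   # approximately (2.0, 0.0, -1.0)
```

Once both devices agree on this transform, an object anchored by one headset renders at the same physical spot on the other, which is the consistency the patent is aiming for.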

The patent adds that the system could work in a decentralized fashion, with devices communicating directly rather than relying on an intermediary server to share the data. When an application such as a game shares positioning data between users, it typically relies on a central server to distribute that data between players. Apple instead proposes a more ad-hoc approach, with direct communication between devices for an AR mapping session.

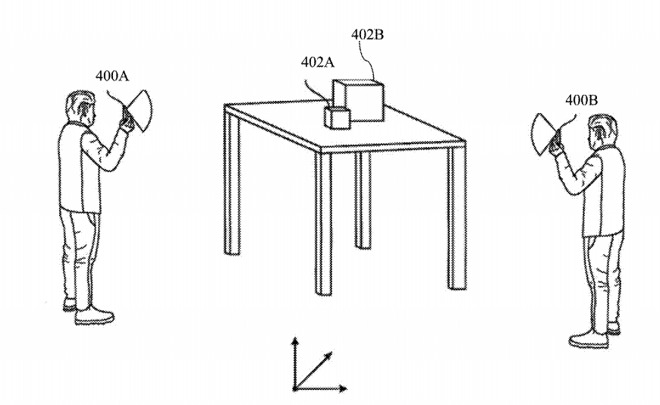

Different viewpoints could allow one device to share data on an object another cannot see.

The patent lists its inventors as Abdelhamid Dine, Kuen-Han Lin, and Oleg Naroditsky. It was filed on May 2, 2019.

Apple files numerous patent applications every week. While patent filings indicate areas of interest for Apple's research and development efforts, they do not guarantee that the concepts will appear in a future product or service.

While SLAM can be performed on mobile devices such as iPhones running ARKit apps, users are more likely to experience this concept in Apple Glass, Apple's long-rumored smart glasses with augmented reality capabilities.

Patent filings from 2016 indicate Apple wanted to take advantage of AR positioning in applications, including an iPhone-based "Augmented reality maps" feature that would overlay a digital map and data on a real-world camera view. A related filing from 2017 suggested an AR device could identify nearby objects for a user, show more information about them on a display, and fetch further data from another system.

Apple has also come up with ideas to stop VR users from bumping into real-world objects, and to use AR to give iPhone users more privacy.

Comments

Imagine the number-crunching involved when both individuals in hiding and fighter jets (at full throttle) are involved in the exchange and processing of the data.

If you continuously create a sort of 3D waveform map of the surroundings, you very quickly run into a resolution problem, as the bandwidth between the devices throttles the data that you're able to transfer, and therefore the quality of the data that you can collect.

But once you are able to identify independent blocks of things, you can just send the most relevant data about them, like a change of direction, movement, or, depending on the awareness of individual "blocks", a change of state (like a soldier down, or a change of weaponry). Then you've reduced the data that needs to be transferred to something almost like chess (where, in a low-bandwidth environment, a soldier might simply be alerted that an enemy combatant has moved to a new room in an abandoned building; data perhaps gathered by a passive IR scan from a previously dropped device and/or drone).

6+ years ago I wrote a lil bit about something relevant, the now-dead https://en.wikipedia.org/wiki/Tango_(platform) from Google; my take on it at the time: https://medium.com/@svanstrom/project-tango-isnt-de6e541a1333.

Edit: Just saw that I accidentally replied to an almost week old story, which in this context feels almost like raising the dead.