Apple's claims about M1 Mac speed 'shocking,' but 'extremely plausible'

Comparing Apple's claims for the Apple Silicon M1 chip with what Apple has already achieved with its A-series chips, Anandtech says it "believes" the quoted speed results.

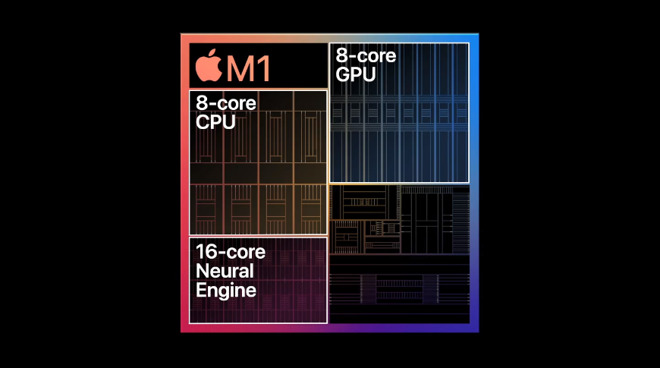

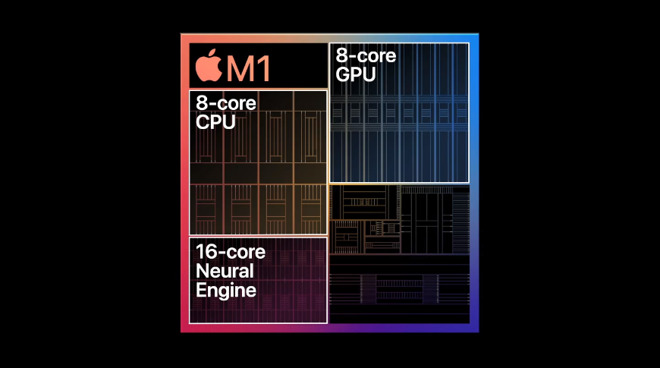

Apple's new M1 processor

During Apple's November 10 event launching new Macs with Apple Silicon M1 processors, the company made many claims about the chip's performance and low-power capabilities. Like AppleInsider, tech deep-dive site Anandtech is waiting to test the new machines, but concludes that it "certainly believes" Apple's claims.

"The new Apple Silicon is both shocking, but also very much expected," says Anandtech in a detailed technical review of what information is available. "Intel has stagnated itself out of the market, and has lost a major customer today. AMD has shown lots of progress lately, however it'll be incredibly hard to catch up to Apple's power efficiency."

"If Apple's performance trajectory continues at this pace," it continues, "the x86 performance crown might never be regained."

Anandtech's conclusion also draws on its own direct testing of Apple's A-series processors. "What we do know is that in the mobile space, Apple is absolutely leading the pack in terms of performance and power efficiency," it says.

Anandtech does note, however, that since it last tested the Apple A12Z, "we've seen more significant jumps from both AMD and Intel." It is also critical of Apple's choice of statistics and data shown in the presentation.

"Apple's comparison of random performance points is to be criticized," it says. "However the 10W measurement point where Apple claims 2.5x the performance does make some sense, as this is the nominal TDP [Thermal Design Power] of the chips used in the Intel-based MacBook Air."

Apple's M1 is the first of its Apple Silicon processors, and marks the beginning of the company's move away from using Intel in its Macs. Apple has started its transition to exclusively using its own processors by bringing out a new M1-based Mac mini, MacBook Air, and 13-inch MacBook Pro.
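Anandtech's remark about the 10W measurement point rests on simple arithmetic: when two chips are measured at the same power draw, the raw performance ratio and the performance-per-watt ratio are the same number, whereas comparing arbitrary points on each chip's curve lets the two ratios drift apart. The sketch below assumes "performance" is a single benchmark score; the scores and the second pair of power figures are hypothetical placeholders, not Apple's or Anandtech's data.

```python
def perf_per_watt(score: float, watts: float) -> float:
    """Benchmark score divided by the power draw it was measured at."""
    return score / watts


def compare(score_a: float, watts_a: float,
            score_b: float, watts_b: float) -> tuple[float, float]:
    """Return (raw speedup of A over B, perf-per-watt gain of A over B)."""
    speedup = score_a / score_b
    efficiency_gain = perf_per_watt(score_a, watts_a) / perf_per_watt(score_b, watts_b)
    return speedup, efficiency_gain


# Hypothetical scores. Both chips measured at the same 10 W point:
# the two ratios coincide.
print(compare(250.0, 10.0, 100.0, 10.0))  # (2.5, 2.5)

# Chips measured at different power points: the ratios diverge, so the
# headline figure depends on which points are picked.
speedup, gain = compare(250.0, 10.0, 200.0, 16.0)
print(f"{speedup:.2f}x faster, {gain:.2f}x perf/W")  # 1.25x faster, 2.00x perf/W
```

This is why a fixed power point such as the MacBook Air's nominal TDP gives a more meaningful comparison than mixing measurements taken at different wattages.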

Full chip performance, Intel vs Apple.

M1 should be up a bit higher.

Come on @nuvia_inc, pin the tail on the chart https://t.co/TuFycIQi1Y pic.twitter.com/2LNOFneZEc (@IanCutress)

Comments

I’m debating getting an M1 MacBook Pro, but during the coronavirus pandemic I’m pretty much living on my iPad Pro. My 2018 13” MacBook Pro has barely been turned on.

One thought I had...

If these chips are so amazing, why isn't Apple making servers with them and using them in data centres? That's where performance per watt really matters, isn't it?

What's the best-selling Windows computer it's meant to be so much better than? Some $200 education machine.

Wrong. The original iPad was $499 (nearly $600 in today's dollars), compared to $329 today. That's almost half the price for a device that is vastly more capable.

So UMA will work for Apple Silicon on workloads that are both CPU- and GPU-intensive only if enough RAM is provided. (Right now this is handled by giving, say, 16 GB of RAM to the CPU and 4 GB to the GPU, as is done in the 16-inch MacBook Pro.) Of course, Apple knows this. And it knows that users of, say, vector video editing or 3D animation (examples of heavy, simultaneous peak CPU/GPU workloads) are going to be willing to pay a lot for that RAM.
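The commenter's argument amounts to a memory budget: with discrete memory the CPU and GPU working sets sit in separate pools, while under UMA both must fit in one shared pool. A minimal sketch, assuming the 16 GB + 4 GB split from the comment and treating each workload as a single peak working-set size; the workload and the unified pool sizes are hypothetical, and the model ignores real-world effects such as paging, memory compression, and UMA's ability to avoid duplicate CPU/GPU copies.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    cpu_gb: float  # hypothetical peak CPU-side working set
    gpu_gb: float  # hypothetical peak GPU-side working set


def fits_discrete(w: Workload, system_ram_gb: float, vram_gb: float) -> bool:
    """Discrete memory: each side must fit in its own pool."""
    return w.cpu_gb <= system_ram_gb and w.gpu_gb <= vram_gb


def fits_unified(w: Workload, unified_gb: float) -> bool:
    """UMA: CPU and GPU draw from one shared pool, so their needs add up."""
    return w.cpu_gb + w.gpu_gb <= unified_gb


# Hypothetical heavy simultaneous CPU/GPU job.
job = Workload(cpu_gb=14.0, gpu_gb=4.0)
print(fits_discrete(job, system_ram_gb=16.0, vram_gb=4.0))  # True
print(fits_unified(job, unified_gb=16.0))                   # False: 18 GB needed
print(fits_unified(job, unified_gb=32.0))                   # True
```

Under these assumptions, the same job that fits a 16 GB + 4 GB discrete split needs a unified pool larger than 16 GB, which is the RAM the comment expects professional users to pay for.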

Sorry, SGI implemented UMA with their O2 workstation (circa 1996), years before Nvidia’s founding.

So if you think that Intel makes more money selling CPUs to Apple than they make selling them to, say, HP just because Apple makes a ton more money than HP does then you are absolutely totally wrong. Intel charges HP the exact same amount for those same Core i3, i7, i9 and Xeon CPUs that they charge Apple. As a result, they make more money off HP because HP sells way more computers than Apple does, even if they make much less money than Apple does in the process.
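The comment's reasoning is that a supplier's revenue from a customer is units bought times unit price, so the customer's own profitability does not enter into it. A minimal sketch under the comment's assumption of one flat CPU price for every buyer; the price, unit counts, and margins are hypothetical and do not describe Intel, Apple, or HP.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    units_bought: int     # hypothetical CPUs bought per year
    profit_margin: float  # customer's own margin; deliberately unused below


def supplier_revenue(customer: Customer, unit_price: float) -> float:
    """Supplier revenue = units x price; customer profitability plays no part."""
    return customer.units_bought * unit_price


UNIT_PRICE = 300.0  # hypothetical flat price charged to every customer

# Hypothetical customers: one high-margin/low-volume, one low-margin/high-volume.
premium = Customer("premium vendor", units_bought=20_000_000, profit_margin=0.25)
volume = Customer("volume vendor", units_bought=60_000_000, profit_margin=0.05)

for c in (premium, volume):
    print(c.name, supplier_revenue(c, UNIT_PRICE))
```

With equal pricing, the high-volume, low-margin customer generates three times the supplier revenue here, purely because it buys three times as many chips.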

Finally, of course Apple's 5nm M1 is going to outperform Intel's 14nm Core i5. But what happens when Intel's Core i5 is also 5nm, in about 3 years (if they hire TSMC to make the chips) or 5 years (if they make the chips themselves)? Then we will see whose performance comes out on top. Apple will have some advantages, namely the inherent efficiency of RISC vs CISC, as well as Apple's strategy of maximizing performance from each core as opposed to Intel's (and everyone else's) strategy of maximizing cores. But Intel also has a performance attribute of its own: the densest process at each node name in the industry, meaning that a 10nm Intel design is the equivalent of a 7nm Apple one. So when Intel does get to 5nm, it will be the equivalent of a 3nm Apple design. So, we shall see ...

I wonder if Apple will adopt this approach for its smartphones and tablets. Right now, if I am correct, it is still buying mobile RAM modules for phones and tablets from Samsung. Building the RAM into the SoCs would increase performance and save money (although perhaps at the cost of increased power consumption and heat generation).

So no, they likely weren’t talking about some $200 educational machine.