MacBook Air with M1 chip outperforms 16-inch MacBook Pro in benchmark testing

With Apple's first Apple silicon Macs due to arrive in the coming days, early benchmark testing on Wednesday reveals the company's new M1 chip outperforms its top Intel-based machines.

A Geekbench test result from a "MacBookAir10,1," the designation of Apple's just-announced MacBook Air with M1 chip, reveals a single-core score of 1687 and a multi-core score of 7433. The 8-core processor was clocked at 3.2 GHz.
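As a rough illustration, the two reported scores can be turned into a multi-core scaling figure. This is a back-of-the-envelope sketch, not part of Geekbench's own reporting: the variable names are hypothetical, and the calculation assumes all eight cores are counted equally, even though Apple describes the M1 as pairing four performance cores with four efficiency cores.

```python
# Scores as reported in the unverified Geekbench listing.
single_core = 1687
multi_core = 7433
cores = 8  # assumption: all eight cores weighted equally

# How many "single-core equivalents" the multi-core run delivered.
scaling = multi_core / single_core

# Fraction of ideal linear scaling across eight cores.
efficiency = scaling / cores

print(f"scaling: {scaling:.2f}x, efficiency: {efficiency:.0%}")
```

A figure well below 8x is not surprising for a chip that mixes faster and slower cores, so the ratio says little on its own about how well the design scales.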

By comparison, aggregate scores compiled by the benchmarking site show the M1 blowing past all mobile Macs, all current Mac mini configurations and a healthy portion of iMac specs. That includes the late-2019 MacBook Pro with Intel Core i9-9980HK processor clocked at 2.4 GHz.

According to the unverified results, the MacBook Air testbed was equipped with 8GB of RAM and ran macOS 11.0.1, the forthcoming next-generation operating system due for wide release on Thursday.

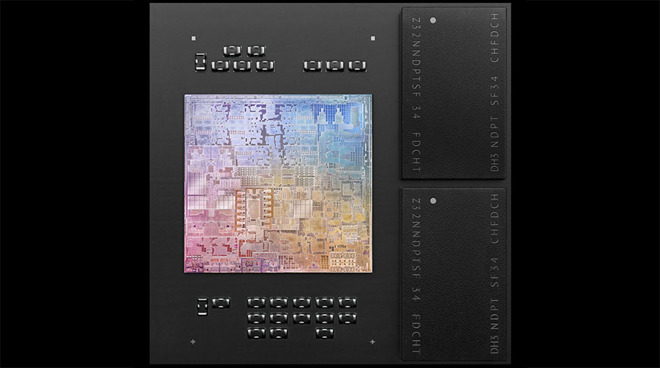

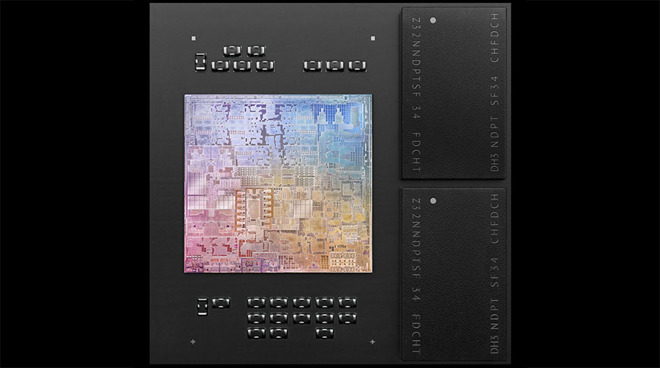

In introducing the M1 on Tuesday, Apple touted its first in-house designed Mac chip as a breakthrough for desktop processing. With leading performance per watt and extreme power efficiency, the silicon is expected to shake up the semiconductor market.

Apple has failed to produce exact specifications for its first ARM-based Mac chip design, but does note the silicon is up to two times faster than competing PC processors while sipping power. M1's integrated graphics module also offers up to two times the performance as compared to leading solutions, though comprehensive graphics benchmarks have yet to surface.

In addition to raw processing power, M1 incorporates custom Apple technology like a 16-core Neural Engine for machine learning computations, specialized image signal processor, and a unified memory subsystem. It remains to be seen whether the latter will prove a detriment to typically RAM-intensive operations.

The MacBook Air, 13-inch MacBook Pro and Mac mini are the first machines to benefit from the M1. Orders for the new hardware went live yesterday, with shipments due to arrive on Friday.

Comments

I assume it’s just the long-term performance.

But while on my way to the corner I will protest:

Apple did not reach this performance with the 4 and 6 core iPhone and iPad chips as people were claiming previously. Apple only reached this performance with an octacore chip that was specifically designed for use in personal computers - not mobile devices - and that requires more cores, draws more power and dissipates more heat. We have always known that this was possible, as modern (meaning an ARM Holdings design base and not the Sun Sparc and other early RISC servers that go back to the 1980s) Linux-based ARM workstations and servers have existed since at least 2011 (the year after the A4 was released). Ubuntu has had official ARM releases since 2012, and HP - the venerable Wintel manufacturer - has been selling them to data centers since 2014.

So I was absolutely right about Apple not being able to build a MacBook Pro or iMac with a 6 core chip that had 128KB/8MB caches (the M1 is octacore with 192KB/12MB caches). As lots of people on this site and elsewhere were indeed claiming that the 4 and 6 core low power/low heat iPhone chips could absolutely be put in a MacBook Pro and work as well or better ... yeah those people were as wrong as I was and even more so.

Now in the corner of shame I go, sucking my thumb in the process. But you folks who claimed that this would have been possible with the iPhone chips need to go to corners of your own.

PS: let me know when Cloudguy has finished shifting goal posts will ya?

-iKnockoff morons.

When has Apple lied about performance? Their track record shows Apple under-promises and over-delivers every year.

"Only benchmarks matter!"

-iKnockoff Morons in 2014

"Well let's wait and see what real world performance is!"

-iKnockoff morons in 2020

(pre-Apple Silicon launch.)

Note also that main memory requests haven’t been for a single byte or even 8 bytes at a time for a very long time: all main memory access is done in chunks at least the size of a CPU cache line. I don’t know what the M1 uses, but for at least the last 20 years the smallest cache line has been 32 bytes. It’s done this way because the electrical signaling overhead takes a meaningful amount of time, and dynamic RAM also has other costs; the net result is that a sequential chunk is usually far more efficient to access than 8 bytes or less. The latency of a main memory access is many, many CPU cycles, as main memory runs at a fraction of the speed of the CPU’s L1 data and instruction caches, which in turn tend to be slower than register accesses.
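To make the cache-line point concrete, here is a minimal sketch that counts how many distinct cache lines a set of 8-byte reads would touch. The function name and the 64-byte default are assumptions for illustration only (the M1's actual line size is not stated here), and the model ignores prefetching, alignment surprises and everything else a real memory system does.

```python
def cache_lines_touched(addresses, line_size=64):
    """Count the distinct cache lines covering the given byte addresses.

    line_size is a hypothetical value chosen for illustration.
    Each address is assumed to be an aligned 8-byte read, so it
    falls entirely within one line.
    """
    return len({addr // line_size for addr in addresses})


# 64 reads of 8 bytes each, packed back to back.
sequential = [i * 8 for i in range(64)]

# 64 reads of 8 bytes each, spaced 4096 bytes apart.
strided = [i * 4096 for i in range(64)]

print(cache_lines_touched(sequential))  # 8 lines fetched
print(cache_lines_touched(strided))     # 64 lines fetched
```

Both patterns read the same 512 bytes of useful data, but under this simple model the scattered version pulls in eight times as many lines from main memory.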

By combining GPU cores, CPU cores and huge L1/L2 caches along with L3, and by minimizing protocol overhead and wire time between CPU and GPU (assuming they’re not using buses like PCIe between them), they can make the negotiation between the various SoC function areas very fast, far faster than if it were spread out on a motherboard. Despite all that, it’s still unlikely that every part that needs memory access can get as much of it at the same time as it would take to keep them all running at maximum performance.

The M1 is basically the upcoming iPad Pro chip. Apple will be shipping a 3 GHz version of it (as opposed to 3.2 GHz) in the 2021 iPad Pros that are likely to ship in the coming spring. The other differences are that it will have a USB-C-only controller chip as opposed to a TB3/USB4 controller chip, won't have the 16 GB RAM option, probably won't have the 2 TB storage option, but will have a cellular chip option.

For whatever product timing reasons, Apple decided to ship the ASi Macs first instead of the iPad Pros.

But as for right now, there are already plenty of fanless Windows 10 - and I mean real Windows, not Windows on ARM that tries and fails to emulate x86! - laptops out there. Consider the Acer Switch 7: 16 GB of RAM and Intel i7 processor. There are also a couple of Dell XPS fanless laptops and a couple of Asus ones in addition to more Acer ones.

Get this: folks are kicking around the idea that the new Intel Tiger Lake CPUs with integrated Iris XE graphics will allow fanless gaming laptops to become a thing (because Tiger Lake is Intel's low heat/low power design and Iris XE GPU - which is integrated in all Tiger Lake Core i5 and higher chips - is supposed to provide gaming performance on the caliber of the Nvidia MX350).

So seriously, you guys need to pay attention to the wider tech world more. If you are thinking that Apple Silicon is going to result in these magical devices that the rest of the tech world can't comprehend let alone compete with that is going to result in Apple quadrupling or more its market share and influence, prepare to be sadly mistaken. The tech media might not know this - as Apple devices are all that they use and as a result truly cover - but actual consumers do.

SJ on the other hand would’ve brought back the burning Intel scientist and nuked that bridge

Apple pioneered a fanless laptop years ago with the 12” MacBook. But being based on Intel, it had mediocre performance because it only had passive cooling.

The difference is that this MacBook Air is not only fanless but still outperforms those Windows laptops that have fans. That is the difference a modern chip design makes.

As for ChromeOS, well I’ve yet to even see one in real life. But then I only work in the IT Dept of a technology company...

Perhaps we’ll see a 2X difference there in GPU speed, with perhaps only a CPU of 12 cores vs 8 or so.

The ARM design, just like the other RISC systems you deride, also dates back to the 1980’s. I saw a demo of the very first desktop that used an ARM chip, the Acorn Archimedes, in a consultancy in Cambridge, U.K. - where it was developed - in 1989. Even then, it blew away everything else on the market.

Sadly its technical and performance brilliance wasn’t enough to withstand the WinTel monopoly steamroller.

BTW: Geekbench 5 was just updated to run Apple Silicon Macs. It just showed up as an update in the App Store so Primate Labs has it available to run natively.

But will it be faster? Or is that supposed to mean that x86 will lead again? Hmm...

You know, for five years I only saw one side get their faces relentlessly slapped, yet they rarely learned anything. Guess they need more.

Also worth noting: Apple doesn’t pick the Y just for “fanless.”

Most users don't care whether it uses ARM, Intel, AMD, that architecture, this technology, etc. They care only that they can actually use it.

It does not matter that Apple made any technological advancement or anything. If people can buy it at a reasonable price and use it in everyday life, that's the end of the story. The rest is just nerd chat.

I have a 2015 15-inch MacBook Pro and a 2018 iMac for work use.

I also just built a custom PC with a 10th-gen Core i7, 64GB of 3200 RAM, an NVMe drive, and a 3080.

That PC blue screened on me twice in a month - unrecoverable, and I needed to reformat the drive. I've never seen my Mac crash at that level in my 10 years on this platform.

If I could play a game at 4K 144Hz on my iMac I would not even bother to touch a PC again. I bought the PC because Apple cannot do that. I don't care if there is integrated Intel graphics or Apple Silicon or an nVidia 9090 or whatever inside; I would not even see it.

You're also wrong about where Apple's A-series chips have landed when it came to performance and performance-per-watt over the years, how that gap was closing as even iPhones several years old were easily besting Intel chips used in traditional PCs.

The interesting thing Apple has done by developing the M1 (and future chips) is they’ve very much achieved Steve Jobs’ desire of making the computer very much that of an appliance: most users can’t be bothered to know details of their microwave, they just want it to do their bidding in a very repeatable manner to where they use it on autopilot. It’s just a means to an end. That’s something a lot of us computer geeks need to keep in mind: it’s a mind appliance, and all is great if it doesn’t get in the way of achieving an end. People think more about things that cause them issues in achieving their goals than those that don’t.

The ideal Apple product for someone using that as a guide is something that requires minimal concern about feeding the beast: the computer should be something that gets out of their way, it just works. Once they start having to think too much about getting their goals accomplished, that’s a failure in hardware and/or software for that user.

Thus, we have the curious contrast that’s the ideal user buying/using experience: initial excitement to be able to do new things (or old things better in some way) and desirable boredom regarding how the device works. What’s inside? If it does what they want, that’s a moot point, until their needs change.