Apple Glass may speed up AR by rendering only what's in the user's gaze

Analyzing what a user is looking at may help Apple Glass or another AR headset process video more efficiently, by using the user's gaze to prioritize which parts of a camera feed get analyzed.

Augmented reality systems rely on collecting data about the environment in order to provide a better mixed reality image to the user. In practice, this largely boils down to taking a live camera feed from near the user's eyes, digitally manipulating it, and then showing it to the user in its altered state, though it can also involve other data types such as depth mapping.

With many VR and AR systems using a headset tethered to a host computer, the need to bring in data about the environment can cause a problem, as only so much data can be funneled through that tether at a time. Improvements to cameras over the years, including higher resolutions and frame rates, mean there is even more data to analyze and process, potentially more than the tether can handle.
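To put rough numbers on the tether problem, the short Python sketch below works out the uncompressed data rate of a camera feed. The resolutions, frame rates and 24-bit color depth are hypothetical examples picked for illustration, not figures from Apple's patent, and real headsets would typically compress video before sending it.

```python
# Minimal sketch: raw (uncompressed) video data rate.
# The camera parameters used below are hypothetical examples,
# not figures taken from Apple's patent.


def raw_video_bitrate_gbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Uncompressed video data rate in gigabits per second."""
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps / 1e9


if __name__ == "__main__":
    # One 1080p camera at 60 fps vs. one 4K camera at 90 fps, 24-bit RGB.
    print(f"1080p60: {raw_video_bitrate_gbps(1920, 1080, 24, 60):.2f} Gbit/s")
    print(f"4K90:    {raw_video_bitrate_gbps(3840, 2160, 24, 90):.2f} Gbit/s")
```

Run as a script, it prints about 2.99 Gbit/s for the 1080p feed and 17.92 Gbit/s for the 4K one, a sixfold jump for a single camera before any depth or tracking data is added.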

There are also processing resources to consider: the more data there is, the more processing may be needed to create the AR image.

In a patent granted by the US Patent and Trademark Office on Tuesday, titled "Gaze direction-based adaptive pre-filtering of video data," Apple attempts to tackle this data ingress problem by narrowing down the video data that needs to be processed before the main processing takes place.

In short, the patent suggests the headset could capture an image of the environment and apply several filters to it, each covering a different area of the video frame. These filters, which determine the data that gets transmitted to the host for processing, are positioned based on the user's gaze, as well as typical AR and VR data points like head position and movement.
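Positioning a filter based on gaze means turning an eye-tracking reading into a point on the camera frame. The Python sketch below shows one common way to do that, a simple pinhole-camera projection. It assumes, hypothetically, that the eye tracker reports gaze as yaw and pitch angles already expressed in the outward camera's coordinate frame; the function name and parameters are illustrative, and a real headset would also need eye-to-camera calibration and head-pose compensation.

```python
import math


# Hypothetical sketch: project a gaze direction onto the camera frame with a
# pinhole model. Assumes gaze arrives as yaw/pitch angles in the camera's own
# coordinate frame; calibration and head-pose handling are left out.
def gaze_to_pixel(yaw_deg, pitch_deg, width, height, hfov_deg):
    # Focal length in pixels, derived from the horizontal field of view.
    f = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    cx, cy = width / 2, height / 2
    x = cx + f * math.tan(math.radians(yaw_deg))
    # Positive pitch means looking up, while image rows grow downward.
    y = cy - f * math.tan(math.radians(pitch_deg))
    # Clamp so the filter centre always lands inside the frame.
    x = min(max(x, 0), width - 1)
    y = min(max(y, 0), height - 1)
    return round(x), round(y)
```

Looking straight ahead maps to the center of the frame, so `gaze_to_pixel(0, 0, 1920, 1080, 90)` returns `(960, 540)`; glancing up moves the point toward the top rows.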

The filtered data layers are sent to the host, either over a tether or wirelessly, where they are processed and fed back to the headset for display. The loop then repeats.

The logic behind this is that not all of the data picked up by a camera is necessarily needed to process what the user actually sees. While the user may be facing one direction, their eyes may be pointed off to one side, making full rendering of content on the other side of their view practically pointless.

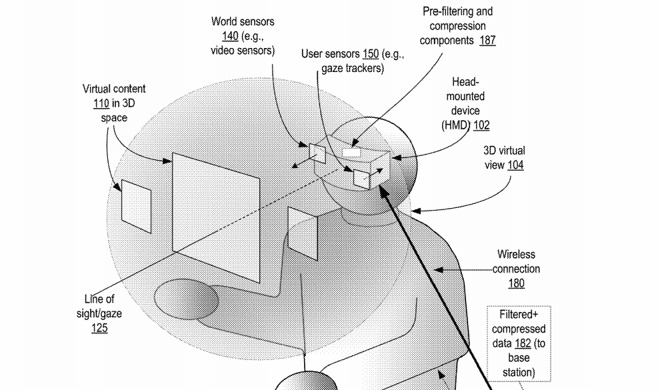

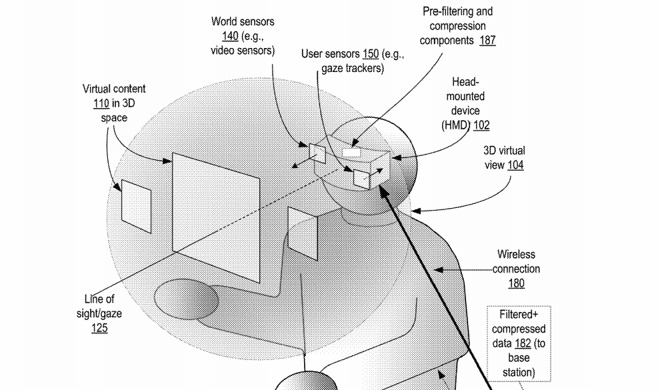

Apple's illustration of a head-mounted display that can monitor the user's gaze and the local environment.

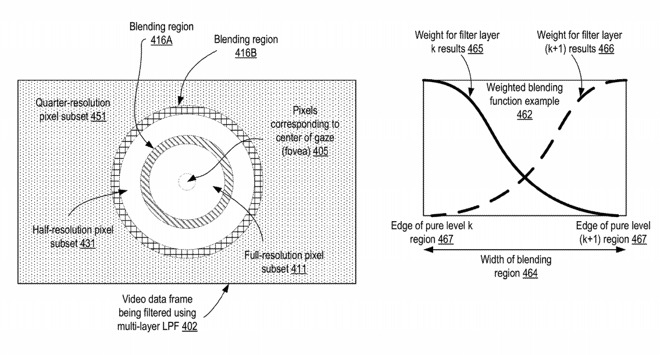

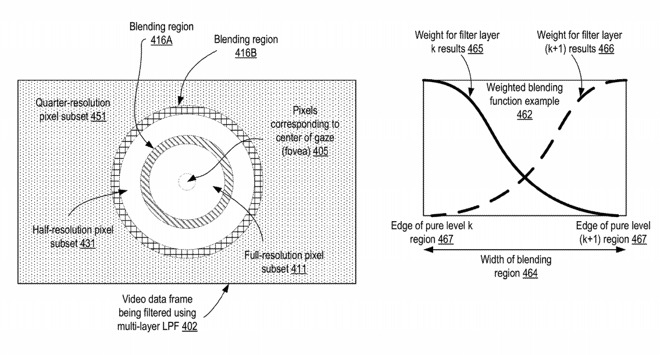

Apple's system detects the user's gaze, then applies that data point to a frame of image data. Multiple subsets of the data can define regions of various shapes and sizes to prioritize: the area the user is actively looking at falls under the main subset, while a larger secondary subset covers the surrounding area.
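As a rough illustration of the two nested subsets, the Python sketch below builds a small primary rectangle and a larger secondary rectangle around a gaze point, shifting each one so it stays inside the frame. Rectangles and the default region sizes are assumptions made for simplicity; the patent allows for various shapes and sizes, and the names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive

    def area(self) -> int:
        return max(0, self.x1 - self.x0) * max(0, self.y1 - self.y0)


def centered_rect(cx, cy, w, h, frame_w, frame_h) -> Rect:
    """Rectangle of size w x h centred on (cx, cy), shifted to stay inside the frame."""
    w, h = min(w, frame_w), min(h, frame_h)
    x0 = min(max(cx - w // 2, 0), frame_w - w)
    y0 = min(max(cy - h // 2, 0), frame_h - h)
    return Rect(x0, y0, x0 + w, y0 + h)


def gaze_subsets(gaze, frame_w, frame_h, primary=(480, 480), secondary=(1200, 900)):
    """Return (primary, secondary) regions around the gaze point.

    The default sizes are hypothetical placeholders, not values from the patent.
    """
    cx, cy = gaze
    return (centered_rect(cx, cy, *primary, frame_w, frame_h),
            centered_rect(cx, cy, *secondary, frame_w, frame_h))
```

For a gaze at the center of a 1920 x 1080 frame, the primary region spans pixels 720 to 1200 horizontally, while the secondary region stretches from 360 to 1560, wrapping the primary one with a band of periphery.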

The smaller, primary subset would be given processing priority because the user is actively looking at it. The wider secondary subset, being in the user's periphery, is less important and could require less processing. This secondary data may also be transmitted to the host at lower quality than the priority subset.
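To get a feel for how much this could save, the Python sketch below estimates the share of a frame's pixels that would actually be sent to the host. It assumes, hypothetically, that the primary region goes at full resolution, the surrounding periphery is downscaled by a fixed factor, and everything outside the secondary region is dropped; the patent does not specify these numbers or this exact scheme.

```python
def transmitted_fraction(frame_w, frame_h, primary_area, secondary_area, secondary_scale):
    """Fraction of the full-frame pixel count sent to the host.

    primary_area: pixels in the gaze region, sent at full resolution.
    secondary_area: pixels in the surrounding region *including* the primary
        region (the primary pixels are not sent twice).
    secondary_scale: linear downscale factor for the periphery, e.g. 2 means
        half width and half height, i.e. a quarter of the pixels.
    Pixels outside the secondary region are assumed not to be sent at all.
    """
    full = frame_w * frame_h
    periphery = secondary_area - primary_area
    sent = primary_area + periphery / (secondary_scale ** 2)
    return sent / full
```

With a hypothetical 1920 x 1080 frame, a 480 x 480 primary region, a 1200 x 900 secondary region and 2x downscaling of the periphery, `transmitted_fraction(1920, 1080, 480 * 480, 1200 * 900, 2)` comes to about 0.21, meaning roughly a fifth of the raw pixels would cross the tether.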

When rendering the final image for the user, further filters can be applied to join the data together as unobtrusively as possible. In effect, the system would blur the lines between the primary high-quality subset, the lower-quality peripheral subset, and the rest of the camera feed it is overlaying in the AR app.
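One simple way to hide the seam between quality levels is a feathered blend, where a weight fades smoothly from the high-quality layer to the low-quality one as distance from the gaze point grows. The pure-Python sketch below does this for two grayscale images with a linear ramp. It is a swapped-in, generic technique used for illustration, not the specific filter Apple describes, and it blends only two layers; the same ramp could be repeated between the periphery and the untouched camera feed.

```python
import math


def blend_weight(px, py, gaze, inner_r, outer_r):
    """1.0 within inner_r of the gaze point, 0.0 beyond outer_r, linear ramp in between."""
    d = math.dist((px, py), gaze)
    if d <= inner_r:
        return 1.0
    if d >= outer_r:
        return 0.0
    return (outer_r - d) / (outer_r - inner_r)


def composite(high, low, gaze, inner_r, outer_r):
    """Blend two same-sized grayscale images (lists of rows) around the gaze point."""
    out = []
    for y, (hrow, lrow) in enumerate(zip(high, low)):
        row = []
        for x, (h, l) in enumerate(zip(hrow, lrow)):
            w = blend_weight(x, y, gaze, inner_r, outer_r)
            # Weighted mix: pure high-quality at the centre, pure low-quality far out.
            row.append(w * h + (1 - w) * l)
        out.append(row)
    return out
```

Blending a row of 100s (high quality) with a row of 0s (low quality) around a gaze point at the left edge gives `[100.0, 100.0, 50.0, 0.0, 0.0]` with an inner radius of 1 and an outer radius of 3, a gradual fade rather than a hard edge.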

The center of the user's gaze will be rendered fully, with lower-quality renders further away.

The subsets don't necessarily have to be identified in one go. Apple suggests that the primary subset could be created after the cameras generate the first frame of visual data, while the secondary subset areas could be determined during the creation of the second frame.

Furthermore, gaze detection data associated with the second frame could inform the secondary subset regions in the first frame for processing. This could be useful when a user's eyes are moving, as it could soften unwanted effects caused by the viewpoint changing and the system having to catch up and place data in the user's field of view.
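One possible reading of using later gaze data for an earlier frame is to widen that frame's secondary region so it covers both where the eye was and where the next gaze sample says it is heading. The Python sketch below does exactly that by taking the bounding box of two secondary regions. This interpretation, the function names and the region size are assumptions for illustration only; the patent text summarized here does not spell out how the regions are combined.

```python
def box_around(cx, cy, w, h, frame_w, frame_h):
    """(x0, y0, x1, y1) box of size w x h centred on (cx, cy), kept inside the frame.

    Assumes w <= frame_w and h <= frame_h.
    """
    x0 = min(max(cx - w // 2, 0), frame_w - w)
    y0 = min(max(cy - h // 2, 0), frame_h - h)
    return (x0, y0, x0 + w, y0 + h)


def secondary_for_frame1(gaze1, gaze2, size, frame_w, frame_h):
    """Hypothetical: widen frame 1's secondary region so it covers the frame-1
    gaze point and the frame-2 gaze point (where the eye is moving to)."""
    a = box_around(*gaze1, *size, frame_w, frame_h)
    b = box_around(*gaze2, *size, frame_w, frame_h)
    # Bounding box of both regions.
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))
```

If the eye holds still, the region is unchanged; if it moves 200 pixels to the right between frames, the first frame's secondary region stretches 200 pixels wider in that direction, so the data is already there when the view catches up.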

The patent lists its inventors as Can Jin, Nicholas Pierre Marie Frederic Bonnier, and Hao Pan. It was originally filed on July 19, 2018.

Apple files numerous patent applications on a weekly basis, but while a filing indicates areas of interest for Apple's research and development efforts, it does not guarantee that the ideas will be used in a future product or service.

Apple has been rumored to be working on some form of AR or VR headset for quite a few years, with the bulk of more recent speculation surrounding what is known as "Apple Glass." It is thought that the device could initially take the form of an AR headset, possibly followed by smart glasses later on, with rumors hinting at glasses similar in size to typical spectacles.

Naturally, Apple has filed many patent applications relating to the field, but one that is very similar in core concept is the "Foveated Display" application from June 2019. In it, Apple suggested a way to improve the processing and rendering performance of a headset display by limiting the amount of data being processed.

Rather than limiting the data reaching the host device, that filing is all about getting the data to the display. Similar to the data subsets, Apple suggests the display could use two streams of data, one of high-resolution imagery and one of low-resolution imagery.

Using gaze detection, the system would place high-resolution imagery only where the user is looking, with lower-resolution data for the surrounding areas. This leaves the display less data to deal with on each refresh, which can allow higher refresh rates to be used.
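The refresh-rate benefit can be sketched with simple arithmetic. The Python example below compares the bits needed per refresh for a full-resolution panel against a hypothetical foveated scheme: a full-resolution inset where the user looks, plus a low-resolution stream covering the whole panel. The panel size, inset size, downscale factor and link bandwidth are all assumptions for illustration, and in practice link bandwidth is only one of several limits on refresh rate.

```python
def full_bits(panel_w, panel_h, bpp):
    """Bits per refresh when every pixel is sent at full resolution."""
    return panel_w * panel_h * bpp


def foveated_bits(panel_w, panel_h, bpp, inset_w, inset_h, low_scale):
    """Bits per refresh for a full-res inset plus a low-res stream over the whole panel.

    low_scale is a hypothetical linear downscale factor for the low-res stream.
    """
    high = inset_w * inset_h * bpp
    low = (panel_w // low_scale) * (panel_h // low_scale) * bpp
    return high + low


def max_refresh_hz(link_gbps, bits_per_refresh):
    """Upper bound on refresh rate if link bandwidth were the only constraint."""
    return link_gbps * 1e9 / bits_per_refresh
```

For a hypothetical 3840 x 2160 panel at 24 bits per pixel, a 960 x 960 inset and a 4x downscaled background need 34,560,000 bits per refresh versus 199,065,600 for the full panel. Over an assumed 18 Gbit/s link, that raises the bandwidth ceiling from about 90 Hz to over 500 Hz.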