Many App Store 'nutrition labels' have false information, report says

An investigation into the accuracy of privacy disclosures in Apple's App Store "nutrition labels" has found that a significant number are simply false.

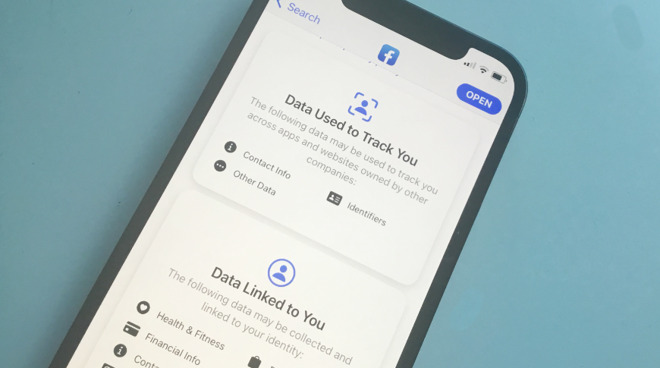

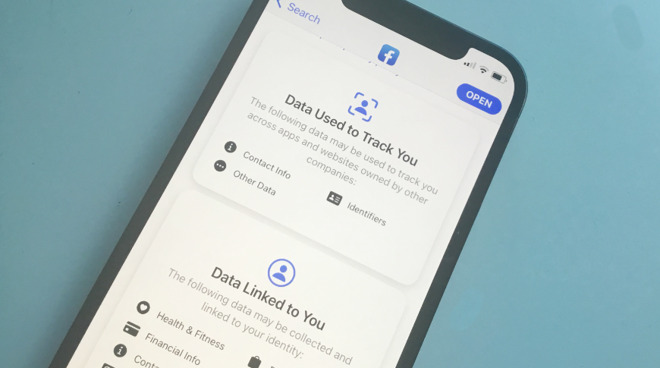

The new App Store privacy labels are prominent, but only after you've scrolled far down an app's listing

Apple made its privacy notices, also known as "nutrition labels," mandatory for any new or updated iOS 14 app in the App Store from December 8, 2020. As reported by AppleInsider at the time, however, Apple appeared to be entirely dependent on app developers both complying and telling the truth.

Now, according to the Washington Post, a survey of app privacy notices has shown that "many" are false.

The Washington Post does not say how many apps were checked, but claims that "about 1 in 3" of those tried were falsely reporting that they collect no data. These include the game Match 3D, social network Rumble, and PBS Kids Video.

All three have reportedly now made some changes, but during the Washington Post "spot check," each was allegedly falsely claiming to track no data. So was a de-stressing app named Satisfying Slime Simulator, which was reportedly sending information to Facebook, Google and others.

"Apple conducts routine and ongoing audits of the information provided and we work with developers to correct any inaccuracies," an Apple spokesperson told the Washington Post. "Apps that fail to disclose privacy information accurately may have future app updates rejected, or in some cases, be removed from the App Store entirely if they don't come into compliance."

The apps were reportedly tested in part in conjunction with a former National Security Agency researcher. The Satisfying Slime Simulator was sending Facebook, Google and GameAnalytics details of the user's iPhone IDFA, battery level, free storage space, volume setting, and general location.

Apple's forthcoming update to iOS 14 will introduce a new App Tracking Transparency feature. Apps still using IDFA will require a user's specific permission to carry on.

Advertisers believe that most users, on being prompted to allow tracking, will choose to say no. Apple will offer advertisers an alternative framework, however, which it claims gives advertisers useful information, without compromising the user's privacy.

Google plans to adopt this new, more privacy-minded SKAdNetwork framework. Facebook continues to protest against the change, and may even take Apple to court over it.

As previously reported by AppleInsider, a number of major app developers have yet to comply with Apple's requirement for privacy or nutrition labels. Until they do so, Apple will not allow any new apps, nor updates to existing ones.

At present, it remains true that Apple is dependent on developers' honesty, but the system is also new. Over time, however, any developer who wants to have an updated app on the App Store will have to provide privacy information. As the App Store policy becomes the norm, and especially when IDFA is made opt-in, the accuracy of the nutrition labels will only increase.

Comments

Folks, it is all just smoke and mirrors. There is no real security on the App Store. As long as an app has access to your information and the internet, it is not secure. From what I can tell as a developer, all of Apple's security features are really about protecting Apple, not their customers. It is also all about marketing. If you feel like you are being protected, you will buy more Apple products. Even so, Apple is at least making a show of being secure which is something no other company (Google, Facebook, Microsoft) is even attempting to do.

Not possible unless Apple can install software on the developer's servers to monitor what user data is passed onto 3rd parties from there. Apple can only look at the app code and see what it is doing, and even then it is extremely difficult to follow the path any user data takes thru the app. Monitoring network access and transmission is trivial, but knowing exactly what data is being sent is not, especially with an automated system.

Policy, not policing, is going to be a more effective first step. As much as these developers may not want to be transparent about their data collecting and monetization practices, I'm sure most would rather be upfront than be caught screwing their users and outright lose the user's trust, not to mention being held liable for being dishonest and sued.

From the article, it appears that the apps were forwarding information directly to "Facebook, Google and others..." Do you suppose this was the case? If so, then some sort of automated testing may work to at least catch this type of direct data forwarding.

By the logic of some here, you should not lock your house, because hey, one can enter if they really want. I applaud Apple for taking these steps, and as always it will take time to perfect, but in this day and age instant everything is the motto. But hey, armchair comments are so easy and seem so valuable, though the ones that make them never seem to think this completely through. Privacy and security take time and are a never-ending cat and mouse race ...

More information on this would be appreciated - has Apple implied they will not monetize user data? I've long asked if this is why (almost) all roads lead to iCloud servers...

If one uses iCloud with things like subscription music, app store, auto tagged Photos, Contacts, HomePod, TV+, ApplePay, etc., might Apple potentially have more verified data on what is in one's data ecosystem and how it is used than anyone has on anyone? Does the above suggest Apple may 'monetize' our data in an 'anonymized' way? Why is Siri always defaulting to 'Learn from this App' (no global off as far as I can tell) for OEM and 3rd party app use...? Has the complexity and depth of so many potential data 'tentacles' made the burden of privacy for users (and/or their family, friends, clients and customers) increasingly challenging?

You have to scroll quite a ways down the WAPO article to find their methodology. That's actually pretty slick.

"To test if privacy labels were hiding the truth, I repeated part of an experiment I ran on my own iPhone in 2019. Software made by surveillance-fighting firm Disconnect called Privacy Pro forces your phone’s data to go through a local virtual private network that logs and blocks connections to trackers."

That's as clearly as it can be said.

You would think Apple would have scripts in place during the app approval process that would check for these things to verify if the nutrition label is accurate. Maybe they will start doing that with iOS 15 due to people not playing by the rules.

The apps that lied are either fraudulent or incompetent.

For Facebook, we know exactly which one they are, given how much they tell us they are the smartest people in the room.

I think we should be careful about developing a false sense of security around what Apple is doing. It's not that I don't trust Apple, I do think they will try to do the right thing, but I just think the whole system is leaky and no amount of "policing" from Apple is going to plug all the leaks. The so-called privacy nutrition labels are okay, but too opaque because they don't provide any visibility into the areas of concern already mentioned. I'd be more confident if we had an independent, consumer focused advocacy group that was involved in evaluating the actual privacy related performance and behaviors of individual apps and the developers behind them in a controlled setting.

Rather than just the opaque "nutrition" labels, I'd like to see a Privacy Reputation Grade assigned to software/product developers. This would be a grading system based not only on the assertions that the developers make up-front, i.e., the nutrition labels, but also the actual observed performance of the developer's products evaluated in a lab and tested by independent privacy advocacy groups, privacy in practice, e.g., actual leaks and breaches detected in the field, and finally, consumer generated and crowd sourced incidents caught by users. I'm not sure how the weighting would work, but it has to involve some level of independent testing and actual consumer feedback, not simply the up-front and unverified claims of the developers themselves.

We, as consumers, must be actively involved in the feedback loop. We cannot rely solely on Apple or the developers themselves. No matter how well meaning and backed by well intentioned efforts Apple, developers, and advocacy groups may put forth, we also bear some of the responsibility for privacy as well. We need to be looking out for ourselves and our fellow citizens and we need to call out bad actors. After all, it’s still up to me to do my part by closing and locking the front door on my physical "private space," so I expect that doing my part to secure my virtual "private space" should not be any different. Yes, it's still only part of the bigger picture, but it's a part that's on me to perform. Whatever Apple does will not relieve me from doing my part.

Self-regulation always works after all!