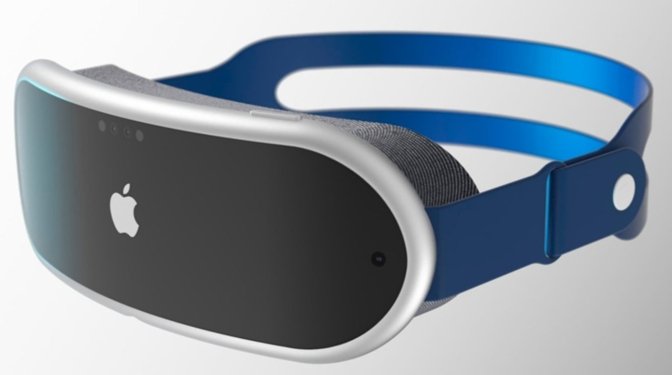

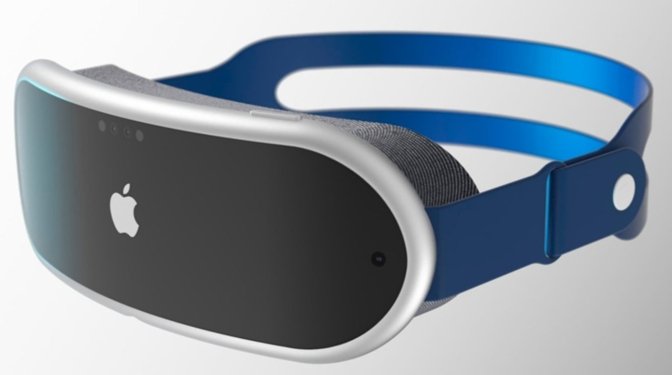

Apple's AR headset to feature eye tracking and possible iris recognition tech, Kuo says

Apple's widely rumored augmented reality headset is expected to sport eye tracking hardware as a means of user input, potentially eschewing the need for handheld controllers, according to analyst Ming-Chi Kuo.

In a note to investors on Friday, Kuo said the headset will use a specialized transmitter and receiver to detect eye movements, blinks and related physical information. The analyst believes eye tracking will soon be the most important human-machine interface technology for AR and VR wearables.

"Currently, users primarily operate the HMD (most of which are VR devices) using handheld controllers," Kuo writes. "The biggest challenge with this type of operation is that it does not provide a smooth user experience. We believe that if the HMD uses an eye-tracking system, there will be several advantages."

Apple's system will theoretically be able to use collected eye movement data to determine user interactions with a simulated AR environment. For example, images and onscreen content can be made to move in sync with a user's eyes as they scan a displayed external environment.

Eye tracking also serves as an intuitive mode of UI operation, as users can activate menus by blinking repeatedly or access information about a tangible object by staring at it for an extended period of time.
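
The two interactions Kuo describes, repeated blinks to open a menu and a sustained stare to pull up information, can be sketched as a small event detector. This is a minimal illustration, not Apple's design: the `GazeSample` type, the 1-second dwell threshold and the 0.8-second double-blink window are hypothetical values chosen only for the example.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

@dataclass
class GazeSample:
    t: float               # timestamp in seconds
    target: Optional[str]  # object the gaze ray hits, or None
    eyes_open: bool        # False while the eyelids are closed

# Hypothetical thresholds, not taken from any Apple spec.
DWELL_SECONDS = 1.0    # how long a "stare" must last
BLINK_WINDOW = 0.8     # window in which repeated blinks count together
BLINKS_FOR_MENU = 2

def detect_events(samples: Iterable[GazeSample]) -> List[Tuple[float, str]]:
    events: List[Tuple[float, str]] = []
    dwell_target: Optional[str] = None
    dwell_start = 0.0
    dwell_fired = False
    blink_times: List[float] = []
    was_open = True
    for s in samples:
        # A blink starts on the open -> closed transition.
        if was_open and not s.eyes_open:
            blink_times.append(s.t)
            # Keep only blinks that fall inside the sliding window.
            blink_times = [b for b in blink_times if s.t - b <= BLINK_WINDOW]
            if len(blink_times) >= BLINKS_FOR_MENU:
                events.append((s.t, "open_menu"))
                blink_times.clear()
        was_open = s.eyes_open
        if not s.eyes_open:
            continue  # gaze target is unreliable while the eyes are closed
        if s.target != dwell_target:
            # Gaze moved to a new object: restart the dwell timer.
            dwell_target, dwell_start, dwell_fired = s.target, s.t, False
        elif (dwell_target is not None and not dwell_fired
              and s.t - dwell_start >= DWELL_SECONDS):
            events.append((s.t, f"inspect:{dwell_target}"))
            dwell_fired = True  # fire once per continuous stare
    return events
```

The dwell threshold is what separates merely looking at an object from selecting it: set it too short and every glance triggers an action, too long and the interface feels sluggish.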

Further, foveated rendering technology can optimize the viewing area by monitoring the position of a user's eye. This would allow the system to render areas outside the user's immediate focus at lower resolution, thereby easing processing requirements.
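
To make the foveated-rendering idea concrete, the sketch below gives each screen tile a resolution scale based on its angular distance from the gaze point, then estimates how much pixel-shading work remains. The display density (`PIXELS_PER_DEGREE`) and the band boundaries are hypothetical numbers for illustration, not figures from Kuo or Apple.

```python
import math
from typing import Tuple

PIXELS_PER_DEGREE = 20.0  # hypothetical display density
# (max eccentricity in degrees, fraction of full resolution per axis); hypothetical bands
FOVEATION_BANDS = [(5.0, 1.0), (15.0, 0.5), (math.inf, 0.25)]

def resolution_scale(tile_center: Tuple[float, float], gaze: Tuple[float, float]) -> float:
    """Per-axis resolution scale for a tile, based on distance from the gaze point."""
    dx = tile_center[0] - gaze[0]
    dy = tile_center[1] - gaze[1]
    eccentricity_deg = math.hypot(dx, dy) / PIXELS_PER_DEGREE
    for max_deg, scale in FOVEATION_BANDS:
        if eccentricity_deg <= max_deg:
            return scale
    return FOVEATION_BANDS[-1][1]

def shaded_pixel_fraction(width: int, height: int, tile: int,
                          gaze: Tuple[float, float]) -> float:
    """Fraction of full-resolution shading work left after foveation.

    Assumes width and height are multiples of the tile size.
    """
    total = 0.0
    count = 0
    for y in range(0, height, tile):
        for x in range(0, width, tile):
            center = (x + tile / 2, y + tile / 2)
            s = resolution_scale(center, gaze)
            total += s * s  # scale applies per axis, so pixel count scales by s^2
            count += 1
    return total / count
```

With the gaze at the center of a 1280 by 1280 eye buffer, only the tiles near the gaze point are shaded at full resolution, so `shaded_pixel_fraction(1280, 1280, 64, (640, 640))` comes out well below 1.0. Moving the gaze point simply moves the full-resolution region.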

According to Kuo, the transmitter is a complex module that emits several wavelengths of invisible light. This light reflects off a user's eyeball and is detected by an accompanying receiver module. Eye movement is subsequently determined based on changes in the light's properties.
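
Kuo does not say how Apple turns the reflected light into a gaze direction. A widely used approach in infrared eye trackers is pupil-center/corneal-reflection (PCCR): the offset between the pupil center and the bright glint reflected off the cornea shifts as the eye rotates, and a per-user calibration maps that offset to a point on the display. The sketch below swaps in that technique with a simple affine calibration fitted by least squares; the function names and the affine model are illustrative assumptions, not Apple's method.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]

def pupil_glint_vector(pupil_center: Vec2, glint: Vec2) -> Vec2:
    """Offset of the pupil center from the corneal reflection (glint)."""
    return (pupil_center[0] - glint[0], pupil_center[1] - glint[1])

def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_affine(vectors: Sequence[Vec2], targets: Sequence[float]) -> List[float]:
    """Least-squares fit of target = c0 + c1*vx + c2*vy via the normal equations."""
    rows = [(1.0, vx, vy) for vx, vy in vectors]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
    return _solve3(ata, atb)

def calibrate(vectors: Sequence[Vec2], screen_points: Sequence[Vec2]):
    """Fit one affine map for screen x and one for screen y."""
    cx = fit_affine(vectors, [p[0] for p in screen_points])
    cy = fit_affine(vectors, [p[1] for p in screen_points])
    return cx, cy

def estimate_gaze(model, vector: Vec2) -> Vec2:
    """Map a pupil-glint vector to an estimated on-screen gaze point."""
    cx, cy = model
    vx, vy = vector
    return (cx[0] + cx[1] * vx + cx[2] * vy, cy[0] + cy[1] * vx + cy[2] * vy)
```

In practice the user looks at a handful of known calibration targets, the tracker records a pupil-glint vector for each, and `calibrate` fits the mapping. Real systems typically use higher-order polynomials or a 3D eye model, but the workflow is the same.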

Finally, Kuo says Apple might incorporate some form of iris recognition for biometric identification. The function could be used for user authentication and seamless Apple Pay transactions.

"We are still unsure if the Apple HMD can support iris recognition, but the hardware specifications suggest that the HMD's eye-tracking system can support this function," he writes.

Kuo's analysis of eye tracking technology reads like a summary of Apple patents filed over the past couple of years. The tech giant has applied for, and been granted, a number of patents related to eye tracking, gaze tracking and related technologies.

Last September, for example, a patent grant detailed a system by which an AR headset tracks eye movement via reflected light, while another explains the use of gaze tracking to assist in the display of mixed reality environments. Other IP filed over the years covers predictive foveated displays, dynamically altering image resolutions, selectively rendering content based on gaze, attention awareness and more.

Just today, Apple filed a clutch of patent applications for head-mounted device technology, one of which covers an efficient method of eye tracking using low-resolution images.
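
The filing is only summarized here, so the following does not reproduce its method. As a generic illustration of why low-resolution images can be enough, this sketch block-averages a hypothetical grayscale infrared eye image and locates the pupil as the centroid of dark pixels, the "dark pupil" approach many infrared trackers use. The image format, threshold and downsampling factor are assumptions.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # hypothetical grayscale rows, 0 = black, 255 = white

def downsample(img: Image, factor: int) -> Image:
    """Average non-overlapping factor x factor blocks (dimensions assumed divisible)."""
    h, w = len(img), len(img[0])
    out: Image = []
    for y in range(0, h, factor):
        row = []
        for x in range(0, w, factor):
            block = [img[yy][xx] for yy in range(y, y + factor)
                     for xx in range(x, x + factor)]
            row.append(sum(block) // len(block))
        out.append(row)
    return out

def pupil_center(img: Image, threshold: int = 50) -> Optional[Tuple[float, float]]:
    """Centroid (x, y) of pixels darker than threshold; None if there are none."""
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            if v < threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def to_full_res(point: Tuple[float, float], factor: int) -> Tuple[float, float]:
    """Map a low-resolution pixel coordinate back to full-resolution coordinates."""
    offset = (factor - 1) / 2  # center of the original block
    return (point[0] * factor + offset, point[1] * factor + offset)
```

Reducing a 16 by 16 image by a factor of 4 leaves one sixteenth as many pixels to scan, and `to_full_res` recovers an estimate in the original coordinates. The trade-off is precision: a coarser image gives a coarser pupil position.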

Kuo previously predicted Apple's first AR/MR headset to ship in 2022 for an estimated $1,000. That device is anticipated to be followed by "Apple Glass" in 2025 and, potentially, contact lenses after 2030.

Comments

Example: In a game, you typically do two things: (1) look around, and then (2) take action. e.g., LOOK at a bridge, and later WALK across the bridge. How will the system know which action you intend when your eyes focus on the bridge?

(This is not a criticism; it’s curiosity.)

Here we have Kuo, once again, packing more features in a future Apple device than would fit in a Tardis.