What you need to know: Apple's iCloud Photos and Messages child safety initiatives

In the time since Apple announced its child safety iCloud Photos image assessment and Messages notifications, there haven't been any changes, but there have been many clarifications from the company on the subject. Here's what you need to know, updated on August 15 with everything Apple has said since release.

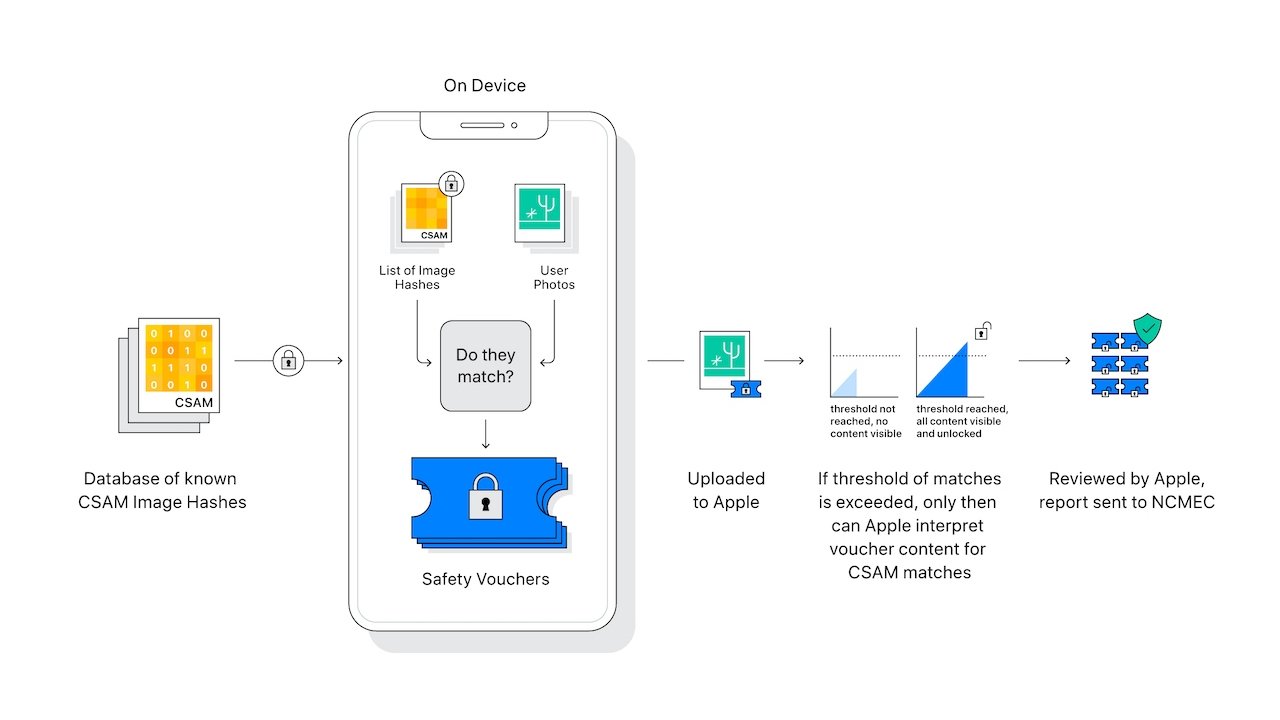

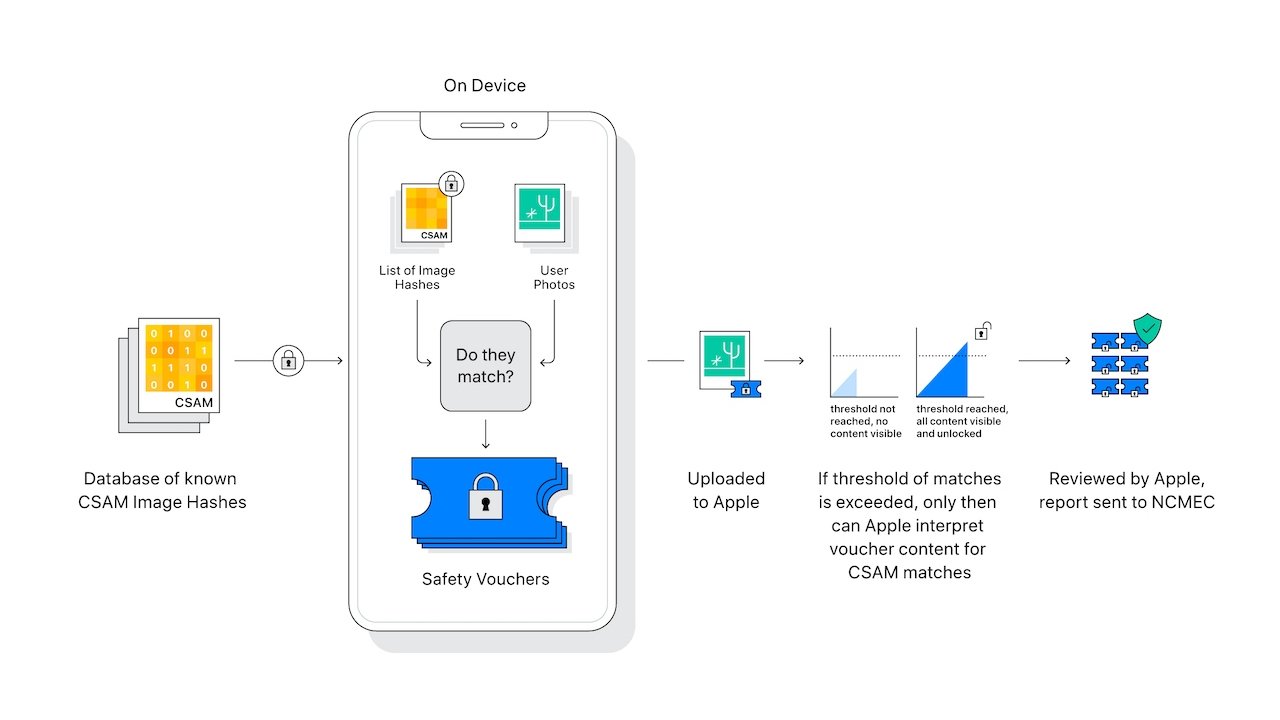

While privacy is a fundamental human right, any technology that curbs the spread of CSAM is also inherently good. Apple has managed to develop a system that works toward that latter goal without significantly jeopardizing the average person's privacy.

Slippery-slope concerns about the disappearance of online privacy are worth having. However, those conversations need to include all of the players on the field -- including those that much more clearly violate the privacy of their users on a daily basis.

Update 5:23 PM ET Friday, August 6 Clarified that Apple's one in a trillion false match rate is per account, not per image, and updated with embed of Friday afternoon's version of the Apple technical guide.

Update 4:32 PM ET Sunday, August 15 Updated with details of Apple's attempts to clarify the system to the public, including interviews with Craig Federighi and privacy chief Erik Neuenschwander.

Read on AppleInsider

Credit: Apple

Apple announced a new suite of tools on Thursday, meant to help protect children online and curb the spread of child sexual abuse material (CSAM). It included features in iMessage, Siri and Search, and a mechanism that scans iCloud Photos for known CSAM images.

Cybersecurity, online safety, and privacy experts had mixed reactions to the announcement. Users did, too. However, many of the arguments are clearly ignoring how widespread the practice of scanning image databases for CSAM actually is. They also ignore the fact that Apple is not abandoning its privacy-protecting technologies.

Here's what you should know.

Apple's privacy features

The company's slate of child protection features includes the aforementioned iCloud Photos scanning, as well as updated tools and resources in Siri and Search. It also includes a feature meant to flag inappropriate images sent via iMessage to or from minors.

As Apple noted in its announcement, all of the features were built with privacy in mind. Both the iMessage feature and the iCloud Photo scanning, for example, use on-device intelligence.

Additionally, the iCloud Photos "scanner" isn't actually scanning or analyzing the images on a user's iPhone. Instead, it's comparing the mathematical hashes of known CSAM to the hashes of images stored in iCloud. If a collection of known CSAM images is stored in iCloud, then the account is flagged and a report is sent to the National Center for Missing & Exploited Children (NCMEC).

Several elements of the system are designed to make false positives ridiculously rare. Apple says that the chance of a false positive is one in a trillion per account. That's because an account is flagged only once a collection of matches crosses a "threshold," which Apple initially declined to detail.
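To make the "collection" and "threshold" idea concrete, here is a minimal sketch of threshold-based hash matching. It is an illustration under loud assumptions, not Apple's implementation: SHA-256 stands in for the perceptual hash, the threshold of 3 is invented for the example, and the function names and sample data are hypothetical.

```python
import hashlib

# Hypothetical threshold, chosen only to keep the example small.
MATCH_THRESHOLD = 3


def image_hash(image_bytes: bytes) -> str:
    """Stand-in hash. SHA-256 is an assumption made so the sketch runs;
    it is not a perceptual hash and not what Apple uses."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(library: list[bytes], known_hashes: set[str]) -> int:
    """Count how many images in the library hash to a known entry."""
    return sum(1 for image in library if image_hash(image) in known_hashes)


def account_is_flagged(library: list[bytes], known_hashes: set[str],
                       threshold: int = MATCH_THRESHOLD) -> bool:
    """An account is flagged only when matches reach the threshold."""
    return count_matches(library, known_hashes) >= threshold


# Placeholder byte strings stand in for image files.
known = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}

one_match_library = [b"dog", b"garden", b"known-1"]
collection_library = [b"dog", b"known-1", b"known-2", b"known-3"]

print(account_is_flagged(one_match_library, known))   # a single match stays below the threshold
print(account_is_flagged(collection_library, known))  # a collection reaches the threshold
```

The point of the design is visible in the two sample libraries: the content of a photo is never inspected, and one stray match on its own does nothing.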

Additionally, the scanning only works on iCloud Photos. Images stored strictly on-device are not scanned, and they can't be examined if iCloud Photos is turned off.

The Messages system is even more privacy-preserving. It only applies to accounts belonging to children, and is opt-in, not opt-out. Furthermore, it doesn't generate any reports to external entities -- only the parents of the children are notified that an inappropriate message has been received or sent.

There are critical distinctions to be made between the iCloud Photos scanning and the iMessage feature. Basically, both are completely unrelated beyond the fact that they're meant to protect children.

- iCloud Photos assessment hashes images without examining them for context, compares those hashes to known CSAM collections, and generates a report for NCMEC, which maintains the hash collection.

- The child account iMessage feature uses on-device machine learning, does not compare images to CSAM databases, and doesn't send any reports to Apple -- only to the parental Family Sharing manager account.

iCloud library false positives - or "What if I'm falsely mathematically matched?"

Apple's system is designed to ensure that false positives are ridiculously rare. But, for whatever reason, humans are bad at risk assessment. As we've already noted, Apple says the odds of a false match are one-in-a-trillion on any given account. One-in-1,000,000,000,000.

In a human's lifetime, there is a 1-in-15,000 chance of getting hit by lightning, and that's not a general fear. The odds of getting struck by a meteor over a lifetime are about 1-in-250,000, and that is also not a daily fear. The odds of winning any given mega-jackpot lottery are worse than 1-in-150,000,000, and millions of dollars get spent on it every day across the US.

Apple's one-in-a-trillion per account is not what you'd call a big chance of somebody's life getting ruined by a false positive. And, Apple is not the judge, jury, and executioner, as some believe.

Should the material be spotted, it will be human-reviewed first. Then, and only then, will the potential match be handed over to law enforcement.

It is then up to law enforcement to obtain a warrant, as courts do not accept a hash match alone as sufficient.

At 1-in-1,000,000, a false unlock of your iPhone with Face ID is far more probable than a false match here. A bad Touch ID match is more probable still at 1-in-50,000, and again, neither of these is a big concern for users.

In short, your pictures of your kids in the bath, or your own nudes in iCloud are profoundly unlikely to generate a false match.

Messages monitoring - or "If I send a nude to my partner, will I get flagged?"

If you're an adult, you won't be flagged if you're sending nude images to a partner. Apple's iMessage mechanism only applies to accounts belonging to underage children, and is opt-in.

Even if it did flag an image sent between two consenting adults, it wouldn't mean much. The system, as mentioned earlier, doesn't notify or generate an external report. Instead, it uses on-device machine learning to detect a potentially sensitive photo and warn the user about it.

And, again, it's meant to only warn parents that underage users are sending the pictures.

What about pictures of my kids in the bath?

This concern stems from a misunderstanding of how Apple's iCloud Photos scanning works. It's not looking at individual images contextually to determine if a picture is CSAM. It's only comparing the image hashes of photos stored in iCloud against a database of known CSAM maintained by NCMEC.

In other words, unless the images of your kids are somehow hashed in NCMEC's database, then they won't be affected by the iCloud Photo scanning system. More than that, the system is only designed to catch collections of known CSAM.

So, even if a single image somehow managed to generate a false positive (an unlikely event, which we've already discussed), then the system still wouldn't flag your account.
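A rough calculation shows why requiring a collection matters so much. The sketch below computes the chance that at least `t` images in a library falsely match, treating each image as an independent trial. Everything numeric here is an assumption for illustration: the per-image false match rate of one in a million and the 10,000-photo library are hypothetical, and the article does not give a per-image rate. The threshold of 30 is the figure the article cites elsewhere.

```python
import math


def log_binomial_pmf(k: int, n: int, p: float) -> float:
    """Log of P(exactly k false matches among n images), binomial model."""
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log1p(-p))


def prob_at_least(t: int, n: int, p: float) -> float:
    """P(at least t false matches among n images).

    Terms are summed in log space so the huge binomial coefficients
    never overflow a float; vanishingly small terms underflow to 0.0.
    """
    return sum(math.exp(log_binomial_pmf(k, n, p)) for k in range(t, n + 1))


PER_IMAGE_RATE = 1e-6   # hypothetical per-image false match rate
LIBRARY_SIZE = 10_000   # hypothetical number of photos in the account
THRESHOLD = 30          # collection size cited in the article

single = prob_at_least(1, LIBRARY_SIZE, PER_IMAGE_RATE)
collection = prob_at_least(THRESHOLD, LIBRARY_SIZE, PER_IMAGE_RATE)

print(f"at least one false match:   {single:.3e}")
print(f"at least {THRESHOLD} false matches: {collection:.3e}")
```

Under these assumed inputs, a single false match somewhere in the library is merely unlikely, while thirty of them is smaller by many orders of magnitude. The independence assumption is a simplification, so treat the output as an intuition aid rather than a reproduction of Apple's figure.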

What if somebody sends me illegal material?

If somebody was really out to get you, it's more likely that they would break into your iCloud account because your password is "1234" or some other nonsense, and dump the materials in there. But, if it wasn't on your own network, it's defensible.

Again, the iCloud Photos system only applies to collections of images stored on Apple's cloud photo system. If someone sent you known CSAM via iMessage, then the system wouldn't apply unless it was somehow saved to your iCloud.

Could someone hack into your account and upload a bunch of known CSAM to your iCloud Photos? This is technically possible, but also highly unlikely if you're using good security practices. With a strong password and two-factor authentication, you'll be fine. If you've somehow managed to make enemies of a person or organization able to get past those security mechanisms, you likely have far greater concerns to worry about.

Also, Apple's system allows people to appeal if their accounts are flagged and closed for CSAM. If a nefarious entity did manage to get CSAM onto your iCloud account, there's always the possibility that you can set things straight.

Any planted material would also still have to be moved to your Photos library to get scanned, and you would need a collection of at least 30 images for the account to be flagged.

Valid privacy concerns

Even with those scenarios out of the way, many tech libertarians have taken issue with the Messages and iCloud Photo scanning mechanisms despite Apple's assurances of privacy. A lot of cryptography and security experts also have concerns.

For example, some experts worry that having this type of mechanism in place could make it easier for authoritarian governments to abuse it. Although Apple's system is only designed to detect CSAM, they worry that oppressive governments could compel Apple to rework it to detect dissent or anti-government messages.

There are valid arguments about "mission creep" with technologies such as this. Prominent digital rights nonprofit the Electronic Frontier Foundation, for example, notes that a similar system originally intended to detect CSAM has been repurposed to create a database of "terrorist" content.

There are also valid arguments raised about the Messages scanning system. Although messages are end-to-end encrypted, the on-device machine learning is technically scanning messages. In its current form, it can't be used to view the content of messages, and as mentioned earlier, no external reports are generated by the feature. However, the feature could generate a classifier that could theoretically be used for oppressive purposes. The EFF, for example, questioned whether the feature could be adapted to flag LGBTQ+ content in countries where homosexuality is illegal.

At the same time, assuming that these worst-case scenarios are the inevitable end goal is a slippery slope fallacy.

These are all good debates to have. However, leveling criticism only at Apple and its systems on the assumption that the worst case is coming is unfair and unrealistic. That's because pretty much every service on the internet has long done the same type of scanning that Apple is now doing for iCloud.

Apple's system is nothing new

A casual view of the Apple-related headlines might lead one to think that the company's child safety mechanisms are somehow unique. They're not.

As The Verge reported in 2014, Google has been scanning the inboxes of Gmail users for known CSAM since 2008. Some of those scans have even led to the arrest of individuals sending CSAM. Google also works to flag and remove CSAM from search results.

Microsoft originally developed the system that the NCMEC uses. The PhotoDNA system, donated by Microsoft, is used to scan image hashes and detect CSAM even if a picture has been altered. Facebook started using it in 2011, and Twitter adopted it in 2013. Dropbox uses it, and nearly every internet file repository does as well.

Apple, too, has said that it scans for CSAM. In 2019, it updated its privacy policy to state that it scans for "potentially illegal content, including child sexual exploitation material." A 2020 story from The Telegraph noted that Apple scanned iCloud for child abuse images.

Apple has found a middle ground

As with any Apple release, only the middle 75% of the argument is sane. The folks screaming that privacy is somehow being violated don't seem to be that concerned about the fact that Twitter, Microsoft, Google, and nearly every other photo sharing service have been using these hashes for a decade or more.

They also don't seem to care that Apple is gleaning zero information about the context of pictures from the hashing, or from the Messages alerts to parents. Your photo library with 2,500 pictures of your dog, 1,200 pictures of your garden, and 250 pictures of the ground because you hit the volume button accidentally while the camera app was open is still just as opaque to Apple as it has ever been. And if you're an adult, Apple won't flag, nor will it care, that you are sending pictures of your genitalia to another adult.

Arguments that this somehow levels the privacy playing field with other products depend very much on how you personally define privacy. Apple is still not using Siri to sell you products based on what you tell it, is still not looking at email metadata to serve you ads, and is not taking other actually privacy-violating measures. Arguments that Apple should do whatever it needs to do to protect society regardless of the cost are equally bland and nonsensical.

Apple appears to have been taken aback by the response. Even after the original press release and white paper discussing the system, it has fielded multiple press briefings and media interviews trying to refine the message. It was obvious to us that this was going to be inflammatory, and it should have been to Apple as well.

And Apple should have been proactive about it, not reactive.

It should be Apple's responsibility to deliver the information in a sane and digestible manner from the start for something as crucial and inflammatory as this has turned out to be. After-the-fact refinements of the messaging have been a problem, and are only amplifying the volume of the internet denizens who don't care to do follow-up reading on the subject and are happy with their initial takes on the matter.

Further aggravating the situation, Apple is just the latest tech giant to adopt a system like this, not the first. And as always, it has generated the most internet-based wrath of them all.

Clarification attempts were made, but the anger's still there

Since the uproar began, Apple has attempted to clarify the situation. However, its attempts are nowhere near where they need to be to pacify detractors.

The complaints aren't just from external critics and the public reading news reports about the system, but also from Apple's own employees. An internal Slack channel was found on August 12 to have more than 800 messages on the topic, with employees concerned about the possibility of governmental exploitation.

Based on the reports, the "major complainants" in those posts were not employees working in security or privacy teams, though some others defended Apple's position on the matter.

An interview with Apple privacy chief Erik Neuenschwander about the system on August 10 attempted to fend off the arguments about government overreach. He insisted the system was designed from the start to prevent such authoritarian abuse from occurring in the first place, in multiple ways.

For example, the system only applies to the U.S., where Fourth Amendment protections guard against illegal search and seizure. This prevents the U.S. government from legally abusing the system, while its existence in just the U.S. rules out its abuse in other countries.

Furthermore, the system itself uses hash lists that are built into the operating system, which cannot be updated from Apple's side without an iOS update. The updates to the database are also released on a global scale, meaning it cannot target individual users with highly-specific updates.

The system also only tags collections of known CSAM, so it won't affect just one image. Images that aren't in the database supplied from the National Center for Missing and Exploited Children won't be tagged either, so it will only apply to known images.

There's even a manual review process, where iCloud accounts that are flagged for having a collection will be reviewed by Apple's team to make sure it's a correct match before alerting any external entity.

Neuenschwander says this presents a lot of hoops that Apple would have to pass through to enable a hypothetical government surveillance situation.

He also says there's still user choice at play, as they can still opt not to use iCloud Photos. If iCloud Photos is disabled, "no part of the system is functional."

On August 13, Apple published a new document to try and explain the system further, including extra assurances to its users and concerned critics. These assurances include that the system will be auditable by third parties, including security researchers and non-profit groups.

This will involve a Knowledge Base publication with the root hash of the encrypted CSAM hash database, enabling the hash of the database on user devices to be compared against the expected root hash.
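The audit idea amounts to recomputing a digest over the database that shipped with the device and checking it against the published value. The sketch below shows that comparison in its simplest form. It is an assumption-heavy illustration: the article does not say how Apple derives its root hash, so the SHA-256 construction, the function names, and the sample entries are all hypothetical.

```python
import hashlib
import hmac


def database_root_hash(entries: list[bytes]) -> str:
    """Fold every database entry into one digest.

    Hypothetical construction: hash each entry, then hash the running
    sequence of entry digests. Apple's actual scheme is not described
    in the article.
    """
    root = hashlib.sha256()
    for entry in entries:
        root.update(hashlib.sha256(entry).digest())
    return root.hexdigest()


def matches_published(entries: list[bytes], published_hex: str) -> bool:
    """Compare the locally computed root hash with the published one."""
    return hmac.compare_digest(database_root_hash(entries), published_hex)


# Placeholder entries stand in for the encrypted hash database.
shipped_database = [b"entry-1", b"entry-2", b"entry-3"]
published_root = database_root_hash(shipped_database)

tampered_database = shipped_database + [b"entry-added-for-one-user"]

print(matches_published(shipped_database, published_root))   # same database, same root
print(matches_published(tampered_database, published_root))  # any change alters the root
```

Because any added, removed, or altered entry changes the digest, a database that differs from the published one would be detectable by anyone who runs the check.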

Apple is also collaborating with at least two child safety organizations to generate the CSAM hash database. There is an assurance that the two organizations aren't under the jurisdiction of the same government.

Even if the governments overseeing the organizations colluded, Apple insists the system would be protected, as the human review team would realize an account had been flagged for reasons other than CSAM.

On the same day, Apple SVP of software engineering Craig Federighi spoke about the controversy, admitting that there was a breakdown in communication.

"We wish that this had come out a little more clearly for everyone because we feel very positive and strongly about what we're doing, and we can see that it's been widely misunderstood," said Federighi.

"I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion," he continued. "It's really clear a lot of messages got jumbled up pretty badly. I do believe the soundbite that got out early was, 'oh my god, Apple is scanning my phone for images.' This is not what is happening."

Federighi also insisted that there wasn't any pressure from outside forces to release the feature. "No, it really came down to... we figured it out," he said. "We've wanted to do something but we've been unwilling to deploy a solution that would involve scanning all customer data."

A matter of trust

With Apple's initial seeming lack of transparency comes the obvious question of whether Apple is doing what it says it's doing, how it says it's doing it. There's no good answer to that question. Every human makes decisions every day about which brand to trust, based on our own criteria. If your criterion is somehow that Apple is now worse than the other tech giants who have been doing this longer, or the concern is that Apple might allow law enforcement to subpoena your iCloud records if there's something illegal in them, as has been the case since iCloud was launched, then it may be time to finally make that platform move that you've probably been threatening to make for 15 years or longer.

And if you go, you need to accept that your ISP will happily provide your data to whoever asks for it, for the most part. And as it pertains to hardware, the rest of the OS vendors have been doing this, or more invasive versions of it, for longer. And, all of the other vendors have much deeper privacy issues for users in day-to-day operations.

There's always a thin line between security and freedom, and a perfect solution doesn't exist. Arguably, the solution is also more about Apple's liability than anything else, as it does not proactively hunt down CSAM by context -- but if it did, it would actually be committing the privacy violation that some folks are screaming about now, since it would know pictures by context and content.

While privacy is a fundamental human right, any technology that curbs the spread of CSAM is also inherently good. Apple has managed to develop a system that works toward that latter goal without significantly jeopardizing the average person's privacy.

Slippery-slope concerns about the disappearance of online privacy are worth having. However, those conversations need to include all of the players on the field -- including those that much more clearly violate the privacy of their users on a daily basis.

Update 5:23 PM ET Friday, August 6 Clarified that Apple's one in a trillion false match rate is per account, not per image, and updated with embed of Friday afternoon's version of the Apple technical guide.

Update 4:32 PM ET Sunday, August 15 Updated with details of Apple's attempts to clarify the system to the public, including interviews with Craig Federighi and privacy chief Erik Neuenschwander.

Apple's CSAM detection mechanism by Mike Wuerthele on Scribd

Comments

And even if it was, one in 50 million is still pretty spectacularly against.

And the "humans aren't good at risk assessment" is clearly on display here.

I'm not sure how 3 is an argument against this.

4. This system is only in the US for now. I'm sure the legality of it will be assessed before it is rolled out in other countries. In regards to arrest in other countries, you are subject to the laws of that country/state if you travel in the country/state regardless of your citizenship. Given how the system works, it'd be hard to hash "people hugging" as the photos are not judged based on the image for content, beyond matching the CSAM hash database.

5. I'm glad you're keeping an open mind, and aren't resorting to hyperbole.

Also, it's massively more likely someone will get their password phished than a hash collision occurring - probably 15-20% of people I know have been "hacked" through phishing. All it takes is a couple of photos to be planted, with a date a few years ago so they aren't at the forefront of someone's library and someone's in very hot water. You claim someone could defend against this in court, but I fail to understand how? "I don't know how they got there" isn't going to wash with too many people. And unfortunately, "good security practices" are practised only by the likes of us anyway, most people use the same password with their date of birth or something equally insecure for everything.

One in a trillion tried a trillion times does not guarantee a match, although it is likely, as you're saying. There may even be two or three. You're welcome to believe what you want, and you can research it with statisticians if you are so inclined. This is the last I will address this point here.

And, in regards to the false positive, somebody will look at the image, and say something like: Oh, this is a palm tree. It just coincidentally collides with the hash. All good. Story over.

In regards to your latter point, this is addressed in the article.

If you don't know why workers getting PTSD is an argument against this, then you have no compassion. Are you by any chance a narcissist?

That's the point. We have no idea how this will be rolled out in other countries but we can be certain it will be. The other countries will simply demand that Apple provide them with the same access to personal data provided in the USA. As to how the algorithm works, algorithms change all the time. The dumb pattern matching that Apple is using now could be replaced with an AI trained to recognize certain types of photos.

Are you keeping an open mind when it comes to Apple? You are posting all this Apple information verbatim without any editorial comments about the extreme danger of a program like this. You posted all those glowing articles about how great Apple is for promoting privacy (and you were right), why not be extremely critical or at least extremely skeptical of a plan to scan our photos without our permission and then have humans look at our photos without our permission and then send our photos to law enforcement without our permission?

Edit: That didn't take long:

Apple now says that this system will be implemented in other countries on a country by country basis.

Delightful ad hominem and continued slippery slope. You asked about my kids yesterday in a poorly thought-out example that didn't apply, and I care more about them than I do about a hypothetical Apple contractor who hypothetically has PTSD and is hypothetically being treated poorly by Apple. And there's a lot of could, maybe, and in the future here.

Did you even read this article to its conclusion, or did you just start commenting? I feel like if you had read the article, that last paragraph would be a little different.

You've been complaining about Apple hardware and software for a long time here. Some of it is warranted and some of it is not. If this is so unacceptable to you, take the final steps and go. That simple.

There is no extreme danger from this system as it stands. As with literally everything else in this world, there is good and bad, and we've talked about it here. Every decision we all make weighs the good and the bad.

You're welcome to believe that there is extreme danger based on hypotheticals. When and if it develops, we'll write about it.

You didn't address the elephant in the room: Apple has been selling itself and its products on the idea that they will keep our private data private. They stated or strongly implied that all of our data would be strongly encrypted and that governments and hackers would not be able to access it and that even Apple could not access it due to the strength of the encryption. All of those statements and implied promises were false if encrypted data from our phones is unencrypted when it is stored in iCloud. The promise of privacy is turned into a farce when Apple itself violates it and scans our data without our permission. Yes other companies have been doing things like this with our data for years but that's why we were buying Apple products, right?

I could pick apart your article a point at a time but it would be tedious. Example: Our ISPs can scan our data for IP content. Yes but we can and do use VPNs to work around that issue. Heck most employers require the use of a VPN to keep their company secrets secret (I bet Apple does too).

BTW I hope you appreciate that I incorporated your arguments into my own. I did read what you wrote. I just happen to completely disagree with it.

If Apple indeed goes forward with this initiative I will be cancelling my iCloud Photos subscription and going back to syncing over a cable.

Your arguments other than your privacy assumption (which is addressed in the article) lean on hypotheticals and maybes. It relies on a worst-case assumption (which we also discussed in the article). This is about today. Neither you nor I can predict the future, or how this will turn out. Us not saying that this is some kind of disaster does not make it apologetic, it makes it realistic. This is how the system works. This is what Apple is doing, how, and what it knows about your photo library.

This all said, I've got other stuff to do. I hope you have a nice weekend.

Apple sold itself on being the data privacy company.

This angered governments who feel they should be able to snoop on anyone's data.

The governments argued that not allowing access to private data enables people to harm children by sharing explicit images of them.

Apple knew they would lose the political argument and would be legally forced to introduce back doors to their encryption.

Apple came up with a plan to scan iCloud data themselves for illegal photos using a government pattern matching database.

If the plan works, Apple could then implement strong encryption on iCloud with the pattern matching working with the encrypted data.

Unfortunately it appears that Apple has opened a big can of worms and is now on a slippery sloping razor blade into a pool of lemon juice (how's that for hyperbole?)

I will leave with this (I also have things to do):

What Apple is saying is full of hypotheticals and maybes and relies on best-case assumptions. This is because Apple's code is a black box. No one can review it and see if what they are saying is true or not. Apple is saying "trust us" having just proved that we cannot trust them.