Fed expansion of Apple's CSAM system barred by 4th Amendment, Corellium exec says


In a Twitter thread Monday, Corellium COO and security specialist Matt Tait detailed why the government couldn't simply modify the database maintained by the National Center for Missing and Exploited Children (NCMEC) to find non-CSAM images in Apple's cloud storage. For one, Tait pointed out that NCMEC is not part of the government. Instead, it's a private nonprofit entity with special legal privileges to receive CSAM tips.

Because of that, authorities like the Justice Department can't directly order NCMEC to do something outside of its scope. The Justice Department might be able to compel NCMEC through the courts, but NCMEC is not within its chain of command. Even if the Justice Department "asks nicely," NCMEC has several reasons to say no.

To illustrate, Tait walks through a specific scenario in which the DOJ persuades NCMEC to add the hash of a classified document to its database.

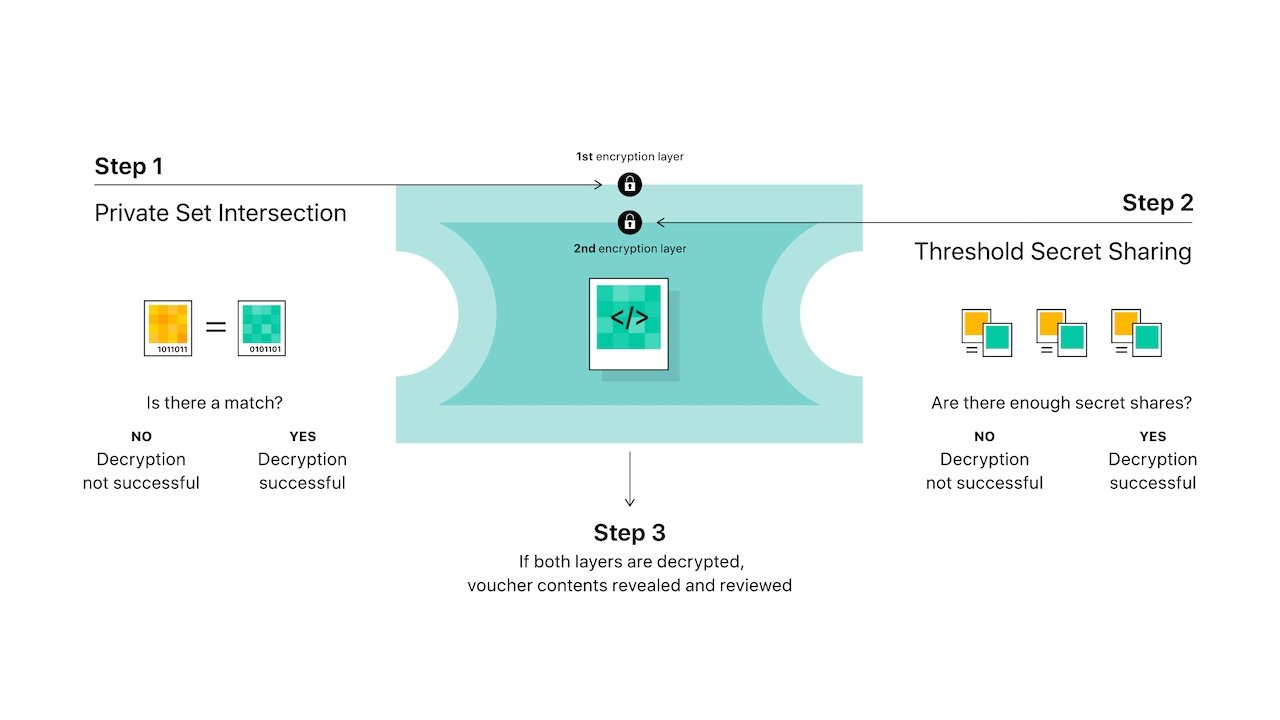

In this scenario, perhaps someone has this photo on their phone and uploaded it to iCloud so it got scanned, and triggered a hit. First, in Apple's protocol, that single hit is not enough to identify that the person has the document, until the preconfigured threshold is reached.

-- Pwn All The Things (@pwnallthethings)
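Apple's published technical summary of the system says the threshold Tait mentions is enforced cryptographically with "threshold secret sharing," so that match data cannot be read until an account crosses the threshold. The Python sketch below shows that general idea using Shamir's secret sharing, a standard form of the technique. It is a toy illustration under stated assumptions, not Apple's implementation: the prime field, the `THRESHOLD` value, the per-account key, and the one-share-per-match model are all hypothetical.

```python
import random

# Toy parameters (assumptions, not Apple's): a Mersenne prime field and a
# hypothetical match threshold.
PRIME = 2**127 - 1
THRESHOLD = 10


def make_shares(secret, threshold, count, rng):
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest-degree term first
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover(shares):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    rng = random.Random(2021)
    account_key = rng.randrange(PRIME)  # hypothetical per-account decryption key
    # In this toy model, each matching upload carries exactly one share.
    shares = make_shares(account_key, THRESHOLD, count=15, rng=rng)
    # One match: interpolation yields an unrelated value (except with
    # negligible probability), so nothing about the account is revealed.
    print("1 match recovers key:", recover(shares[:1]) == account_key)
    # Threshold reached: any THRESHOLD shares reconstruct the key.
    print("10 matches recover key:", recover(shares[:THRESHOLD]) == account_key)
```

The point of the design is that a single share, like the single hit in Tait's scenario, is mathematically useless on its own; the threshold is a property of the math rather than a policy setting someone has to honor.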

Tait also points out that a single non-CSAM image wouldn't be enough to trigger the system. Even if those barriers were somehow surmounted, Apple would likely drop the NCMEC database if it knew the organization wasn't operating honestly. That is a real option for Apple: technology companies have a legal obligation to report CSAM they find, but not to scan for it.

So thanks to DOJ asking and NCMEC saying "yes", Apple has dropped CSAM scanning entirely, and neither NCMEC nor DOJ actually got a hit. Moreover, NCMEC is now ruined: nobody in tech will use their database.

So the long story short is DOJ can't ask NCMEC politely and get to a yes

-- Pwn All The Things (@pwnallthethings)

Whether the government could compel NCMEC to add a hash for non-CSAM images is also a tricky question. According to Tait, the Fourth Amendment probably prohibits it.

NCMEC is not an investigative body, and there are legal barriers between it and government agencies. When it receives a tip, it passes the information along to law enforcement. To prosecute a suspected CSAM offender, law enforcement must gather its own evidence, typically with a warrant.

Although courts have wrestled with the question, the original CSAM scanning by a tech company is likely compliant with the Fourth Amendment because companies perform it voluntarily. If the government compels the search, however, it becomes a "deputized search," which violates the Fourth Amendment unless a warrant is obtained.

For the conspiracy to work, it'd need Apple, NCMEC and DOJ working together to pull it off *voluntarily* and it to never leak. If that's your threat model, OK, but that's a huge conspiracy with enormous risk to all participants, two of which existentially.

-- Pwn All The Things (@pwnallthethings)

Apple's CSAM detection mechanism has caused a stir since its announcement, drawing criticism from security and privacy experts. The Cupertino tech giant maintains that it won't allow the system to be used to scan for anything other than CSAM, however.

Read on AppleInsider

Comments

It's not shocking at all that "but think of the children" types don't understand just exactly how dangerous for everyone something like this is. Sad, but not shocking.

Applying your illogical thinking, everyone who is not willing to go along with having their data and devices searched, for a crime that they have not committed, never will commit, and have not been suspected of, must be keen on supporting the "rights" of pedophiles. Your type of thinking is much more dangerous to a free society than that of a pedophile, because there seem to be a lot more people like you than there are pedophiles.

"Suspicionless surveillance does not become okay simply because it's only victimizing 95% of the world instead of 100%."

"Under observation, we act less free, which means we effectively are less free."

"It may be that by watching everywhere we go, by watching everything we do, by analyzing every word we say, by waiting and passing judgment over every association we make and every person we love, that we could uncover a terrorist plot, or we could discover more criminals. But is that the kind of society we want to live in?"

Edward Snowden

Benjamin Franklin (1706-1790)

If the Chinese government ordered Apple to implement this feature and keep it quiet then Apple would (1) implement this feature and (2) keep it quiet, because Apple needs China vastly more than China needs Apple.

Not to be too conspiratorial or anything, but suppose China did order Apple to implement this feature (not just for CSAM, but for everything of interest to CCP), and to keep it quiet. How could Apple alert users without running afoul of the Chinese government?

Maybe by doing exactly what they're doing. Announce the feature is being implemented only in the US and only for CSAM, and then let people connect the dots.

If Apple could detect terrorist content conclusively, I am sure they would keep it off iCloud as well.

Wanting to keep identifiably illegal content off a storage service is the right thing to do.

I hope other storage services follow suit.