Outdated Apple CSAM detection algorithm harvested from iOS 14.3 [u]

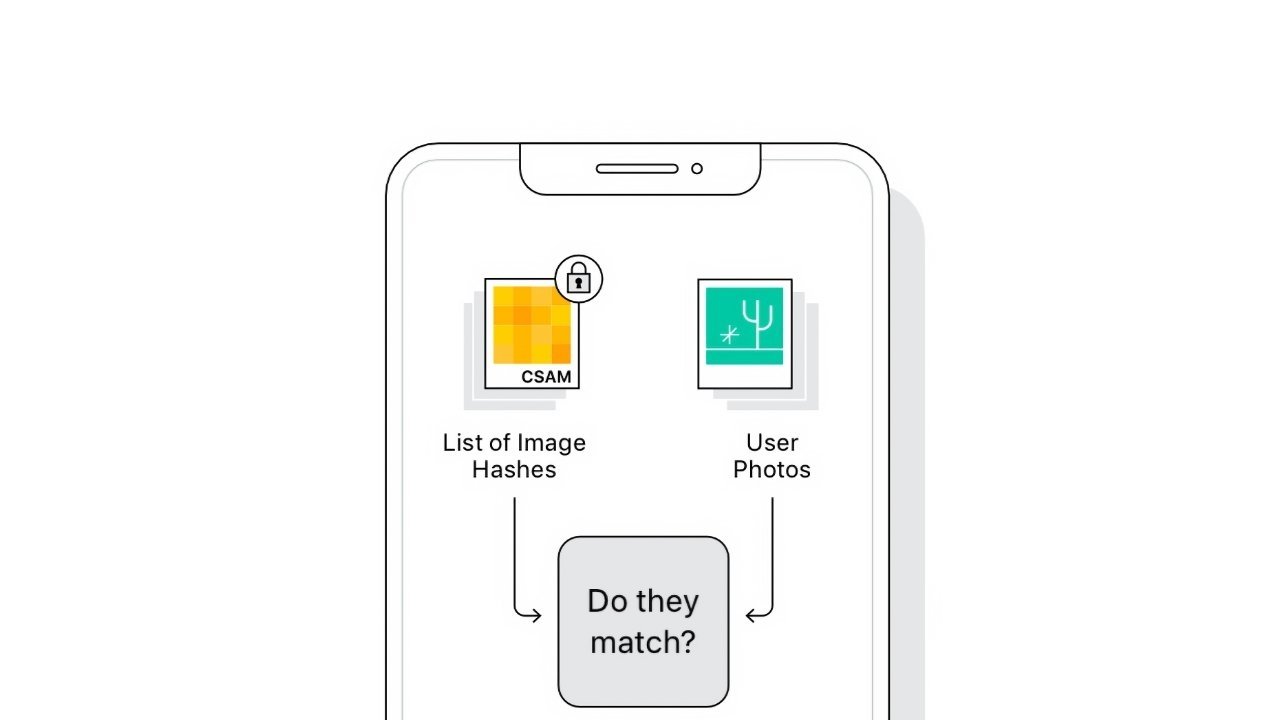

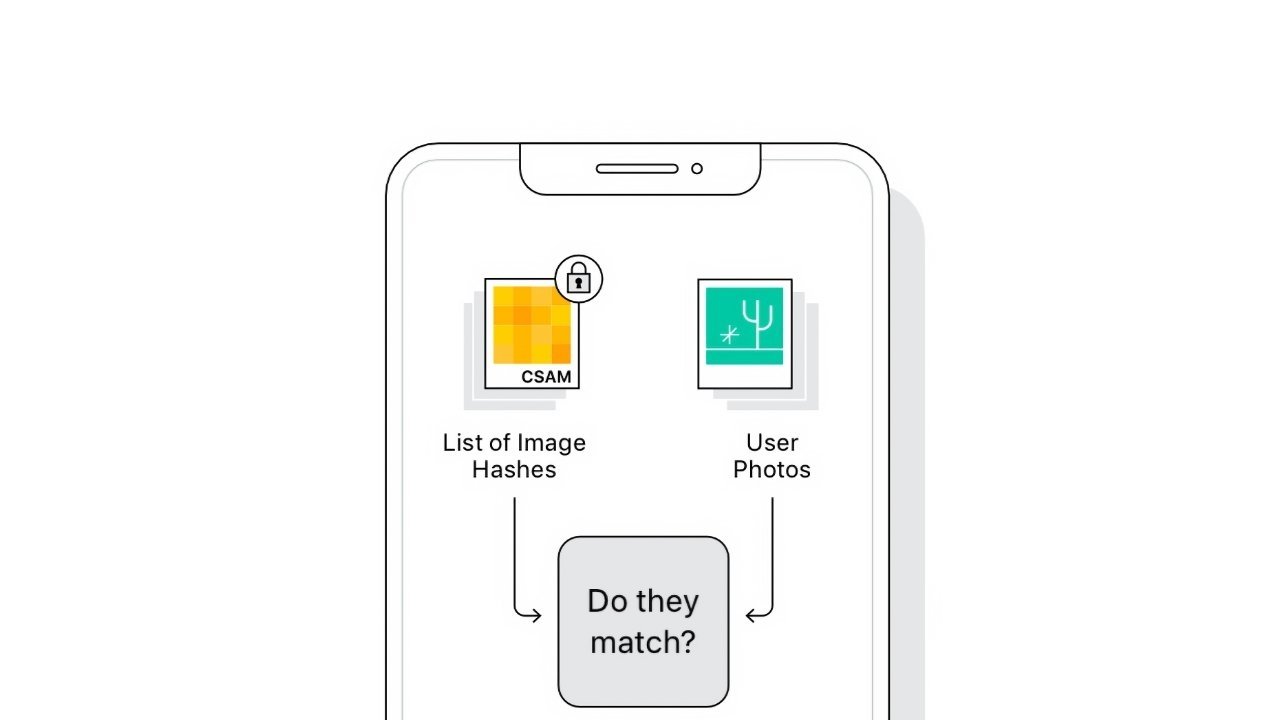

A user on Reddit says they have discovered a version of Apple's NeuralHash algorithm used in CSAM detection in iOS 14.3, and Apple says that the version that was extracted is not current, and won't be used.

CSAM detection algorithm may have been discovered in iOS 14.3

The Reddit user, u/AsuharietYgvar, says the NeuralHash code was found buried in iOS 14.3. The user says they reverse-engineered the code and rebuilt a working model in Python that can be tested by passing it images.

The algorithm was found among hidden APIs, and the container for NeuralHash was called MobileNetV3. Those interested in viewing the code can find it in a GitHub repository.

The Reddit user claims this must be the correct algorithm for two reasons. First, the model files have the same prefix found in Apple's documentation, and second, verifiable portions of the code work the same as Apple's description of NeuralHash.

It appears that the discovered code is not fooled by compression or image resizing, but it does not detect crops or rotations.
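NeuralHash itself is a neural network, so it can't be reproduced in a few lines. As a rough illustration of the behavior described above, the following Python sketch swaps in a much simpler, well-known perceptual hash, average hashing (aHash). It is not Apple's algorithm, and the helper names (`average_hash`, `upscale`, `rotate90`) and the synthetic test image are assumptions made for this example.

```python
def average_hash(pixels, hash_size=8):
    """Toy average hash (aHash), NOT NeuralHash: average each block of a
    grayscale image down to hash_size x hash_size cells, then emit 1 for
    every cell brighter than the mean cell, 0 otherwise."""
    height, width = len(pixels), len(pixels[0])
    # Keep the sketch simple: dimensions must divide evenly into cells.
    if height % hash_size or width % hash_size:
        raise ValueError("image size must be a multiple of hash_size")
    bh, bw = height // hash_size, width // hash_size
    cells = []
    for r in range(hash_size):
        for c in range(hash_size):
            block = [pixels[y][x]
                     for y in range(r * bh, (r + 1) * bh)
                     for x in range(c * bw, (c + 1) * bw)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:  # row-major order, first cell = most significant bit
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def upscale(pixels, k):
    """Nearest-neighbor resize: repeat every pixel k times in each direction."""
    return [[v for v in row for _ in range(k)] for row in pixels for _ in range(k)]


def rotate90(pixels):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*pixels[::-1])]


# Synthetic 16x16 gradient image (brightness grows left to right).
image = [[10 * x + y for x in range(16)] for y in range(16)]
original = average_hash(image)
print(hamming(original, average_hash(upscale(image, 2))))  # 0: resizing keeps the hash
print(hamming(original, average_hash(rotate90(image))))    # 32: rotation flips half the bits
```

Resizing leaves every block average unchanged, so the hash survives intact; rotating moves the bright half of the image from the right side to the bottom and flips 32 of the 64 bits. How closely NeuralHash mirrors this trade-off is not something the toy can show.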

Using this working Python script, GitHub users have begun examining how the algorithm works and whether it can be abused. For example, one user, dxoigmn, found that anyone who knew a resulting hash in the CSAM database could create a fake image that produced the same hash.

If true, someone could make fake images that resembled anything but produced a desired CSAM hash match. Theoretically, a nefarious user could then send these images to Apple users to attempt to trigger the algorithm.
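The same toy hash can illustrate the principle behind dxoigmn's finding. For a simple hash like aHash, an image can be constructed backwards from a known target hash; doing the equivalent for NeuralHash is considerably harder, and this sketch says nothing about how that was done. `forge_image`, its gray levels, and the example target are hypothetical.

```python
def average_hash(pixels, hash_size=8):
    """Toy aHash (not NeuralHash): 1 bit per cell, set if the cell is
    brighter than the mean cell. Assumes sizes divide evenly."""
    bh, bw = len(pixels) // hash_size, len(pixels[0]) // hash_size
    cells = [sum(pixels[y][x]
                 for y in range(r * bh, (r + 1) * bh)
                 for x in range(c * bw, (c + 1) * bw)) / (bh * bw)
             for r in range(hash_size) for c in range(hash_size)]
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:  # row-major order, first cell = most significant bit
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def forge_image(target_hash, hash_size=8, block=2, dark=40, bright=220):
    """Hypothetical helper: build a two-tone 'bit-field' image whose toy aHash
    equals target_hash. Each hash bit becomes one block-sized square."""
    n = hash_size * hash_size
    bits = [(target_hash >> (n - 1 - i)) & 1 for i in range(n)]
    if all(bits):
        # Not every cell can be brighter than the mean, so aHash never yields all ones.
        raise ValueError("an all-ones hash is unreachable for aHash")
    side = hash_size * block
    return [[bright if bits[(y // block) * hash_size + x // block] else dark
             for x in range(side)]
            for y in range(side)]


target = 0x0123456789ABCDEF  # arbitrary example target, not a real database entry
fake = forge_image(target)
print(average_hash(fake) == target)  # True
```

The forged result is nothing but light and dark squares, which is why such images read as a bit-field rather than as a photograph.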

Despite these discoveries, the information provided by this version of NeuralHash may not represent the final build. Apple has been building the CSAM detection algorithm for years, so it is safe to assume some version of the code exists for testing.

If what u/AsuharietYgvar has discovered is truly some version of the CSAM detection algorithm, it likely isn't the final version. For instance, this version struggles with crops and rotations, which Apple specifically said its algorithm would account for.

Theoretical attack vector, at best

Even if the attack vector suggested by dxoigmn is possible, several fail-safes stand between simply sending a user such an image and getting that user's iCloud account disabled and reported to law enforcement. There are numerous ways to get images onto a person's device, like email, AirDrop, or iMessage, but the user has to manually add photos to the photo library. There isn't a direct attack vector for injecting images into the Photos app that doesn't require the physical device or the user's iCloud credentials.

Apple also has a human review process in place. The attack images aren't human-parseable as anything but a bit-field, and there is clearly no CSAM involved in the fabricated file.

Not the final version

The Reddit user, who says they are not an ML expert, claims to have discovered the code. The GitHub repository with the Python script is available for public examination for now.

No one has yet found the CSAM database or matching algorithm in the iOS 15 betas. Once it appears, it is reasonably possible that some crafty user will be able to extract the algorithm for testing and comparison.

For now, users can poke around this potential version of NeuralHash and attempt to find any issues. However, the work may prove fruitless, since this is only one part of the complex process used for CSAM detection and doesn't account for the voucher system or what occurs server-side.
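Because the extracted model only computes hashes, it says nothing about the match threshold an account must cross before review. As a back-of-the-envelope sketch of why a threshold matters: if each innocent photo falsely matched independently with some small probability p, the chance of an account reaching the threshold by accident is a binomial tail. The threshold of 30 (the figure one commenter below cites), the false-match rate p = 1e-6, the library size, and the helper names are all assumptions for illustration; the sketch does not model the voucher system or server-side matching.

```python
import math


def binomial_tail(n, p, threshold):
    """P(X >= threshold) for X ~ Binomial(n, p).

    Terms are computed in log space (lgamma) so large n cannot overflow.
    Assumes 0 < p < 1 and independent per-photo false matches.
    """
    total = 0.0
    for k in range(threshold, n + 1):
        log_term = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * math.log(p) + (n - k) * math.log1p(-p))
        total += math.exp(log_term)  # underflows harmlessly to 0.0 for tiny terms
    return total


def flagged(match_count, threshold=30):
    """Hypothetical review rule: act only once matches reach the threshold."""
    return match_count >= threshold


# Hypothetical numbers: a 10,000-photo library, one-in-a-million false match rate.
print(binomial_tail(10_000, 1e-6, 30))  # about 3.6e-93
print(flagged(29), flagged(30))         # False True
```

The arithmetic only covers accidental matches. Deliberately crafted collisions, like those described earlier, are not random, which is exactly the concern raised in the article and the comments.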

Apple hasn't provided an exact release timeline for the CSAM detection feature. For now, it is expected to launch in a point release after the new operating systems debut in the fall.

Update: Apple in a statement to Motherboard said the version of NeuralHash discovered in iOS 14.3 is not the final version set for release with iOS 15.

Read on AppleInsider

Comments

As mentioned, users have to save the photo. Not just one photo, but 30 photos. You think you’re going to convince someone to save 30 photos they received from a random person? I get pictures in iMessage all the time from friends/family. I rarely save them; once I’ve seen one (often it’s a joke or meme), I simply delete it. Or I’ll leave it in iMessage in case I ever need to see it again.

That someone could reverse engineer this is to be expected by anyone with any knowledge of software development.

LOL, this is radically FALSE. You don't have to convince anyone. For example, if you have WhatsApp, there's an option to save photos on the device (I've had it on since day 1), so if someone sends you 30 photos, not necessarily SEX related but simply CSAM collisions (there's code available to generate those), they get automatically synced to iCloud and scanned, and the result will trigger a review by the Apple employee who plays police. If some of these photos, viewed at low resolution, resemble sex-related stuff, you'll have police knocking at your door and calling you a pedophile. This is not a joke.

Apple is the modern-day version of your locked bureau. And that locked bureau was clearly and unequivocally OFF LIMITS from Uncle.

When this provision was affirmed, it didn't matter if you had nothing to hide. You had the right to that locked bureau.

It didn't matter if you had the severed head of an innocent child in that bureau, either. You had an indisputable right to privacy from a prying Uncle's eyes except in VERY special circumstances.

It would have been preposterous if a bureau manufacturer had built a skeleton key and EULA for the piece of furniture which allowed them to inspect the contents of your bureau — as a neutral third party, of course — anytime they chose. It would have been even more unthinkable had the manufacturer's policy included a provision which allowed them to report the contents of your private papers and effects to Uncle should they deem them illicit.

Now, how much do we need to worry about Uncle, and his wily ways of creeping on our privacy and autonomy?

Well, seeing as how Uncle incarcerates more of its own fam, and spies on, invades the homes of, and bombs more innocent humans than any other patriarchal entity in the entire human race, I'd say it's a serious point of concern. And any person here or elsewhere dismissing this backdoor attempt to peek into your bureau as "not that big of a concern" has forgotten or been forever blind to the real risk this poses — and the consequences it brings — to civilized society.

Even if you did upload multiple false matches (and that means you're saving random images that are really not very likely to be useful) the human moderator that then inspects them will soon realise that they are only false positives.

If you trust Apple's description of how this mechanism works, then only transfers to iCloud are inspected, and the hashes on your phone are NOT images, just mathematical fingerprints. If you don't trust Apple, then just consider all the other stuff on your phone: maybe it's time to try Android?

https://forums.appleinsider.com/discussion/comment/3327620/#Comment_3327620

People will try to exploit this. It would be better if it wasn’t there at all. The hashes SHOULD be unique, but due to the nature of hashes (it’s an algorithm), there can be collisions. You may sooner win the lottery than encounter one by chance… but what if it wasn’t by chance? It was only a matter of time before someone created an image or images that match hashes in the CSAM database and started propagating them. Now, Apple may have a human review step after several flags, but what happens if they get flooded with requests because a series of images gets propagated? Humans can only work so fast. But this human review implies images may be sent to people at Apple, which would necessarily circumvent the encryption that prevents anyone but the intended parties from seeing the images.

I also find it unfortunate that this has been in iOS since 14.3, but I hope Apple doesn’t enable it. I have iCloud Photos turned off, and I will not be upgrading to 15. The “smart” criminals will also do the same thing. All this does is root out the dumb ones. The “smarter” ones will turn it off to avoid it, but the smartest ones will find and use other channels now.

It can be abused, and it will be abused...

This is just step one!!!

As the CSAM hash has to be updated regularly, I would say pretty easy.

Have a picture of Reagan, you end up on a list.

And when you think about it, what individual would have a collection of photos that could be matched to a database? CSAM is something people collect, terrorist images? Pictures of political figures? Not so much.

It would be too narrow a vector for anyone to target. And that's without mentioning the fact that images can only exist in the database once they are added by two independent systems from two different governing countries.