AMD proved that Apple skipping 4nm chips isn't a big deal

AMD said at CES that its use of a 4nm process helps its new chips beat two-year-old Apple Silicon, but in doing so, it revealed that a jump to 4nm was not a big deal for Apple's future chip releases.

AMD's pushing into 4nm processes for chips, temporarily beating Apple's use of 5nm.

On January 5, AMD used its CES presentation to promote its own line of processors and chips. As to be expected of such a promotional opportunity, the chip maker wheeled out announcements for its inbound chip releases, and took the time to hype it as better than its rival silicon producers.

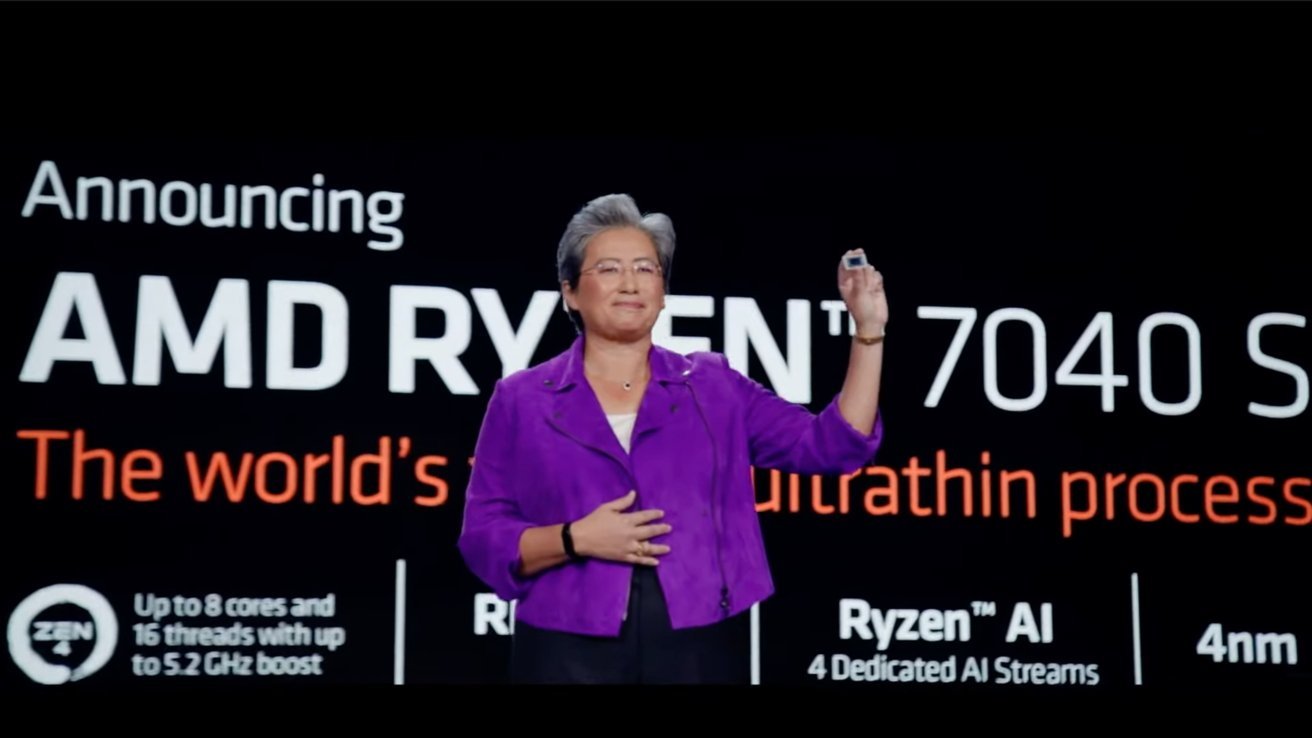

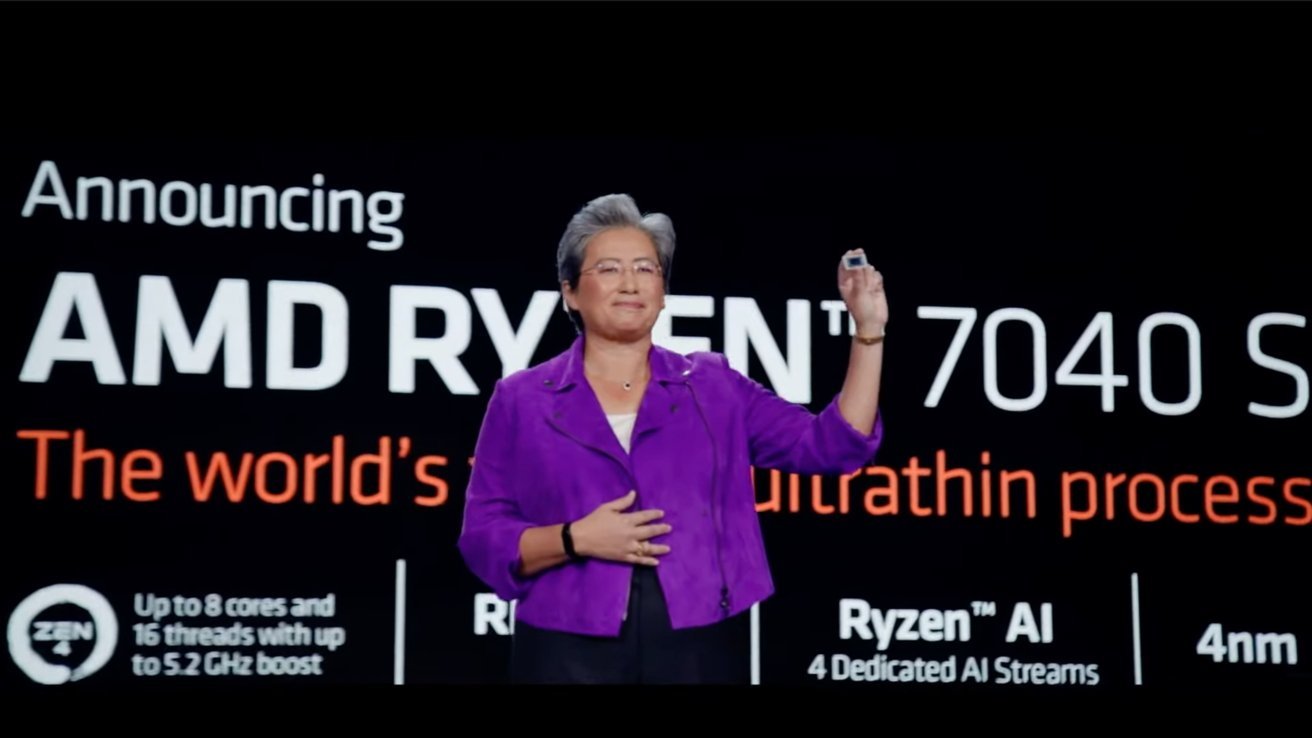

For this year, the big attention was on the AMD Ryzen 7040HS, a series of chips that are intended for use in "ultrathin PC laptops" and other mobile devices. They were declared to be powerful, both in terms of CPU and GPU performance, and they also perform better than other similar chips.

Being one of the major mobile chips on the market, AMD decided that it would compare its top-end Ryzen 9 7940HS against Apple Silicon.

AMD's CES 2023 presentation boasted about its 4nm prowess.

In on-stage slides, AMD proclaimed that the chip was up to 34% faster in terms of multithreaded performance over the M1 Pro. Moreover, it was also able to run at "up to +20% on AI processing" against Apple's M2.

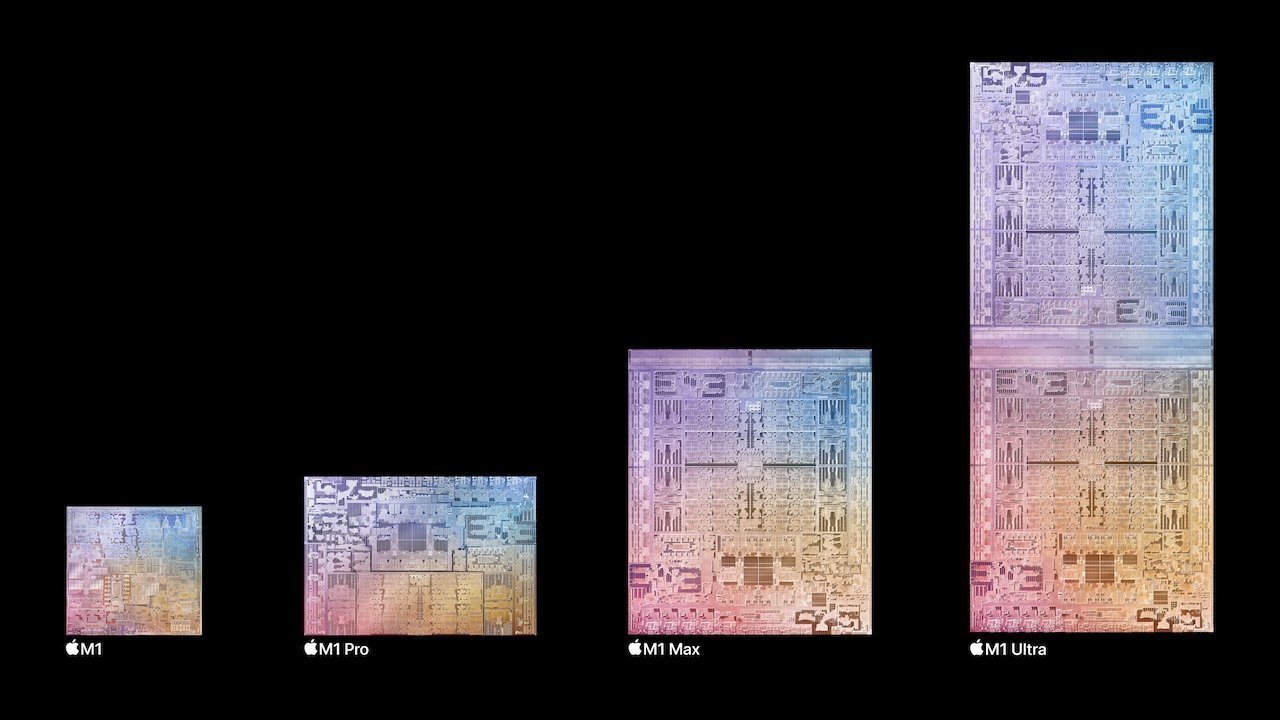

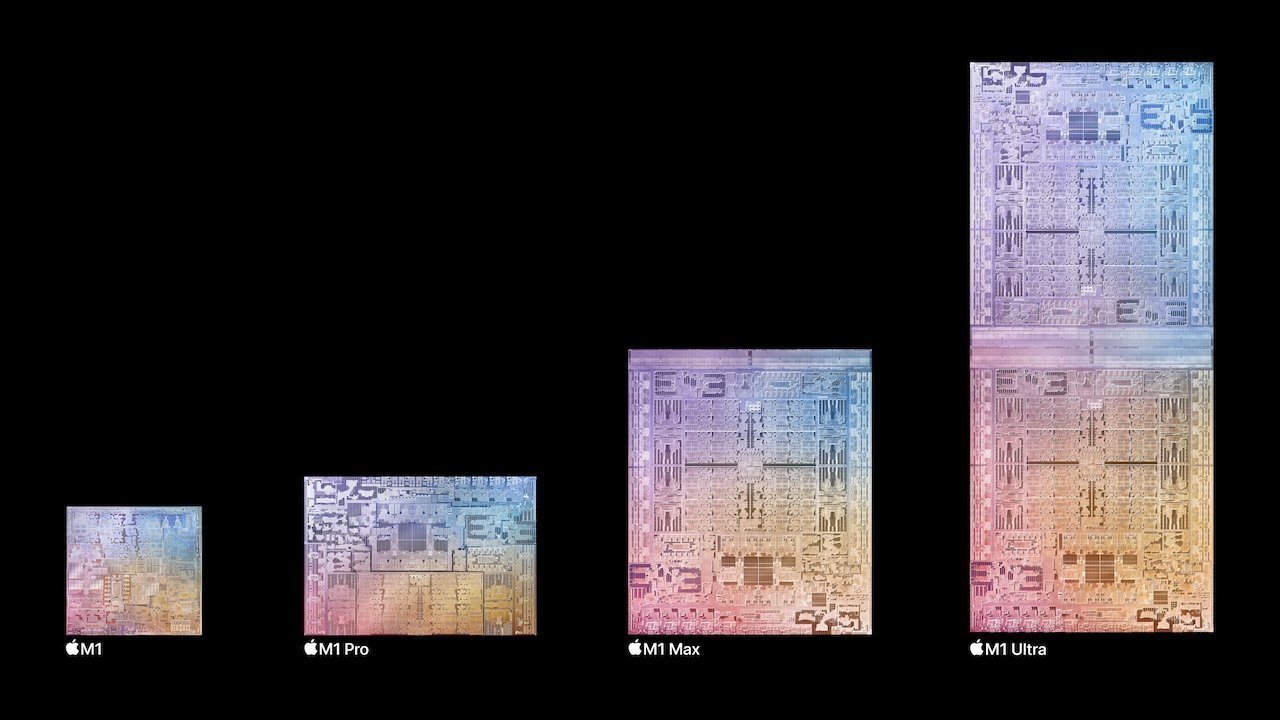

There's a lot you can read into the presentation, such as AMD deciding to go against the M1 Pro instead of an M1 Max or M1 Ultra with its chip performance comparison. Cherry-picking benchmarks isn't anything new in the industry, but there are known chips from Apple that are more powerful than what was looked at.

However, the main point from the presentation that needs addressing is that AMD says this is due to its use of a 4-nanometer production process. By using a 4nm process, AMD says it can achieve "massive horsepower" from its chip designs.

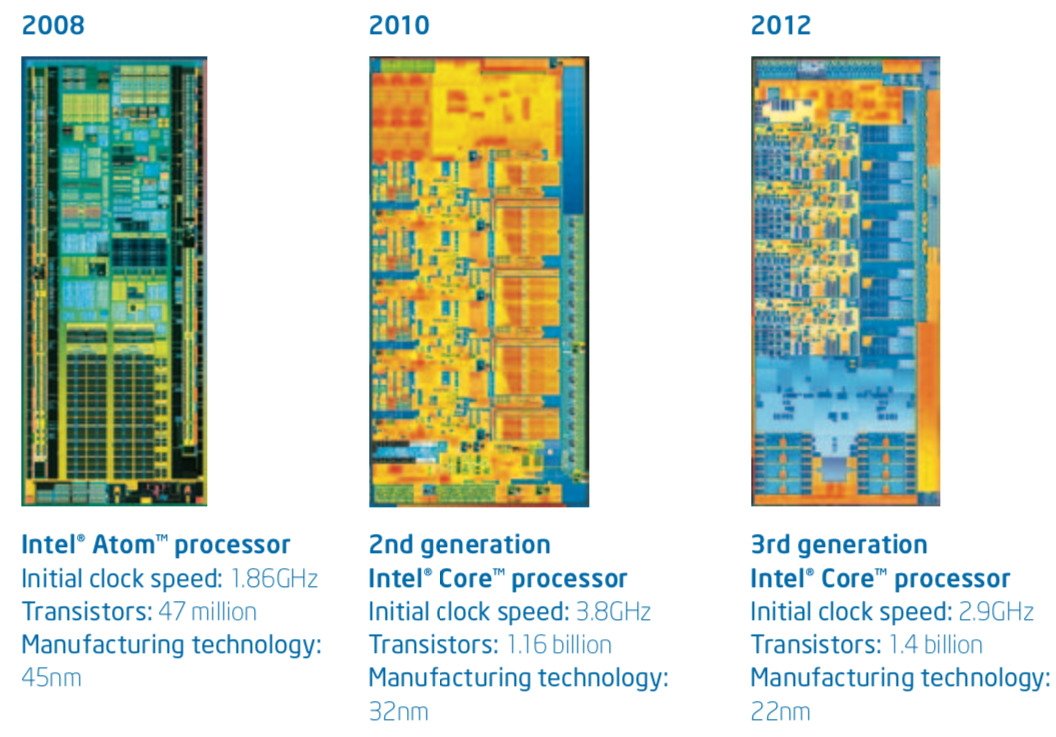

From shrinking the size of the die, chip producers can create chips with more transistors and intricate designs on the same area of silicon. More stuff in a smaller space equals more performance with minimal, space concerns.

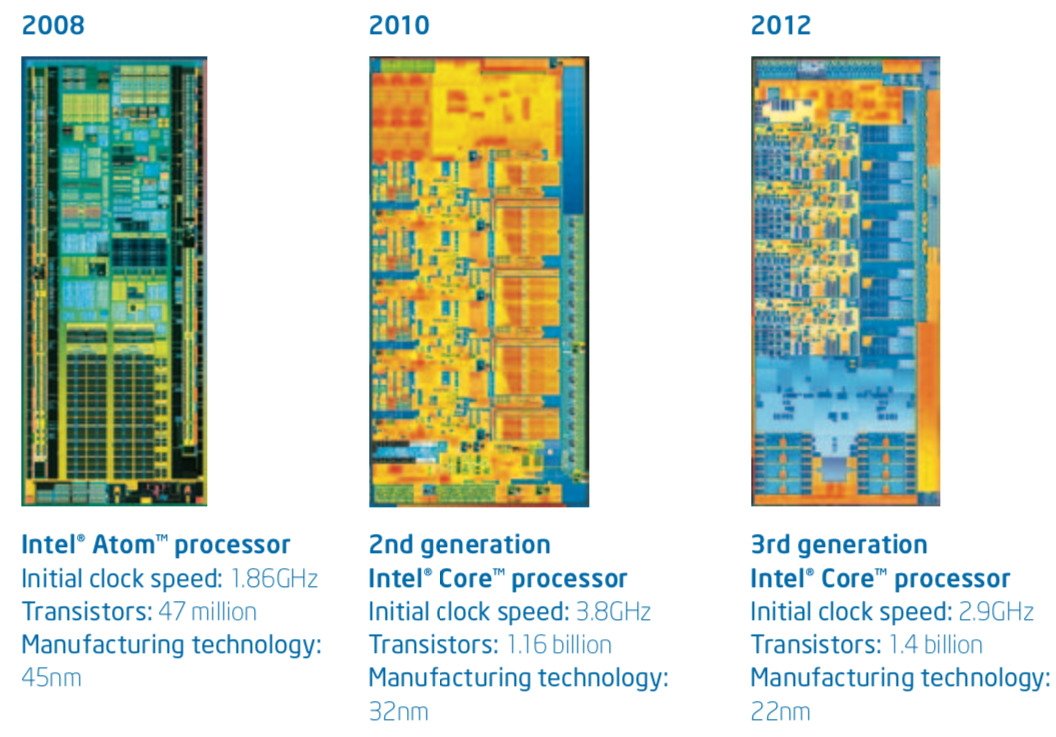

An example of Intel's die shrinking progress over a four-year period.

Going smaller also provides other benefits as well, specifically relating to power and heat. With smaller components on a circuit, less power may be needed to accomplish a task compared to the same circuit using a physically larger process.

The smaller scale also helps with heat, since the lower mass means it isn't generating as much. It can also benefit from dissipating heat through cooling systems at a better rate, too.

Naturally, going down to smaller scales can introduce obstacles that need to be overcome, but to those who manage it, there are considerable rewards in chip design and performance on offer.

For Apple's more immediate chip launches, AMD will still most likely be able to crow about its process usage. Given the M2 is made on a 5nm process, it is almost certain that Apple will keep using it for the other chips in the generation, such as the M2 Pro, M2 Max, and M2 Ultra.

Apple is more likely to make these sorts of major changes between generations, not mid-generation. Feasibly, that would mean the M3 generation could use it, or even something smaller.

Apple could've used 4nm for the initial A16 Bionic prototypes.

Apple was predicted to use TSMC's 4nm process for its A16 Bionic SoC, but instead it stuck to the more established 5nm version. Sure, the A16 was supposed to offer a major generational leap, but early prototypes drew more power than predicted.

Battery life would've been affected, as well as the temperature of the iPhone, with it potentially running hotter than deemed acceptable.

We don't know for sure if the prototype version was using a 4nm process, but whatever it was, Apple didn't think it was good enough in that state.

Reports going back to mid-2022 has Apple signing up to use TSMC's 3-nanometer process. The natural devices to use it being the M3 generation for Mac, despite the insistence that a mid-generational production process change could happen.

In September, Apple apparently became the first in the eyes of anonymous sources that will use the technology. By December, TSMC apparently started mass production of 3-nanometer chips for the Mac and iPhone.

A move to 3nm gives Apple a 15% boost in speed at comparative power levels to a 5nm counterpart, or a 35% reduction in power consumption for comparable performance.

Remember, that's all from changing from 5nm to 3nm. That doesn't take into account any other changes that Apple could bring in to increase clock speed, core counts, or whatever other technical voodoo they come up with in future.

That 15% speed boost could easily become 20%, or even 30%, if Apple plays its cards right.

With such a performance improvement, it makes sense for Apple to concentrate on making a good 3nm chip than to increase the workload by shifting to 4nm then to 3nm. Doing so reduces R&D efforts on its part, as well as any time wasted sticking to 4nm.

Given the supposed woes of the A16's prototype, it could well be that Apple saw there was a problem and decided that skipping was the best course of action overall.

That it could give Apple another considerable performance lead down the road is also a nice benefit to have.

Poking the beast by claiming you're using 4nm instead of 5nm and then using a chip released over a year ago isn't going to win plaudits when there are other more powerful comparisons to be made.

Sure, M1 Pro is powerful, but AMD could've easily tried to take on the M1 Max or M1 Ultra if it were being serious.

When third-party benchmarks of AMD's chip finally surface, the performance may not necessarily be as massively improved over others in Apple's arsenal. Especially with the prospect of 3nm chips on the horizon.

One argument is that AMD is only going on stage and smack-talking Apple's M1 Pro because it's the most popular or approachable option for consumers looking to buy a MacBook Pro. Except that Apple's not really AMD's target.

By showing it can be in the same ballpark as Apple, AMD has laid down the challenge to Intel, threatening to take PC customers away with promises of performance and battery life. After all, if Apple can worry Intel, and AMD is vaguely in the same area, it stands to reason that AMD should be able to worry Intel too.

If AMD can eat Intel's lunch by posturing against Apple, then that's a win in AMD's book against its long-standing rival.

AMD is a good chip designer. It's kept Intel on its toes for years.

We've said it before. Intel, Apple, AMD: pick one, and competition from the other two have made it stronger.

Operationally, Apple won't care about AMD's claims, especially with a loyal customer base and the prospect of 3nm chips on the horizon. It can easily hold back and speak softly for the moment because it knows a metaphorical big stick is on the way.

By 2025, that could turn into 2nm chip production. Less stick, more tree trunk.

Read on AppleInsider

AMD's pushing into 4nm processes for chips, temporarily beating Apple's use of 5nm.

On January 5, AMD used its CES presentation to promote its own line of processors and chips. As is to be expected of such a promotional opportunity, the chip maker wheeled out announcements for its upcoming chip releases and took the time to hype them as better than its rivals' silicon.

For this year, the big attention was on the AMD Ryzen 7040HS, a series of chips intended for use in "ultrathin PC laptops" and other mobile devices. AMD declared them powerful in terms of both CPU and GPU performance, and claimed they outperform comparable chips.

Since Apple Silicon is among the major mobile chips on the market, AMD decided to compare its top-end Ryzen 9 7940HS against it.

AMD's CES 2023 presentation boasted about its 4nm prowess.

In on-stage slides, AMD proclaimed that the chip was up to 34% faster in multithreaded performance than the M1 Pro. It also claimed the chip could deliver "up to +20% on AI processing" against Apple's M2.

There's a lot you can read into the presentation, such as AMD choosing to compare its chip against the M1 Pro instead of an M1 Max or M1 Ultra. Cherry-picking benchmarks is nothing new in the industry, but Apple already ships chips that are more powerful than the one AMD picked.

The main point from the presentation that needs addressing, however, is AMD's claim that this performance comes from its use of a 4-nanometer production process. By using a 4nm process, AMD says it can achieve "massive horsepower" from its chip designs.

(Small) size matters

Die shrinks, or the ability to use a chip production process that runs at a smaller scale than previous generations, are a great marketing point for chip makers.

By shrinking the die, chip producers can fit more transistors and more intricate designs into the same area of silicon. More stuff in a smaller space equals more performance, with minimal space concerns.

An example of Intel's die shrinking progress over a four-year period.
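To put rough numbers on the density argument, here is a minimal Python sketch. It assumes, purely for illustration, that a node name like "5nm" is a real linear feature size, so transistor density scales with the inverse square of that size. Modern node names are largely marketing labels rather than physical measurements, so the hypothetical `ideal_density_gain` helper describes an idealized upper bound, not any real TSMC, AMD, or Apple figure.

```python
def ideal_density_gain(old_node_nm: float, new_node_nm: float) -> float:
    """Idealized transistor-density multiplier for a die shrink.

    Assumption: the node name is a true linear feature size, so density
    scales with the inverse square of that size. Real node names are
    marketing labels, so treat the result as a thought experiment only.
    """
    if old_node_nm <= 0 or new_node_nm <= 0:
        raise ValueError("node sizes must be positive")
    return (old_node_nm / new_node_nm) ** 2


# Compare a hypothetical 5nm design against idealized 4nm and 3nm shrinks.
for new_node in (4.0, 3.0):
    gain = ideal_density_gain(5.0, new_node)
    print(f"5nm -> {new_node:g}nm: {gain:.2f}x transistors per unit area (idealized)")
```

Real-world density gains between named nodes are typically well below these idealized ratios, which is why foundries publish their own per-node figures.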

Going smaller provides other benefits too, specifically relating to power and heat. With smaller components on a circuit, less power may be needed to accomplish a task than with the same circuit built on a physically larger process.
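The power claim lines up with the textbook model of CMOS switching power, P = αCV²f (activity factor, switched capacitance, supply voltage, clock frequency). The sketch below is a simplified illustration only: every input value is hypothetical, the 10% reductions are an assumption rather than a property of any real process, and the model ignores leakage (static) power entirely.

```python
def dynamic_power(activity: float, capacitance_f: float, voltage_v: float, freq_hz: float) -> float:
    """Textbook CMOS switching power, P = alpha * C * V^2 * f, in watts.

    Leakage (static) power is deliberately ignored in this simplified model.
    """
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


# Hypothetical inputs for illustration only; not measurements of any real chip.
ACTIVITY = 0.2   # fraction of the circuit switching each cycle
FREQ_HZ = 3.0e9  # same 3 GHz clock in both cases

baseline = dynamic_power(ACTIVITY, 1.0e-9, 1.0, FREQ_HZ)
# Assumption: the smaller process cuts switched capacitance and supply voltage by 10% each.
shrunk = dynamic_power(ACTIVITY, 0.9e-9, 0.9, FREQ_HZ)

print(f"baseline {baseline:.3f} W, shrunk {shrunk:.3f} W, ratio {shrunk / baseline:.3f}")
```

Because voltage enters the formula squared, the hypothetical 10% voltage cut does more of the work here than the 10% capacitance cut.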

The smaller scale also helps with heat, since a circuit that draws less power also generates less heat, which leaves the cooling system with less to dissipate.

Naturally, going down to smaller scales introduces obstacles that need to be overcome, but for those who manage it, there are considerable rewards in chip design and performance on offer.

4nm not for Apple?

Cherry-picking notwithstanding, AMD's use of 4nm for its chips does give the company a material advantage over Apple and others who are using 5nm and larger processes.

For Apple's more immediate chip launches, AMD will most likely still be able to crow about its process. Given that the M2 is made on a 5nm process, it is almost certain that Apple will keep using it for the other chips in the generation, such as the M2 Pro, M2 Max, and M2 Ultra.

Apple is more likely to make these sorts of major changes between generations, not mid-generation. Feasibly, that would mean the M3 generation could use 4nm, or even something smaller.

Apple could've used 4nm for the initial A16 Bionic prototypes.

Apple was predicted to use TSMC's 4nm process for its A16 Bionic SoC, but instead it stuck to the more established 5nm version. Sure, the A16 was supposed to offer a major generational leap, but early prototypes drew more power than predicted.

That would have hurt battery life, and the iPhone could have run hotter than Apple deemed acceptable.

We don't know for sure if the prototype version was using a 4nm process, but whatever it was, Apple didn't think it was good enough in that state.

Leapfrogging to 3nm

As the march of progress continues, so does the race to make chips even smaller and more power efficient. Naturally, that means Apple's chip partner TSMC has been working on exactly that.

Reports going back to mid-2022 have Apple signing up to use TSMC's 3-nanometer process. The natural candidates to use it would be the M3 generation of Mac chips, though some have insisted that a mid-generation production process change could happen.

In September, anonymous sources claimed that Apple would be the first company to use the technology. By December, TSMC had reportedly started mass production of 3-nanometer chips for the Mac and iPhone.

A move to 3nm gives Apple a 15% boost in speed at comparable power levels versus a 5nm counterpart, or a 35% reduction in power consumption for comparable performance.
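The two 3nm figures describe alternative operating points rather than gains you get at the same time: a chip can run faster at the same power, or match the old performance while drawing less. This short sketch, taking the article's 15% and 35% figures at face value, converts each into a performance-per-watt multiplier. The `perf_per_watt_gain` helper is hypothetical, not a published TSMC metric.

```python
def perf_per_watt_gain(speed_gain: float = 0.0, power_reduction: float = 0.0) -> float:
    """Relative performance per watt versus the older node.

    speed_gain: fractional speedup (0.15 means 15% faster).
    power_reduction: fractional power saving (0.35 means 35% less power).
    """
    if speed_gain <= -1.0:
        raise ValueError("speed_gain must be greater than -1")
    if not 0.0 <= power_reduction < 1.0:
        raise ValueError("power_reduction must be in [0, 1)")
    return (1.0 + speed_gain) / (1.0 - power_reduction)


# The article's 5nm -> 3nm figures, taken at face value.
iso_power = perf_per_watt_gain(speed_gain=0.15)      # same power, 15% faster
iso_perf = perf_per_watt_gain(power_reduction=0.35)  # same speed, 35% less power

print(f"same power: {iso_power:.2f}x perf/W; same performance: {iso_perf:.2f}x perf/W")
```

On these numbers, the power-saving operating point is the larger efficiency gain, roughly 1.54x versus 1.15x performance per watt.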

Remember, that's all from changing from 5nm to 3nm. It doesn't take into account any other changes Apple could make to increase clock speed, core counts, or whatever other technical voodoo it comes up with in the future.

That 15% speed boost could easily become 20%, or even 30%, if Apple plays its cards right.
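If process and design improvements are independent, their speedups multiply rather than add. Under that simplifying assumption (real chips rarely separate the two so cleanly), this sketch works out how much extra speed design changes would need to contribute for the article's 15% process gain to become 20% or 30% overall. Both helper functions are hypothetical illustrations.

```python
def combined_speedup(*gains: float) -> float:
    """Combine independent fractional speedups multiplicatively (0.15 means 15%)."""
    total = 1.0
    for g in gains:
        total *= 1.0 + g
    return total - 1.0


def design_gain_needed(process_gain: float, target_gain: float) -> float:
    """Extra design-side speedup needed on top of process_gain to reach target_gain."""
    return (1.0 + target_gain) / (1.0 + process_gain) - 1.0


PROCESS_GAIN = 0.15  # the article's 5nm -> 3nm figure, taken at face value

for target in (0.20, 0.30):
    extra = design_gain_needed(PROCESS_GAIN, target)
    print(f"{target:.0%} overall needs about {extra:.1%} from design changes")
```

Because the gains compound, the design side needs to contribute a little less than the simple difference between the target and 15% would suggest.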

With such a performance improvement on offer, it makes sense for Apple to concentrate on making a good 3nm chip rather than increase its workload by shifting to 4nm and then to 3nm. Skipping 4nm reduces R&D effort on its part and avoids time spent on an interim node.

Given the supposed woes of the A16's prototype, it could well be that Apple saw there was a problem and decided that skipping was the best course of action overall.

That skipping could give Apple another considerable performance lead down the road is also a nice bonus.

AMD's posturing shouldn't worry Apple

Going back to AMD's decision to compare against the M1 Pro, the whole thing seems a bit unusual, especially when you're going against the 800-pound gorilla that is Apple.

Poking the beast by claiming you're using 4nm instead of 5nm, and then comparing against a chip released over a year ago, isn't going to win plaudits when there are more powerful comparisons to be made.

Sure, the M1 Pro is powerful, but AMD could easily have tried to take on the M1 Max or M1 Ultra if it were being serious.

When third-party benchmarks of AMD's chip finally surface, its lead over the rest of Apple's arsenal may not be as large as claimed, especially with the prospect of 3nm chips on the horizon.

One argument is that AMD is only going on stage and smack-talking Apple's M1 Pro because it's the most popular or approachable option for consumers looking to buy a MacBook Pro. Except that Apple's not really AMD's target.

By showing it can be in the same ballpark as Apple, AMD has laid down the challenge to Intel, threatening to take PC customers away with promises of performance and battery life. After all, if Apple can worry Intel, and AMD is vaguely in the same area, it stands to reason that AMD should be able to worry Intel too.

If AMD can eat Intel's lunch by posturing against Apple, then that's a win in AMD's book against its long-standing rival.

AMD is a good chip designer. It's kept Intel on its toes for years.

We've said it before. Intel, Apple, AMD: pick one, and competition from the other two has made it stronger.

Operationally, Apple won't care about AMD's claims, especially with a loyal customer base and the prospect of 3nm chips on the horizon. It can easily hold back and speak softly for the moment because it knows a metaphorical big stick is on the way.

By 2025, that could turn into 2nm chip production. Less stick, more tree trunk.

Read on AppleInsider

Comments

First and foremost, the A16 is 4nm. Apple stated that outright. It is the N4 process. The linked The Information article AI covered on December 23 may have some elements of truth in it. After all, chipmaking is hard, or everyone would be doing it. Especially high-end graphics. The quote from Ian Cutress therein says all that needs to be said. But it's Apple Insider who makes the leap there to say that the A16 stayed on 5nm and didn't go to 4nm because of these challenges. That is just wrong, wrong, wrong. The A16 did go to 4nm (N4). Apple touted this in its presentation. I find this insistence otherwise, in multiple articles by two different members of the AI staff (Wesley twice and now Malcolm), to be just inexplicable.

The second error is more of a detail, but it's an important one if you're going to be editorializing about Apple and chipmaking. TSMC's so-called "4nm" is the third generation of its 5nm (N5) FinFET platform. It's not a "die shrink," to reference Malcolm's 2019 article where he laid out some of the factors driving Apple's A-series chip production. It uses the same design library. N4 is a second "Tock" not a "Tick," to use the same terms Malcolm used in 2019. That's why Apple could easily revert to the A15 graphics designs for A16 (while staying with N4 for A16, instead of N5P used for the A15), as rumored/leaked in the aforementioned The Information article.

TSMC provides a definitive English-language source of information about how these “process technologies” relate to one another: https://www.tsmc.com/english/dedicatedFoundry/technology/logic

N2 (due in 2025) was recently announced, but it's not clear how it is related to N3—I'll guess that means the relationship between N3 and N2 is similar to the relationship between N5 and N4, that is, N2 will use the N3 FinFlex design library.

Every company cherry picks at their presentations to highlight their performance advantages. Apple, Intel, Qualcomm and AMD all do this. The article mentions (strangely) that they don't compare themselves to the M1 Max or the M1 Ultra. This is because the 7040 is not in the same pricing category (and the Ultra is a desktop chip...). The 7040 is not their high-end chip; it's a thin and light chip. They have a separate Dragon Range chip for high-performance laptops that is a 16-core Zen 4 chip that runs at a much higher TDP. And obviously the M1 Ultra should more rightfully be compared to the desktop 7950X or a Threadripper chip, especially when factoring in costs.

AMD is not going to convince the x86 crowd that - all of a sudden after all these decades - the ability to make thin and light devices that have 12 hour battery life is more important than single core performance. AMD has played this game for ages too, and everyone would know that if they shift gears after all this time it will be an admission that they can't win it; that they have no answer for things like this: https://wccftech.com/intel-core-i9-13900ks-worlds-first-6-ghz-cpu-now-available-for-699-us/

So they are going to the "on second thought, power-per-watt is what REALLY matters" crowd and saying "hey, we can beat Apple on power per watt too!" No, their chips won't beat the M1 Max or M1 Ultra, but who cares. A laptop - these are laptop chips so they won't compete with the Mac Studio - with the M1 Max starts at $2900! AMD Ryzen 9 systems start at $1500.

They are letting people who have been paying $2000 for entry-level 14" and 16" MacBook Pros know that they can get the same power per watt for hundreds less. That argument isn't going to work against Intel, because no one who buys Intel cares about the latest Ryzen 9 having great power per watt. They care about whether the Ryzen 9 can beat the i9-12900HX in horsepower. AMD has never matched Intel's single-core performance, and thanks to big.LITTLE the Intel Core i9 has 16 cores to the Ryzen 9's 8 (and 24 threads to the Ryzen 9's 16), so it can't match the multicore/SMT performance either.

Look, stuff like this "By showing it can be in the same ballpark as Apple, AMD has laid down the challenge to Intel, threatening to take PC customers away with promises of performance and battery life. After all, if Apple can worry Intel, and AMD is vaguely in the same area, it stands to reason that AMD should be able to worry Intel too. If AMD can eat Intel's lunch by posturing against Apple" is a flat out refusal to deal with reality. Despite all of the grandiose claims since Apple Silicon made its debut in 2020, Macs have made no real increase in market share. According to all 3 major research firms - Gartner, IDC and Canalys - Apple had anywhere from 9% to 11% of the market last year. To put it another way, ChromeOS outsold macOS for the third year in a row.

Get it through your heads. Apple Silicon is no threat to Intel. The only threat to Intel was Intel, meaning their badly mishandling their fab situation, meaning that they were stuck on 14nm for 6 years and then 3 years on 10nm. When Intel issues 7nm chips - possibly 3Q 2023 but no later than 1Q 2024 - that will be over. AMD knows this. They know that their advantages that were due to being 4 generations ahead of Intel on process node are going to be essentially over by 4Q 2024. Intel already adopted big.LITTLE on 12th gen. They are going to add tiles on 14th gen. And 15th gen they are going to be on a 5nm process. Their window for making the same inroads against Intel in laptops that they made against Intel for gaming desktops is over. They missed it. So they are pivoting to try to pick up some MacBook Pro consumers, because they know that at this point they aren't going to get the Dell and HP laptop customers. At CES, several manufacturers revealed that they are actually going Intel-only with their laptops in 2023, and they aren't going to offer any AMD variants at all.

Intel is #1. AMD, Apple and I guess starting in 2024 Qualcomm (though to be honest ... they have no chance) are going to fight each other for #2. Good luck!

The 5000 and 6000 series laptop chips from AMD were more powerful and more power efficient than Intel's offerings, and the 7000 chips look to continue that trend. I suspect that Apple was brought up for the AI/machine learning comparison, as Intel does not currently offer a chip with machine learning, while AMD is integrating an FPGA into their chips from their Xilinx acquisition.

The Apple disruption is the ability to improve their in-house OS and their in-house hardware in tandem in an elegant way, combining speed, power, and battery life. Chip-only suppliers without an in-house OS are the past moving forward.