AppleInsider · Kasper's Automated Slave

-

Apple Music expands annual subscription option beyond gift cards

People with an existing Apple Music subscription can now switch to a cheaper annual plan without having to buy a gift card -- though the option is relatively hidden.

Image Credit: TechCrunch

To find it, subscribers must go into the iOS 10 App Store, scroll to the bottom of the Featured tab, and select their Apple ID, TechCrunch noted on Monday. This will prompt for a password, after which people can tap on "View Apple ID," then finally on the Subscriptions button to check their Apple Music membership.

A one-year individual plan is $99 in the U.S., a significant discount off the nearly $120 it costs to go month-by-month. Unfortunately, there are no annual tiers for student or family accounts, and the option is invisible to people who aren't already using Apple Music.

In fact, Apple has so far downplayed the annual option in marketing. The gift card first appeared in September, but is only highlighted in the company's online store.

Offering a discounted plan may nevertheless help Apple compete with the market leader in on-demand streaming, Spotify. The latter is still selling 12-month gift cards for the same price it costs to go month-to-month.

Apple is also aiming to compete with Spotify by offering more original video content. Recently it premiered "Planet of the Apps," and hired two former Sony executives to spearhead future efforts.

-

How to trigger Siri on your iPhone instead of HomePod

With HomePod, accessing Apple's Siri technology is more convenient than ever. But users might want to trigger the virtual assistant on an iPhone rather than a speaker that blasts answers to anyone within earshot. Here's how to do it.

As we discovered in our first real-world hands-on, HomePod is an extremely compact, yet undeniably loud home speaker. At home in both small apartments and large houses, HomePod's place-anywhere design and built-in beamforming technology generate great sound no matter where it's set up.

Siri comes baked in, taking over main controls like content playback, volume management, HomeKit accessory commands and more. As expected, the virtual assistant retains its usual assortment of internet-connected capabilities, including ties to Apple services like Messages and various iCloud features.

However, having Siri read your text messages out loud might not be ideal for some users, especially those with cohabitants.

Apple lets you deny access to such features, called "personal requests," during initial setup, but doing so disables a number of utilities. Alternatively, users can tell HomePod, "Hey Siri, turn off Hey Siri" or turn off "Listen for 'Hey Siri'" in HomePod settings in the iOS Home app. Both methods, however, make HomePod a much less useful device.

Luckily, there is a way to enjoy the best of both worlds, but it might take some practice. The method takes advantage of Apple's solution for triggering "Hey Siri" when multiple Siri-capable devices are in the same room. More specifically, users can manually trigger "Hey Siri" on an iPhone or iPad rather than HomePod, allowing for more discreet question and response sessions.

According to Apple, when a user says, "Hey Siri," near multiple devices that support the feature, the devices communicate with each other over Bluetooth to determine which should respond to the request.

"HomePod responds to most Siri requests, even if there are other devices that support 'Hey Siri' nearby," Apple says.

There are exceptions to Apple's algorithm, however. For example, the device that heard a user's request "best" or was recently raised will respond to a given query. Use this knowledge to your advantage to create exceptions to the HomePod "Hey Siri" rule.

When you desire a bit of privacy, simply raise to wake a device and say "Hey Siri." A more direct method involves pressing the side or wake/sleep button on an iPhone or iPad to trigger the virtual assistant, which will interact with a user from that device, not HomePod.

On the other hand, if you want to ensure HomePod gets the message rather than an iOS device, place your smartphone or tablet face down. Doing so disables the "Hey Siri" feature, meaning all "Hey Siri" calls are routed to HomePod.

If you're having trouble with "Hey Siri" or if more than one device is responding to the voice trigger, ensure that all devices are running the most up-to-date version of their respective operating system.

-

Apple's Crash Detection saves another life: mine

Of all the new products I've reviewed across 15 years of writing for AppleInsider, Apple Watch has certainly made the most impact on me personally. A couple weeks ago it literally saved my life.

Apple's Crash Detection can and has saved lives

I'm not the first person to be saved by paramedics alerted by an emergency call initiated by Crash Detection. There have also been complaints of emergency workers inconvenienced by false alert calls related to events including roller coasters, where the user didn't cancel the emergency call in time.

But I literally have some skin in the game with this new feature because Crash Detection called in an emergency response for me as I was unconscious and bleeding on the sidewalk, alone and late at night. According to calls it made, I was picked up and on my way to an emergency room within half an hour.

Because my accident occurred in a potentially dangerous and somewhat secluded area, I would likely have bled to death if the call hadn't been automatically placed.

Not just for car crashes

Apple created the feature to watch for evidence of a "severe car crash," using data from its devices' gyroscopes and accelerometers, along with other sensors and analysis that determines that a crash has occurred and that a vehicle operator might be disabled or unable to call for help themselves.

More than five hours later I was shocked how much blood was on the back of my ER mattress--and later, how much I saw on the sidewalk!

In my case, there was no car involved. Instead, I had checked out a rental scooter intending to make a quick trip back to where I'd parked my car.

But after just a couple blocks, my trip was sidelined by a crash. I was knocked unconscious on the side of a bridge crossing over a freeway.

A deep gash above my eye was bleeding heavily. I began losing a lot of blood.

I didn't regain consciousness for another five hours, leaving me at the mercy of my technology and the health workers Crash Detection was able to contact on my behalf.

Crash Detection working as intended

Even though I wasn't driving a conventional vehicle, Crash Detection determined that I had been involved in a serious accident and that I wasn't responding. Within 20 seconds, it called emergency services with my location. Within thirty minutes I was loaded in an ambulance and on the way to the emergency room.

When I came to, I had to ask what was happening. That's when I first found out that I was getting my eyebrow stitched up and had various scrapes across the half of my face that I had apparently used to break my fall. I couldn't remember anything.

Even later, after reviewing the circumstances, I had no recollection of an accident occurring. When visiting the scene of the crash, I could only see the aftermath. Blood was everywhere, but there was not enough there to piece together what exactly had happened.

The experience was a scary reminder of how quickly things can happen and how helpless we are in certain circumstances. Having wearable technology watching over us and providing an extra layer of protection and emergency response is certainly one of the best features we can have in a dangerous world.

Almost always, I find myself in the position of making difficult decisions and figuring out how to get out of predicaments. But on the rare occasions where I've been knocked out, which has only happened a few times in my entire life, there's a more difficult realization that I'd be completely powerless in the face of whatever problems might occur.

With the amount of blood that I was losing, I couldn't have lain there very long before I would have died. Loss of consciousness and blood is a bad combination for threatening brain damage, too.

I am grateful that I'm living in the current future where we have trusted mobile devices that volunteer to jump in to save us if we are knocked out.

Who would opt-in to Crash Detection

Last year, Apple's introduction of Crash Detection on iPhone 14 models, Apple Watch Series 8, and Apple Watch Ultra, was derided by some who worried that the volume of false alerts would be a bigger problem than the few extra lives that might be saved by such a tool.

There were false alerts noted at ski lifts, roller coasters, and by other emergency responders who noted an uptick in calls detailing an incident where the person involved didn't respond to explain it wasn't actually an emergency.

Several observers insisted at the time that the Crash Detection system should be "opt-in," similar to the Fall Detection feature Apple had introduced on Apple Watch to report less dramatic accidents suffered by people over 55.

However, it's impossible to have the system only ever working when it is essential. In my situation, I wouldn't have thought to turn on a system to watch me ride a scooter a few blocks. I probably would have assumed that a scooter ride was less risky than driving, despite having no seatbelt, no airbag and no other protective gear.

So I'm also particularly glad Apple doesn't restrict Crash Detection only to car accidents!

My Apple Watch still works but was scratched up pretty well

The fact that my watch and phone had been monitoring me for over a year without incident before a situation occurred where they literally could spring into action to save me is based entirely upon the idea that they are working in the background, not something I'd need to assume I needed. That's the right assumption to make. It literally saved me.

Crash Detection is a primary example of a new, innovative iOS feature update that adds tremendous value to the products I already use, without any real thinking on my part. It just works. And more importantly, it saved my life when it did.

Exercise your Emergency Contacts

Despite having an iPhone that's set up with a European phone number and home address, Crash Detection "just worked" here in the United States. It dialed the right number for the location where I crashed, getting me help efficiently and quickly. That's great.

However, I realized after I woke up that I had two emergency contacts that should also have been notified. My phone did its job correctly, but I'd listed both my partner and a family member with old phone numbers they no longer use. That meant that Crash Detection had called the police for me, but wasn't able to notify my designated emergency contacts.

If you haven't taken a recent look at your emergency contact data, now might be a good time to check to make sure that everything is in order. Note that when you update a phone number, it doesn't necessarily "correct" your defined emergency contacts.

You may need to delete and reestablish your desired emergency contact and their phone number, as the system only calls the specific contact number you've supplied. It doesn't run through your contact trying each number you've ever entered for that person.

In my case, there was another Apple service that jumped in to help. Because I was sharing my location with iCloud, it was easy for my partner in another time zone, far away, to find out where I had been taken by using the Find My app, and then to call the hospital to find out my condition.

But if Crash Detection hadn't been working, I might not have survived -- or things could have ended up much worse: badly injured or even mugged while lying unconscious in a sketchy area in the middle of the night.

I'm not the only AppleInsider staffer whose life was saved by the Apple Watch, and I probably won't be the last. So thanks, Apple!

-

Editorial: As Apple A13 Bionic rises, Samsung Exynos scales back its silicon ambitions

A decade after Apple and Samsung partnered to create a new class of ARM chips, the two have followed separate paths: one leading to a family of world-class mobile silicon designs, the other limping along with work that it has now canceled. Here's why Samsung's preoccupation with unit sales and market share failed to compete with Apple's focus on premium products.

Apple's custom "Thunder" and "Lightning" cores used in its A13 Bionic continue to embarrass Qualcomm's custom Kryo cores used in its Snapdragon 855 Plus, currently the leading premium-performance SoC available to Android licensees.

The A13 Bionic is a significant advancement of Apple's decade-long efforts in designing custom CPU processor cores that are both faster and more efficient than those developed by ARM itself while remaining ABI compatible with the ARM instruction set. Other companies building "custom" ARM chips, including LG's now-abandoned NUCLUN and Huawei's HiSilicon Kirin chips, actually just license ARM's off-the-shelf core designs.

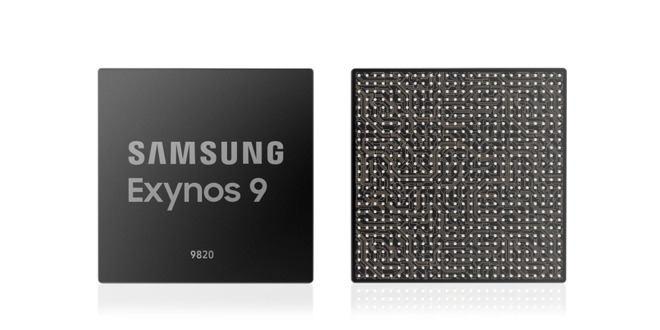

To effectively compete with Apple and Qualcomm, and stand out from licensees merely using off-the-shelf ARM core designs, Samsung's System LSI chip foundry attempted to develop its own advanced ARM CPU cores, initiated with the 2010 founding of its Samsung Austin R&D Center (SARC) in Texas. This resulted in Samsung's M1, M2, M3, and M4 core designs used in a variety of its Exynos-branded SoCs.

The company's newest Exynos 9820, used in most international versions of its flagship Galaxy S10, pairs two of its M4 cores along with six lower power ARM core designs. Over the past two generations, Samsung's own core designs have, according to AnandTech, delivered "underwhelming performance and power efficiency compared to its Qualcomm counter-part," including the Snapdragon 855 that Samsung uses in U.S. versions of the Galaxy S10.

The fate of Samsung's SARC-developed M-series ARM cores appeared to be sealed with reports earlier this month that Samsung was laying off hundreds of staff from its chip design team, following the footsteps of Texas Instruments' OMAP, Nvidia Tegra, and Intel Atom in backing out of the expensive, high-risk mobile SoC design space. It is widely expected that Samsung will join Huawei in simply repackaging ARM designs for future Exynos SoCs.

This development highlights the vast gap between what Apple has achieved with its A-series chips since 2010 compared to Samsung's parallel Exynos efforts over the same period. This is particularly interesting because in 2010 Apple and Samsung were originally collaborators on the same ancestral chip of both product lines.

Partners for a new Apple core

In 2010, Samsung and Apple were two of the world's closest tech partners. They had been collaborating to deliver a new ARM super-chip capable of rivaling Intel's Atom, which scaled-down Chipzilla's then-ubiquitous x86 chip architecture from the PC to deliver an efficient mobile processor capable of powering a new generation of tablets.

ARM processors had been, by design, minimally powerful. ARM had originated in a 1990 partnership between Apple, Acorn, and chip fab VLSI to adapt Acorn's desktop RISC processor into a mobile design Apple could use in its Newton MessagePad tablet. Across the 1990s, Newton failed to gain much traction, but its ARM architecture processor caught on as a mobile-efficient design popularized in phones by Nokia and others.

Starting in 2001, Apple began using Samsung-manufactured ARM chips in its iPods, and since 2007, iPhone. The goal to make a significantly more powerful ARM chip was launched by Apple in 2008 when it bought up P.A. Semi. While it was widely reported that Apple was interested in the company's PWRficient architecture, in reality, Apple just wanted the firm's chip designers to build the future of mobile chips, something Steve Jobs stated at the time. Apple also partnered with Intrinsity, another chip designer specializing in power-efficient design, and ended up acquiring it as well.

Samsung's world-leading System LSI chip fab worked with Apple's rapidly assembled design team with talent from PA Semi and Intrinsity to deliver ambitious silicon design goals, resulting in the original new Hummingbird core design, capable of running much faster than ARM's reference designs at 1 Gigahertz. Apple shipped its new chip as A4, and used it to launch its new iPad, the hotly anticipated iPhone 4, and the new Apple TV, followed by a series of subsequent Ax chip designs that have powered all of its iOS devices since.

Frenemies: from collaborators to litigants

The tech duo had spent much of the previous decade in close partnership, with Samsung building the processors, RAM, disks and other components Apple needed to ship vast volumes of iPods, iPhones and now, iPad. But Samsung began to increasingly decide that it could do what Apple was doing on its own, simply by copying Apple's existing designs and producing more profitable, finished consumer products on its own.

Samsung's System LSI founded SARC and went on to create its independent core design and its own line of chips under its Exynos brand. That effectively forced Apple to scale up its chip design work, attempt to second-source more of its components and work to find an alternative chip fab.

Increasing conflicts between the two tangled them in years of litigation that ultimately resulted in little more than a very minor windfall for Apple and an embarrassing public view into Samsung's ugly copy-cat culture that respected its own customers about as little as its criminal executives respected bribery laws.

As Samsung continued to increasingly copy Apple's work to a shameless extent, it quickly gained positive media attention for selling knockoffs of Apple's work at cut-rate prices. But it took years for Apple to cultivate alternative suppliers to Samsung, and it was particularly daunting for Apple to find a silicon chip fab partner that could rival Samsung's System LSI chip fab.

Samsung takes its act solo

By 2014, Samsung had delivered years of nearly identical copies of Apple's iPhones and iPads, and its cut-rate counterfeiting had won the cheering approval and endorsement of CNET, the Verge and Android fan-sites that portrayed Samsung's advanced components as the truly innovative technology in mobile devices, while portraying Apple's product design, OS engineering, App Store development, and other work as all just obvious steps that everyone else should be able to copy verbatim to bring communal technology to the proletariat. Apple was disparaged as a rent-seeking imperialist power merely taking advantage of component laborers and fooling customers with propaganda.

What seemed to escape the notice of pundits was the fact that while Samsung appeared to be doing an okay job of copying Apple's work, as it peeled away to create increasingly original designs, develop its own technical direction, and pursue software and services, the Korean giant began falling down.

Samsung repeatedly shipped fake or flawed biometric security, loaded up its hardware with a mess of duplicated apps that didn't work well at all, splashed out technology demos of frivolous features that were often purely ridiculous, and repeatedly failed to create a loyal base of users for its attempted app stores, music services, or its own proprietary development strategies.

And despite the vertical integration of its mobile device, display, and silicon component divisions that should have made it easier for Samsung to deliver competitive tablets and create desirable new categories of devices including wearables, its greatest success had merely been creating a niche of users who liked the idea of a smartphone with a nearly tablet-sized display, and were willing to pay a premium for this.

Despite vast shipments of roughly 300 million mobile devices annually, Samsung was struggling to develop its custom mobile processors. In part, that was because another Samsung partner, Qualcomm, was leveraging its modem IP to prevent Samsung from cost-effectively even using its own Exynos chips. For some reason, the communist rhetoric that tech journalists used to frame Apple as a villainous corporation pushing "proprietary" technology wasn't also applied to Qualcomm.

Apple's revenge on a cheater

Unlike Samsung, Apple wasn't initially free to run off on its own. There simply were not any alternatives to some of Samsung's critical components-- especially at the scale Apple needed.

In particular, it was impossible for Apple to quickly yank its chip designs from Samsung's System LSI and take them to another chip fab, in part because a chip design and a particular fab process are extremely interwoven, and in part because there are very few fabs on earth capable of performing state of the art work at scale: perhaps four in total, and each of those fabs' production was booked up because fabs can't afford to sit idle.

It wasn't until 2014, after several years of exploratory work, that Apple could begin mass producing its first chip with TSMC, the A8. That new processor was used to deliver Apple's first "phablet" sized phones, the iPhone 6 and iPhone 6 Plus. These new models absolutely eviscerated the profits of Samsung's Mobile IM group. The fact that their processors were built by Samsung's chip fab arch-rival was a particularly vicious twist of the same blade.

Apple debuted the iPhone 6 alongside a preview of Apple Watch. A few months later, Apple Watch went on sale and rapidly began devastating Samsung's flashy experiment with Galaxy Gear -- the wearable product line it had been trying to sell over the past two years. Galaxy Gear had been the only significant product category that Samsung appeared capable of leading in, simply because Apple hadn't entered the space. Now that it had, Samsung's watches began to look as commercially pathetic as its tablets, notebooks, and music players.

Apple's silicon prowess helped Apple Watch to destroy Samsung's Galaxy Gear

Samsung's inability to maintain its premium sales and its attempts to drive unit sales volume instead with downmarket offerings haven't resulted in the kind of earnings that Apple achieves by selling buyers premium products that are supported longer and deliver practical advancements.

As a result, Apple is now delivering custom silicon not just for tablets and phones, but also for wearables and a series of specialized applications ranging from W2 and H1 wireless chips that drive AirPods and Beats headphones, to its T2 security chips that handle tasks like storage encryption, media encoding, and Touch ID for Macs.

-

A6X: How Apple's iPad silicon Disrupted mobile video gaming

To maintain its momentum in tablets with iPad, in 2012 Apple completed two additional new custom silicon projects specifically for its tablets. These reached-- and then exceeded-- the graphics of Sony's PlayStation Vita, then the state-of-the-art in handheld video gaming. Here's a look at how Apple 'embraced and extended' mobile gaming.

By the end of 2012, Apple's strategy of developing its custom mobile silicon was clearly a success. Proprietary silicon had enabled the company to deliver new products with features that the industry hadn't even anticipated.

That rush of innovation, in turn, helped it to drive high volume sales that rapidly brought down its costs and paid for new silicon advancements while creating a vast installed base of users that drove an expanding ecosystem of custom, tablet-optimized software.

This allowed Apple to position iPad as an alternative for many low-end PCs and netbooks, as well as being an entirely new kind of "Post-PC" product that could appeal to new users who preferred simplicity and serve entirely new roles for mobile workers. It also allowed Apple to Disrupt the existing market for dedicated mobile video gaming devices.

iPad and iPhone specifically replaced the combination of less-expensive hardware subsidized by more expensive games with a solution that better served most users: more expensive hardware with less expensive games. This was achieved in part due to Apple's proprietary control over its own silicon.

Apple silicon wasn't a secret; its intent was

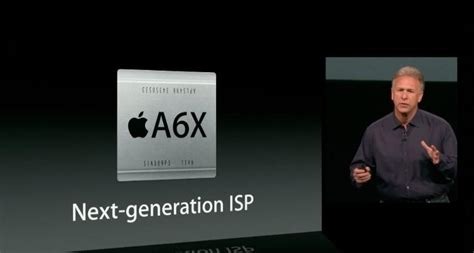

Apple's silicon strategy wasn't exactly a secret. It was publicly known that it had acquired P.A. Semi in 2008. Steve Jobs had even stated on record that the deal was aimed at building mobile chips for iPod and iPhone. It wasn't known, however, what exactly Apple's silicon team was working toward until it released a new chip: A4 in 2010, then A5 in 2011, then A5X in 2012 and the entirely custom core design of A6 later that year. Each new chip was announced only when the product it powered was being introduced.

Apple detailed each new Ax chip just as products using it became available

Up to that point, most of the silicon microprocessors that had played a significant role in driving mass-market consumer technology were general-purpose designs, speculatively developed to be sold as discrete components for a variety of uses.

Chip vendors laid out their plans as a public roadmap showing when new technological advances would arrive. Upcoming chips from Motorola; Intel; the PowerPC partners; and mobile ARM chips from Qualcomm, Texas Instruments, Nvidia, Samsung, and others were charted out well in advance and made available to any buyers seeking to use them.

That had typically been the case because the expense and effort of developing a significantly custom new chip was so vast that it didn't seem workable for one company to create their own advanced processors. Additionally, to help create markets for their upcoming chips in the pipeline, chip vendors wanted device makers to be aware of what they could expect, in order to plan the future together.

This public, distributed planning of the future of chips and the devices using them meant that few products could really be very surprising.

The curse of commodity crushing Apple

Across the 1990s, the expected, incremental enhancements of commodity had ground down the PC industry into a boring conveyor belt of PCs, each slightly improved over the last. It was a difficult market for Apple to stand out in, as the primary remaining way it could differentiate its Macs was through industrial design rather than introducing advanced new hardware technology.

Apple's original Macintosh models, and later initiatives such as AV DSPs and PowerPC, had once attempted to drive the technical underpinnings of the Mac in silicon. But in a commodity world, Apple's ability to integrate technologies together was challenged by the flexibility and ease of hardware cloners to ride the wave of cheap commodity and catch up to Apple with the help of a software company delivering a copy of Apple's user interface and app development work.

Apple's few million unit sales of PowerPC Macs struggled to compete with commodity PCs

This worked so well for so long in PCs that virtually everyone assumed that it would carry forward into mobile devices, with Google's Android playing the part of Microsoft's Windows. The superiority of commodity also seemed to be an unchallenged fact in silicon, where Intel had solidly proven the economies of scale behind x86 chips in PCs.

Neither Acorn nor even an industry consortium of the heavyweights once lined up behind PowerPC could ultimately challenge x86 in PCs. On its own, Apple's annual sales of around 4 million PowerPC Macs certainly couldn't sustain a challenge to Intel's technical development supported by the combined sales of around 300 million x86 PCs.

Video gaming defied commodity

There was a notable market exception to this commodity PC model, however: video game consoles. While PCs languished toward x86 monotony in the 1990s, Sony, Nintendo, and even Microsoft followed the original model of Apple's Macintosh by building unique, proprietary hardware, commonly powered by custom-developed MIPS or PowerPC silicon.

Video game consoles were able to buck the trend of PC commodity by offering unique capabilities powered by advanced silicon CPUs and GPUs, along with unique ecosystems of exclusive games software. Games consoles sold in the tens of millions of units, often achieved by discounting their hardware and then making back profits by charging video game developers steep licensing fees to write games for their platform.

Consoles like Sony's PS3 shipped in volumes that supported custom silicon, propped up by game licensing fees

Microsoft took notice of video games in the mid-90s and first sought to tie gaming to Windows, then introduced Xbox to pull the non-PC gaming market under its control. Apple took note of gaming as well, and while it struggled to attract material attention to the Mac, it found some success in licensing games for iPods in the model of conventional licensed gaming platforms.

In fact, iTunes's iPod Games was clearly the origin of the iOS App Store, which brought gaming-- along with general-purpose apps-- to iPhone and then iPad using a Walled Garden model that resembled the licensing programs of video game consoles.

However, Apple changed the script by continuing to sell premium hardware at a profit, while encouraging third-party developers to create original apps under an App Store where there were only minimal fees to participate. iTunes charged only a 30% cut on sales, a share dramatically lower than any previous market for software had charged.

The return of integration

At the same time, the economics favoring WinTel commodity in PCs changed as personal computing shifted from conventional Windows PCs into mobile devices, where tight integration was far more important than the flexibility of taking a commodity video card, logic board, a processor, and loading an OS flexible enough to make it all work well enough.

Apple had already proven that its tight integration of iPod had created something far better than anything Microsoft's partners could loosely assemble in the model of a PC. Within just a couple years, iPhone's similarly tight integration had upended the markets of licensed phone platforms including Symbian, JavaME, Windows Mobile, and Palm OS.

Additionally, in the emerging market for increasingly sophisticated mobile devices powered by ARM chips, the complexity of silicon shifted from a central microprocessor to an entire "System on a Chip," including CPUs, GPUs, memory and storage controllers, and various other components all in the same package. ARM SoC makers were now doing vast integration work, deciding which components to include and tuning them to achieve specific goals in efficiency, economy, performance and other factors.

That makes it obvious why Apple wanted to own its own mobile SoC silicon and drive this highly customizable integration by itself, even while it was also delegating away its Mac CPU design to Intel. iPod, and now iPhone, were showing that Apple could achieve a scale in mobile devices in the double-digit millions that it could never have hoped to achieve in desktop and notebook Macs.

Across its first two years of iPad, Apple shared its A4 and A5 silicon across every mobile device it was making. In 2012, it not only finished its first iPad-specific chip but also created a radically enhanced second generation of that chip just six months later.

Apple's sales volumes drive a Swift A6X

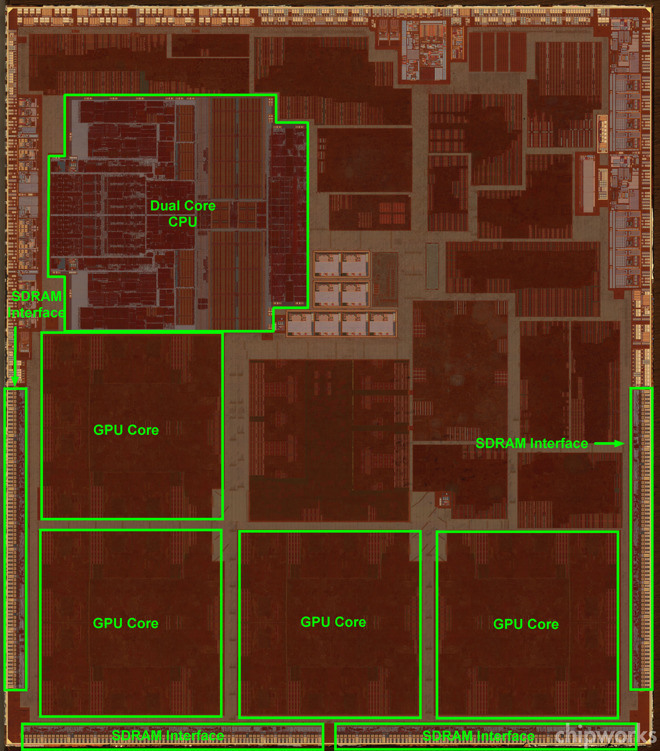

A primary example of how Apple's consistently reliable, high volume sales of finished products were driving the progress of its silicon-- and vice versa-- was the October 2012 delivery of its new iPad 4 using Apple's custom A6X, which arrived just six months after the introduction of iPad 3 and its A5X.

"We're not taking our foot off the gas," Apple's chief executive Tim Cook said at the introduction of the A6X-powered iPad 4. Rather than changing the design of the new model, it added FaceTime HD and the new Lightning connector of iPhone 5 while remaining compatible with existing apps and accessories.

A6X | Source: Chipworks

Apple's new A6X chip once again delivered twice the performance in CPU and GPU of the A5X from just six months earlier. It achieved this in CPU by moving to Apple's new A6 Swift core design shared with iPhone 5.

In graphics, A6X upgraded its GPU from PowerVR SGX543 to PowerVR SGX554. The new chip also benefitted from Samsung's process node reduction that scaled down the size of the chip, making it faster and also less expensive to produce.

That GPU jump was particularly noteworthy because iPad 3 had debuted with the same GPU as Sony's PlayStation Vita, within three months of that portable gaming tablet's debut. Just six months later, Apple radically upgraded its iPad 4 graphics with an entirely new custom chip with twice the graphics performance.

Sony's PS Vita was cheaper than iPad but sold in far lower volumes

Sony continued to sell its PS Vita-- with the same GPU it debuted with-- across the rest of the decade: 2011-2019. Across its first two years of sales, PS Vita sold an estimated 4 million units. In contrast, Apple sold about 32 million iPads in Fiscal 2011, followed by 58 million in FY 2012, the first year of PS Vita sales.

That pretty clearly demonstrates why Apple could afford to speculatively invest in such a dramatic silicon upgrade for iPad just within 2012. Sony had no plans to constantly upgrade Vita's GPU. Within a few years, Sony determined that the market for handheld gaming couldn't support further custom hardware at all.

Cheap App Store mobile games were destroying the business model of taxing software developers selling expensive AAA titles.

iPad bifurcation to reach new price points

In 2012, a significant number of Apple's iPad sales were its cheaper, older iPad 2 model, which it continued to sell. However, all of its iPad sales were generating profits to develop new higher-end iPads and the silicon to power them. Other tablet makers, notably Samsung, were frequently giving away their tablets just to induce demand.

If Apple's iPad sales had only reached sales of a few million units each year-- like Sony's PS Vita or more conventional tablets like Samsung's Galaxy Tab or Microsoft Surface-- it could not have justified the proprietary development of increasingly advanced custom silicon each year to power successive generations.

If Apple had remained content just selling iPad 2 across 2012, it would not have developed faster, more powerful A5X and A6X silicon, and would not have been capable of delivering higher-end products like iPad 3 and iPad 4 to sell to a higher-end niche of buyers. Those premium products created a halo over its entry-level iPad 2, as well as the iPad mini--a new smaller version of the A5-powered, non-Retina display iPad 2--at the end of 2012.

Apple's premium iPad 4 could attract premium sales to users who wanted its Retina display. iPad 2 and iPad mini could serve broad audiences where they could attract away buyers who might otherwise have bought a netbook, an entry-level notebook, or a game console tablet like PS Vita.

iPad 4 dramatically increased performance in the same form factor

Disruption of expensive video games

Apple's massive iPad sales were not only accelerating the pace of its silicon advancement but were also creating an installed base for developers to write tablet-optimized software for it. Games represented the majority of the app titles being created for iPad and the App Store in general.

Sony's PS Vita was hailed at launch for delivering graphics on par with the original Xbox, but it failed to support the same business model of expensive AAA gaming titles that had previously driven gaming device and console sales. It was becoming clear that iPad and smartphones, in general, were not only eating up the market for PCs but would also disrupt gaming by better serving users who previously had to pay much more for expensive games that were effectively over-serving the market.

As time went on, it was clear that mobile gaming largely destroyed any real future for dedicated portable gaming, in part because while purpose-built gaming devices could be better at gaming, they were not generally very good for things other than gaming. iPad delivered a wider range of less expensive games, but could also browse the web, play media, and perform a variety of general computing tasks.

iPad, destroyer of worlds

Beyond video gaming-oriented tablets, iPad was also general-purpose enough to eat up the market for specialized devices like e-ink readers, where the value of a dedicated book reader was similarly overcome by the iPad's advantage of being one device with many uses.

Along with iPhone, iPad also ate into the markets for standalone DVD-players, GPS devices, point and shoot cameras, and eventually even seatback media players. Much of its success came from Jobs' initial definition of a device being ultra-simple but also differentiated from phones with its own tablet-optimized software.

Analysts imagined Apple would sell big TVs for $2000. iPad was a small TV under $500.

Back in 2012, Piper Jaffray analyst Gene Munster was adamantly predicting that Apple would soon begin selling TV sets running iOS. What he and so many others missed was that Apple was already building tens of millions of mobile television replacements with iPad, a device that could stream video, capture video, play video games, read books, browse the web and do many other things that a conventional TV couldn't, at a price that was competitive, and at a significant profit.

Nobody was making money selling TV sets because they were a commodity.

Most notably, iPad helped kill the notion that "commodity always wins." The idea that computing would always be driven by Intel chips and Windows software had been accepted by most analysts and journalists as gospel.

Apple's iPad proved that compelling hardware, driven by advanced new silicon, could undermine the commodity PC market and even overshadow it, building out a vast installed base of users that shifted the priorities of consumers and even the corporate enterprise that was supposedly beholden to WinTel and uninterested in any alternatives.

Commodity continues to lose in tablets

The first major challenge to iPad had been Google's industry consortium behind 2011's Android 3.0 Honeycomb, which arrogantly marched out in public as if it would be effortless to kick Apple to the curb in tablets. Instead, Google, Motorola, Samsung, and other major Honeycomb partners were burned with a deathly sting of disinterest in their large, complicated busy-box tablets with poor integration between their hardware and software.

That came despite strident efforts by Google to support Adobe Flash in Android. Google subsequently acquired Motorola Mobility, thinking that it could better compete with Apple by leveraging some of its own vertical integration.

However, while Motorola ostensibly gave Google product design and manufacturing capabilities, it wasn't following Apple's gameplan very closely. Just like Samsung, Motorola could develop and produce its own chips but was instead using silicon from a variety of competitors.

Motorola's Xoom tablet and Atrix phone used Nvidia's Tegra 2. The successor Xyboard and Atrix 2 used a TI OMAP4. It also sold phones with Qualcomm chips. Once again, Android was leading companies to failure.

In 2012, Microsoft unveiled its own Surface RT, with similar expectations of crushing Apple's iPad with an integrated device with more similarities to a conventional PC. Microsoft also hoped to line up all the support of its Windows software developers. However, Surface RT's failure to sell more than a million units helped kill any hopes of creating ARM-based software that could run on Windows RT.

By the end of 2012, and despite now owning Motorola, Google launched a second strategy in Android tablets: plans with PC maker Asus to deliver Nexus 7, a mini-tablet that was so terrible that even Android's most devoted fans described it as an "embarrassment to Google."

Google continued to pursue putting its name on cheap Asus tablets for two years while it struggled to figure out how to leverage Motorola to do anything apart from losing hundreds of millions of dollars. It eventually laid off its employees and sold the remains to Lenovo.

End for OMAP

Google and Microsoft weren't the only companies struggling to adapt to a world where Apple was driving innovation using its own custom silicon. By the end of 2012, Texas Instruments abandoned the launch of its Cortex-A15 based OMAP 5 platform and backed out of the consumer SoC market entirely.

That was likely caused in large part by the collapsing demand for its premium silicon after the failure of a long list of devices using its OMAP chips: Palm Pre, RIM's BlackBerry Playbook, Motorola's Xyboard tablet, MOTOACTV music player, Nokia's N9, Google's Nexus Q, and Galaxy Nexus.

Rather than empowering various chip vendors to run a common platform, Android appeared to be running them out of business.

The collapse of OMAP 5 also allowed Apple to continue hiring processor design talent. Apple subsequently was reported to have hired away much of TI's OMAP team located in Haifa and Herzliya, Israel, sites in close proximity to Anobit, the flash memory chip designer Apple acquired in late 2011-- and reportedly used to develop its own performance-optimized storage controller.

That wasn't the only failure occurring in mobile silicon, as the next segment will examine.

-

What is Display Scaling on Mac, and why you (probably) shouldn't worry about it

Display scaling makes the size of your Mac's interface more comfortable on non-Retina monitors but incurs some visual and performance penalties. We explain these effects and how much they matter.

A MacBook connected to an external monitor.

In a world where Apple's idea of display resolution is different from that of the PC monitor industry, it's time to make sense of how these two standards meet and meld on your Mac's desktop.

A little Mac-ground...

Apple introduced the Retina display to the Mac with the 15-inch MacBook Pro in June 2012, followed by the 13-inch MacBook Pro on the 23rd of October 2012, packing in four times the pixel density. From that point onward, Apple gradually brought the Retina display to all its Macs with integrated screens.

This was great, but it appeared Apple had abandoned making its standalone monitors, leaving that task to LG in 2016. So, if you needed a standalone or a second monitor, going officially Retina wasn't an option.

However, now Apple has re-entered the external monitor market, and you have to decide whether to pair your Mac with either a Retina display, such as the Pro Display XDR or Studio Display, or some other non-Retina option.

And part of that decision depends on whether you're concerned about matching macOS's resolution standard.

Enter display scaling

Increasing a display's pixel density by four times presents a problem: if you don't adjust anything, all of the elements in the user interface will be four times smaller. This makes for uncomfortably tiny viewing. So, when Apple introduced the Retina standard, it also scaled up the user interface by four times.

The result is that macOS is designed for a pixel density of 218 ppi, which Apple's Retina monitors provide. And if you deviate from this, you run into compromises.

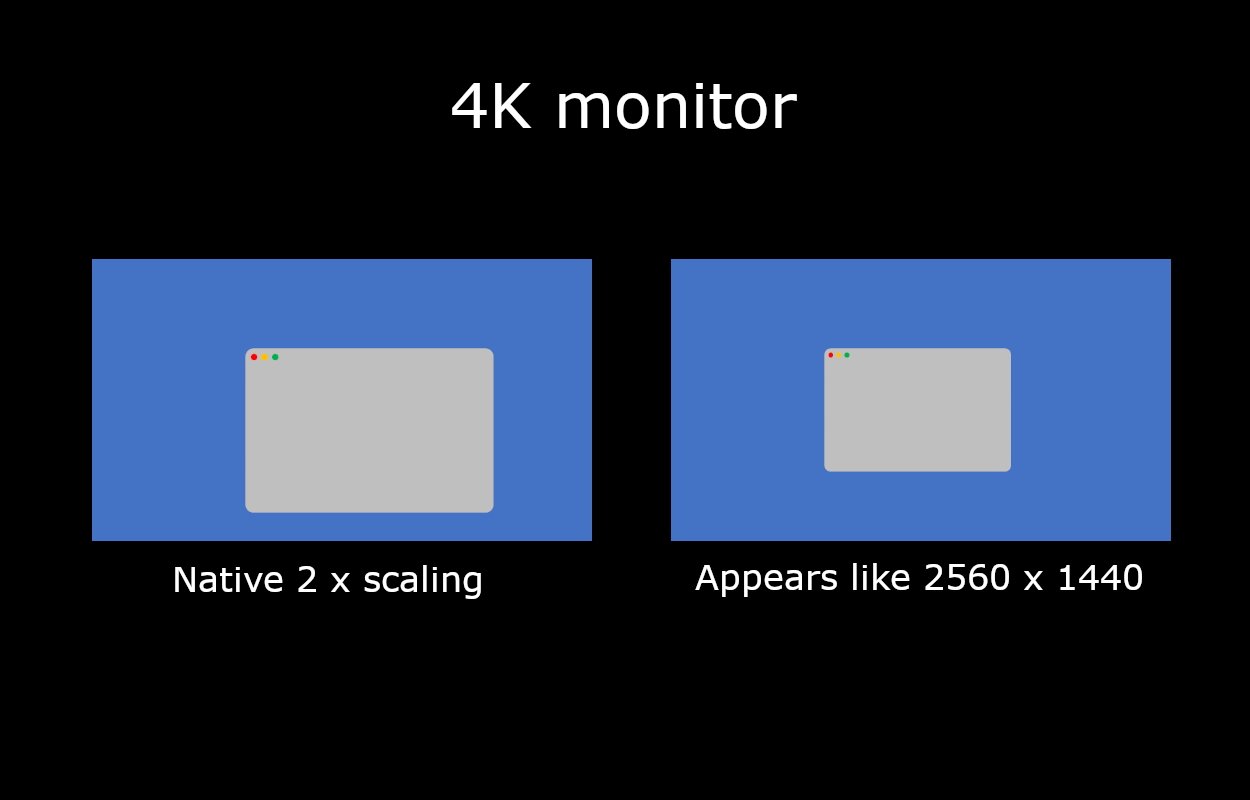

For example, take the Apple Studio Display, which is 27 inches in size. It has a resolution of 5120 x 2880, so macOS will double the horizontal and vertical dimensions of the UI, thus rendering your desktop at the equivalent of 2560 x 1440. Since macOS has been designed for this, everything appears at its intended size.

Then consider connecting a 27-inch 4K monitor to the same Mac. This has a resolution of 3840 x 2160, so the same 2 x scaling factor will result in an interface size the equivalent of 1920 x 1080.

Since both 5K and 4K screens are physically the same size with the same scaling factor, the lower pixel density of the 4K one means that everything will appear bigger. For many, this makes for uncomfortably large viewing.

The difference between native scaling and display scaling on a 4K monitor.

If you go to System Settings --> Displays, you can change the scaling factor. However, if you reduce it to the equivalent of 2560 x 1440 on a 4K monitor, macOS calculates scaling differently because 3840 x 2160 divided by 2560 x 1440 is 1.5, not 2. In this case, macOS renders the screen at 5120 x 2880 to a virtual buffer, then scales it down by 2 x to achieve 2560 x 1440.
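The arithmetic in the examples above can be sketched in a few lines of Swift. This is only an illustration of the numbers quoted in this article, not a description of how macOS is implemented; the `Resolution` and `ScalingPlan` types and the `scalingPlan` function are hypothetical names invented for the example.

```swift
// Illustrative only: models the arithmetic described in the text,
// not the actual macOS rendering pipeline.
struct Resolution: Equatable {
    let width: Int
    let height: Int
}

struct ScalingPlan {
    let effectiveScale: Double     // panel pixels per UI point along one axis
    let backingBuffer: Resolution  // the off-screen buffer rendered at 2x
    let needsResampling: Bool      // true when the 2x buffer does not match the panel
}

/// Works out the 2x buffer for a chosen "looks like" size and whether that
/// buffer maps 1:1 onto the panel's native resolution.
func scalingPlan(looksLike: Resolution, panel: Resolution) -> ScalingPlan {
    let scale = Double(panel.width) / Double(looksLike.width)
    let buffer = Resolution(width: looksLike.width * 2, height: looksLike.height * 2)
    return ScalingPlan(effectiveScale: scale,
                       backingBuffer: buffer,
                       needsResampling: buffer != panel)
}

let fourKPanel = Resolution(width: 3840, height: 2160)

// Default 2x mode on a 4K monitor: the buffer matches the panel exactly.
let nativePlan = scalingPlan(looksLike: Resolution(width: 1920, height: 1080), panel: fourKPanel)
print(nativePlan.effectiveScale, nativePlan.needsResampling)

// "Looks like 2560 x 1440" on the same monitor: a 5120 x 2880 buffer that must be resampled.
let scaledPlan = scalingPlan(looksLike: Resolution(width: 2560, height: 1440), panel: fourKPanel)
print(scaledPlan.effectiveScale, scaledPlan.backingBuffer.width, scaledPlan.backingBuffer.height)
```

The `needsResampling` flag is the interesting part: it is false only when the chosen size is exactly half the panel's native resolution in each dimension.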

Display scaling -- this method of rendering the screen at a higher resolution and then scaling down by 2 x -- is how macOS can render smoothly at many different display resolutions. But it does come with a couple of caveats.

Mac(ular) degeneration

Applying a 2 x scaling factor to the respective native resolutions of both a 5K and a 4K monitor will result in a pixel-perfect image. But display scaling will render a 2560 x 1440 image onto a 3840 x 2160 display. This will naturally produce visual artifacts, since it's no longer a 1:1 pixel mapping.

Therefore, display scaling results in deformities in image quality, including blurriness, moire patterns, and shimmering while scrolling. It also removes dithering, so gradients may appear less smooth.

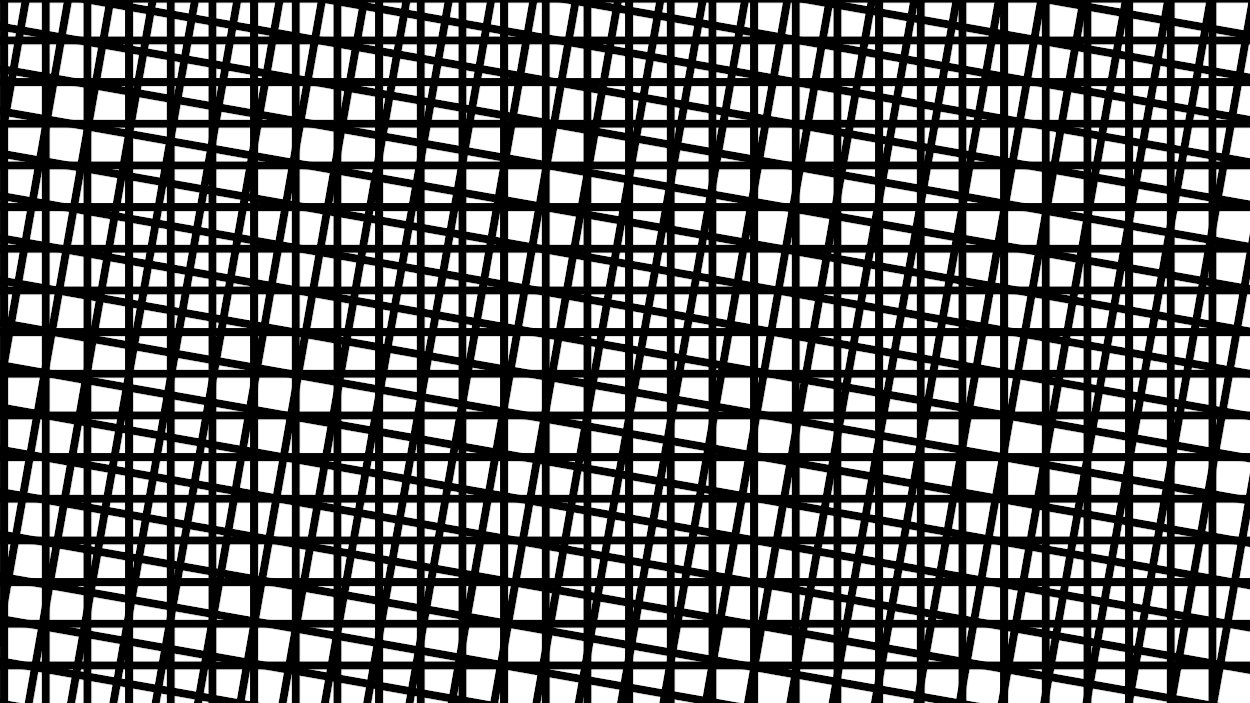

An example of a Moire pattern with two superimposed grids.

Although these visual artifacts are undoubtedly present, the principle of non-resolvable pixels still applies.

Rearranging the formula, angular resolution = 2dr tan(0.5 degrees) becomes d = angular resolution / (2r tan(0.5 degrees)), where d is the viewing distance in inches and r is the pixel density in ppi. From this we can calculate the viewing distance at which pixels stop being resolvable. For example, at the Retina angular resolution of 63 ppd, and the pixel density of 163 ppi for a 27-inch 4K monitor, the result is about 22 inches.

This means a person with average eyesight won't see individual pixels on a 4K screen when viewed at this distance and farther.
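As a quick check of that figure, here is a small Swift sketch of the rearranged formula. The function name `minimumViewingDistance` is made up for this example, and it assumes the distance is in inches and the density in pixels per inch, as in the numbers above.

```swift
import Foundation

/// Distance (in inches) at and beyond which individual pixels are no longer resolvable,
/// using d = pixelsPerDegree / (2 * pixelsPerInch * tan(0.5 degrees)).
func minimumViewingDistance(pixelsPerDegree: Double, pixelsPerInch: Double) -> Double {
    let halfDegreeInRadians = 0.5 * Double.pi / 180.0
    return pixelsPerDegree / (2.0 * pixelsPerInch * tan(halfDegreeInRadians))
}

// 27-inch 4K monitor (about 163 ppi) at the 63 ppd Retina threshold.
let fourKDistance = minimumViewingDistance(pixelsPerDegree: 63, pixelsPerInch: 163)
print(String(format: "%.1f inches", fourKDistance))  // prints "22.1 inches"

// The same threshold for a 218 ppi Retina panel gives a shorter distance.
let retinaDistance = minimumViewingDistance(pixelsPerDegree: 63, pixelsPerInch: 218)
print(String(format: "%.1f inches", retinaDistance))
```

A higher pixel density shrinks the distance, which is why a 218 ppi display looks sharp from closer in than a 163 ppi one.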

So, you may not notice any deformities, depending on the viewing distance, your eyesight, and the quality of the display panel. (If your nose is pressed against the screen while you look for visual artifacts, you may be looking too closely.)

He ain't heavy, he's my buffer

The other caveat of display scaling is performance. If your device renders the screen at a higher resolution than the display into a buffer and then scales it down, it needs to use some extra computational resources. But, again, this may not have as significant an impact as you might think.

For example, Geekbench tests on an M2 MacBook Air showed a drop in performance of less than 3 % at a scaled resolution, compared with the native one, when using OpenCL, and less than 1 % when using Metal. On the same machine, Blender performance dropped by about 1.1 %.

How significant this is depends on your usage. If you need to squeeze every last processor cycle out of your Mac, you'd be better off choosing your monitor's native resolution or switching to a Retina display. However, most users won't even notice.

The overall takeaway from display scaling is that it's designed to make rendering on your non-Retina monitor better, not worse. For example, suppose you do detailed visual work where a 1:1 pixel mapping is essential or long video exports where a 1 % time saving is critical. In that case, chances are that you already own a standalone Retina display or two. Otherwise, you can use display scaling on a non-Retina monitor without noticing any difference.


-

Understanding spatial design for visionOS

Apple Vision Pro's release is imminent, and there are a lot of things for developers to consider when building apps for the headset. Here's what you should think about when making visionOS apps.

Apple Vision Pro in a cold environment.

The Apple Vision Pro is a departure in interactivity for Apple. Unlike apps for Mac or iOS, which rely on users interacting largely with a flat plane and a well-established user interface language, visionOS throws that all away, forcing developers to embrace new ways for users to interact with their apps.

Apple has provided extensive documentation and tutorials for designing for spatial computing. The following are some key points to keep in mind as you design your apps for visionOS.

Passthrough versus immersive mode

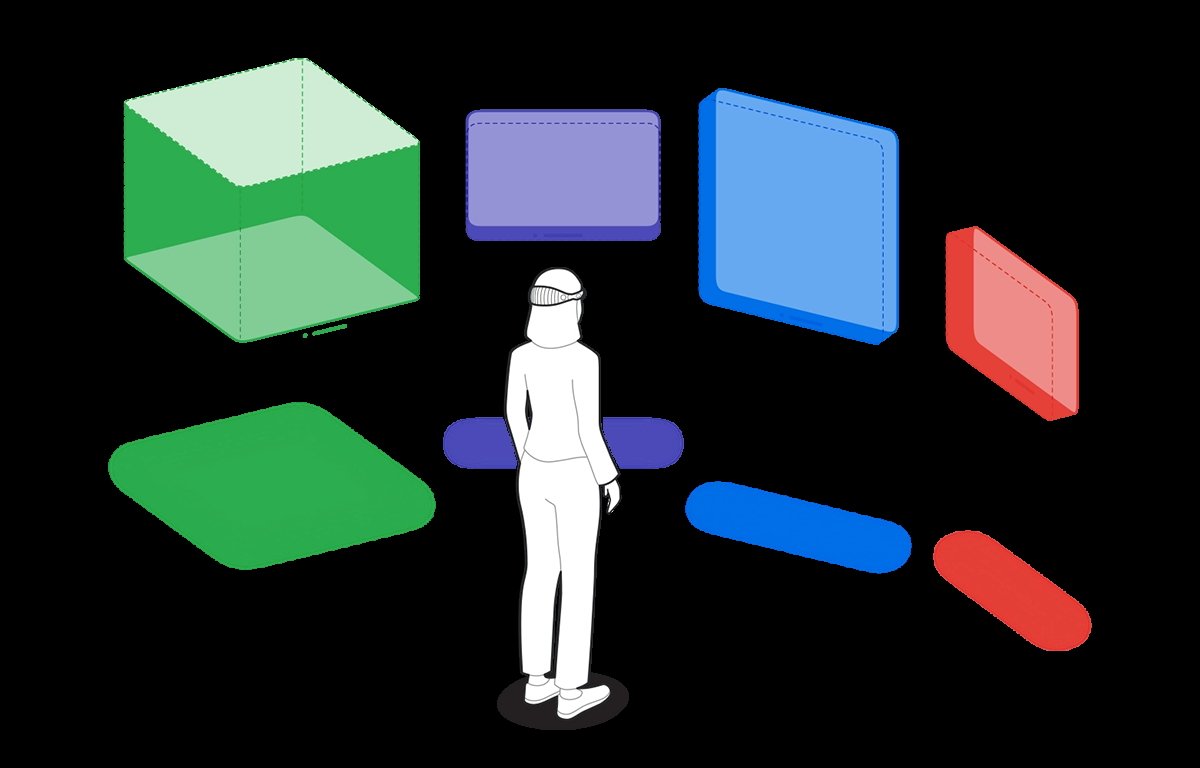

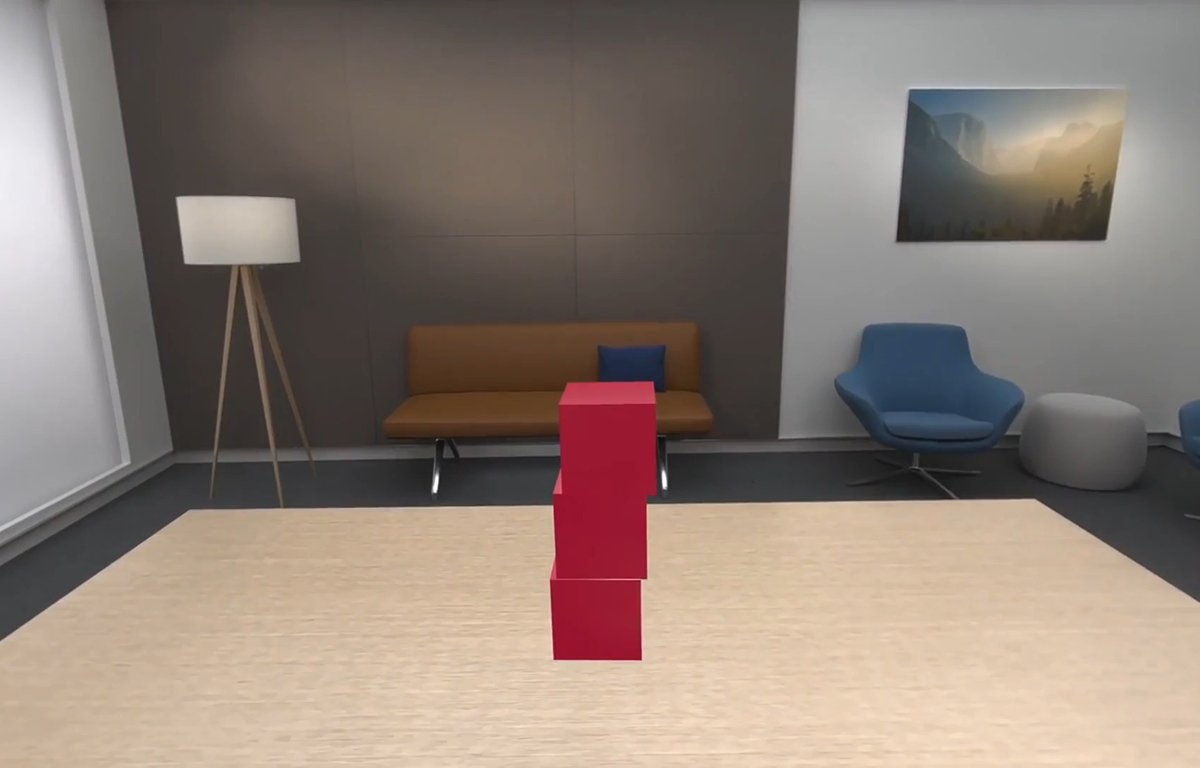

visionOS lets you create apps in either passthrough or immersive mode.

In passthrough mode, the user sees their surroundings through the Apple Vision Pro headset using its built-in external cameras, but apps and windows are displayed floating in space.

Users can use gestures and eye movement to interact with the user interface in this mode.

In immersive mode, the user enters a complete 3D world in which they are surrounded by a 3D-generated scene containing 3D objects. Immersive mode apps can also be games in which the user enters the game environment space itself.

For most visionOS apps, Apple recommends starting users in passthrough mode, establishing a ground plane, or orientation, and later transitioning to fully immersive mode if needed.

This is because some users may find immediate immersion in a 3D space on an app's launch jarring.

Passthrough mode.

Gestures

Apple Vision Pro (AVP) relies heavily on gestures - more so than iOS or iPadOS.

AVP is the first mainstream computer that allows you to control and manipulate the interface using only your hands and eyes without an input device. Using gestures you move your hands in space to control the user interface.

An object that can receive input in visionOS is called an entity. Entities are actually part of the RealityKit framework.

To implement gestures in a visionOS app, three things are required:

- An entity must have an InputTargetComponent

- An entity must have a CollisionComponent with shapes

- The gesture must be targeted to the entity you want to interact with

Objects in visionOS are defined by components and there are different types: input, world, view, view attachment, hover effect, collision detection, and text components.

Each component controls a different aspect of visionOS's interface and control. Apple has defined a Swift struct for each.

An InputTargetComponent is a struct that tells visionOS which object can receive input in the form of gestures.

If you don't add an InputTargetComponent, an object in visionOS can't receive input at all.

You can also set whether an object can receive direct or indirect input.

In Swift:

myEntity.components.set(InputTargetComponent())

To define which part of an entity can receive input, you add a CollisionComponent. Collision components have shapes that define hit-test areas on an entity.

By changing CollisionComponent shapes you can change what parts of an entity will accept or ignore gesture input.

To disable any physics-based processing on an entity, you can disable collision detection on the CollisionComponent using a CollisionFilter.

InputTargetComponents work in a hierarchy, so if you add them to an entity that has descendant entities that also have InputTargetComponents, the child entities will process input unless you disable it.

You can set what type of input an InputTargetComponent can receive by setting the .allowedInputTypes property.

A descendant InputTargetComponent's .allowedInputTypes property overrides the .allowedInputTypes properties of ancestors.

You can also set the .allowedInputTypes when you first initialize the structure with the init() method.

You can toggle whether the InputTargetComponent can accept input by setting the .isEnabled property.
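Putting the first two requirements together, a minimal sketch might look like the following. It needs the visionOS SDK to build, and the function name, entity name, color, and sizes are placeholders chosen for illustration rather than anything Apple prescribes.

```swift
import RealityKit

/// Builds a small box that gestures can target.
/// The name and dimensions are arbitrary example values.
@MainActor
func makeTappableBox() -> ModelEntity {
    let box = ModelEntity(
        mesh: .generateBox(size: 0.2),
        materials: [SimpleMaterial(color: .blue, isMetallic: false)]
    )
    box.name = "TappableBox"

    // Requirement 1: mark the entity as an input target.
    // Here it accepts only indirect input; the default is all input types.
    var inputTarget = InputTargetComponent(allowedInputTypes: .indirect)
    inputTarget.isEnabled = true   // set to false to temporarily ignore input
    box.components.set(inputTarget)

    // Requirement 2: give the entity a collision shape that defines its hit-test area.
    let hitShape = ShapeResource.generateBox(size: [0.2, 0.2, 0.2])
    box.components.set(CollisionComponent(shapes: [hitShape]))

    return box
}
```

Making the collision shape larger or smaller than the visible mesh is one way to make an object easier or harder to hit without changing how it looks.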

Gestures can be set to be targeted at a specific entity, or any entity.

You can find out which entity received input in the gesture's .onEnded method like this:

let tappedEntity = gestureValue.entity
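For context, here is a hedged sketch of where that line might live in a SwiftUI view. It requires the visionOS SDK; the view name `TapDemoView`, the sphere, and its name are invented for the example, and `targetedToAnyEntity()` is used so that any entity with the required components can receive the tap.

```swift
import SwiftUI
import RealityKit

struct TapDemoView: View {
    var body: some View {
        RealityView { content in
            // A sphere with the two components that make it eligible for input.
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            sphere.name = "DemoSphere"
            sphere.components.set(InputTargetComponent())
            sphere.components.set(CollisionComponent(shapes: [.generateSphere(radius: 0.1)]))
            content.add(sphere)
        }
        // Requirement 3: target the gesture at entities rather than at the view.
        .gesture(
            TapGesture()
                .targetedToAnyEntity()
                .onEnded { gestureValue in
                    let tappedEntity = gestureValue.entity
                    print("Tapped \(tappedEntity.name)")
                }
        )
    }
}
```

To restrict the gesture to one object, `targetedToEntity(_:)` can be used in place of `targetedToAnyEntity()`, which means keeping a reference to that entity outside the `RealityView` closure.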

In most cases, you'll use entities in passthrough mode where you may have a mixture of windows, icons, 3D objects, RealityKit scenes, and ARKit objects.

Passthrough mode with windows and 3D objects.

HoverEffectComponents

If an entity is an interactive entity (i.e., it's enabled for input), you can add a HoverEffectComponent to it to astound and delight your users.

The HoverEffectComponent is possibly the coolest thing ever in the entire history of user interfaces:

If you add a HoverEffectComponent to an interactive entity, visionOS will add a visible highlight to the entity every time the user looks at it.

When the user looks away from the entity the highlight is removed.

This is one step away from the full mind control of computers.

Like the single-click on the macOS desktop, or highlighting text in iOS, the visual highlight tells the user the entity is selectable - but it does so without any physical interaction other than eye movement.

As the user moves their eyes around the field of view in visionOS, it will highlight whatever entity they look at - as long as it has a HoverEffectComponent.
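In code, opting an entity into the highlight is a one-line addition on top of the input setup described earlier. The helper below is a sketch that needs the visionOS SDK; the function name and the spherical hit area are assumptions made for the example.

```swift
import RealityKit

/// Makes an entity interactive and lets the system highlight it when the user looks at it.
/// `hitRadius` is an arbitrary example value for the hit-test sphere.
@MainActor
func makeHighlightable(_ entity: Entity, hitRadius: Float = 0.1) {
    // The entity must be able to receive input for the hover effect to apply.
    entity.components.set(InputTargetComponent())
    entity.components.set(CollisionComponent(shapes: [.generateSphere(radius: hitRadius)]))

    // The system draws the highlight; the app is not told where the user is looking.
    entity.components.set(HoverEffectComponent())
}
```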

This is pure magic - even for Apple. Incredible.

The eyes will be new input devices in spatial computing.

This is indeed a very exciting prospect: the ability to control a computer with your eyes will be vastly faster and more accurate than manual input with your hands.

It will also open up a whole new world of computer control for those with disabilities.

visionOS can include spaces that include several kinds of objects, or entirely rendered 3D scenes.

Virtual Hands

One new aspect of spatial computing is the idea of virtual hands: in visionOS apps you'll be able to raise your hands and control objects in your field of view in AVP.

We covered this previously in our article on visionOS sample code. Apple already has some demo programs with this working.

Using virtual hands, you will be able to touch and control objects in your view inside of AVP. This gives you the classic UI concept of direct manipulation but without a physical input device.

Virtual hands are similar to on-screen hands used in many modern video games. In fact, this idea was already in use decades ago in some games such as the groundbreaking Red Faction for PlayStation 2 - but it still required a physical input device.

With AVP the experience is more direct and visceral since it appears as if you are touching objects directly with no hardware in between.

Using virtual hands and gestures you will be able to tap on and move objects around inside visionOS's space.

You can also use them in immersive games.

Additional considerations for gesture control

There are a few other things you'll want to think about for gestures, control, and spatial interaction.

You'll need to think about nearby controls for distant objects. Since some objects in AVP's 3D space can appear farther away than others, you'll also have to consider how to allow your user to interact with both.

Apple advises considering 3D space and depth. Developing for AVP will be more complex than for traditional screen-bound devices.

Comfort and 360-degree views

Apple stresses several times that comfort is important. Remember that when using the AVP the entire body is used - especially the head.

Users will be able to swivel and rotate their heads in any direction to change the view. They will also be able to stand and rotate, bend, and squat or sit.

You'll need to keep this in mind when designing your app because you don't want to force the user into too much movement, or movements they might find uncomfortable.

There's also the issue of too many repetitive movements which might fatigue the user. Some users may have limited range of motion.

Some users may be able to make some movements but not others. Keep these considerations in mind when designing your app.

Also, be aware that there will be some additional weight on the user's head due to the weight of AVP itself. You don't want to force the user to hold their head in a position too long which might cause neck fatigue.

No doubt over time as later iterations of AVP become lighter this will become less of an issue.

Apple also discourages the use of 360-degree or wrap-around views.

While users will be able to pan across the view by looking in different directions, Apple doesn't want developers to make apps that span a full circle.

A few other tidbits

Apple lists a few other things to help in your visionOS development:

Apple recommends not placing the user in an immersive scene when your app launches. You can do so, of course, but unless your app is an immersive game Apple doesn't recommend it.

Even for immersive games, you may want to start the user in a window so they can change their mind or access other apps before they start the game.

Apple defines a key moment as a feature or interaction that takes advantage of the unique capabilities of visionOS.

ARKit only delivers data to your app if it has an open immersive space.

Use Collision Shapes debug visualization in the Debug Visualizations menu to see where the hit-detection areas are on your entities.

You can add SwiftUI views to visionOS apps by using RealityView attachments to create a SwiftUI view as a ViewAttachmentEntity. These entities work just like any other in visionOS.
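As a rough sketch of that pattern, the view below attaches a SwiftUI label to a sphere. It requires the visionOS SDK; the view name, the "label" identifier, the text, and the offsets are placeholders for illustration.

```swift
import SwiftUI
import RealityKit

struct LabeledSphereView: View {
    var body: some View {
        RealityView { content, attachments in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .white, isMetallic: false)]
            )
            content.add(sphere)

            // The SwiftUI view declared below arrives here as a ViewAttachmentEntity.
            if let label = attachments.entity(for: "label") {
                label.position = [0, 0.15, 0]   // float just above the sphere
                sphere.addChild(label)
            }
        } attachments: {
            Attachment(id: "label") {
                Text("Hello, visionOS")
                    .padding()
                    .glassBackgroundEffect()
            }
        }
    }
}
```

Because the attachment is an ordinary entity once it is in the scene, it can be positioned, parented, and removed like any other.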

Also, be sure to see the section of the Xcode documentation entitled Understand the relationships between objects in an immersive space.

You can currently resize or move a window in visionOS after it is created using onscreen controls, but you can't do so programmatically.

Therefore you should think carefully about a window's initial size when you create it. When visionOS creates a new window it places it onscreen wherever the user is looking at the moment.

If your app includes stereo views that display video you'll want to convert the videos to MV-HEVC format using the AVAsset class in AVFoundation.

To provide lighting in a RealityKit scene in visionOS you can either let the system provide the lighting or you can use an ImageBasedLightComponent to light the scene.

The CustomMaterial class isn't available in visionOS. If you need to make a custom material for a scene, use the Reality Composer Pro tool which can make the material available as a ShaderGraphMaterial struct instead.

Apple has a WWDC 23 video session on using materials in RCP. See the video Explore Rendering for Spatial Computing from WWDC.

Also be sure to watch the WWDC 23 video Principles of Spatial Design as well as Design Considerations for Vision and Motion.

You can get the current orientation of the AVP by using the queryDeviceAnchor(atTimestamp:) method.

Spatial audio and sound design 101

Another spatial part of the AVP experience is immersive audio.

Full-environment audio has been around for decades in software, especially in games, and with headphones or surround-sound home theater systems, users have been able to enjoy immersive sound experiences for years.

But AVP takes the experience to a new level.

For one thing, having immersive audio connected to an immersive or mixed reality visual environment creates a dimension of realism beyond what was previously possible in computing.

Having both sound and the visual experience work in tandem creates a new standard of what's possible.

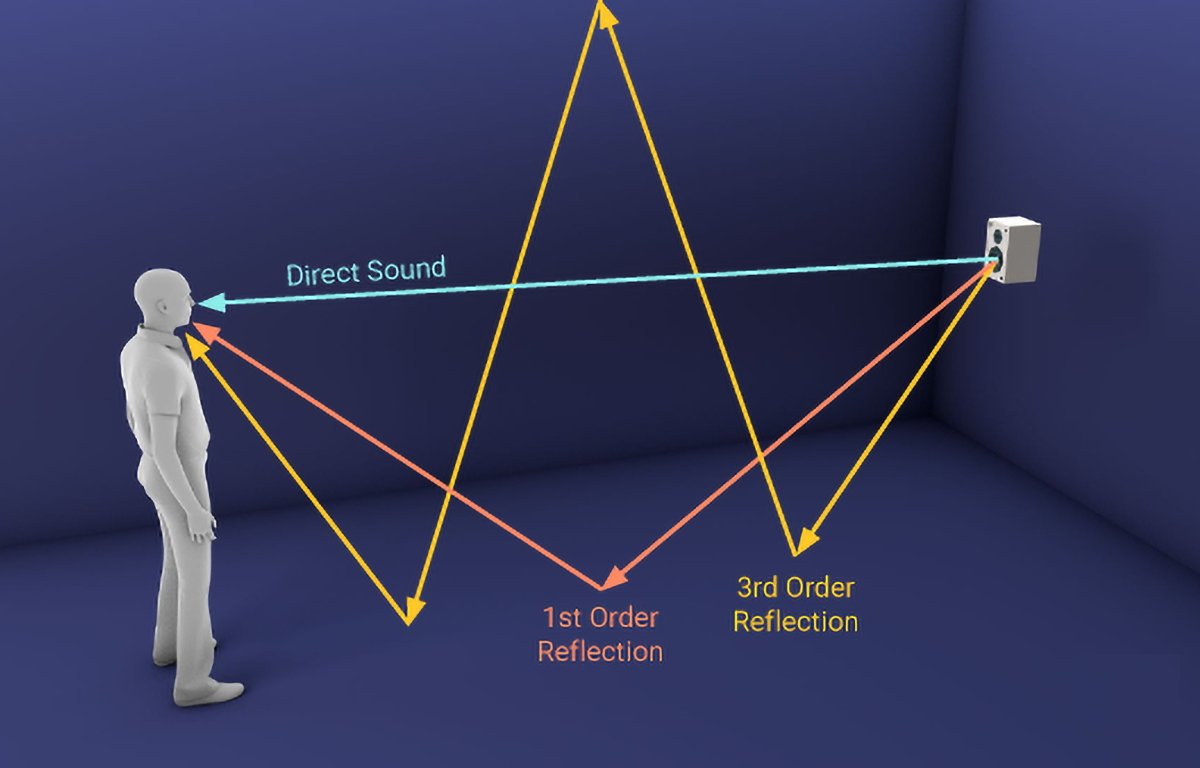

Your brain works by using complex interpretations of what both your eyes see and your ears hear. Many spatial cues in human perception are based on hearing.

For example, the slight delay of a sound arriving at one ear a split second before the other combined with your head obscuring parts of sounds is what allows your brain to tell you what direction a sound originates from. These are called interaural time differences and interaural level differences.

Pitch, timbre, texture, loudness, and duration also play roles in how humans perceive sound. Reflected sounds also have an effect on perception.

Humans can typically hear sound frequencies ranging from 20Hz at the low end to 20kHz at the high end, although this range declines with age, and some people can hear sounds beyond these ranges.

The classic Compact Disc audio format samples sound at 44.1kHz - slightly more than twice the maximum frequency of human hearing. This is based on the Nyquist Limit - which states that to accurately capture a sound by recorded sampling, the sample frequency must be at least twice the maximum sound frequency.
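The relationship is simple enough to sketch in Swift. The function names below are invented for this example, and the aliasing helper goes slightly beyond the text above: it uses the standard folding rule to show where a tone lands if it is above half the sample rate.

```swift
/// Minimum sample rate (Hz) needed to capture content up to `maxFrequency` Hz.
func minimumSampleRate(forMaxFrequency maxFrequency: Double) -> Double {
    return 2.0 * maxFrequency
}

/// Highest frequency (Hz) a given sample rate can represent without aliasing.
func nyquistFrequency(forSampleRate sampleRate: Double) -> Double {
    return sampleRate / 2.0
}

/// Frequency (Hz) that a pure tone appears to have after sampling.
/// Tones at or below half the sample rate come back unchanged.
func aliasedFrequency(of frequency: Double, sampleRate: Double) -> Double {
    let nearestMultiple = (frequency / sampleRate).rounded() * sampleRate
    return abs(frequency - nearestMultiple)
}

let cdSampleRate = 44_100.0
print(minimumSampleRate(forMaxFrequency: 20_000))            // 40000.0
print(nyquistFrequency(forSampleRate: cdSampleRate))          // 22050.0
print(aliasedFrequency(of: 30_000, sampleRate: cdSampleRate)) // 14100.0
```

The last line shows why recordings are filtered before sampling: a 30kHz tone that nobody could hear would otherwise show up as an audible 14.1kHz tone.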

One oddity of human hearing is that people have difficulty localizing any sounds that originate from or reflect off of cone-shaped surfaces - which isn't surprising since these shapes rarely occur in nature.

Direct and reflected sounds. Courtesy Resonance Audio.

When vision and sound inputs are properly combined and synchronized, your brain makes connections and judgments automatically without you having to think about it.

Most of your ordinary perception is based on these complex interpretations and sensory input. So is your brain's perception of your orientation in space (combined with inputs from your inner ears which affect balance).

AVP provides a new level of experience because it allows synchronized input of both vision and immersive sound. Keep this in mind too when designing your apps.

You want your users to have the most realistic experience possible, and this is best achieved by careful use of both sound and vision.

For non-immersive apps use audio cues to make interactions more lifelike.

For example, you can use subtle audio responses when a user taps a button or have audio play and seem to come directly from an object the user is looking at.

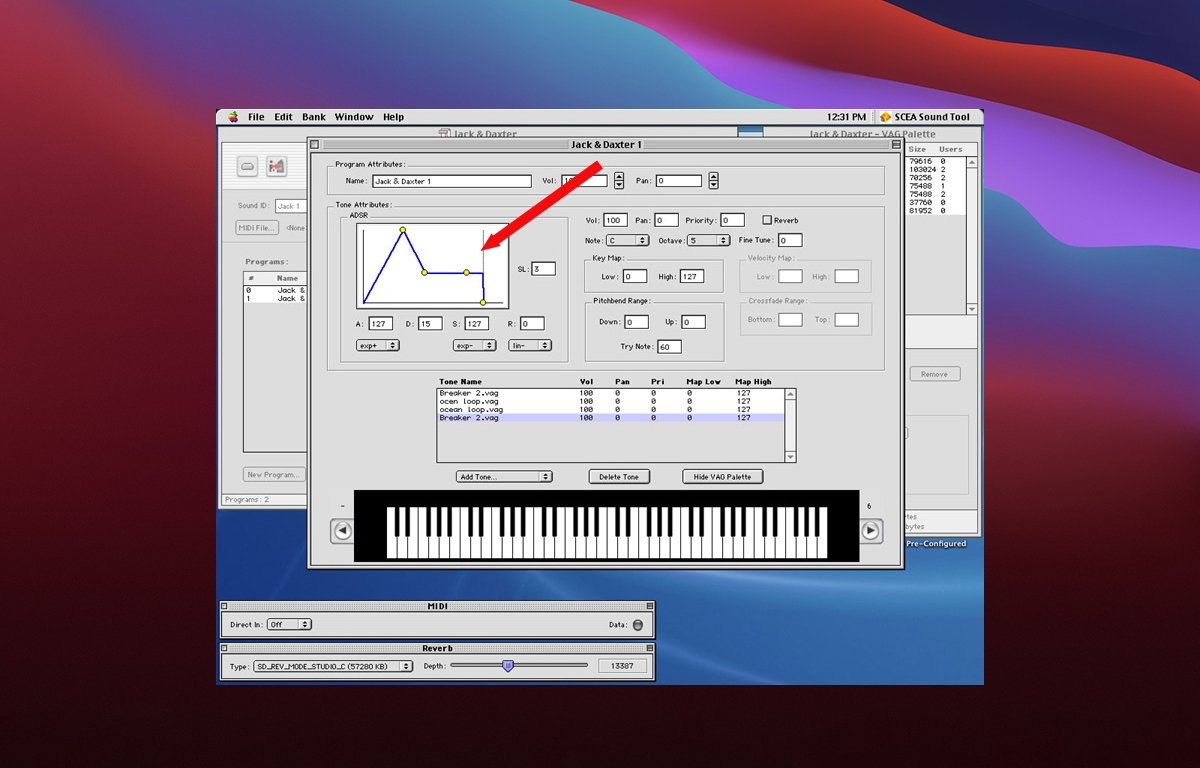

If you are making an immersive app you will need to carefully tune any audio so it properly matches what the user sees. For games, it's important to use 3D audio cues so they precisely match gameplay events and environments.

ADSR

Every sound has what is known as an ADSR - attack, decay, sustain, release.

Attack is the rate or slope at which a sound's volume goes from zero to maximum. A perfectly vertical attack means a sound goes from silence to max instantly.

Decay is the time the sound takes to drop from its maximum, or peak, and settle into its standard volume, which lasts until the sound's final stage - its release.

Sustain is how long a sound is held at standard volume after the decay period. This is the level at which most people perceive a sound.

Release is the duration of time from the start of the sound fading out until it goes to zero volume or silence. The release phase runs from the end of the sustain period until silence.

A perfectly vertical release curve means a sound goes from standard volume to silence instantly. An extremely long and shallow release curve means a sound fades out very slowly over time.

If you've used the crossfade effect in the Apple Music app you've heard shallow attack and release curves.

Sounds with a perfectly vertical attack or release are called gated sounds.

Taken together, a sound's four ADSR values are known as its envelope.
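To make the envelope concrete, here is a small Swift sketch that returns a sound's volume at any point in time. It assumes straight-line segments between stages, which is a simplification, and the `ADSREnvelope` type and its property names are made up for this example. The second stage, between the peak and the standard volume, is called `decay` here.

```swift
/// A piecewise-linear envelope. All durations are in seconds.
struct ADSREnvelope {
    var attack: Double        // time to rise from silence to peak (1.0)
    var decay: Double         // time to fall from peak to the standard volume
    var sustain: Double       // time held at the standard volume
    var release: Double       // time to fade from the standard volume to silence
    var sustainLevel: Double  // the standard volume, from 0.0 to 1.0

    /// Volume (0.0 to 1.0) at `t` seconds after the sound starts.
    func amplitude(at t: Double) -> Double {
        if t < 0 { return 0 }
        var time = t
        if time < attack { return time / attack }
        time -= attack
        if time < decay { return 1.0 - (1.0 - sustainLevel) * (time / decay) }
        time -= decay
        if time < sustain { return sustainLevel }
        time -= sustain
        if time < release { return sustainLevel * (1.0 - time / release) }
        return 0
    }
}

let pluck = ADSREnvelope(attack: 0.1, decay: 0.2, sustain: 0.5, release: 0.2, sustainLevel: 0.6)
for t in stride(from: 0.0, through: 1.0, by: 0.25) {
    print(t, pluck.amplitude(at: t))
}
```

Setting `attack` or `release` to zero skips that stage entirely, which corresponds to the perfectly vertical curves described above.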

Most professional sound editing software lets you adjust all four points on a sound's ADSR graph. Altering the ADSR affects how users perceive sound.

Using ADSR you can create some pretty interesting and subtle effects for sounds.

ADSR was originally used only for synthesized music but today's pro sound software lets you apply ADSRs to sampled (recorded) sound as well.

ADSR adjustment in the PlayStation 2 sound editor.

Sound effects

If you're making an immersive game for visionOS, you'll need to think about sound effects carefully.

For example, suppose your game contains a distant explosion or gunfire. You will need to both time and mix your sound so that the relative loudness of each sound matches the distance and intensity of the effect.
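One common starting point for matching loudness to distance is the inverse-distance law, where each doubling of distance lowers the level by about 6 dB. The sketch below assumes a single point source in open space with no reflections, which real scenes rarely are; the function names and the one-meter reference distance are assumptions for the example.

```swift
import Foundation

/// Linear gain for a point source under the inverse-distance law.
/// Anything closer than `referenceDistance` plays at full volume.
func distanceGain(distance: Double, referenceDistance: Double = 1.0) -> Double {
    return referenceDistance / max(distance, referenceDistance)
}

/// Converts a linear gain to decibels.
func decibels(fromGain gain: Double) -> Double {
    return 20.0 * log10(gain)
}

// An explosion mixed for 1 meter, heard from 2, 10, and 100 meters away.
for meters in [2.0, 10.0, 100.0] {
    let gain = distanceGain(distance: meters)
    print(meters, gain, decibels(fromGain: gain))
}
```

Treat the result as a baseline to tune by ear, since materials, echoes, and the intensity of the effect all change how loud a sound should feel.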

Sound realism in immersive games is also dependent on the materials in an environment.

Is there a huge rock canyon in your game where an explosion or gunfire goes off? If so you'll need to include multiple realistic echo effects as the sound bounces off the canyon walls.

You will also need to position each sound in 3D space relative to the user - in front of, behind, to the sides of the user, or even from above or below. Certain sounds in real life may seem to come from all directions at once.

Other types of sounds in real life may temporarily leave the participant deaf, or leave them with only ringing in their ears for a while (grenade concussions come to mind).

Consider that for a distant explosion many miles away in a game setting, in real life, the viewer wouldn't actually hear the explosion until several seconds after they see it.
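The size of that delay is simple to estimate. The sketch below assumes sound travels at about 343 meters per second (dry air at roughly 20 degrees Celsius) and treats light as arriving instantly; the function name is made up for this example.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate, dry air at about 20 C


def sound_delay_seconds(distance_m):
    """Seconds between seeing a distant event and hearing it."""
    return distance_m / SPEED_OF_SOUND_M_PER_S


# One mile is 1,609.344 meters.
one_mile_delay = sound_delay_seconds(1609.344)
```

Under these assumptions an explosion one mile away would be heard a little under five seconds after it is seen, so a game could schedule the sound that long after the visual effect.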

Little touches like these will add extra realism to your apps.

On top of all this, keep in mind your user may be moving their head and thus alter their perspective and field of view while sounds are playing. You may have to adjust some sounds' spatial cues in 3D space in real time as the user's perspective changes.
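One simple piece of that real-time adjustment is recomputing where a sound sits relative to the direction the user is facing. The Python sketch below works in two dimensions only and assumes a coordinate convention chosen for this example (+z is forward, +x is to the right, and yaw grows as the head turns right); it is not how any particular audio library or visionOS represents head pose.

```python
import math


def relative_azimuth_deg(listener_xz, head_yaw_deg, source_xz):
    """Angle of a sound source relative to where the head is facing.

    Returns degrees in the range [-180, 180): 0 is straight ahead,
    positive is to the listener's right. Names and axes are assumptions.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    # Bearing of the source in world space: 0 = +z, 90 = +x.
    world_bearing = math.degrees(math.atan2(dx, dz))
    # Subtract the head's yaw, then wrap into [-180, 180).
    return (world_bearing - head_yaw_deg + 180.0) % 360.0 - 180.0
```

A source directly to the right of a forward-facing user comes out at about 90 degrees; once the user turns their head 90 degrees toward it, the same source comes out at about 0, meaning straight ahead.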

There are audio libraries to help with this, such as Resonance Audio, OpenAL, and others, but we'll have to wait and see how spatial audio evolves on AVP.

Sound design for games is an entire discipline unto itself.

For an excellent deep dive on how sound works in the real world, check out the exceptional book The Physics of Sound by Richard Berg and David Stork, although some math is required.

Apple's visionOS Q&A

At WWDC 23 and in recent developer labs Apple has held several sessions with visionOS experts, and as a result, Apple has compiled a visionOS Q&A which covers many more topics.

You'll want to check it out to make sure your visionOS apps can be as great as possible.


-

Editorial: Google's acquisition of Fitbit looks like two turkeys trying to make an eagle

Google is acquiring American wearable maker Fitbit in a $2.1 billion move that looks a lot like the ad-search giant's previous attempts to buy its way into a hardware business.

After five years, nobody is wearing Google's Wear OS

Google announced it would pay $2.1 billion for Fitbit, with its hardware executive Rick Osterloh stating in a blog post that the acquisition was "an opportunity to invest even more in Wear OS as well as introduce Made by Google wearable devices into the market."

Nest-level privacy promises

The deal has raised obvious privacy concerns due to Google's position as an advertising network that seeks to track individuals and collect data about their behaviors to develop more persuasive advertising strategies.

Fitbit issued its own press release stating, "consumer trust is paramount to Fitbit. Strong privacy and security guidelines have been part of Fitbit's DNA since day one, and this will not change. Fitbit will continue to put users in control of their data and will remain transparent about the data it collects and why. The company never sells personal information, and Fitbit health and wellness data will not be used for Google ads."

But once Fitbit is part of Google, the web promises of a former company won't be any more valuable than the assurances Nest once gave after it was similarly acquired by Google's umbrella Alphabet.

Back in 2015, Nest co-founder Tony Fadell assured Nest customers that "when you work with Nest and use Nest products, that data does not go into the greater Google or any of [its] other business units," and said its new ownership "doesn't mean that the data is open to everyone inside the company or even any other business group - and vice versa."

That changed when Google restructured Nest and linked it to Google Assistant. The company also closed Nest accounts, making it mandatory to sign up for Google accounts to use Nest hardware.

Android fans imagine wild synergy

Chaim Gartenberg of The Verge wrote that "The acquisition makes a lot of sense: Google has spent years trying (and largely failing) to break into the wearables market with its Wear OS platform, but it's struggled to make a real impact."

Gartenberg added, "Fitbit's hardware chops have always been great, giving Google a much stronger foundation to build on for future Android-integrated wearables devices."

Fitbit has created an extraordinary number of smartwatches and activity bands over the past few years, none of which have any capacity to run Android. There is absolutely no "foundation" for Android to sit on. That was an intentional choice.

Two years ago, Fitbit's director of software engineering Thomas Sarlandie explained in an interview why Fitbit intentionally avoided licensing Google's Android-based wearable OS.

"Android imposes a lot in terms of hardware and design," Sarlandie said. "We've been designing our own products. That's how we get better battery life; that's how we get a form factor that's more adaptive to fitness. We think the product we built this way is a better smartwatch experience for our users, because we're able to have more control over what compromises we're making and what chipset we're going to develop."

Fitbit had earlier acquired Pebble specifically to obtain a wearable platform OS that could allow it to remain independent from Google and not held back by Android.

"When you're using Android Wear, you have to respect their interface and you're very limited in terms of what you can do in terms of customization," Sarlandie said. "For all those reasons, it really makes sense for us to keep working on our own platform."

But it doesn't make any sense for Fitbit to keep working on its own platform anymore, because the simple OS it acquired from Pebble isn't very sophisticated. It was just all Fitbit could afford to buy as an alternative to using Wear OS.

Fitbit didn't want Wear OS, but Google also doesn't want Fitbit OS

Google has tons of money to invest in its Wear OS platform, but it simply hasn't been aggressive in pushing it forward -- largely because nobody was showing much interest in using it to build products. Even Google's largest licensee, Samsung, created its own Tizen-based wearables rather than co-developing products using Wear OS.

Without dumping Wear OS, Google doesn't really benefit from owning Fitbit hardware that can't run Wear OS, or a new Fitbit OS that is itself old and simple and isn't optimized to run on Wear OS hardware. But even if it bought Fitbit purely for the talent, Google has another problem standing in the way of developing a Pixel watch from scratch: Google lacks any source for building high-performance wearable hardware.

It is specifically lacking a silicon hardware vendor interested in building something like Apple's 64-bit SiP used in Apple Watch. Apple's wearable chip shares its CPU and GPU core designs with Apple's other Ax-series SoCs used in iPhone and iPad, making it vastly cheaper to maintain.

Google has some silicon experience just from developing a custom Image Signal Processor for its Pixel phone, but if it actually learned anything from that fiasco, it was that custom silicon is an extremely expensive, high-risk project. It would be asinine for Google to build an entirely custom watch after proving it couldn't even sell a slightly customized Qualcomm phone.

The wishful thinking surrounding Motorola

The fantasy that Google could buy an outside hardware maker, shake a magic wand, and turn all of that company's products into "Androids" all programmed to destroy Apple was earlier dreamed up when Google announced its plans to buy Motorola Mobility. In addition to the Android phones Motorola was already selling, various bloggers imagined that Google could turn Motorola's set-top TV box business into a revitalization of Google TV.

Instead, Google sold off Motorola's TV unit for scrap and subsequently reinvented various new versions of a TV box, using Android or not, all without any real success. Most TVs now ship with a non-Android "smart OS," and most set-top boxes have no connection with Google, whether on the low-end with Roku or the premium-end with Apple TV.

Motorola didn't even result in a successful phone business for Google. Within two years, it had done nothing but lose hundreds of millions of dollars and lay off workers before being sold off to China as an Android brand.

Google spent five years on Wear OS

Dieter Bohn of The Verge tweeted "this is about Google getting smarter at making wearable hardware."

Yet Google has spent five years working on wearables -- initially announcing Android Wear in early 2014, a full year before Apple Watch arrived. All this time later, while Apple has refined its product into an increasingly popular wearable achieving double-digit growth globally, even Android Authority called Google's efforts "too messy to recommend."

Earlier this year, C. Scott Brown wrote for the site that, "after five years of development and refinement, you'd think Wear OS would be a strong contender within the wearable industry and something a reviews site like Android Authority would recommend. However, that's not really the case."

It's not just wearables that have eluded Google. In both tablets and phones, the company has been unable to dictate to a third-party partner how to build a successful device across a series of Nexus introductions; has been just as bad at setting up a design team tasked with building an original product such as Pixel C tablets, Pixel-branded notebooks, or Pixel phones; and has been just as dismal at paying a foreign team to build new products under the Google name.

That means Google will face considerable pressure to close a deal that at best could simply give it access to real-world health data, with the potential to put Assistant on a wearable it could market to iPhone users. And if it can't gain antitrust approval to complete the deal, it reportedly has to pay a $250 million reverse termination fee to Fitbit.


-

Editorial: CNBC is still serving up some really bad hot takes on Apple

On Monday, CNBC compiled the confused thoughts of a Wedbush Securities analyst who doesn't seem to have any grasp on reality whatsoever when it comes to the future -- or even present -- of Apple.

Wedbush Securities managing director Dan Ives often shovels out thoughts on CNBC

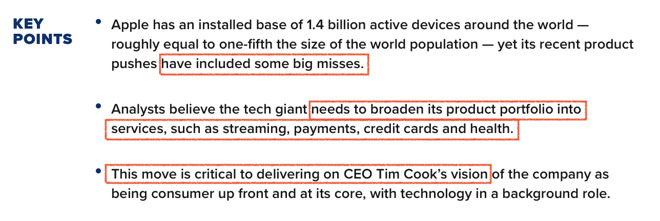

Writing for CNBC under the headline "Waiting for Apple's next breakthrough device? Here's why you shouldn't," Eric Rosenbaum imagined that Apple is struggling to sell new hardware and announced that "analysts" have stumbled onto the idea that the company could maybe branch out into services -- a solid five years after Tim Cook began trumpeting the concept of Services and then built it into a $10 billion per quarter enterprise. He then explained that the reason for this is that Apple is getting out of the technology business.

Is this the Onion?

That last one is an astoundingly gag-worthy conclusion based on Rosenbaum's sloppy regurgitation of another idea Cook has spoon-fed to analysts and the media: that Apple isn't in the business of just selling pure technology but rather works to craft complete solutions for its customers. Converting that into the idea that Apple is getting out of tech to become a services company is just astounding, but it's becoming a fashionable media narrative that almost nobody seems embarrassed to recite.

This series of spectacularly obtuse takes presented as "key points" might be excused as an editorial mistake, perhaps the work of a teenage intern trying to boil down the essence of the article into an oversimplified synopsis.

But rather than fleshing out a more nuanced presentation of the state of Apple, the full text of Rosenbaum's article dives further downward into ham-handed simplicity. To be fair, Rosenbaum doesn't seem to have invented his article's "key points" personally. Instead, the missive is largely attributed to Wedbush Securities' managing director Dan Ives.

Apple's "very big misses"

How does one support the laughable claim that Apple's "recent product pushes have included some very big misses"? Ives lazily falls back into the comfortable executive armchair of complaining that "HomePod was a failure," calling it "late to the game and mispriced."

Certainly, if HomePod were cheaper -- say, if Apple had slashed the price of its home speaker the way Sonos often has -- it could have sold some additional number of units while earning less money. That's what Sonos did last year: it reported selling 4.6 million units across its entire range of devices, but only brought in about two-thirds of the revenues of Apple's roughly 4 million sales of HomePod.

It would be pretty ignorant to suggest that Apple would have been better off if it had turned in Sonos' results. In Apple's first year of HomePod availability, sales were more commercially successful than Sonos, a well regarded and quite popular home speaker pioneer now in its sixth year of building wireless smart home audio devices. Apple "walked right in" to the premium tier of the market for smart home speakers.

But Ives isn't thinking about Sonos. Instead, he apparently has in mind Amazon Alexa. He called Apple's success and its "huge installed base of users" a "double-edged sword, as it allows the company to ignore the first-to-market mantra in developing new products, which may become a riskier strategy in today's world."

It's dangerous to try to find meaning in casually tossed, trite cliches, but it certainly appears that Ives is trying to say that Apple is in a precarious position because its success is blinding it to the importance of being the first mover in emerging product categories.

That's clearly the interpretation Rosenbaum makes, as his next paragraph explains that "Amazon has been moving in the opposite direction -- branching out from its enterprise market success with cloud services giant Amazon Web Services to the consumer market with Alexa."

Yet there are at least two things that are disastrously wrong here: first, that Amazon's first-to-market status with Alexa is representative of success in any way, and second, that Apple needs to be first in introducing new product categories.