Marvin

About

- Username: Marvin
- Visits: 116
- Roles: moderator
- Points: 6,089
- Badges: 2
- Posts: 15,326

Reactions

-

Epic vs. Apple lurches on, this time about antisteering compliance

teejay2012 said:

> Can someone clear this up for me? Fortnite is still not available for download on the iOS App Store. I understand you can play through cumbersome web site or cloud gaming workarounds. So how does Epic complain about Apple and anti steering when the app is not even on iOS?

This was why Fortnite was removed. It's a free-to-play game that makes money by selling the in-game currency, V-Bucks. If V-Bucks are sold in-game, Apple automatically takes a commission through its own payment system. Epic tried to get around this by linking to an external purchase option.

Epic got the courts to force Apple to allow links to alternative payment options, but Apple says it will still charge a commission even when an external payment is used. If the anti-steering provision meant no commission had to be paid, Epic could simply put Fortnite back on the App Store (if Apple allowed it).

Epic thinks it's unreasonable to charge a commission this way, yet it charges a commission on its own products and services. If a game uses Unreal Engine, developers don't process transactions through an Epic payment system, but they are still required to pay Epic a commission for using its game engine. Epic doesn't want to pay Apple for using its ecosystem.
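As a rough illustration that both arrangements are percentage-of-revenue fees, here is a minimal Python sketch. The rates are example inputs, not figures from this thread: 27% is the commission Apple announced for linked-out purchases in the US, and 5% of gross revenue above the first $1 million is Unreal Engine's standard royalty. The $10M revenue figure is purely hypothetical.

```python
def percentage_fee(gross: float, rate: float, exempt: float = 0.0) -> float:
    """Fee charged as `rate` of gross revenue above an `exempt` threshold (USD)."""
    return rate * max(0.0, gross - exempt)


# Hypothetical title earning $10M gross; rates are example inputs (see lead-in).
GROSS = 10_000_000
apple_link_out_fee = percentage_fee(GROSS, rate=0.27)
unreal_royalty = percentage_fee(GROSS, rate=0.05, exempt=1_000_000)

print(f"Apple link-out commission: ${apple_link_out_fee:,.0f}")
print(f"Unreal Engine royalty:     ${unreal_royalty:,.0f}")
```

The structure is the same in both cases; the dispute is over the rate and over whether a fee should apply at all when Apple isn't the one processing the payment.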

-

If you're expecting a Mac mini at WWDC, you're probably going to be disappointed

blastdoor said:

> I recently bought a refurbished m2pro Mac mini because I gave up on an m3 mini being released. I also would not be surprised if the studio skips m3 too.

All the remaining M2 products (mini, Studio, Pro) are within their typical release cycle. The Studio and Pro were updated to M2 in June 2023, and the mini in January 2023.

Apple only launched the M3 Air 7 weeks ago.

Even if Apple wanted to push ahead with AI and M4, that can come in October. This gives them 7 months to update the M2 models.

keithw said:

> I would think that they would get the M4 Studio out sooner than later. I can't imagine many people are buying the M2 variants currently available, especially since the M3 Max has been out in laptop form for months. I'm STILL waiting to upgrade my aging (but still competent) iMac Pro from 2017.

Not many people buy desktops at all, which is why they get the least attention. There's no compelling reason for Apple to put M4 in the Studio early.

I would guess the mini would be quietly bumped to M3 at the same time as the iPad Pro at the upcoming event. Then Studio and Pro to M3 Ultra at WWDC. In October, they can have an AI Mac event for M4 after they have an AI iPhone 16 event showcasing on-board AI models with an updated Neural Engine in A18 Pro.

-

Apple's iOS 18 AI will be on-device preserving privacy, and not server-side

jellyapple said:

> What IQ level will be AI on device? 40?

The larger models need a lot of memory, but even the smaller ones are capable of far more meaningful replies than assistants like Siri. Meta released some models similar to ChatGPT and they work very well:

https://ai.meta.com/blog/code-llama-large-language-model-coding/

The smallest Llama-7B model only needs 6GB of memory and around 10GB storage:

https://www.hardware-corner.net/guides/computer-to-run-llama-ai-model/

The larger 70B model needs 40GB of RAM and has been reported to be about as factually accurate as GPT-4 for summaries:

https://www.anyscale.com/blog/llama-2-is-about-as-factually-accurate-as-gpt-4-for-summaries-and-is-30x-cheaper
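Those memory figures follow mostly from parameter count times bytes per weight. A minimal sketch, assuming 4-bit quantized weights (a common choice for running these models locally; the linked guides may assume something different). It counts weights only, so the KV cache and runtime overhead would plausibly account for the gap up to the 6GB and 40GB figures:

```python
def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Memory needed just for the weights, in decimal GB (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for name, params in (("7B", 7e9), ("70B", 70e9)):
    for bits in (16, 4):
        print(f"{name} @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

At 16-bit the 70B model would need about 140 GB for weights alone, which is why quantization matters for running it locally. The same arithmetic gives the on-disk size when sizing storage for a preinstalled model.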

Apple published a paper about running larger models on SSD instead of RAM:

https://appleinsider.com/articles/23/12/21/apple-isnt-behind-on-ai-its-looking-ahead-to-the-future-of-smartphones

https://arxiv.org/pdf/2312.11514.pdf
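The paper's core idea is to keep the weights in flash storage and pull in only the parts needed for the current step; it adds techniques such as windowing and row-column bundling on top of that. The sketch below is not the paper's method, only the basic idea of on-demand loading: a toy weight matrix lives in a file, and memory mapping lets us read just the selected rows. The layer shape, file name and row selection are all hypothetical.

```python
import mmap
import os
import struct
import tempfile

ROWS, COLS = 1024, 256                    # hypothetical layer shape
ROW_FORMAT = struct.Struct(f"<{COLS}f")   # one row of little-endian float32 weights


def write_weights(path: str) -> None:
    """Write a toy weight matrix where every value in row r equals r."""
    with open(path, "wb") as f:
        for r in range(ROWS):
            f.write(ROW_FORMAT.pack(*([float(r)] * COLS)))


def load_rows(path: str, row_ids):
    """Read only the requested rows; the OS pages in just the touched parts of the file."""
    rows = {}
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        for r in row_ids:
            rows[r] = ROW_FORMAT.unpack_from(mm, r * ROW_FORMAT.size)
    return rows


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "layer.bin")
        write_weights(path)
        active = load_rows(path, [3, 700])  # e.g. neurons predicted to be active
        print({r: vals[0] for r, vals in active.items()})
```

A real implementation would also care about read sizes and access patterns on flash, which is where the paper's bundling and windowing come in.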

Perhaps they could increase storage sizes by 32-64GB and preinstall a GPT-4-level AI model locally. Responses are nearly instant when the model runs locally. They may also add a censorship/filter model to avoid getting into trouble over certain types of response.

40domi said:

> AI is well over hyped and unintelligent consumers are swallowing it hook line & sinker 🤣

They are powerful tools. You can try them here; the first is a chat, the second an image generator:

https://gpt4free.io/chat/

https://huggingface.co/spaces/stabilityai/stable-diffusion

For example, you can ask the chat 'What is an eigenvector?' or 'What is a good vegetarian recipe using carrots, onions, rice, and peppers?' and it will give a concise answer, which is better than digging through search engine results. The image generators can produce some poor-quality output, but they are good for concepts with a specific description. An image prompt can be 'concept of a futuristic driverless van' to get ideas of what an Apple car could have looked like. Image prompts usually need a lot of tuning to give good-quality output.

The AI chats are context-aware so they remember what was asked previously. If you ask 'What is the biggest country?', it will answer and if you ask 'What is the capital of that country?', it knows what country is being referred to.
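That context-awareness usually comes from the client keeping the whole conversation and sending it back to the model on every turn; the model itself doesn't remember anything between calls. A minimal sketch, assuming a generic `model` callable that takes a list of role/content messages (a common convention in chat APIs, not any specific product's API):

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Hypothetical chat wrapper that resends the full history on every turn."""

    def __init__(self, model: Callable[[List[Message]], str],
                 system_prompt: str = "You are a helpful assistant."):
        self.model = model
        self.history: List[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, question: str) -> str:
        self.history.append({"role": "user", "content": question})
        reply = self.model(list(self.history))  # the model sees every earlier turn
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

Because 'What is the capital of that country?' arrives together with the earlier question and answer, the model can resolve 'that country'. Real models have a finite context window, so very long chats eventually have to drop or summarize older turns.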

They are much better than search engines for certain types of information. Search engines are good for news, shopping and social media, but when you just need an answer to a very specific question, it's usually tedious to click through each of the links to find it.

-

Apple wants to make grooved keys to stop nasty finger oil transfer to MacBook Pro screens

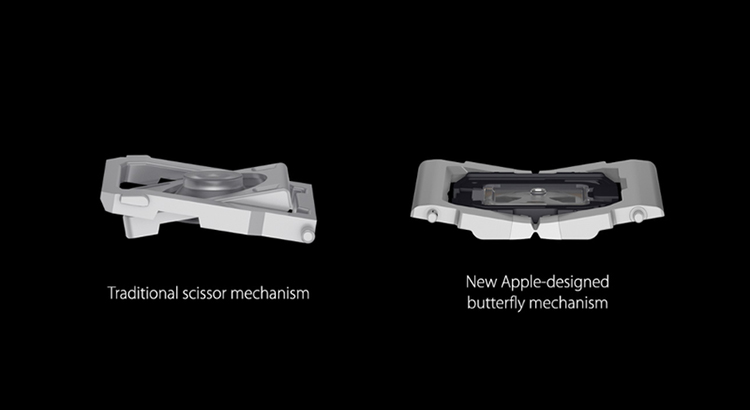

I always wondered if they could physically recess the keys when closing the display. All the keys are in a line horizontally and they have a scissor design:AppleInsider said:"[A] keyboard is one of the most frequently touched parts of a computer," says Apple. "Over time, keyboards collect large amounts of oil, dirt, grime, and other contaminants, especially from a user's hands."

"Recent advances in portable computing have also led to thinner, more compact devices," it continues, which "increases the likelihood of contact between the keys of the keyboard and the display while the notebook computer is closed and the display screen is positioned adjacent to the top surfaces of the keycaps."

"[Consequently] contaminants and debris on the keycaps can cause damage to the display," says the patent. "For example, oil on the keycaps can transfer to the display when the display is closed over the keyboard thereby leaving unsightly oil smudges at the points of contact with the display."

There is also the fact that "dirt on the keycaps can scratch the face of an abutting display." But even in ordinary, everyday use, you've seen a faint outline of the keyboard on a MacBook Pro display and Apple is right that it is unsightly.Since there is no way to avoid our fingertips leaving an oily trace, and no practical way to ask us to wear gloves, Apple is not planning to do much about this. It just intends to use keycaps that have a "polished surface [which] is also resistant to oil and dirt buildup."

On the scissor key to the right side, turning that lever would push the key down. If there were 6-7 metal poles across the keyboard, turning those when the laptop lid closes would push the keys down. Since the laptop has a battery, it can be done electrically.

It's best to avoid mechanical failures with fewer moving parts but maybe it can be done in a way that a mechanical failure leaves the keys up.
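The lid-triggered mechanism and its fail-safe could be driven by control logic along these lines. This is a hypothetical sketch: the lid-angle threshold, the `actuator` callback and the spring-return behaviour are all assumptions, not anything from Apple's patent.

```python
from enum import Enum
from typing import Callable


class KeyState(Enum):
    UP = "up"
    RECESSED = "recessed"


# Hypothetical: recess the keys once the lid is closed past this angle.
LID_CLOSE_ANGLE_DEG = 20.0


class KeyRetractor:
    """Turns the (hypothetical) lever bars to push the keys down as the lid closes.

    `actuator(recess)` is an assumed hardware callback that returns True if the bars
    moved. The design assumes springs return the keys to UP whenever the bars are not
    holding them down, so any actuator failure is treated as UP (the fail-safe).
    """

    def __init__(self, actuator: Callable[[bool], bool]):
        self.actuator = actuator
        self.state = KeyState.UP

    def on_lid_angle(self, angle_deg: float) -> KeyState:
        recess = angle_deg < LID_CLOSE_ANGLE_DEG
        if recess == (self.state is KeyState.RECESSED):
            return self.state  # already in the right position
        moved = self.actuator(recess)
        self.state = KeyState.RECESSED if (moved and recess) else KeyState.UP
        return self.state
```

Defaulting to UP on any failure matches the idea that a broken mechanism should leave the keyboard usable rather than stuck down.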

The tissue paper that came with the Mac works quite well to keep the display clean. The paper is shown at 3:48 in the video:

A retail option like this would be good, similar to the Apple polishing cloth but much thinner.

This wasn't much of an issue with older Macs. It started showing in more recent designs. I imagine it could also be solved by making the bottom case 0.1mm taller so that the keyboard keys never sit above the case height.

-

Rumor: M4 MacBook Pro with AI enhancements expected at the end of 2024

tenthousandthings said:

> nubus said:
>
> > godofbiscuitssf said:
> >
> > > Apple has been doing ML integrations for years. Marketing-speak changed “ML” to “AI” and you’re buying into it.
> > >
> > > As for this article, Apple’s been updating the Neural Engine every time in the silicon, sometimes obviously with the number of cores, sometimes by stating so, with giant performance increases, and other times by changing the architecture of the core.
> >
> > Apple has indeed been delivering a Neural Processing Unit for years. However, even M3 Max is only delivering the ML performance of iPhone 14 Pro (17-18 TOPS). Intel is doing 34 TOPS with their 2023 laptop CPUs (combined NPU+GPU) and Lunar Lake will launch this year with 100 TOPS performance for laptops. Standing still is why the stock is dropping.
> >
> > Something will need to change. I would have killed M3 Ultra and asked the entire team to work on M4 or refocused M3 Ultra on ML at any cost to show at WWDC.
>
> The presentation on this at WWDC will be interesting. I can't find it right now, but I read somewhere there is no difference between the NPU in the A17 Pro and the M3, and the 35 trillion versus 18 trillion numbers reflect two different standards for measuring it. So one number is for the mobile industry, the other for the computer industry. Apple didn't double the peak NPU ops/sec in the A17 Pro versus the A16 Bionic. Instead, the competition changed to a different standard that provided better-sounding numbers, and Apple was forced to adopt that for the A17 Pro and the iPhone 15 Pro without comment, but they kept the old standard for the M3, to avoid appearing to claim an astounding leap forward in the NPU relative to the M1 and M2, when in reality it is incremental.
>
> I'll try to find it, it wasn't an anonymous internet commenter or anything, it described the change, but it was only like two sentences and now I can't find it.

The Mac one uses FP16 to measure TOPS:

https://www.anandtech.com/show/21116/apple-announces-m3-soc-family-m3-m3-pro-and-m3-max-make-their-marks

iPhone one likely uses INT8/FP8:

https://www.baseten.co/blog/fp8-efficient-model-inference-with-8-bit-floating-point-numbers/

Other chips like Snapdragon use INT8; Intel could be using INT4:

https://www.theregister.com/2024/04/10/hailo_10h_ai_chip/

https://www.anandtech.com/show/21346/intel-teases-lunar-lake-at-intel-vision-2024-100-tops-overall-45-tops-from-npu-alone

GPU TFLOPS figures are FP32.

There's a test here with machine learning FP16:

At 6:40, it compares the M2 Max CPU, GPU and Neural Engine. The CPU gets 0.2 TOPS (using 5W), the GPU gets 10.8 TOPS (using 40W) and the NE gets 12.5 TOPS (using 10W), all FP16.

The M2 Max GPU is rated at 10.7 TFLOPS (30-core) and 13.6 TFLOPS (38-core) FP32, so these numbers match, which suggests the GPU isn't running FP16 twice as fast as FP32.
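The 10.7/13.6 TFLOPS ratings can be rebuilt from the usual peak formula: cores × ALUs per core × 2 FLOPs per fused multiply-add × clock. A minimal sketch; the 128 FP32 ALUs per GPU core and ~1.398 GHz clock are assumptions for the M2-generation GPU, not numbers from this thread:

```python
def gpu_peak_tflops(cores: int, alus_per_core: int = 128, clock_ghz: float = 1.398) -> float:
    """Peak FP32 TFLOPS = cores x ALUs per core x 2 FLOPs per FMA x clock.

    The defaults (128 FP32 ALUs per core, ~1.398 GHz) are assumed values for the
    M2-generation GPU.
    """
    flops = cores * alus_per_core * 2 * clock_ghz * 1e9
    return flops / 1e12


for cores in (30, 38):
    print(f"{cores}-core: {gpu_peak_tflops(cores):.1f} TFLOPS FP32")
```

If FP16 ran at double rate, the measured FP16 figure would be expected to land near twice these peaks rather than close to them.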

This means the GPU and the NE deliver roughly the same performance, so if the NE is 35 TOPS INT8, the higher-end Max GPUs are also roughly 35 TOPS INT8. The total compute of the M3 Max, if both the NPU and GPU are used, would then be around 70 TOPS, and the M3 Ultra would be around 100 TOPS.
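The comparison above rests on restating TOPS figures at a common precision. A minimal sketch of that normalization, assuming (as the post does, not as a published spec) that each halving of bit width doubles peak ops/s:

```python
# Peak throughput relative to FP16. Assumption: each halving of bit width
# doubles peak ops/s; this is the premise of the comparison, not a vendor spec.
PRECISION_SCALE = {"FP32": 0.5, "FP16": 1.0, "INT8": 2.0, "INT4": 4.0}


def convert_tops(tops: float, from_precision: str, to_precision: str) -> float:
    """Restate a peak TOPS figure at another precision under the doubling assumption."""
    return tops * PRECISION_SCALE[to_precision] / PRECISION_SCALE[from_precision]


def combined_tops(*units: float) -> float:
    """Sum of peak figures; real workloads rarely keep every unit fully busy."""
    return sum(units)


m3_ne_int8 = convert_tops(18, "FP16", "INT8")      # 36.0, in line with the ~35 TOPS figure
intel_npu_int8 = convert_tops(45, "INT4", "INT8")  # 22.5, only if Intel's 45 TOPS were INT4
m3_max_total = combined_tops(35, 35)                # NE + GPU at ~35 TOPS INT8 each

print(m3_ne_int8, intel_npu_int8, m3_max_total)
```

Summing peaks gives an upper bound; whether a workload can actually split across NE and GPU at once is a separate question.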

In practice, it can be higher or lower depending on what's being run.

Because the NE only uses 10W versus 40W for the GPU, Apple could increase the core count from 16 to 32 cores to get 70 TOPS INT8, and potentially improve the architecture to go higher. But then it couldn't use the same NE across iPhone and Mac, so there would have to be some kind of Neural Engine Pro/Max, similar to the GPUs, e.g. iPhone 16/MacBook M4 = 16-core NE (45 TOPS), M4 Pro/Max = 32-core NE (90 TOPS), M4 Ultra = 64-core NE (180 TOPS).
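The efficiency argument and the core-count tiers can be checked with simple arithmetic. A minimal sketch using the M2 Max measurements quoted above, assuming throughput and power both scale linearly with core count, and reusing the measured ~10W as the power of a hypothetical 16-core, 45 TOPS NE:

```python
from typing import Tuple

# M2 Max measurements from the test quoted above, all FP16: unit -> (TOPS, watts).
MEASURED = {"CPU": (0.2, 5.0), "GPU": (10.8, 40.0), "NE": (12.5, 10.0)}


def tops_per_watt(tops: float, watts: float) -> float:
    return tops / watts


def scale_ne(base_tops: float, base_watts: float, base_cores: int, cores: int) -> Tuple[float, float]:
    """Scale an NE linearly with core count.

    Assumes both throughput and power grow in proportion to cores, which ignores
    clock, memory-bandwidth and architecture changes.
    """
    factor = cores / base_cores
    return base_tops * factor, base_watts * factor


for unit, (tops, watts) in MEASURED.items():
    print(f"{unit}: {tops_per_watt(tops, watts):.2f} TOPS/W")

# Hypothetical 16-core NE at 45 TOPS INT8, reusing the measured ~10 W as its power draw.
for cores in (16, 32, 64):
    tops, watts = scale_ne(45.0, 10.0, 16, cores)
    print(f"{cores}-core NE: ~{tops:.0f} TOPS INT8 at ~{watts:.0f} W")
```

On those measurements the NE is roughly 4-5x as efficient per watt as the GPU, so under this linear model even a 64-core NE would draw about what the M2 Max GPU did.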