Apple's new Photos app will utilize generative AI for image editing

A new teaser on Apple's website could be indicative of some of the company's upcoming software plans, namely a new version of its ubiquitous Photos app that will tap generative AI to deliver Photoshop-grade editing capabilities for the average consumer, AppleInsider has learned.

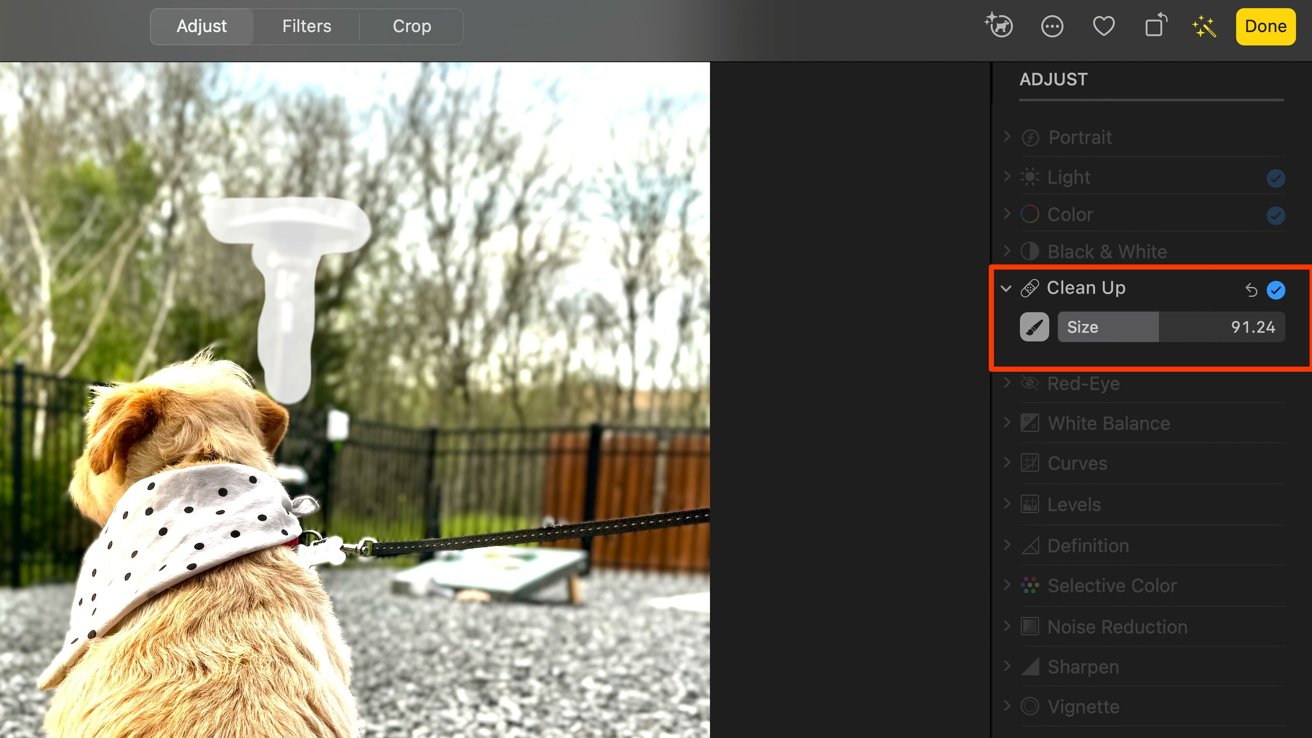

The new Clean Up feature will make removing objects significantly easier

The logo promoting Tuesday's event on Apple's website suddenly turned interactive earlier on Monday, allowing users to erase some or all of the logo with their mouse. While this was initially believed to be a nod towards an improved Apple Pencil, it could also refer to an improved editing feature Apple plans to unleash later this year.

People familiar with Apple's next-gen operating systems have told AppleInsider that the iPad maker is internally testing an enhanced feature for its built-in Photos application that would make use of generative AI for photo editing. The feature is dubbed "Clean Up" in pre-release versions of Apple's macOS 15, and is located inside the edit menu of a new version of the Photos application alongside existing options for adjustments, filters, and cropping.

The feature appears to replace Apple's Retouch tool available on macOS versions of the Photos app. Unlike the Retouch tool, however, the Clean Up feature is expected to offer improved editing capabilities and the option to remove larger objects within a photo.

With Clean Up, users will be able to select an area of a photo via a brush tool and remove specific objects from an image. In internal versions of the app, testers can also adjust the brush size to allow for easier removal of smaller or larger objects.

While the feature itself is being tested on Apple's next-generation operating systems, the company could also decide to preview or announce it early, as a way of marketing its new iPad models.

The Clean Up feature will replace Apple's current Retouch tool

During the "Let Loose" iPad-centric event, Apple is expected to unveil two new models of the iPad Air and iPad Pro, the latter of which is rumored to feature the company's next-generation M4 chip. The M4 could introduce greater AI capabilities via an enhanced Neural Engine with, at the very least, an increase in cores.

An earlier rumor claimed that there was a strong possibility of Apple's new iPad Pro receiving the M4 system-on-chip. Apple is also expected to market the new tablet as an AI-enhanced device, after branding the M3 MacBook Air the best portable for AI.

While Apple has been working on its in-house large language model (LLM) for quite some time, it is unlikely that we will see any text-related AI features make their debut during the "Let Loose" event. The Clean Up feature, however, would provide a way of showcasing new iPad-related AI capabilities.

Should it choose to leverage the new Clean Up feature ahead of its annual developers conference in June, Apple would have the opportunity to promote its new iPads as AI-equipped devices. Giving users the option to remove an object from a photo with their Apple Pencil would be a good way of showcasing the practical benefits of artificial intelligence.

By demonstrating the real-world use cases for AI, the company likely aims to gain a leg up on existing third-party AI solutions, many of which only utilize artificial intelligence to offer short-term entertainment value in the form of chatbots.

Apple teases its event with a new Apple Pencil erase feature

Although the feature provides some insight into what an AI-powered iPad might look like, it remains to be seen exactly when Apple will announce the Clean Up feature. Apple could instead opt to preview the feature at its Worldwide Developers Conference (WWDC) in June.

Users of Adobe's Photoshop for iPad have had access to a similar feature called "Content-Aware Fill" since 2022. It allows users to remove objects from an image by leveraging generative AI, making it as though the objects were never there to begin with.

The "Content-Aware Fill" feature gradually evolved into "Generative Fill," which offers additional functionality and is available across various Adobe products. In addition to Photoshop, the feature can be found in Adobe Express and Adobe Firefly.

With Generative Fill, users of Adobe's applications simply brush an area of a photo to remove objects of their choosing. Adobe's apps even offer the option to adjust brush size. Apple's new Clean Up feature bears some resemblance to Adobe's.

Clean Up is expected to make its debut alongside Apple's new operating systems in June, though there is always the possibility a mention could slip into Tuesday's iPad media event. Apple also has plans to upgrade Notes, Calculator, Safari, Calendar, and Spotlight with iOS 18.

Read on AppleInsider

Comments

Pixel's Best Take, Magic Eraser, and repositioning are the bare minimum Apple needs to give us!

Magic Editor seems to be using what you describe as generative AI in the Clean Up feature of Apple's Photos app for Mac.

https://pixel.withgoogle.com/Pixel_8_Pro/use-magic-editor?hl=en&country=US

What we agree on is that words matter. Generative AI works by utilizing an ML model to learn the patterns and relationships in a dataset of human-created content. GenAI then uses those patterns learned to generate new content. So are Clean-up on a Mac and Magic Editor on an Android smartphone both using simple machine learning or Generative AI?

https://news.mit.edu/2023/explained-generative-ai-1109

Based on what you wrote, Magic Editor uses generative AI just as surely (or not) as Clean Up does:

Quote: "With Clean Up, users will be able to select an area of a photo via a brush tool and remove specific objects from an image. In internal versions of the app, testers can also adjust the brush size to allow for easier removal of smaller or larger objects." And that differs from Magic Editor in what way?

Still no improvement in photos management, metadata management, multi-photo-library management, external storage management, photo backup management, video and live photo management?

On iOS, you still can’t create smart albums, though you can use smart albums created on macOS. Really?

https://blog.google/products/photos/google-photos-magic-editor-pixel-io-2023/

I think you've been confusing things with the old Magic Eraser from the Pixel 6, which wasn't using Google's GenAI.

As for why certain things can't be selected for editing, it's not because it's not using GenAI:

Google Photos' Magic Editor may not be able to select all objects because it isn't allowed to edit certain types of photos or elements in them, such as ID cards, receipts, and other documents that violate Google's GenAI terms. Magic Editor also can't edit faces, parts of people, or large selections. When a user tries to edit one of these items, an error message will appear.

We can wait to see what Apple builds into this year's iPhone for on-device photo editing. You say it will be GenAI-driven, and thus it will do more than Magic Editor can. We can come back in a few months and revisit it. I'll let it rest until Apple actually announces something.

https://blog.google/products/photos/magic-eraser/

It's not generative AI. I don't know why you're so caught up on this, but you do you. Google's marketing convinced you really well, I guess.

I'm interested in seeing what it does too, but I don't really care who does what better. The point of this conversation is that words have meaning, and Google calls things AI even when it's ML. It's so bad that Apple had to start doing it in its press materials so it didn't appear to be behind to idiots who didn't know better.