Apple invention details AR mapping system for iPhone, head-mounted displays

An Apple invention published by the U.S. Patent and Trademark Office on Thursday details a method of overlaying interactive digital information onto real-world environments displayed on a portable device screen, specifically data regarding navigation assets like points of interest.

An augmented reality invention, Apple's patent application for a "Method for representing points of interest in a view of a real environment on a mobile device and mobile device therefor" deals with interactions between computer-generated objects and representations of real environments. In particular, the filing describes techniques by which images of the real world are enhanced by point of interest (POI) information.

Landmarks, buildings and other popular objects are commonly referred to as POIs in mapping applications, including Apple's own Maps. The company already incorporates POI data into first-party features, such as Maps searches for nearby restaurants or gas stations. Today's IP takes the idea beyond two-dimensional maps by adding AR into the mix.

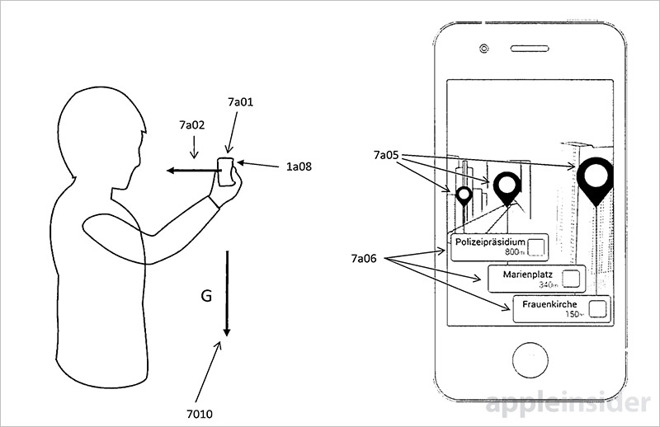

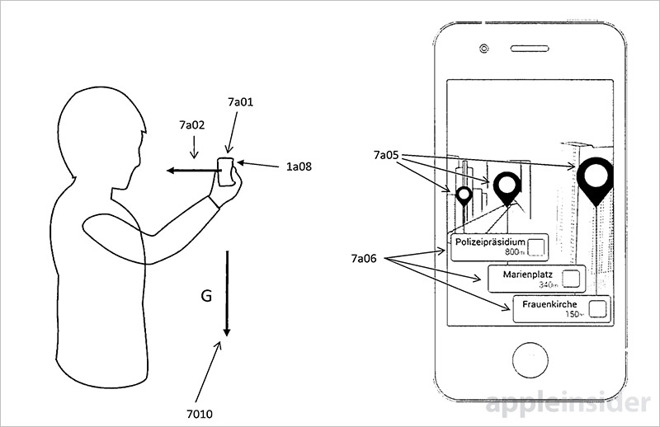

In practice, a device first captures images or video of a user's environment with an onboard camera and displays those images onscreen. Using geolocation information gleaned from GPS, compass and other sensor data, the device determines its position and heading relative to its surrounding environment.

In some embodiments, information about nearby POIs, or those that are determined to be relevant or visible in the recorded real-world view, is downloaded from offsite servers. Using latitude, longitude and altitude data, the system can compute the relative location of target POIs and display them correctly onscreen.
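
As a rough illustration of that computation, the sketch below derives distance, compass bearing and elevation angle from a device to a POI using the standard haversine and initial-bearing formulas. The filing does not spell out its math in this article, so the function names, the spherical-Earth radius and the overall approach are assumptions rather than Apple's method.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; a spherical approximation (assumption)


def distance_and_bearing(dev_lat, dev_lon, poi_lat, poi_lon):
    """Return (ground distance in metres, bearing in degrees clockwise from north)."""
    phi1, phi2 = math.radians(dev_lat), math.radians(poi_lat)
    dphi = phi2 - phi1
    dlam = math.radians(poi_lon - dev_lon)

    # Haversine formula for great-circle distance.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    # Initial bearing from the device toward the POI.
    y = math.sin(dlam) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlam)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def elevation_angle(distance_m, dev_alt_m, poi_alt_m):
    """Angle in degrees above (+) or below (-) the horizon, from the altitude difference."""
    return math.degrees(math.atan2(poi_alt_m - dev_alt_m, distance_m))
```

Bearing and elevation together give the direction in which a marker would need to be drawn relative to the device.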

After determining POI location, graphical indicators with interactive annotations are overlaid on top of the captured image. Sensors, including a depth-sensing camera, might be used to place the markers in their correct spots. Importantly, indicators are anchored to their respective real-world counterparts.

For example, when a user moves their device to the left, a building shown onscreen will appear to shift to the right of the display. When such movements occur, POI markers shift accordingly, resulting in perceived object persistence.
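
The anchoring behavior can be sketched with a simple pinhole-camera model that maps a POI's compass bearing to a horizontal pixel position given the device heading. The 60-degree field of view, the function names and the model itself are illustrative assumptions, not details from the filing.

```python
import math


def relative_bearing(poi_bearing_deg, heading_deg):
    """Signed angle from the device heading to the POI, in [-180, 180); positive is to the right."""
    return (poi_bearing_deg - heading_deg + 180.0) % 360.0 - 180.0


def marker_x(poi_bearing_deg, heading_deg, screen_width_px, hfov_deg=60.0):
    """Horizontal pixel position of a POI marker, or None if outside the camera's view.

    hfov_deg is a hypothetical horizontal field of view for the camera.
    """
    rel = relative_bearing(poi_bearing_deg, heading_deg)
    if abs(rel) >= hfov_deg / 2:
        return None  # POI is not in the captured image
    half = screen_width_px / 2
    # Pinhole projection: offset from centre scales with tan(angle).
    return half + half * math.tan(math.radians(rel)) / math.tan(math.radians(hfov_deg / 2))
```

With a POI at bearing 90 degrees, a device heading of 90 puts the marker at screen center; turning the device left to a heading of 80 moves the marker right of center, matching the shift described above.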

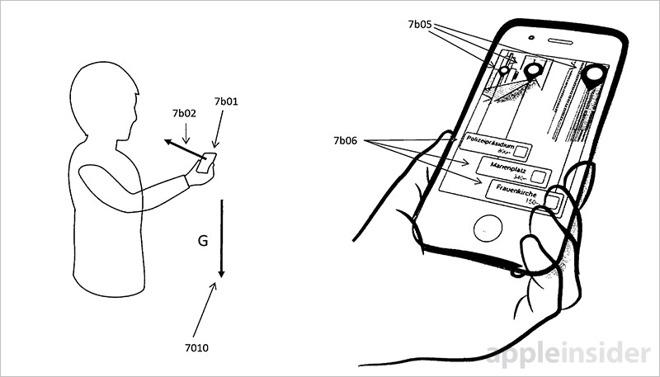

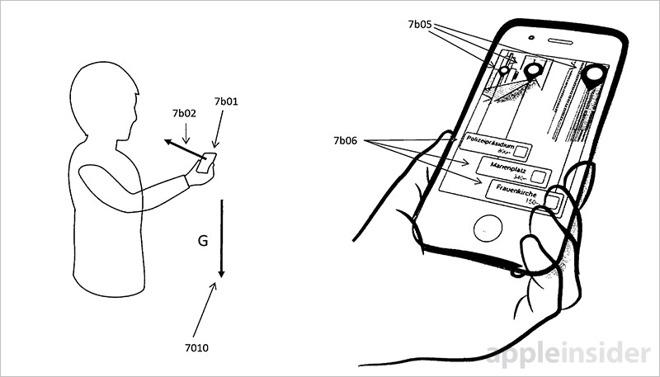

The invention further details more intuitive methods of interacting with the POI system than have been previously proposed. For example, iPhones are rarely held in a vertical position when navigating or performing operations other than picture taking. A more natural pose, with the top of the device angled slightly toward the ground, could push POI indicia offscreen.

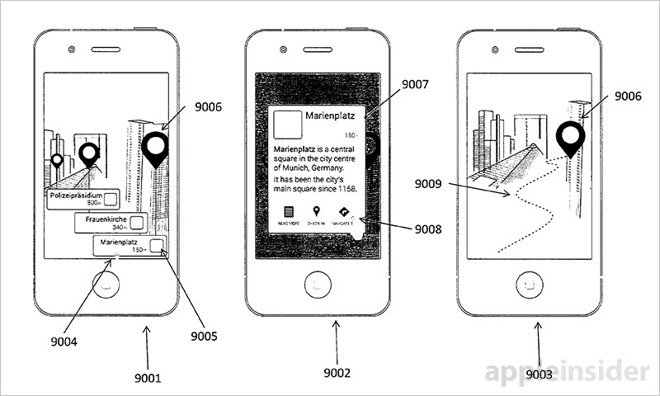

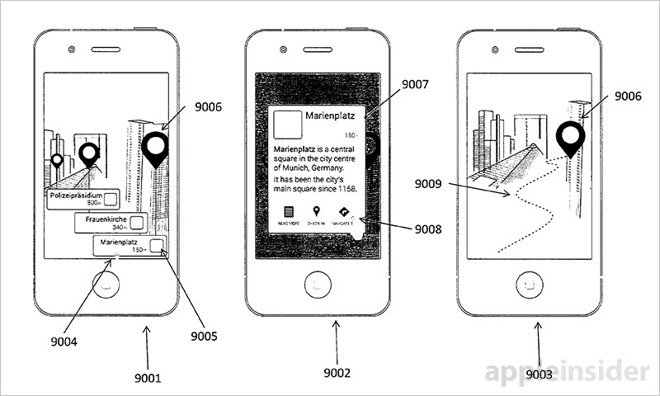

To compensate, the system intelligently draws lines or provides a "balloon" GUI that clearly connects POI annotations to real world objects. In such cases, users can easily tap on an annotation to bring up additional information while still holding the device in a natural manner.

Similar access methods are disclosed for POIs that appear in the middle or upper portion of a display. If the image position of the POI is far away from a user's thumb, for example, the system might generate POI annotations in screen real estate that is more easily accessible.
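
One way to picture this relocation logic is the sketch below, which moves an annotation into a thumb-reachable band at the bottom of the screen and returns a leader line back to the POI. The 60 percent cutoff, the coordinate convention and the function name are hypothetical choices made for illustration.

```python
def place_annotation(poi_x, poi_y, screen_h, reach_zone_top_frac=0.6):
    """Decide where to draw a POI annotation.

    Screen coordinates: origin at top-left, y grows downward.
    reach_zone_top_frac is an assumed fraction of screen height below which
    a thumb can comfortably tap.
    """
    reach_top = screen_h * reach_zone_top_frac
    if reach_top <= poi_y <= screen_h:
        # POI already sits in the reachable band: annotate in place, no line needed.
        return {"annotation": (poi_x, poi_y), "leader": None}

    # Otherwise put the annotation in the middle of the reachable band
    # and connect it to the POI (clamped to the top edge if offscreen).
    annotation = (poi_x, reach_top + (screen_h - reach_top) / 2)
    anchor = (poi_x, max(poi_y, 0))
    return {"annotation": annotation, "leader": (anchor, annotation)}
```

A POI at the top of the frame, or one pushed offscreen by a tilted device, ends up with a tappable balloon near the bottom and a line pointing back to it.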

Other embodiments describe a user interface that switches from a real world view to a vanilla POI list view -- with compass-based directional indicators -- when a user device is tilted beyond a predetermined threshold. This particular presentation method would be ideal for at-a-glance navigation.
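
A minimal sketch of that mode switch follows, assuming a tilt angle measured from vertical and a 60-degree threshold; the filing, as described here, does not state a specific value, and the direction labels are likewise invented for illustration.

```python
# Eight coarse direction labels, clockwise starting from straight ahead (hypothetical wording).
DIRECTIONS = [
    "ahead", "ahead-right", "right", "behind-right",
    "behind", "behind-left", "left", "ahead-left",
]


def select_view(tilt_from_vertical_deg, threshold_deg=60.0):
    """Return "list" once the device is tilted past the threshold, else "camera"."""
    return "list" if tilt_from_vertical_deg > threshold_deg else "camera"


def list_entry(name, poi_bearing_deg, heading_deg):
    """Format one list row with a compass-based direction relative to the device heading."""
    rel = (poi_bearing_deg - heading_deg) % 360.0
    index = int((rel + 22.5) // 45) % 8  # snap to the nearest 45-degree sector
    return f"{DIRECTIONS[index]}: {name}"
```

A production implementation would likely add hysteresis around the threshold so the view does not flicker when the device hovers near the cutoff.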

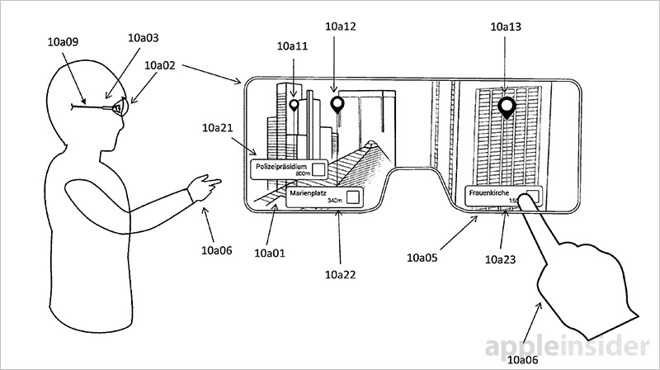

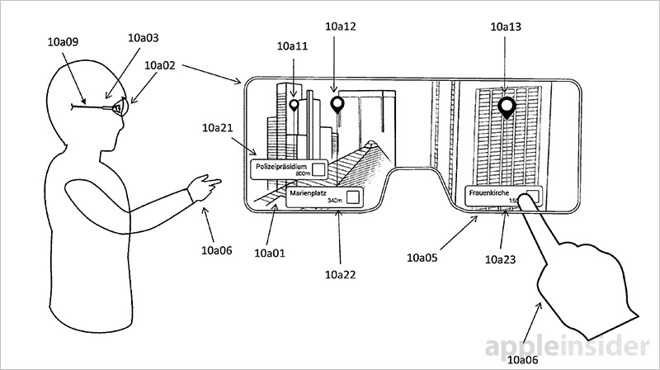

Apple's invention goes on to describe deployment on alternative devices like a head-mounted display with a semi-transparent screen.

With an HMD, users can interact with POI overlays by reaching their hand out in front of their face to overlap onscreen annotations. Positioning sensors or an onboard camera might determine finger location relative to onscreen GUI representations, triggering a preconfigured app action like loading additional POI information. In such scenarios, a balloon GUI presented at a lower onscreen position would likely be a more comfortable mode of operation for most users.
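
In the simplest case, the overlap check reduces to a hit test between a tracked fingertip position and the rectangles occupied by onscreen balloons. The sketch below assumes the fingertip has already been projected into display coordinates; the `Balloon` type and `hit_test` function are hypothetical names, not part of any Apple API.

```python
from dataclasses import dataclass


@dataclass
class Balloon:
    """Axis-aligned rectangle of a POI annotation in display coordinates."""
    poi_id: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px, py):
        return self.x <= px <= self.x + self.width and self.y <= py <= self.y + self.height


def hit_test(balloons, finger_x, finger_y):
    """Return the id of the first balloon the fingertip overlaps, or None."""
    for balloon in balloons:
        if balloon.contains(finger_x, finger_y):
            return balloon.poi_id
    return None
```

The returned id could then be used to trigger whatever action the app has configured, such as loading additional POI information.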

Apple's patent application is an extension of granted IP reassigned by German AR firm Metaio, which the tech giant purchased in 2015 to further efforts in the burgeoning AR/VR market. Apple has expressed an increasingly intense interest in AR over the past year, but it wasn't until the announcement of ARKit in iOS 11 that the company's ambitions were revealed.

Whether Apple plans to integrate AR into Maps, or another first-party app, in the near future remains to be seen. So far, Apple seems content to deliver ARKit as a coding platform on which developers can build their own wares. Although ARKit was just recently released as part of the iOS 11 beta, coders have already created a number of impressive demo projects showing off the solution's potential.

Apple's AR point of interest patent application was first filed in April 2017 and credits Anton Fedosov, Stefan Misslinger and Peter Meier as its inventors.

Comments

This seems like the last thing mentioned in the article, yet it was the headline grabber. My 2 cents ... I'd think the main article's content is the more likely usage; I just don't see Apple going down the Google Glass road any time soon for Joe Public. There certainly could be a head-mounted version from Apple for specialist usage, hence covering it in the patent, ranging from military to law enforcement (just as examples) or even gamers, but the iPhone itself is ideal for most of us for what I see as a huge market.

I'm no patent expert, but perhaps Apple will cover them if they were developed with Apple's ARKit?

I agree with @gwanberg that this patent does sound very much like Live View in AroundMe. Although I thought they turned that feature off years ago, maybe I'm wrong. It does seem like it takes it a step further though with being able to hold the phone in a more natural position while still seeing the relevant information. This is the type of thing I'm hoping to see more of in the AR space.

https://www.engadget.com/2012/09/11/nokia-reveals-new-city-lens-for-windows-phone-8/

To answer that question you need to be intimately familiar with the prior art and the specifics of this particular filing, and determine what specific components of the invention are being patented, assuming this is a utility patent. Within utility patents, there are four types: process patents, machine patents, manufacture patents, and composition of matter patents.

To prepare you must read all the notes from the USPTO regarding this patent and all the material accompanying the patent application by Apple's engineers and patent attorneys.

I'm sure this would be a good start in answering your question.

In particular, GPS is only available on the iPhone (and Apple Watch?), since it requires the cellular chipset. iPads and laptops are left without.

Unless the newest iPads have GPS hardware installed but not activated and not detected by those curious experts who dig at everything Apple, one should expect to see iPads with GPS with the next hardware release cycle. Maybe laptops too?