Self-driving 'Apple Car' may combine LiDAR with other sensors for better decision-making

As work on the "Apple Car" progresses, Apple is coming up with new ways to improve automated driving systems, including using other sensors to enhance LiDAR scans to better determine what's on the road.

Waymo's LiDAR-equipped minivan concept

Apple has been working on self-driving systems for quite some time, including testing its developments on a fleet of vehicles in the United States. While it is unclear what the company's ultimate aim is for the system, such as whether it will be a feature of a supposed "Apple Car" or will be licensed out to other manufacturers, it is known that Apple is still looking at how it can improve the technology.

In a patent granted by the US Patent and Trademark Office on Tuesday titled "Shared sensor data across sensor processing pipelines," Apple proposes that multiple processes at play within a self-driving system could be more collaborative with collected data.

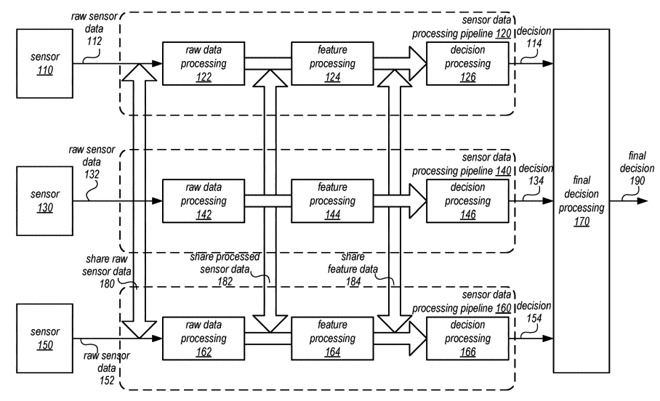

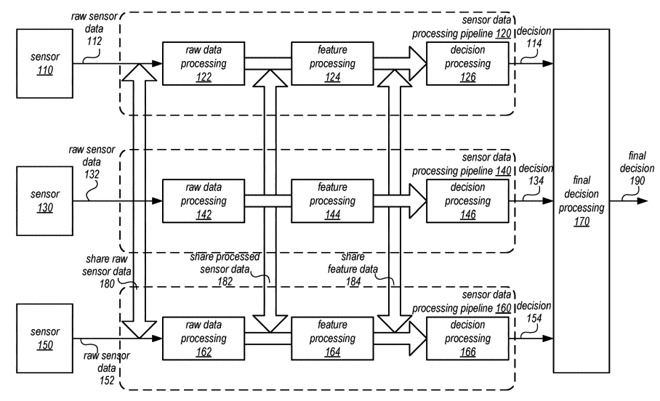

A typical sensor data processing pipeline would, in its simplest terms, involve data collected by sensors being provided to a dedicated processing system, which can then determine the situation and a relevant course of action that it sends to other systems. Generally, data from a sensor would be confined to just one pipeline, with no real influence from other systems.

In Apple's proposal, each pipeline could make a perception decision based on the data it has collected, with multiple pipelines capable of offering different decisions because they use different collections of sensors. While individual perceptions would be taken into account, Apple also proposes that a fused perception decision could be made based on a combination of the two determined states.

A logical block diagram illustrating shared sensor data across processing pipelines.

Furthermore, Apple also suggests the data could be more granular, with decisions made and fused at different stages of each pipeline. This data, which can be shared between pipelines and can influence other processes, can include raw sensor data, processed sensor data, and even data derived from sensor data.

By offering more data points, a control system will have more information to work with when creating a course of action, and may be able to make a more informed decision.
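The idea of a fused perception decision can be illustrated with a small Python sketch. The patent coverage here does not say how decisions would be combined, so the confidence-weighted vote below is an assumption, and the names `Perception` and `fuse` and the confidence scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Perception:
    label: str         # what a pipeline believes it is looking at
    confidence: float  # hypothetical score between 0 and 1


def fuse(decisions):
    """Combine per-pipeline decisions with a confidence-weighted vote.

    This is an assumed fusion rule for illustration only.
    """
    if not decisions:
        raise ValueError("need at least one perception decision")
    totals = {}
    for decision in decisions:
        totals[decision.label] = totals.get(decision.label, 0.0) + decision.confidence
    overall = sum(totals.values())
    if overall <= 0:
        raise ValueError("confidences must sum to a positive value")
    best = max(totals, key=totals.get)
    # The fused confidence is the share of total support held by the winner.
    return Perception(best, totals[best] / overall)


# Each pipeline reaches its own decision from its own sensors.
camera = Perception("pedestrian", 0.6)
lidar = Perception("pedestrian", 0.8)
radar = Perception("pole", 0.5)

fused = fuse([camera, lidar, radar])
print(fused.label, round(fused.confidence, 3))
```

In this sketch the individual decisions remain available to the control system alongside the fused one, which matches the article's point that both would be taken into account.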

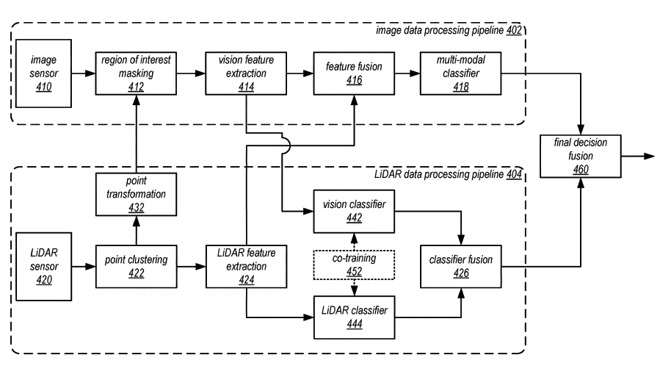

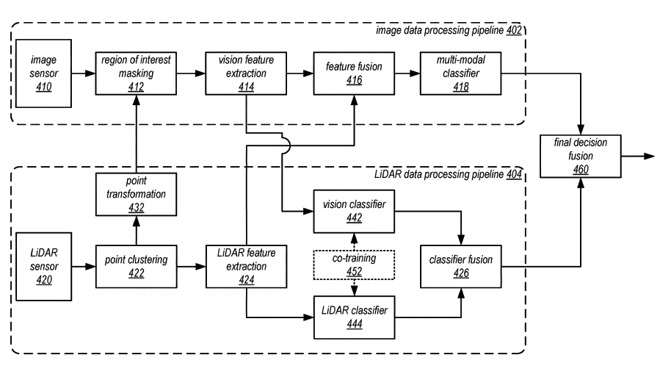

As part of the claims, Apple mentions how the pipelines could be for connected but different types of data, such as a first pipeline being for image sensor data while a second uses data from LiDAR.

Combining such data could allow for determinations that wouldn't normally be possible with one set of data alone. For example, LiDAR data can determine distances and depths, but it cannot see color, something that may be important when trying to recognize objects on the road, and which it could acquire from an image sensor data processing pipeline.
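One common way to give LiDAR points the color they lack is to project each point into a camera image and read the pixel it lands on. The Python sketch below shows that technique under stated assumptions: a simple pinhole camera model, points already expressed in the camera's coordinate frame, and made-up intrinsics (`fx`, `fy`, `cx`, `cy`). It is not taken from Apple's patent, and the function name `colorize` is hypothetical.

```python
def colorize(points, image, fx, fy, cx, cy):
    """Pair each LiDAR point with the RGB pixel it projects onto.

    points: (x, y, z) tuples in the camera frame, z pointing forward.
    image:  list of rows, each row a list of (r, g, b) tuples.
    fx, fy, cx, cy: assumed pinhole intrinsics, in pixels.
    """
    height, width = len(image), len(image[0])
    colored = []
    for x, y, z in points:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))  # pixel column
        v = int(round(fy * y / z + cy))  # pixel row
        if 0 <= u < width and 0 <= v < height:
            colored.append(((x, y, z), image[v][u]))
    return colored


# A 4x4 black image with a single red pixel at row 2, column 2.
image = [[(0, 0, 0)] * 4 for _ in range(4)]
image[2][2] = (255, 0, 0)

points = [
    (0.0, 0.0, 5.0),   # straight ahead, lands on the red pixel
    (10.0, 0.0, 1.0),  # far off to the side, outside the image
    (0.0, 0.0, -1.0),  # behind the camera
]
colored = colorize(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
print(colored)
```

Only the first point survives, now carrying both its depth from LiDAR and its color from the camera.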

Another logical block diagram, this time showing sharing between a LiDAR sensor pipeline and one for image processing.

Apple offers that the concept could be used with other types of sensor as well, including but not limited to infrared, radar, GPS, inertial, and angular rate sensors.

The patent lists its inventors as Xinyu Xu, Ahmad Al-Dahle, and Kshitiz Garg.

Apple files numerous patent applications on a weekly basis. Though they are in no way a guarantee that Apple is developing specific features or products, they do show areas of interest for the company's research and development efforts.

Driving self-driving forward

The patent is only the latest in a long string of self-driving filings, as well as connected Apple Car applications, that have surfaced over the years. On the sensor side, these filings have ranged from the creation of new types of LiDAR 3D mapping systems in 2016 to one from October 2019 suggesting how said systems could be hidden from view within the bodywork of the vehicle.

For processing, one May 2019 filing for a "confidence" algorithm would allow the system to get just enough data from its sensors to process the road, cutting down on the amount of data processing required to speed the process up and to save resources for other elements.

Others that surfaced in 2018 covered how a self-driving system could give passengers options, such as where to park or to change the direction of travel, then to act upon statements or gestures. A "Traffic direction gesture recognition" system found at the same time could recognize gestures of police officers and other officials directing traffic.

A "Cognitive Load Routing Metric for Vehicle Guidance" aimed to find the best route to take for a journey, by taking into account the complexity of the route. This included the number of lanes, narrowness of roads, street lights, and pedestrian traffic data among other data points, which could influence both directions provided to drivers and the self-driving system.

There have even been patent filings suggesting a self-driving system could adjust its driving style depending on the stress levels of passengers.

Comments

PS: Thank you for using a different car pic. 🙏 Have you considered contracting someone to create some nice, futuristic designs? From my experience, a great mockup can help draw a lot of attention to a website.

So if they are not going to sell cars, what is the point of all of this R&D? To sell the underlying system to automakers? But they are already working on that themselves. I don't see why they would ditch all that research effort and go with Apple; they will want to own the technology.

Or would Apple go the way of Smart and have an established automaker manufacture the cars with Apple technology? However that still doesn't answer the question of why Apple would want to do this with such low profit margins.

I think it is very likely that Apple won't be setting themselves up like existing automakers. If there is a model they are going to follow, look at Tesla, but extrapolate even more:

1. They won't own any manufacturing or assembly plants. It will all be contracted out. The assembly line design will definitely be theirs.

2. They won't have a dealer network and won't have "inventory". Everything will be BTO.

3. They will have a branded service network of some kind, possibly like Tesla's at-home service.

4. There will be features that sell their vehicle and that provide those margins.

5. They will need to have a branded charging network of some kind.

To me, all this automated driving R&D means they are on a 10 to 15 year timeline. I think they decided they couldn't have a competitive product without it. They can build, for lack of a better word, a "dumb" EV and have it shipping in a 3 to 4 year time frame, but I think they decided it wasn't worth it sometime in 2017 or whenever the Bob Mansfield shuffle happened. Too bad imo. I'd prefer a dumb EV, i.e., one without automated driving features, as long as it has class-leading efficiency. Wish they would buy out Lightyear One and do their relentless iteration with that type of idea.

All this was discussed like 3 weeks ago:

https://forums.appleinsider.com/discussion/215945/apple-will-maintain-bumper-to-bumper-control-of-apple-car-project-says-morgan-stanley/p1

What is "in this age of pandemics"? There is only one pandemic, not multiples. If you mean the history of man, then there are multiple, but that's life. If you then look at the historical data, you know that this is temporary, with pandemics not being a constant, but you suggest that fully autonomous vehicles will never happen because of ridesharing during "pandemics"? WTF?!

1. Okay, so name Apple's automobile manufacturing partners. I'll wait.

1. Nobody knows that yet. Everything we know at this point points to them not even being at that stage yet, so why would I know? What makes you sure they know for sure?

2. The point wasn't the Morgan Stanley angle, it was the discussion pointing to the numerous patents by Apple and articles outlining everything addressing these issues that people like yourself are ignoring.

It wasn't just me, there were some other thoughtful people in that thread that also vehemently disagreed with you, including one you thought was saying what you thought but was not.

I'll put money on it — this will not be the Rokr vehicle. It'll be Apple from the top down, leveraging their existing partners for some of it and probably new partners for the rest — whether that's someone like Magna or something we haven't seen yet, who knows. We know that they backed up from that end several years ago now when they reshuffled and brought Mansfield back in and may not have a solution there yet. But no signs point to what you're speculating as far as I can tell.

Saying things like "I know they can't do this because they have to build a factory that'll take 5-10 years" is seriously in "they won't just walk in" territory and you should know that by now.

This, exactly, plus the existing manufacturing partners they have to do many other components that would be a part of such a vehicle, from the chips all the way up to the glass and aluminum (or whatever). It's literally mapped out in front of us based on their existing business.

As for the idea of integrating LiDAR data, of course they will.

In the 90s, self-driving cars used cameras for lateral motion and position estimation. Cameras have high angular resolution, so they're great for determining which lane a car in the camera's view is in. None of the features of cars (size, taillight spacing, &c.) are standardized, though, so it's hard to tell if you're looking at a small car which is close, or a large car which is further away.

Around 1993, they started adding RADAR for longitudinal position and motion estimation. These sensors had great distance and speed resolution, but poor selectivity. They work in a pretty broad cone, so it's hard to tell if the distance and motion you get back are from the car in front of you, or a car a lane (or even two!) to the side. The data from both sensors was integrated and gave a pretty solid combined estimate of the world outside the car.

LiDAR gives good angular resolution and good distance resolution. Further, it's a lot less susceptible to external interference. It can't tell which light at an intersection is illuminated, though. I expect LiDAR to be used for most guidance with a color camera (or an array of several) used to identify signs and lights, and as backup in case the LiDAR returns nonsensical data.

Data from several internal sensors will probably be used as well. GPS receiver, at least one motion-compensated level, and at least two chassis IMUs (which might be used to synthesize the level). I would actually expect IMUs in each hub (along with the wheel speed sensor and a mic) to monitor flex and control semi-active suspension components.