Apple AR wearables could track gaze, alter resolution for legibility & power saving

Multiple avenues of research from Apple focus on the ability of wearable AR devices like the rumored "Apple Glass" to sense what you're looking at, and to reconfigure themselves to present the information a wearer needs in context.

Patent application drawings

A wide range of Apple's plans for "Apple Glass" and its other AR work are revealed across multiple patent applications. Although filed separately, together they give an overall picture of Apple working on how people will be able to interact with real and virtual devices.

"[Using this] a geometric layout of the physical environment is determined," says the application. "Based on the type of the physical environment, one or more virtual-reality objects are displayed corresponding to a representation of the physical environment."

So when the physical layout of the environment is established, then the system can accurately position virtual objects.

We are still some way from a Star Trek holodeck, so this system and all of them touch on issues relating to the headsets that users must wear. "Display System With Virtual Image Distance Adjustment and Corrective Lenses," addresses how headsets could work for people who have vision problems.

"Some users of head-mounted devices have visual defects such as myopia, hyperopia, astigmatism, or presbyopia," says this application. "It can be challenging to ensure that an optical system in a head-mounted device displays computer-generated content satisfactorily and provides an acceptable viewing experience for users with visual defects."

"If care is not taken," it continues, "it may be difficult or impossible for a user with visual defects to focus properly on content that is being displayed or content may otherwise not be displayed as desired."

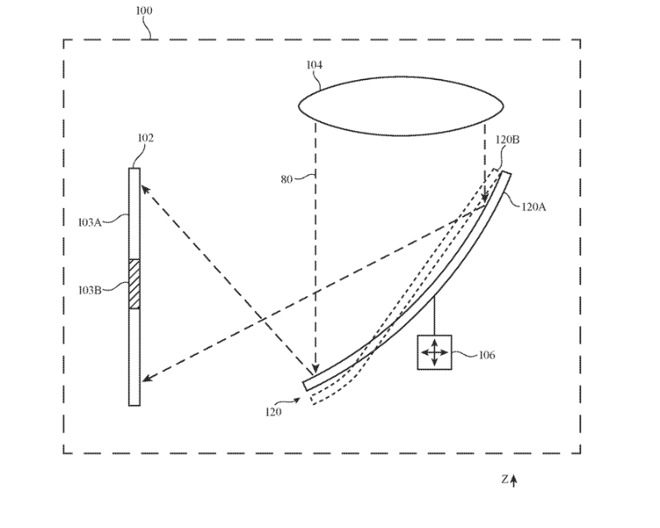

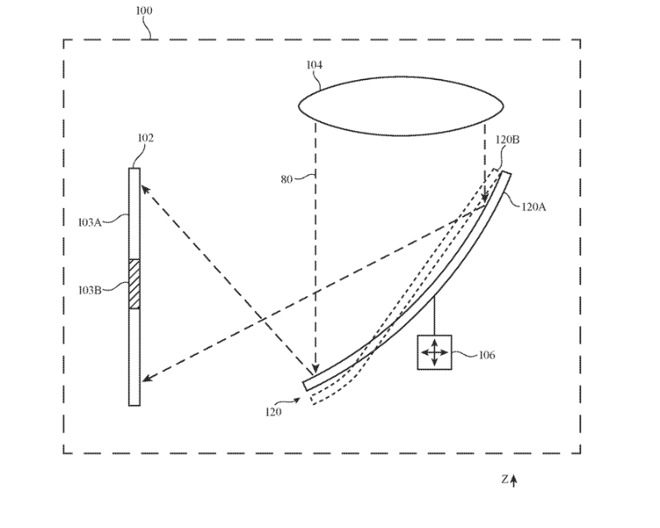

Detail from a patent showing adjustable headset design

Apple proposals revolve around specifics to do with "head-mounted devices [that] may include optical systems with lenses." The application says that, "[these] lenses allow displays in the devices to present visual content to users."

In headsets which have "a transparent display so that a user may observe real-world objects" as well as virtual ones, they could use cameras to relay the reality of that physical environment. This kind of "pass-through camera" can intelligently alter its own settings to fit what is being looked at.

"The pass-through camera may capture some high-resolution image data for displaying on the display," says this application. "However, only low-resolution image data may be needed to display low-resolution images in the periphery of the user's field of view on the display."

So the headset may alter the resolution of what it shows to the wearer, depending on what they're looking at. This has the benefits of meaning less data to process, which helps performance, and consequently battery life.

Foveated imaging, as this alteration of resolution is called, does depend on the ability to know where a user is looking. Part of that can be determined from the physical movement of the head, but that doesn't help the system know which specific parts of a display are being looked at.

The last of the four new related patent applications focuses on this gaze-tracking issue, but for the operation of the devices themselves. "Gaze-based User Interactions" describes methods by which "a user uses his or her eyes to interact with user interface objects displayed on the electronic device."

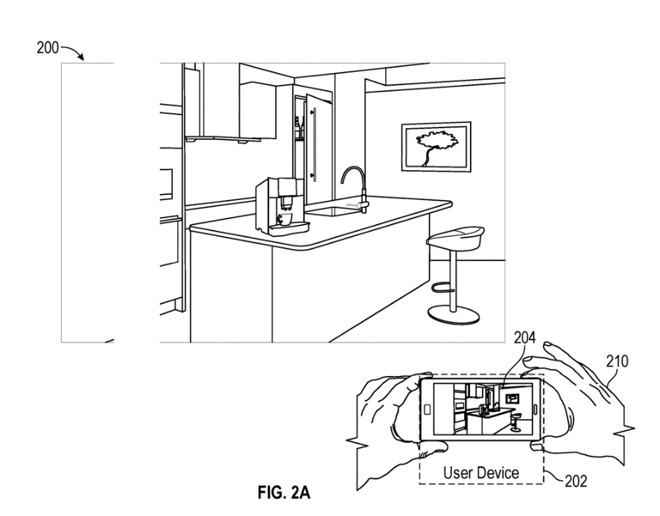

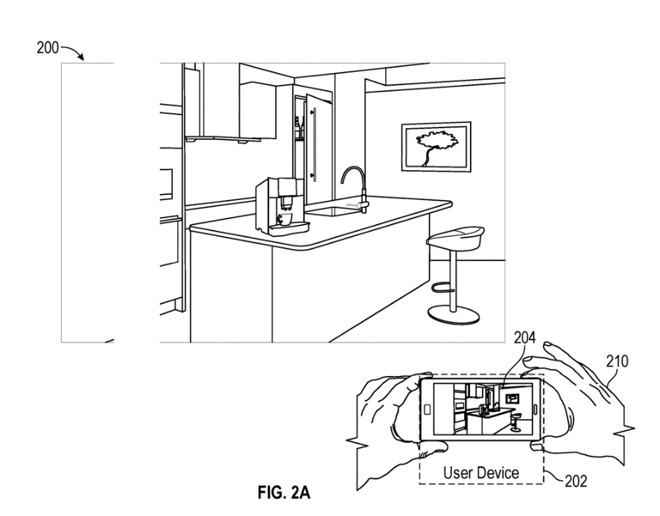

Detail from the patent application showing a real-world environment being mapped to allow positioning of virtual objects

So devices could "provide a more natural and efficient interface." As well as a user being able to select objects or items by looking at them, these methods propose being able to interpret everything from a glance to a Paddington hard stare.

The application describes issues to do with difficulties caused by "uncertainty and instability of the position of a user's eye gaze."

The four applications each represent developments and refinements in areas that Apple has been pursuing for years and across many more patents. As well as an insight into areas that the firm is concerned with, they're also an example of how Apple benefits from being across hardware and software.

Patent application drawings

A wide range of Apple's plans for its "Apple Glass" and all Apple AR work have been revealed with multiple patent applications. Filed separately, they still combine to contribute an overall picture of Apple working on aspects of how people will be able to interact with real and virtual devices.

Detecting the real-world environment

The four patent applications are broadly concerned with detecting, and working with, the real and virtual environments around a user. "Environment-based Application Presentation" proposes a method of interpreting what cameras see of the real world as the basis for a kind of map.

"[Using this] a geometric layout of the physical environment is determined," says the application. "Based on the type of the physical environment, one or more virtual-reality objects are displayed corresponding to a representation of the physical environment."

Once the physical layout of the environment has been established, the system can position virtual objects accurately within it.
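As a rough illustration of that last step, the Python sketch below picks a surface for a virtual object from a layout that has already been detected. It is not Apple's method: the `Plane` type, the `place_object` function, the example room, and the rule of preferring the highest surface that fits are all hypothetical assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Plane:
    """A detected, axis-aligned rectangular surface (hypothetical representation)."""
    label: str                          # e.g. "floor", "table", "wall"
    horizontal: bool                    # True for floors and tabletops, False for walls
    center: Tuple[float, float, float]  # (x, y, z) in metres, with y as height
    width: float                        # extent along x, in metres
    depth: float                        # extent along z, in metres


def place_object(planes: List[Plane],
                 footprint: Tuple[float, float]) -> Optional[Tuple[str, Tuple[float, float, float]]]:
    """Return (label, position) of the highest horizontal plane that fits the footprint, or None."""
    fw, fd = footprint
    candidates = [p for p in planes if p.horizontal and p.width >= fw and p.depth >= fd]
    if not candidates:
        return None
    # Assumed rule: prefer the highest surface, e.g. a tabletop over the floor.
    best = max(candidates, key=lambda p: p.center[1])
    return best.label, best.center


# A hypothetical room layout, standing in for what the cameras would detect.
room = [
    Plane("floor", True, (0.0, 0.0, 0.0), 4.0, 5.0),
    Plane("table", True, (1.0, 0.75, -1.0), 1.2, 0.8),
    Plane("wall", False, (0.0, 1.5, -2.5), 4.0, 0.0),
]
print(place_object(room, (0.5, 0.5)))  # small object: goes on the table
print(place_object(room, (2.0, 2.0)))  # large object: only the floor fits
```

A real system would work with arbitrary orientations, occlusion and the "type of the physical environment" the application mentions; the sketch only shows how a known layout constrains where virtual content can go.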

We are still some way from a Star Trek holodeck, so this application, like the others, touches on issues relating to the headsets that users must wear. "Display System With Virtual Image Distance Adjustment and Corrective Lenses" addresses how headsets could work for people who have vision problems.

"Some users of head-mounted devices have visual defects such as myopia, hyperopia, astigmatism, or presbyopia," says this application. "It can be challenging to ensure that an optical system in a head-mounted device displays computer-generated content satisfactorily and provides an acceptable viewing experience for users with visual defects."

"If care is not taken," it continues, "it may be difficult or impossible for a user with visual defects to focus properly on content that is being displayed or content may otherwise not be displayed as desired."

Detail from a patent showing adjustable headset design

Apple's proposals concern the specifics of "head-mounted devices [that] may include optical systems with lenses." According to the application, "[these] lenses allow displays in the devices to present visual content to users."
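The interplay between virtual image distance and corrective lenses can be shown with basic dioptre arithmetic. The sketch below is not taken from the application; it assumes a simplified thin-lens model in which the corrective lens sits at the eye, vertex distance and accommodation are ignored, and only spherical error (myopia or hyperopia) is handled. The function name `residual_correction` is hypothetical.

```python
import math


def residual_correction(prescription_d: float, image_distance_m: float) -> float:
    """Extra lens power (dioptres) needed to see a virtual image sharply with relaxed eyes.

    Simplified model (an assumption, not from the patent): thin lens at the eye,
    no accommodation, no vertex distance, spherical error only.
    prescription_d is the user's spherical prescription, e.g. -2.0 for a myope.
    """
    # Light from an image d metres away diverges with vergence -1/d dioptres.
    # A relaxed eye needs it at the vergence of its far point, which equals the
    # prescription P, so the extra lens L satisfies -1/d + L = P, i.e. L = P + 1/d.
    image_vergence = 0.0 if math.isinf(image_distance_m) else 1.0 / image_distance_m
    return prescription_d + image_vergence


for rx in (-2.0, -0.5, 1.0):
    extra = residual_correction(rx, 2.0)
    print(f"prescription {rx:+.1f} D, image at 2 m: extra lens {extra:+.1f} D")
```

Under these assumptions, a user with a -2.0 D prescription sees an image placed at 0.5 m sharply with no extra lens, needs -1.5 D for an image at 2 m, and needs the full -2.0 D for an image at optical infinity. Moving the virtual image, adding a corrective lens, or combining the two can therefore reach the same result.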

Foveated imaging in "Apple Glass"

More specific aspects of headsets are examined in the "Head-Mounted Device with Active Optical Foveation" application. Rather than dealing with the lenses through which images are viewed, it concentrates on displaying those images in the way that best benefits the user.

In headsets that have "a transparent display so that a user may observe real-world objects" as well as virtual ones, cameras could be used to relay that physical environment. This kind of "pass-through camera" could intelligently alter its own settings to suit what is being looked at.

"The pass-through camera may capture some high-resolution image data for displaying on the display," says this application. "However, only low-resolution image data may be needed to display low-resolution images in the periphery of the user's field of view on the display."

So the headset may vary the resolution of what it shows the wearer depending on where they are looking. The benefit is less data to process, which helps performance and, in turn, battery life.
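To make the data saving concrete, here is a minimal sketch of generic foveated rendering in Python. It is not the technique described in the application; the three resolution tiers, their 5 and 20 degree boundaries, the 20 pixels-per-degree figure, the display size and the function names are all assumptions chosen for illustration, and the gaze point is simply taken as given.

```python
import math


def resolution_scale(px: float, py: float, gaze_x: float, gaze_y: float,
                     pixels_per_degree: float = 20.0) -> float:
    """Fraction of full linear resolution to use at display point (px, py).

    Assumed three-tier scheme: full resolution within 5 degrees of the gaze
    point, half within 20 degrees, and a quarter beyond that.
    """
    eccentricity_deg = math.hypot(px - gaze_x, py - gaze_y) / pixels_per_degree
    if eccentricity_deg <= 5.0:
        return 1.0
    if eccentricity_deg <= 20.0:
        return 0.5
    return 0.25


def rendered_fraction(width: int, height: int, gaze_x: float, gaze_y: float,
                      tile: int = 16) -> float:
    """Approximate share of the full-resolution pixel count actually rendered."""
    total = 0.0
    tiles = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            # Sample each tile at its centre.
            s = resolution_scale(tx + tile / 2, ty + tile / 2, gaze_x, gaze_y)
            total += s * s  # halving linear resolution quarters the pixel count
            tiles += 1
    return total / tiles


# Gaze at the centre of a hypothetical 1600 x 1600 pixel eye buffer.
print(f"{rendered_fraction(1600, 1600, 800.0, 800.0):.1%} of full-resolution pixels")
```

Because each tile's scale factor is squared, even modest reductions in the periphery cut the pixel budget sharply; with these illustrative numbers the total comes out well under half of a full-resolution frame.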

Foveated imaging, as this variation in resolution is called, depends on knowing where the user is looking. Head movement can reveal part of that, but it cannot tell the system which specific part of the display is being looked at.

Gaze tracking in AR and with "Apple Glass"

"Using a gaze-tracking system in the head-mounted device," suggests Apple, "the device may determine which portion of the display is being viewed directly by a user."

The last of the four new patent applications applies gaze tracking to operating the device itself. "Gaze-based User Interactions" describes methods by which "a user uses his or her eyes to interact with user interface objects displayed on the electronic device."

Detail from the patent application showing a real-world environment being mapped to allow positioning of virtual objects

The aim is for devices to "provide a more natural and efficient interface." As well as letting users select objects or items simply by looking at them, the methods propose interpreting everything from a passing glance to a Paddington hard stare.

The application also addresses difficulties caused by the "uncertainty and instability of the position of a user's eye gaze."
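A common way to cope with noisy gaze data, and to tell a glance from a stare, is to smooth the gaze samples and require the gaze to rest on a target for a minimum time before acting. The Python sketch below combines exponential smoothing with a dwell timer. It is a generic technique, not the method in Apple's application, and the `Target` and `DwellSelector` names, the 0.3 smoothing factor, the 0.5-second dwell threshold and the simulated 60 Hz samples are all assumptions.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Target:
    name: str
    x: float
    y: float
    radius: float  # hit radius in display pixels


class DwellSelector:
    """Smooths raw gaze samples and selects a target after a sustained dwell."""

    def __init__(self, targets: List[Target], alpha: float = 0.3, dwell_s: float = 0.5):
        self.targets = targets
        self.alpha = alpha        # smoothing factor: higher follows new samples more closely
        self.dwell_s = dwell_s    # how long the gaze must rest on a target to select it
        self.smoothed: Optional[Tuple[float, float]] = None
        self.current: Optional[Target] = None
        self.entered_at = 0.0
        self.fired = False

    def _hit_test(self, x: float, y: float) -> Optional[Target]:
        for target in self.targets:
            if math.hypot(x - target.x, y - target.y) <= target.radius:
                return target
        return None

    def update(self, t: float, gx: float, gy: float) -> Optional[str]:
        """Feed one raw gaze sample at time t (seconds); return a target name on selection."""
        if self.smoothed is None:
            self.smoothed = (gx, gy)
        else:
            # Exponential moving average damps tracker jitter.
            sx, sy = self.smoothed
            self.smoothed = (sx + self.alpha * (gx - sx), sy + self.alpha * (gy - sy))
        hit = self._hit_test(*self.smoothed)
        if hit is not self.current:
            # Gaze moved onto a new target, or off all targets: restart the dwell timer.
            self.current, self.entered_at, self.fired = hit, t, False
            return None
        if hit is not None and not self.fired and t - self.entered_at >= self.dwell_s:
            self.fired = True  # select only once per dwell
            return hit.name
        return None


def fixation(x: float, y: float, n: int) -> List[Tuple[float, float]]:
    """n samples around (x, y) with deterministic jitter standing in for tracker noise."""
    return [(x + 12 * math.sin(1.7 * i), y + 12 * math.cos(2.3 * i)) for i in range(n)]


def run(samples: List[Tuple[float, float]], rate_hz: float = 60.0) -> List[str]:
    selector = DwellSelector([Target("play", 500, 300, 40)])
    events = []
    for i, (x, y) in enumerate(samples):
        name = selector.update(i / rate_hz, x, y)
        if name is not None:
            events.append(name)
    return events


glance = fixation(100, 100, 30) + fixation(500, 300, 12) + fixation(900, 600, 30)
stare = fixation(100, 100, 30) + fixation(500, 300, 60)
print(run(glance), run(stare))
```

A brief glance that passes over the button triggers nothing, while a sustained look selects it once. Raising `dwell_s` makes selection more deliberate at the cost of speed, and lowering `alpha` steadies the gaze estimate but makes it lag behind the eye.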

Each of the four applications represents a development or refinement in areas that Apple has been pursuing for years, across many more patents. As well as offering an insight into the firm's concerns, they are an example of how Apple benefits from working across both hardware and software.
