'Apple Glass' may use Look Around-style smooth navigation motion

The forthcoming "Apple Glass" will present wearers with new AR views of real or virtual surroundings, and Apple is working on technology so users can zoom and magnify the images smoothly.

Once "Apple Glass" has shown you a distant object, it's less disorientating to find yourself immediately taken there.

Think of how Apple Maps has its Look Around feature. It's the same as Google's Street View, but it takes away that service's jerky steps and instead makes zooming through streets smooth and easy. That's what Apple wants to bring to applications using "Apple Glass" and Apple AR.

"Movement within an Environment" is a newly revealed Apple patent application about how virtual landscapes can be changed to indicate movement. A user will always be able to stroll through a computer-generated reality (CGR) environment, but this would let them jump to another part of it without disorientation.

"Some CGR applications display the CGR environment from a particular position in the CGR environment," says the patent application. "In some cases, the position represents the location of a user or a virtual camera within the CGR environment. [However] In some applications, a user may desire to move within the CGR environment and/or view the CGR environment from a different position."

"Movement in a CGR environment need not correspond directly to the actual physical movement of the user in the real world," it continues. "Indeed, a user can move great distances quickly in a CGR environment that would be infeasible in the real world."

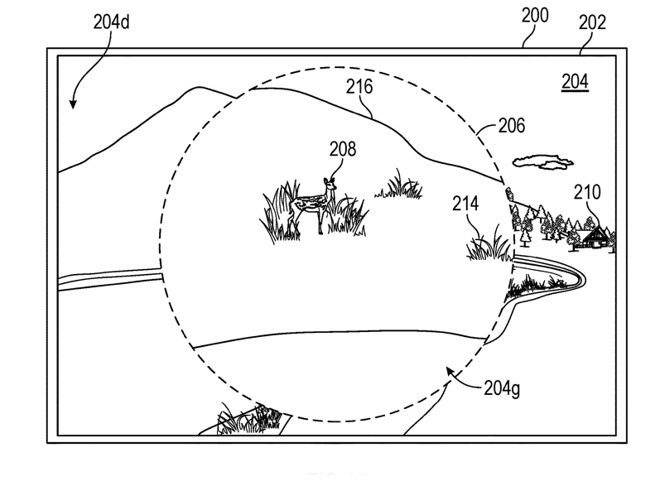

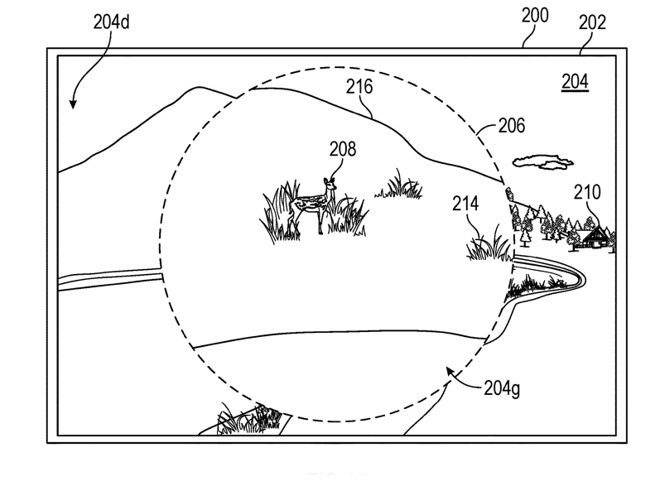

Detail from the patent showing how a "magnified" view could be shown to Apple Glass wearers

This virtual environment has to change depending on the user's position or orientation. "For example, a CGR system may detect a person's head turning and, in response, adjust graphical content and an acoustic field presented to the person in a manner similar to how such views and sounds would change in a physical environment," says the application.

That adjustment is needed in every AR, VR or Mixed Reality environment, whether everything is virtual or augmented reality objects are positioned over a view of the real world.

What's new about this application is that Apple wants you to be able to turn your head, see something in the "distance," and then decide to jump immediately to it. What you can "see" from where you are in the environment can be joined by a magnified view of a distant point.

"A magnified portion and an unmagnified portion of a computer-generated reality (CGR) environment are displayed from a first position," says Apple. "In response to receiving an input, a magnified portion of the CGR environment from a second position is displayed."

So you'd see a regular view of a distant virtual object, but be able to choose to see a close-up view of it too. Having seen that, you can elect to go to that point, and you're more mentally prepared for it than you would be if you were just teleported to a new spot.
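
To make that sequence concrete, here is a minimal Python sketch of the flow as the article describes it: a magnified inset shown from the first position, the same inset re-rendered from a second position after an input, and then an optional jump. The `View` and `Viewer` names, the method names and the coordinates are all hypothetical, made up for illustration. The patent application does not describe an implementation at this level, so treat this as one possible reading rather than Apple's method.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Position = Tuple[float, float, float]


@dataclass
class View:
    position: Position      # where the virtual camera sits (hypothetical units)
    magnification: float    # 1.0 means unmagnified


class Viewer:
    """Hypothetical model of a user's main view plus a magnified inset."""

    def __init__(self, start: Position) -> None:
        self.main = View(start, 1.0)
        self.inset: Optional[View] = None

    def show_magnified(self, magnification: float) -> None:
        # Step 1: magnified inset rendered from the same (first) position.
        self.inset = View(self.main.position, magnification)

    def preview_from(self, target: Position) -> None:
        # Step 2: on input, render the inset from a second position.
        if self.inset is None:
            raise RuntimeError("no magnified view is being shown")
        self.inset = View(target, self.inset.magnification)

    def jump(self) -> None:
        # Step 3: move the user to the previewed position and drop the inset.
        if self.inset is None:
            raise RuntimeError("nothing has been previewed")
        self.main = View(self.inset.position, 1.0)
        self.inset = None


viewer = Viewer(start=(0.0, 0.0, 0.0))
viewer.show_magnified(4.0)               # close-up of a distant object
viewer.preview_from((0.0, 0.0, 50.0))    # the same close-up, seen from the new spot
viewer.jump()                            # the user elects to go there
print(viewer.main)
```

The point of the intermediate step is that the user has already seen the destination in the inset before the main view changes.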

This patent is credited to three inventors, including Ryan S. Burgoyne. His previous patent applications include a related one about "Apple Glass" users being able to manipulate AR images.

Making virtual objects look real

Separately, Apple has also now applied for a patent concerning making the virtual objects you see through "Apple Glass" look more real and more at home where they appear to be placed. "Rendering objects to match camera noise" is about making rendered objects match the noise of the camera video they are combined with in an AR environment.

Detail from the patent showing a real object (left) and a virtual one (right). Despite the low-quality illustration, Apple contends that a lack of video noise makes the virtual object look fake

"Some augmented reality (AR) systems capture a video stream and combine images of the video stream with virtual content," says this other patent application. "The images of the video stream can be very noisy, especially in lower light condition in which certain ISO settings are used to boost image brightness."

"[But] since the virtual content renderer does not suffer from the physical limitations of the image capture device, there is little or no noise in the virtual content," it continues. "The lack of the appearance of noise, e.g., graininess, strength, its property variations between color channels, etc., on virtual content can result in the virtual content appearing to float, look detached, stand out, or otherwise fail to fit with the real content."

It's not a new problem, but Apple says that existing "systems and techniques do not adequately account for image noise in presenting virtual content with image content in AR and other content that combines virtual content with real image content."

So Apple proposes multiple methods of effectively capturing the noise and applying it to the pristine virtual objects. The aim of all the different approaches is to make a virtual object appear to be real and actually in the position where it's being shown.
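
The article doesn't detail those methods, but the general idea of capturing noise and applying it can be sketched in a few lines of Python. This is an assumption-laden illustration, not the patent's technique: it assumes a patch of the camera image that shows a uniform surface, measures the per-channel spread there, and adds Gaussian noise of the same strength to clean virtual pixels. The function names and sample values are hypothetical.

```python
import random
import statistics


def estimate_channel_noise(flat_patch):
    """Per-channel standard deviation of a patch assumed to show a uniform surface."""
    channels = zip(*flat_patch)  # regroup (r, g, b) pixels into three channel sequences
    return tuple(statistics.pstdev(channel) for channel in channels)


def add_matched_noise(virtual_pixels, sigmas, rng):
    """Add zero-mean Gaussian noise per channel, clamped to the 0-255 range."""
    noisy = []
    for pixel in virtual_pixels:
        noisy.append(tuple(
            min(255, max(0, round(value + rng.gauss(0.0, sigma))))
            for value, sigma in zip(pixel, sigmas)
        ))
    return noisy


rng = random.Random(0)

# Hypothetical camera patch: a mid-grey wall with more noise in the blue channel.
camera_patch = [
    (round(rng.gauss(120, 4)), round(rng.gauss(120, 4)), round(rng.gauss(120, 9)))
    for _ in range(2000)
]

sigmas = estimate_channel_noise(camera_patch)
clean_virtual = [(200, 80, 40)] * 2000           # noise-free rendered pixels
noisy_virtual = add_matched_noise(clean_virtual, sigmas, rng)
```

Measuring each color channel separately reflects the application's remark that noise properties vary between channels. A real system would also have to cope with noise that changes with brightness and with camera settings, which this sketch ignores.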

This application is credited to Daniel Kurz and Tobias Holl. Kurz has previously been awarded patents for using AR to turn any surface into a display with touch controls.
