Apple's Tim Millet talks A14 Bionic, machine learning in new interview

Apple's Vice President of Platform Architecture offers insight on the new A14 Bionic processor, the importance of machine learning, and how Apple continues to separate itself from its competitors in a new interview.

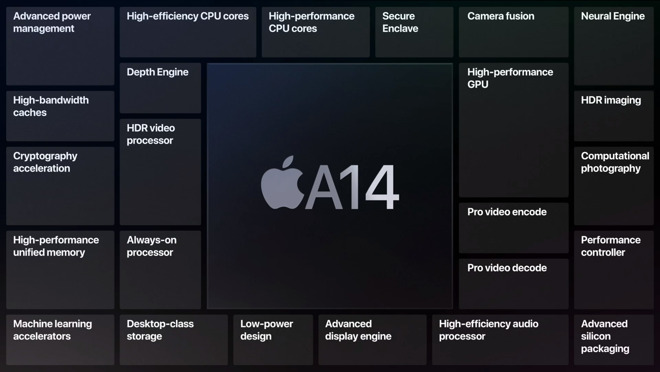

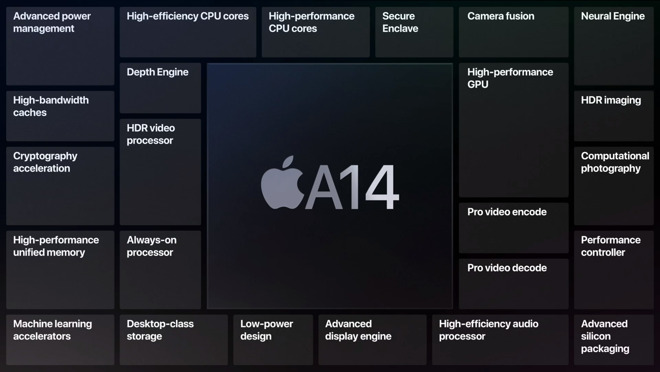

The new iPad Air was announced at Apple's September event, and the A14 Bionic processor inside it could prove to be a significant upgrade over the previous generation.

According to Apple, the A14 Bionic offers a 30% boost in CPU performance and uses a new four-core graphics architecture for 30% faster graphics, compared with the A12 Bionic used in the iPad Air 3. Against the A13, the benchmarks suggest the A14 offers a 19% improvement in CPU performance and 27% in graphics.

In an interview with German magazine Stern, Apple's Vice President of Platform Architecture, Tim Millet, offered some insight into what makes the A14 Bionic processor tick.

Apple's Tim Millet during the "Time Flies" event

Millet explains that while Apple did not invent machine learning or neural engines, noting that "the foundations for this go back many decades," the company did help find ways to accelerate the process.

Machine learning requires neural networks to be trained on large, complex data sets, which until recently did not exist. As storage grew, machines could take advantage of larger data sets, but training remained relatively slow. In the early 2010s, that began to change.

Fast-forward to 2017, when Apple released the iPhone X, the first iPhone to feature Face ID. Face ID was powered by the A11 Bionic chip, whose Neural Engine could perform 600 billion operations per second.

The five-nanometer A14 Bionic chip, which will debut in the new iPad Air when it releases in October, can perform over 18 times as many operations: up to 11 trillion per second.
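As a quick check on the "over 18 times" figure, dividing the A14's quoted peak by the A11's gives the ratio below. Both numbers are the peak rates cited above, not measurements of any particular workload.

$$
\frac{11 \times 10^{12}\ \text{operations/s}}{600 \times 10^{9}\ \text{operations/s}} = \frac{11{,}000}{600} \approx 18.3
$$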

"We are excited about the emergence of machine learning and how it enables a completely new class," Millet told Stern. "It takes my breath away when I see what people can do with the A14 Bionic chip."

Of course, hardware isn't the only thing that matters when it comes to performance. Millet also points out that Apple's hardware developers are in the unique position of working alongside the company's software teams.

Together, the teams make sure that what they build is useful to everyone.

"We work very closely with our software team throughout development to make sure we're not just building a piece of technology that is useful to a few. We wanted to make sure that thousands and thousands of iOS developers could do something with it."

He highlights the importance of Core ML, Apple's foundational machine learning framework, which is often used for language processing, image analysis, sound analysis, and more. Apple gives developers access to Core ML so they can use machine learning in their own apps.

"Core ML is a fantastic opportunity for people who want to understand and find out what their options are," said Millet. "We have invested a lot of time in making sure that we don't just pack transistors into the chip that are then not used. We want the masses to access them."

Stern points out that Core ML is a critical component of the internationally best-selling DJ app Djay. It has also been used by software giant Adobe.

Lastly, Tim Millet addressed the issue of Face ID not working with the face masks people wear to limit the spread of the coronavirus. He says that while Apple could, in theory, make Face ID work while you're wearing a mask, it likely wouldn't. Covering your face removes the data the iPhone uses to confirm it's actually you, and that increases the odds that Face ID could be compromised.

"It's hard to see something that you can't see," explains Tim Millet. "The facial recognition models are really good, but it's a tricky problem. People want convenience, but they want to be safe at the same time. And Apple is all about making sure data stays safe."
