Apple engineers reveal the plan behind iPhone camera design philosophy

Rather than concentrating on any one hardware aspect of iPhone photography, Apple's engineers and managers aim to control how the company manages every step of taking a photo.

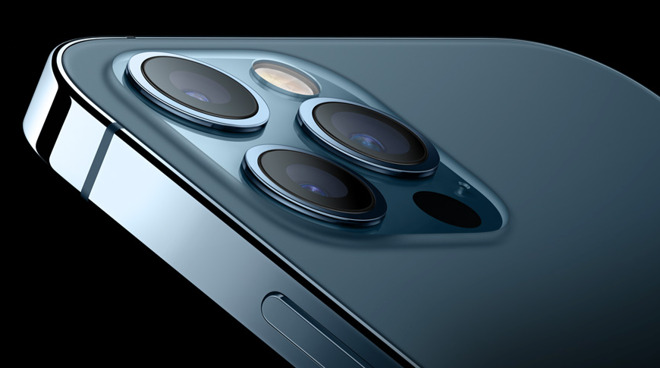

Apple's camera system

With the launch of the iPhone 12 Pro Max, Apple has introduced the largest camera sensor it has ever put in an iPhone. Yet rather than being there to "brag about," Apple says that it is part of a philosophy that sees camera designers working across every possible aspect from hardware to software.

Speaking to photography site PetaPixel, Francesca Sweet, product line manager for the iPhone, and Jon McCormack, vice president of camera software engineering, emphasized that they work across the whole design in order to simplify taking photos.

"As photographers, we tend to have to think a lot about things like ISO, subject motion, et cetera," Job McCormack said. "And Apple wants to take that away to allow people to stay in the moment, take a great photo, and get back to what they're doing."

"It's not as meaningful to us anymore to talk about one particular speed and feed of an image, or camera system," he continued. "We think about what the goal is, and the goal is not to have a bigger sensor that we can brag about."

"The goal is to ask how we can take more beautiful photos in more conditions that people are in," he said. "It was this thinking that brought about Deep Fusion, Night Mode, and temporal image signal processing."

Apple's overall aim, both McCormack and Sweet say, is to automatically "replicate as much as we can... what the photographer will [typically] do in post." So with machine learning, Apple's camera system breaks down an image into elements that it can then process.

"The background, foreground, eyes, lips, hair, skin, clothing, skies," lists McCormack. "We process all these independently like you would in [Adobe] Lightroom with a bunch of local adjustments. We adjust everything from exposure, contrast, and saturation, and combine them all together."

This isn't to deny the advantages of a bigger sensor, according to Sweet. "The new wide camera [of the iPhone 12 Pro Max], improved image fusion algorithms, make for lower noise and better detail."

"With the Pro Max we can extend that even further because the bigger sensor allows us to capture more light in less time, which makes for better motion freezing at night," she continued.

Apple's iPhone 12 range brings camera improvements across the board

Nonetheless, both Sweet and McCormack believe it is vital that Apple designs and controls every element from lens to software.

"We don't tend to think of a single axis like 'if we go and do this kind of thing to hardware' then a magical thing will happen," said McCormack. "Since we design everything from the lens to the GPU and CPU, we actually get to have many more places that we can do innovation."

The iPhone 12 Pro Max is now available for pre-order. It ships from November 13.

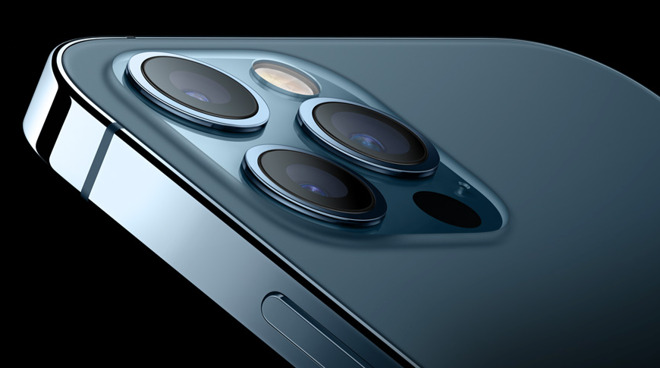

Comments

Maybe someday Apple will have a 2.5 to 3 times zoom for the wide to tele, or a 2 times zoom for the super wide to wide. If so, they could eliminate one camera and have the room, at less expense, to give both remaining cameras the same large sensor, with IBIS for both.

Another likely option is that Apple will increase the resolution of its imagers when the balance of realtime response, i.e., hardware, allows it to, and that would provide higher quality zoom as well.

What they are saying is what most vertical manufacturers do (and have been doing for years).

Apple is slowly catching up with photography but the harsh truth of the matter is that phone cameras are great and have been for years now. That won't change any time soon. Where phone cameras have branched out over the last two years is in versatility and Apple missed several opportunities to add that. Strangely so.

Apple sells enough of the current generation of iPhones each year that it requires tens of millions to hundreds of millions each of a small set of four different cameras. Ramping to those volumes is nontrivial for suppliers, which is why Android OS device makers are more than willing to pay premiums for smaller quantities, in the millions to tens of millions, for their flagships; they need those features to compete with other Android OS device makers, and Apple doesn't.

Apple will be shifting some 170 to 190 million iPhone 12s this fiscal year, each with the most powerful SoC in the industry, all to enable the most user friendly computational photography in the market. Apple has only "missed several opportunities", in your opinion, because Apple's customer base doesn't buy features, per se, but rather buys experiences, same as it ever has.

The metrics that prove this are ASP, margins, and revenues, all of which Apple leads, and frankly, it isn't all that bad at shifting units either.

This isn't about quantity (which isn't even an issue). Apple doesn't sell its phones in a day and, as with the Max this year, has always had the option to use more premium, lower yield options on its phones.

As for the world's most powerful SoC, that is again a ludicrous claim. Are you confusing SoC (as a whole) with some of the elements on it? The A14 does not even have a modem on it!

You forgot surveillance and underwater cables; oops, not so much underwater cable business anymore.

So, for the first three quarters of 2020, Huawei did about $100 billion in revenue, at 9.5 percent margins. So let's say that Huawei does $135 billion for their fiscal year.

Apple FY 2020, $274 Billion at something like 38 percent margins.

Apple makes four times more profit than Huawei at the same revenue, and eight times the profit overall, with fewer employees, but certainly in a "niche" market unlike Huawei's.
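The arithmetic behind the "four times" and "eight times" figures can be checked in a few lines. This is only a sketch using the numbers quoted in the comment above, which are not verified here; it also assumes, as the comment does, that the two margin percentages are directly comparable. All variable names are illustrative.

```python
# Figures as quoted in the comment above (not independently verified).
huawei_revenue_bn = 135.0  # commenter's full-year estimate, USD billions
huawei_margin = 0.095      # "9.5 percent margins"
apple_revenue_bn = 274.0   # "Apple FY 2020, $274 Billion"
apple_margin = 0.38        # "something like 38 percent margins"

# Profit implied by revenue times margin.
huawei_profit_bn = huawei_revenue_bn * huawei_margin
apple_profit_bn = apple_revenue_bn * apple_margin

# "At the same revenue" compares profit per dollar of revenue,
# which is simply the ratio of the two margins.
per_dollar_ratio = apple_margin / huawei_margin

# "Overall" compares the absolute profit figures.
overall_ratio = apple_profit_bn / huawei_profit_bn

print(f"Huawei implied profit: ${huawei_profit_bn:.1f}bn")
print(f"Apple implied profit:  ${apple_profit_bn:.1f}bn")
print(f"Profit per dollar of revenue: {per_dollar_ratio:.1f}x")
print(f"Profit overall: {overall_ratio:.1f}x")
```

Under those assumptions the ratios come out at roughly four and roughly eight, matching the comment's claim.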

As for the missing "integrated" modem, nobody really cares, but soon, Apple will own that piece of the stack as well.

We could play this all day, but the bottom line is; Huawei is too closely associated with the CCP, and that is the "political cost" of that relationship.

Money has nothing to do with anything.

Nor does politics.

And I'm at a loss to see the connection between cameras and modems or undersea cabling.

This is all about marketing. Nothing else.

The biggest issue with Apple and cameras over the last few years hasn't only been the quality. It has been the versatility. Too often, the iPhone simply couldn't take the equivalent shot because it simply didn't have the hardware to take it.

Why in the world would anyone want square sensors? If you’re taking crappy square images for Instagram or Snapchat, you can crop, the way Apple does it now. The last thing you want is a flash right over the lens. For portraits, you want it a good foot away, to the side, and slightly above. Since you can’t exactly do that with a smartphone flash, you want it where it can be put, but not over the lens. I’ve never been happy with flash photos because the flash is WAY too close to the lens. It should be at the other end of the camera, the long end.

I don’t know. Apple’s cameras take the best pictures overall. Yes, some cameras are better in one way or the other, but Apple’s are much better balanced, and more likely to get a good picture. Too many others are gimmicky with ultra high rez sensors that take crappy high rez shots and ok, but not really great, lower rez shots.

Oh please! Stop with that crap. Huawei’s SoCs are nothing more than standard ARM designs as far as the CPUs go, and the GPUs are nothing great either. They’re about the same as Qualcomm’s, which are way behind Apple’s. And amazingly, Apple manages to easily outperform any Huawei phone without even being turned on.

How about you look beyond that? Try looking at the SoCs as a whole - not the 'CPU'.

The ISPs and DSPs have been incredible over the last few years. They have been untouchable in Wi-Fi. Dual frequency GPS. Integrated modems (now 5G). Amazing NPU architecture. They even managed to squeeze 30% more transistors onto the latest 5nm offering than the A14.

https://www.anandtech.com/show/16226/apple-silicon-m1-a14-deep-dive/3

It isn't even close, and for a fact, the microarchitecture of the A and M series is more performant than either AMD Zen 3 or Intel's latest. Apple's 8 wide decoder design leads AMD and Intel.

https://www.anandtech.com/show/16226/apple-silicon-m1-a14-deep-dive/2

I again restate that Apple has an advantage because they own more of the stack.

You fail to understand that even "looking at the SoC's as a whole" isn't going to give you a win.

"Performance against the contemporary Android and Cortex-core powered SoCs looks to be quite lopsided in favour of Apple. The one thing that stands out the most are the memory-intensive, sparse memory characterised workloads such as 429.mcf and 471.omnetpp where the Apple design features well over twice the performance, even though all the chip is running similar mobile-grade LPDDR4X/LPDDR5 memory. In our microarchitectural investigations we’ve seen signs of “memory magic” on Apple’s designs, where we might believe they’re using some sort of pointer-chase prefetching mechanism."

Oh, and "squeezing 30% more transistors onto the latest 5nm offering than the A14" isn't a win either. It just increases the cost of the Kirin 9000, same as the M1 with a comparable number of transistors. That Apple can design a processor that is more performant with fewer transistors, and ultimately ship something on the order of 170 million A14s this fiscal year, is impressive.

Meanwhile, there are only something like 8 million Kirin 9000s, and there won't be any more. Good luck with that.

It isn't about winning. It's about not being incorrect in statements.

You seem to be unaware of what a SoC is and why process node, transistor count and the different systems on it are important.

It is a technical feat on many levels.

And it's worth noting recent reviews of the Max which put it in the same league as Huawei and Samsung in some cases for photography (even slightly better in some opinions!).

That is Apple catching up, and it is very much due to the improved hardware on that model, which in turn makes the comments picked up by the article look more like marketing than ever.

So I'm the one that doesn't understand SoC's?

Apple just dominates SoC's, and you can't admit that, so you move the goalposts.

I posted the links to anandtech so that you can get an idea on why Apple is dominating SoC's.

Take advantage of that.

Also, you are jumping on the A14 which has only been on the market for days and the M1 which nobody has at this point.

Now, go back and read what I actually said about SoCs - what is on them and why they are important.

Think about the areas I mentioned.

It's wonderful that Huawei has all of those SoC computing cores (NPU, et al.), just like any other SoC, but again, that is second order.

Read the new Anandtech deep dive into the A14 published yesterday afternoon to understand this.

When the speed of ANY flagship already does everything you want?

No. That is precisely when the rest of the SoC comes into play.

But that is beside the point. If you are talking SoC, talk SoC (the whole thing). If you mean CPU and microarchitecture, then say it.

Having the modem sitting on the SoC offers big advantages. The power and quality of the ISP/DSPs are also key. The NPU is ever more important. As is ultrafast Wi-Fi, dual frequency GPS...

They are called 'systems' on a chip for good reason. The CPU is just part of the package but calling the rest 'second order' is absurd. Especially as that isn't even the issue here.

The issue is calling something by a name when you really mean something else.