Apple's Photos app may recognize people by face and body language in the future

Apple's Photos app may improve its Faces subject-identification feature by using not just facial recognition but also other cues, such as a person's individual body language, typical poses, and torso.

Even before it had Face ID, Apple introduced face recognition in Photos.

More than a decade after it introduced face detection in iPhoto '09, Apple is researching how to improve its ability to identify people in images. As smart and useful as the Faces feature is, it is easily confused, and Apple wants to use extra details such as body language to tell people apart.

"Recognizing People by Combining Face and Body Cues" is a newly revealed patent application that describes several ways this might be done, and why it's needed.

"With the proliferation of camera-enabled mobile devices, users can capture numerous photos of any number of people and objects in many different settings and geographic locations," says Apple. "However, categorizing and organizing those images can be challenging."

"Often, face recognition is used to identify a person across images, but face recognition can fail," it continues, "e.g., in the event of a poor quality image and/or the pose of the person, such as if the person is looking away from the camera."

So it's our fault that our photos make face detection difficult. Apple's proposed solution is to create what it calls a "cluster" of characteristics of a person, not just their face.

"Images taken over a period of time can be grouped into 'moments,' wherein each moment represents a set of spatiotemporally consistent images," says Apple. "Said another way, a 'moment' consists of images taken within a same general location (e.g., home, work, a restaurant, or other significant location), and within a same timeframe of a predetermined length."

The photos you take at home one morning, for example, are then analyzed, and data about each person in them is stored in what Apple calls a "multidimensional abstract embedding space." In effect, it's a store of information gleaned about that person from these photos.
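To make the "moment" idea concrete, here is a rough sketch of how photos might be grouped by place and time. It is not Apple's implementation: the `Photo` type, the `group_into_moments` function, the coarse `location` label, and the three-hour window are all hypothetical stand-ins for the "same general location" and "timeframe of a predetermined length" the patent mentions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Photo:
    name: str
    taken_at: datetime
    location: str  # hypothetical coarse place label, e.g. "home" or "work"


def group_into_moments(photos, window=timedelta(hours=3)):
    """Group photos into 'moments' (hypothetical sketch).

    Photos are walked in time order. A photo joins the current moment if it
    was taken at the same location and within `window` of that moment's
    first photo; otherwise it starts a new moment. The window length is an
    assumption, not a value from the patent.
    """
    moments = []
    for photo in sorted(photos, key=lambda p: p.taken_at):
        current = moments[-1] if moments else None
        if (
            current is not None
            and photo.location == current[0].location
            and photo.taken_at - current[0].taken_at <= window
        ):
            current.append(photo)
        else:
            moments.append([photo])
    return moments


day = datetime(2021, 3, 6)
photos = [
    Photo("breakfast.jpg", day.replace(hour=8), "home"),
    Photo("coffee.jpg", day.replace(hour=9), "home"),
    Photo("lunch.jpg", day.replace(hour=13), "restaurant"),
]
for moment in group_into_moments(photos):
    print([p.name for p in moment])
# ['breakfast.jpg', 'coffee.jpg']
# ['lunch.jpg']
```

Measuring the window from a moment's first photo, rather than from the previous photo, keeps a long day at one place from chaining into a single endless moment.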

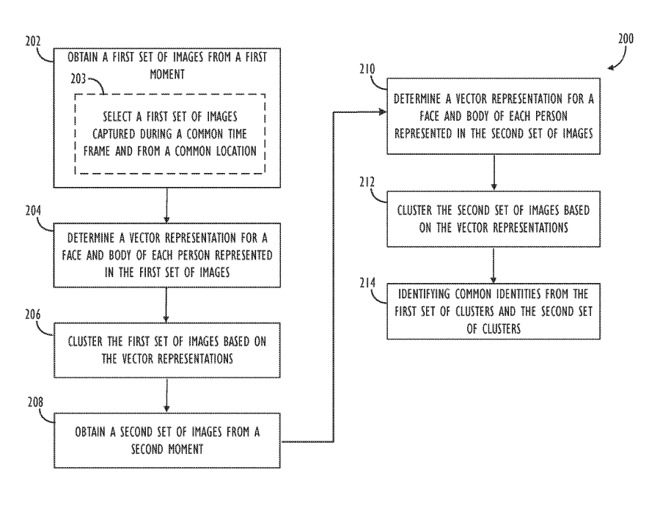

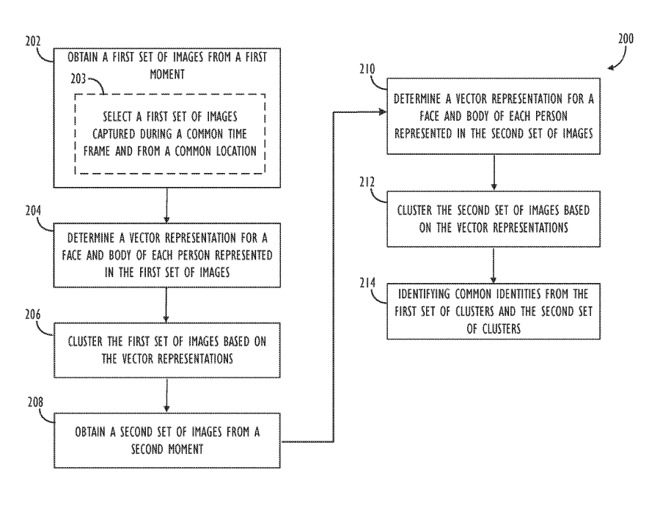

Detail from the patent outlining one workflow for augmenting face recognition with other characteristics

"[This is] not only based on face characteristics, but also based on additional characteristics about the body (e.g., torso) of the people appearing in the images," says Apple. "These characteristics may include, for example, shape, texture, pose, and the like."

Not all of these characteristics are treated equally, though. "[For instance] torso characteristics are less likely to be consistent across moments... because people change clothes over the span of days, weeks, months, etc.," continues Apple.

So the face remains central to this detection and to cataloging this "cluster" of information. Apple only describes creating clusters across one, two, or three "moments" of photographs, but accuracy appears to improve the more images it has to work from.
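The patent excerpts quoted here don't spell out exactly how face and body cues are weighed, so the following is only an illustration of the principle. It assumes each detected person already has a face vector (or none, if they're looking away) and a torso vector from some hypothetical upstream model. Torso similarity counts only within a single moment, because clothes change between moments, while face similarity counts everywhere. `PersonDetection`, `similarity`, `cluster`, `torso_weight`, and `threshold` are made-up names and values, and the greedy clustering pass is a simple stand-in, not Apple's method.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class PersonDetection:
    moment_id: int
    face: Optional[Sequence[float]]  # None when no usable face (e.g. looking away)
    torso: Sequence[float]           # hypothetical body/clothing embedding


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def similarity(a, b, torso_weight=0.4):
    """Combine face and torso cues; the torso only counts within one moment."""
    same_moment = a.moment_id == b.moment_id
    have_faces = a.face is not None and b.face is not None
    if have_faces and same_moment:
        return ((1 - torso_weight) * cosine(a.face, b.face)
                + torso_weight * cosine(a.torso, b.torso))
    if have_faces:
        return cosine(a.face, b.face)  # across moments, clothing may differ
    if same_moment:
        return cosine(a.torso, b.torso)  # no face: fall back on the outfit
    return 0.0  # no face and different moments: no reliable cue


def cluster(detections, threshold=0.8):
    """Greedy pass: join the first cluster containing a close enough match."""
    clusters = []
    for i, det in enumerate(detections):
        for members in clusters:
            if any(similarity(det, detections[j]) >= threshold for j in members):
                members.append(i)
                break
        else:
            clusters.append([i])
    return clusters


detections = [
    PersonDetection(0, [1.0, 0.0], [0.0, 1.0]),  # person A, face visible
    PersonDetection(0, None, [0.0, 1.0]),        # A looking away, same outfit
    PersonDetection(1, [1.0, 0.0], [1.0, 0.0]),  # A on another day, new outfit
    PersonDetection(1, [0.0, 1.0], [1.0, 0.0]),  # person B, same outfit as A
]
print(cluster(detections))  # [[0, 1, 2], [3]]
```

In this toy example the torso lets the photo of A looking away join A's cluster within the same moment, while person B, wearing matching clothes, stays separate because the face still dominates.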

The patent application lists three inventors, including Vinay Sharma, whose previous work includes a granted patent on training systems to improve facial recognition.
