Apple researching 'light folding' to make periscope lenses for iPhone

A newly revealed patent application shows that Apple is pressing ahead with its search for a "folding camera" for the iPhone.

Apple has been expected to develop a "folding camera" for a future iPhone. Most recently, Apple has reportedly been searching for a supplier to make such a periscope lens, and now a new patent application explains the thinking behind it.

It has previously been reported that a folding lens system could reduce the camera bump on the back of iPhones. Apple has been researching the idea for several years and already holds multiple patents on the topic.

This latest patent application, "Camera Including Two Light Folding Elements," is specifically concerned with balancing an improved camera against the need to keep the small form factor of an iPhone or iPad.

"The advent of small, mobile multipurpose devices such as smartphones and tablet or pad devices has resulted in a need for high-resolution, small form factor cameras that are lightweight, compact, and capable of capturing high resolution, high quality images at low F-numbers for integration in the devices," says the application.

"However, due to limitations of conventional camera technology, conventional small cameras used in such devices tend to capture images at lower resolutions and/or with lower image quality than can be achieved with larger, higher quality cameras," it continues.

Apple says that what is needed is a "photosensor with small pixel size and a good, compact imaging lens system." But as these have been developed, demand for even better ones has increased.

"In addition," it continues, "there are increasing expectations for small form factor cameras to be equipped with higher pixel count and/or larger pixel size image sensors (one or both of which may require larger image sensors) while still maintaining a module height that is compact enough to fit into portable electronic devices."

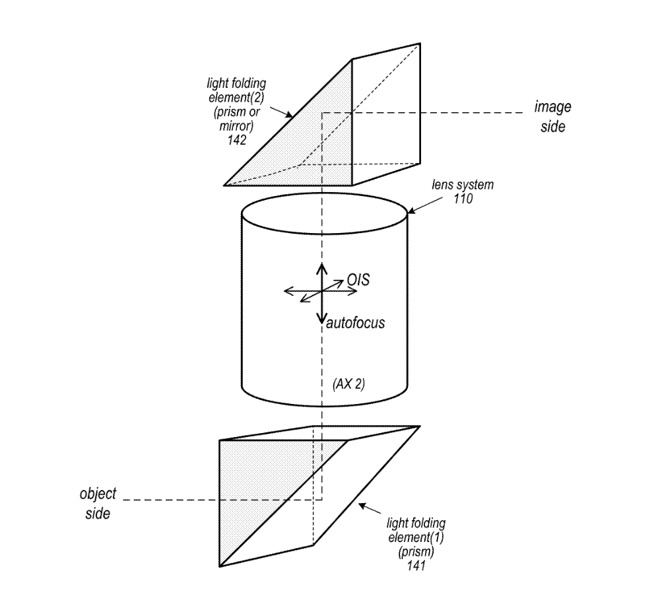

Detail from the patent showing a series of light folding prisms or mirrors

Apple's proposal is that a "lens system may be configured in the camera to move on one or more axes independently" of a prism or mirror in the iPhone body. The patent application describes a great many combinations of lens types and numbers of lenses, but broadly the proposal follows one core idea.

"The camera may include an actuator component configured to move the lens system," it says, along with "two light folding elements (e.g. two prisms, or one prism and a mirror)." As well as the zoom function that a periscope lens is expected to give, this particular configuration could also "provide optical image stabilization (OIS) functionality for the camera."

The patent application is credited to Yuhong Yao, whose previous work includes several granted patents on related topics. Chief among those are a series of patents for folding lens systems with three, four, or five refractive lenses.

Apple has been expected to develop a "folding camera" for a future iPhone. Most recently, Apple has reportedly been searching for a supplier to make such a periscope lens, and now a new patent application explains the thinking behind it.

It's previously been reported that a folding lens system could reduce the camera bump on the back of iPhones. However, Apple has been researching this for several years, already holding multiple patents on the topic.

This latest patent application, "Camera Including Two Light Folding Elements," is specifically concerned with the balance between having an improved camera while keeping the small form factor of an iPhone, or an iPad.

"The advent of small, mobile multipurpose devices such as smartphones and tablet or pad devices has resulted in a need for high-resolution, small form factor cameras that are lightweight, compact, and capable of capturing high resolution, high quality images at low F-numbers for integration in the devices," says the application.

"However, due to limitations of conventional camera technology, conventional small cameras used in such devices tend to capture images at lower resolutions and/or with lower image quality than can be achieved with larger, higher quality cameras," it continues.

Apple says that what is needed is a "photosensor with small pixel size and a good, compact imaging lens system." But as these have been developed, demand for even better ones has increased.

"In addition," it continues, "there are increasing expectations for small form factor cameras to be equipped with higher pixel count and/or larger pixel size image sensors (one or both of which may require larger image sensors) while still maintaining a module height that is compact enough to fit into portable electronic devices."

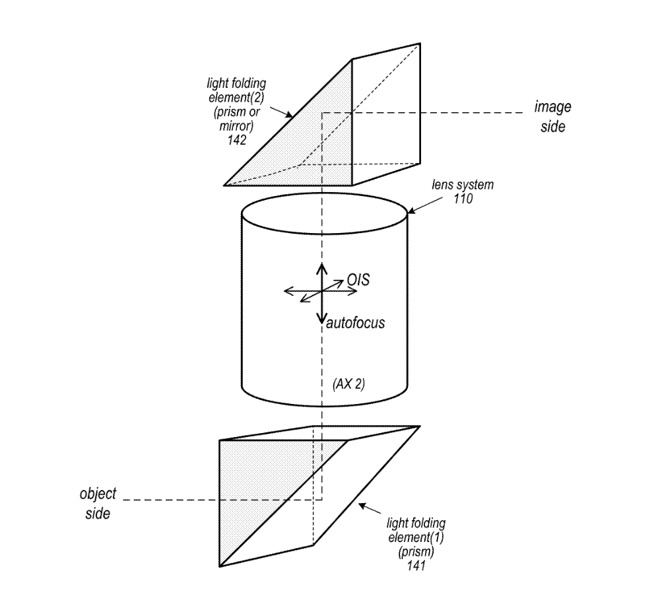

Detail from the patent showing a series of light folding prisms or mirrors

Apple's proposal is a lens system may be configured in the camera to move on one or more axes independently" of a prism or mirror in the iPhone body. This patent application describes very many different combinations of types of lens, and the number of lenses, but broadly the proposal follows one core idea.

"The camera may include an actuator component configured to move the lens system," it says, along with "two light folding elements (eg two prisms, or one prism and a mirror.") As well as the zoom function that a periscope lens is expected to give, this particular configuration could also "provide optical image stabilization (OIS) functionality for the camera."

The patent application is credited to Yuhong Yao, whose previous work includes several granted patents on related topics. Chief among those are a series of patents for folding lens systems with three, four, or five refractive lenses.

Comments

I'm not sure what the author means by "folding camera"

That’s interesting but very complex. I’d rather see them drop one camera and have a wide-angle camera with a 3:1 zoom, say 12mm to 36mm, and a second tele zoom from 36mm to 144mm, which would be 4:1. They could easily fit two equal-size, larger sensors in the space they now use for two. Frankly, I don’t care if the camera is a bit thicker to accommodate them; just round the back edges a little, for comfort. It would also allow a bigger battery, which nobody would object to.

One reason why cameras have mostly been stuck at 12MP is that the SoCs weren’t powerful enough to do all the processing at 16MP. That may have been true, but no longer. The very high-res cameras we see in some phones aren’t processed as much.

So: two cameras with 16MP and good optical zooms. That would be much better than what’s available now.

The idea I suggested is already happening with so-called “light field” cameras, but nothing prevents a smaller matrix of sensors from being used to achieve the same effects. It’s almost akin to using an array of orbital and ground based telescopes to synthesize a much sharper view of the universe.

https://raytrix.de

Remember the Light L16 with its various cameras on the back, or LG's 16-camera concept (not actually light field).

Apple has at least three patents on light field cameras that I know of. I think that’s a dead end, though. Look at what Canon has with their split-pixel sensors. They can capture depth without all the lenses, which reduce resolution by up to 90%, making it pretty much useless. Remember that the company that started this went out of business. I tried one of their cameras. Ugh! Horrible pictures in every way. You need a 100-million-pixel sensor to get a medium-res picture.

Apple has been making phones for 14 years. The first patents I saw from Apple for cameras in phones were a good ten years ago. They’ve gotten many since then. They own hundreds, maybe thousands, of Kodak patents. So what have they been doing with all that? Apparently nothing.

There’s nothing wrong with them buying companies for technology. They buy around 24 every year. They R&D’d touch sensors for several years and had a number of their own patents. But they bought the leading manufacturer, combined its nascent product with Apple’s work, and came out with Touch ID. That’s not the first time they did that, nor has it been the last. They’re doing it with micro LED.