Apple's AR headset may help a user's eyes adjust when putting it on

Apple's augmented reality headset could automatically account for the difference between a user's point of view and an attached camera, while peripheral lighting could make it easier for a user's eyes to adjust to wearing an AR or VR headset.

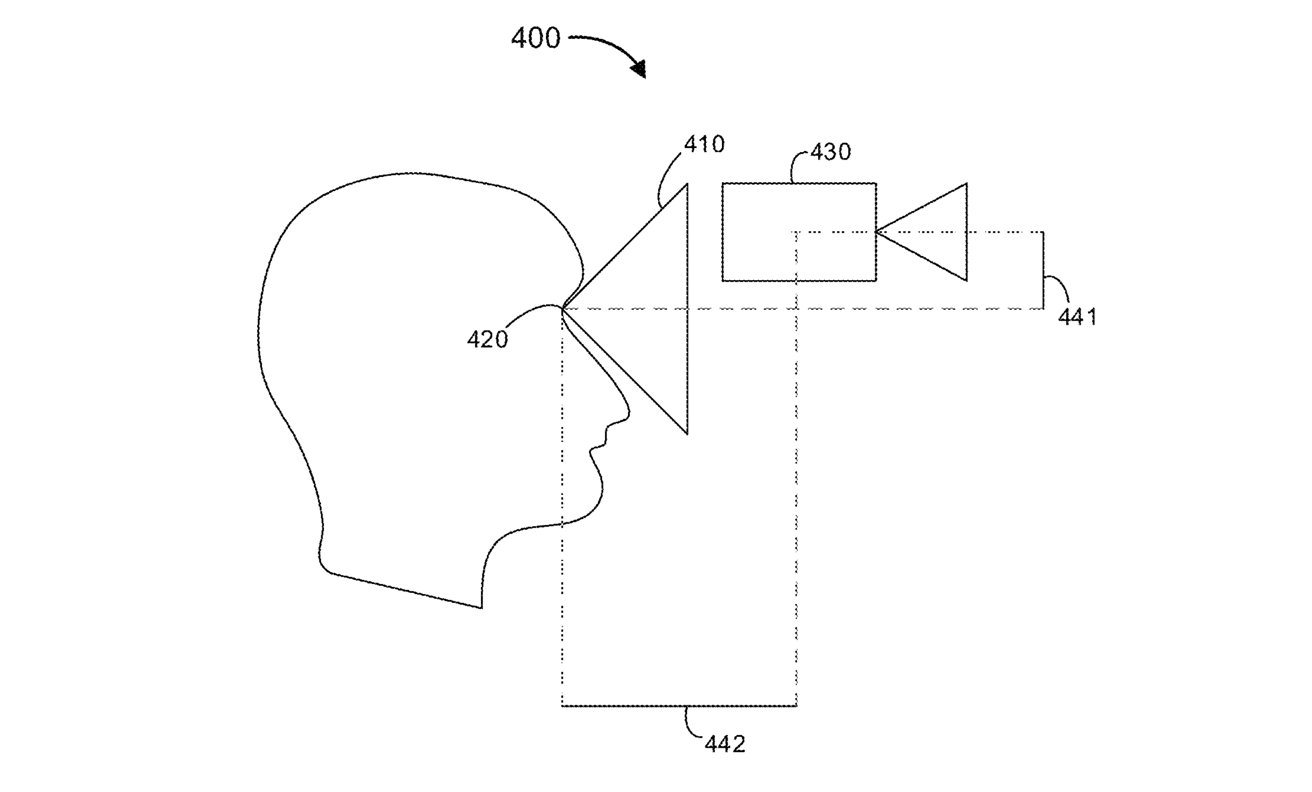

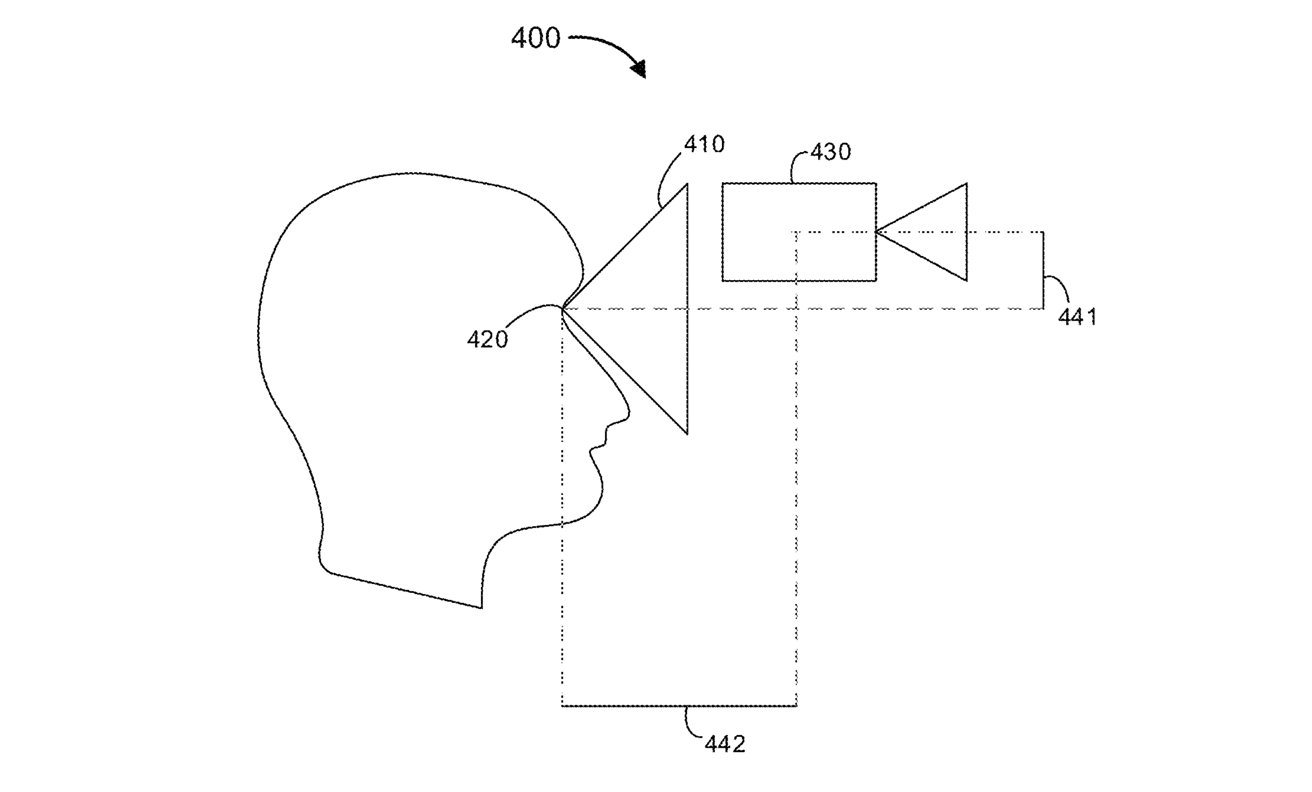

Apple is rumored to be working on its own augmented reality headset as well as smart glasses, currently speculated to be called "Apple Glass," which could also use AR as a way to display information to the user. As with practically any other mixed reality headset on the market, there are always challenges that Apple has to overcome to optimize the experience for its users.

In a pair of patent filings granted by the U.S. Patent and Trademark Office on Tuesday, Apple has seemingly come up with solutions for two distinct problems that can arise when using AR or VR headsets.

Adaptive lighting system

The first patent, an "Electronic device with adaptive lighting system," deals with the lesser-considered issue of a user's vision at the time of donning or removing a VR or AR headset.

Apple reckons that headset users may find the built-in display too dark or washed out when they first put on the headset. This may be especially true when the headset is used in a bright environment, as the user's eyes are effectively shielded from the environment during the headset's use.

The same sort of problem exists at the end of usage, when the user removes the headset. It's possible that moving from a relatively dark viewing environment of a headset to a much brighter one could dazzle or cause discomfort to the user, until their eyes can adjust.

A ring of LEDs may make it easier on the eyes to put on or remove a VR headset.

Apple's solution to this is effectively a lighting system for the inside of the headset, illuminating areas in the periphery of the user's vision. Using ambient light sensors, the system can alter the brightness to more closely match the light intensity of the environment, and potentially even the warmth or color of the light itself.

Though this could involve a string of LEDs around the edge, the patent also suggests that a light guide could be used to divert light around the loop, which could reduce the number of LEDs required for the system.

Motion data from sensors on the headset could be used to adjust the brightness of the light loop, such as by detecting when a user is putting the headset on or removing it. Detecting such an action can trigger the system to go through a lighting-up and dimming process.

For example, the light loop could be illuminated when the headset detects the user is putting the headset on, matching the light inside the headset to the outside world. Over time, the light loop can dim down to nothing, gradually allowing the wearer's eyes to adjust.

Likewise, if the headset believes it is being removed, it can gradually illuminate the loop, so a user's eyes have a chance to start adjusting before they have to deal with the lit environment.
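
The fade-in and fade-out behavior described above can be sketched in a few lines of Python. This is only an illustration and not Apple's method: the function name `loop_brightness`, the event labels, the five-second default, and the linear ramp are all assumptions, since the patent coverage does not specify a curve or timing.

```python
def loop_brightness(ambient, seconds_since_event, event, fade_seconds=5.0):
    """Return the light-loop brightness, in the same units as `ambient`.

    Hypothetical sketch: `event` is "donned" or "removing", and the
    linear ramp and default duration are illustrative assumptions.
    """
    if fade_seconds <= 0:
        raise ValueError("fade_seconds must be positive")

    # Fraction of the fade completed so far, clamped to the range [0, 1].
    progress = min(max(seconds_since_event / fade_seconds, 0.0), 1.0)

    if event == "donned":
        # Start at the ambient level, then dim down to nothing.
        return ambient * (1.0 - progress)
    if event == "removing":
        # Start dark, then ramp up toward the ambient level.
        return ambient * progress
    raise ValueError(f"unknown event: {event!r}")
```

In this sketch, `ambient` stands in for a reading from the ambient light sensor, and the motion sensors would supply the `event` and the moment it started.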

Filed on September 4, 2019, the patent lists its inventors as Cheng Chen, Nicolas P. Bonnier, Graham B. Myhre, and Jiaying Wu.

Scene camera retargeting

The second patent, "Scene camera retargeting," deals with an issue regarding computer vision, and how it relates to that of the user. Typically, augmented reality relies on rendering a digital asset in relation to a view of the environment, then either overlaying that on a video feed from a camera and presenting it to the user, or overlaying it on a transparent panel that the user looks through to see the real world.

While the techniques at play make sense, there are still issues that platform creators have to overcome. In the case of this patent, Apple believes there may be a mismatch between what a camera observing the scene may see, and what the user observes.

This occurs due to the externally-facing cameras being positioned further forward and higher than the user's eyes. This is a completely different viewpoint, and rendering output based on this different view won't necessarily sell the illusion to the user that the virtual object is in their real-world vision due to its positioning.

The patent deals with how a headset camera's viewpoint may not match that of a user's eyes.

In short, Apple's patent suggests that the headset could take the camera view of the environment, adjust it to better match the view from the user's eyes, then use that adjusted image to render the digital object into the real-world scene better.

The calculations to do this can include using a transformation matrix to represent the difference in viewpoints, as well as using analysis of the camera view to determine the depth of real-world objects to create the translated image.
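
As a rough illustration of that idea, the Python sketch below moves a single camera pixel into the eye's viewpoint using its depth and a 4x4 transformation matrix. It assumes a simple pinhole camera model with the same intrinsics for camera and eye, which is a simplification and not something the patent is known to specify. The function name `reproject_pixel`, the parameters, and the example offsets are all hypothetical.

```python
def reproject_pixel(u, v, depth, camera_to_eye, focal, cx, cy):
    """Map a camera pixel (u, v) with known depth into eye-view pixels.

    Hypothetical sketch: `camera_to_eye` is a row-major 4x4 matrix, and
    `focal`, `cx`, `cy` are assumed pinhole intrinsics in pixels.
    Axes: x to the right, y down, z forward, depth in meters.
    """
    # Unproject the pixel to a 3D point in camera coordinates.
    x = (u - cx) * depth / focal
    y = (v - cy) * depth / focal
    point = (x, y, depth, 1.0)

    # Apply the transformation matrix to express the point in eye coordinates.
    ex, ey, ez, _ = [sum(row[i] * point[i] for i in range(4))
                     for row in camera_to_eye]
    if ez <= 0:
        raise ValueError("point is behind the eye")

    # Project the point back onto the image plane from the eye's position.
    return (focal * ex / ez + cx, focal * ey / ez + cy)


# Example offsets (assumed): camera sits 3 cm above and 5 cm ahead of the eye.
CAMERA_TO_EYE = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, -0.03],
    [0.0, 0.0, 1.0, 0.05],
    [0.0, 0.0, 0.0, 1.0],
]

shifted = reproject_pixel(320.0, 240.0, 0.95, CAMERA_TO_EYE, 500.0, 320.0, 240.0)
```

The shift depends on depth: nearby objects move more between the two viewpoints than distant ones, which is why per-pixel depth matters for the translated image.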

The system could take advantage of other data points to generate its translated image, such as LiDAR sensors for more accurate depth data. It can also make use of accelerometer data.

Since a user's head moves, and it takes time for a headset to render a scene object, there may be a small amount of lag in the translation process that creates the altered viewpoint image for rendering. It's plausible that, if the user's head moves, the rendered object may still not match the scene, as the view itself will have moved.

Accelerometer data could also allow the transformation matrix to be further altered to account for where the system anticipates the user's viewpoint will be a short time in the future. This prediction could help make a render match up with the user's eventual view during motion.
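
One simple way to picture such a prediction is constant-acceleration extrapolation over the expected rendering delay. The Python sketch below is an assumption for illustration only: the patent coverage does not say how the prediction is computed, and the function name `predict_position`, its parameters, and the idea of supplying a velocity estimate alongside the accelerometer reading are all hypothetical.

```python
def predict_position(position, velocity, acceleration, latency):
    """Extrapolate a head position `latency` seconds into the future.

    Hypothetical sketch using p + v*t + 0.5*a*t^2 on each axis.
    `acceleration` stands in for an accelerometer reading; `velocity`
    is assumed to come from the headset's other tracking data.
    """
    return tuple(
        p + v * latency + 0.5 * a * latency ** 2
        for p, v, a in zip(position, velocity, acceleration)
    )


# Head moving right at 1 m/s and speeding up at 2 m/s^2, predicted 0.5 s ahead.
future = predict_position((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), 0.5)
```

The predicted position could then replace the current one when building the viewpoint transformation, so the render is aimed at where the eyes are expected to be when the frame is shown.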

The patent was originally filed on September 24, 2020, and lists its inventors as Tobias Eble and Thomas Post.

Stay on top of all Apple news right from your HomePod. Say, "Hey, Siri, play AppleInsider," and you'll get the latest AppleInsider Podcast. Or ask your HomePod mini for "AppleInsider Daily" instead and you'll hear a fast update direct from our news team. And, if you're interested in Apple-centric home automation, say "Hey, Siri, play HomeKit Insider," and you'll be listening to our newest specialized podcast in moments.