Apple research defines how new Apple Watch accessibility features could work

Apple Watch will shortly gain AssistiveTouch features, such as stopping an alarm when the wearer gestures with their hand, and newly revealed Apple research may hold all the details.

Apple Watch will be able to recognize gestures such as clenching a hand

The forthcoming AssistiveTouch for Apple Watch is intended as an accessibility feature, specifically to help users who have upper body difficulties. However, the ability to use Apple Watch without touching the display or controls could be a boon for all users.

If you've ever had to put down what you're carrying because the Apple Watch alarm is drilling into your wrist, you'll recognize the value of simply clenching your fist to stop it. Previously you might have clenched your fist in annoyance; now the Watch will recognize the gesture and respond.

Apple has shown many other possibilities, and now the specifics of how they may all work have been detailed. "Optical Sensing Device" is a newly revealed patent application that proposes ways of achieving these features.

All the methods described in this patent application revolve around light and optical sensors. There may well be other systems that Apple is deploying, which could be the subject of patent applications not yet made public.

This one, though, appears comprehensive. "A wearable device with an optical sensor is disclosed that can be used to recognize gestures of a user wearing the device," it begins.

"Light sources can be positioned on the back or skin-facing side of a wearable device, and an optical sensor can be positioned near the light sources," continues the patent application. "During operation, light can be emitted from the light sources and sensed using the optical sensor. Changes in the sensed light can be used to recognize user gestures."

The patent application's more than 11,000 words detail many different gestures, but each is chiefly detected by the same optical means.

"For example, light emitted from a light source can reflect off a wearer's skin, and the reflected light can be sensed using the optical sensor," it says. "When the wearer gestures in a particular way, the reflected light can change perceptibly due to muscle contraction, device shifting, skin stretching, or the distance changing between the optical sensor and the wearer's skin."
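To illustrate the general principle, here is a minimal sketch that flags a possible gesture whenever the reflected-light reading departs from a resting baseline by more than a set fraction. The function name `detect_gesture_onsets`, the five-sample baseline, and the 10% threshold are hypothetical assumptions; the patent application does not describe this specific algorithm.

```python
def detect_gesture_onsets(samples, baseline_count=5, threshold=0.10):
    """Return indices where reflected-light intensity first departs from baseline.

    Hypothetical sketch: the baseline is the mean of the first `baseline_count`
    samples (wrist assumed at rest), and an onset is flagged when the relative
    change exceeds `threshold` after the signal was previously near baseline.
    """
    if len(samples) < baseline_count:
        raise ValueError("need at least baseline_count samples")
    baseline = sum(samples[:baseline_count]) / baseline_count
    if baseline <= 0:
        raise ValueError("baseline light intensity must be positive")

    onsets = []
    active = False  # True while the signal stays away from baseline
    for i, value in enumerate(samples):
        change = abs(value - baseline) / baseline
        if change > threshold and not active:
            onsets.append(i)  # start of a new perceptible change
            active = True
        elif change <= threshold:
            active = False  # signal has settled back near baseline
    return onsets


readings = [100, 101, 99, 100, 100, 100, 120, 122, 121, 100, 99, 80, 78, 100]
print(detect_gesture_onsets(readings))  # [6, 11]
```

Both a rise and a drop in reflected light count as changes here, matching the patent's point that the sensed light can change in either direction as the skin stretches or the sensor-to-skin distance shifts.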

Once the optical system is able to recognize different gestures and reliably distinguish between them, they "can be interpreted as commands for interacting with the wearable device."

The gestures can "include finger movements, hand movements, wrist movements, whole arm movements, or the like," and can be small.

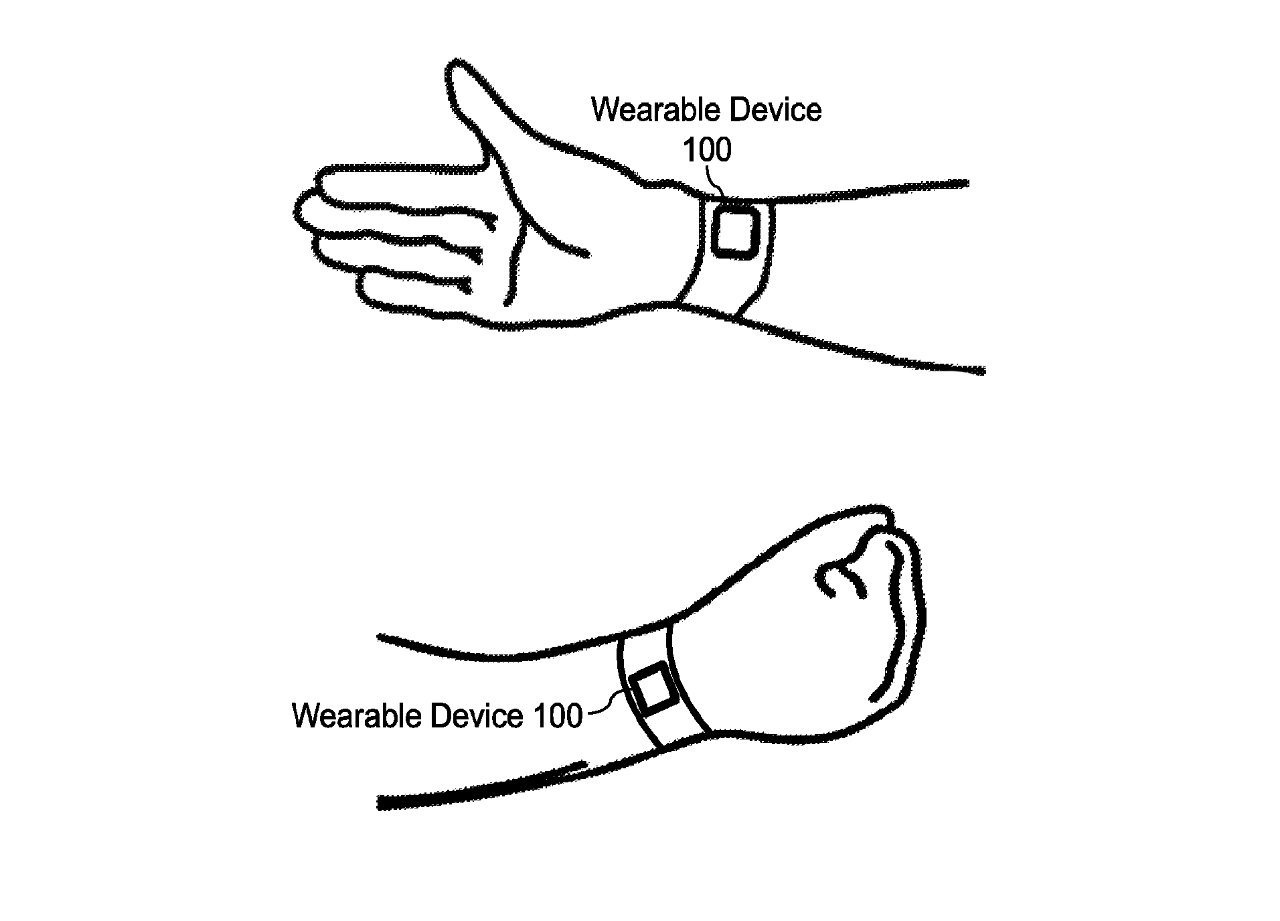

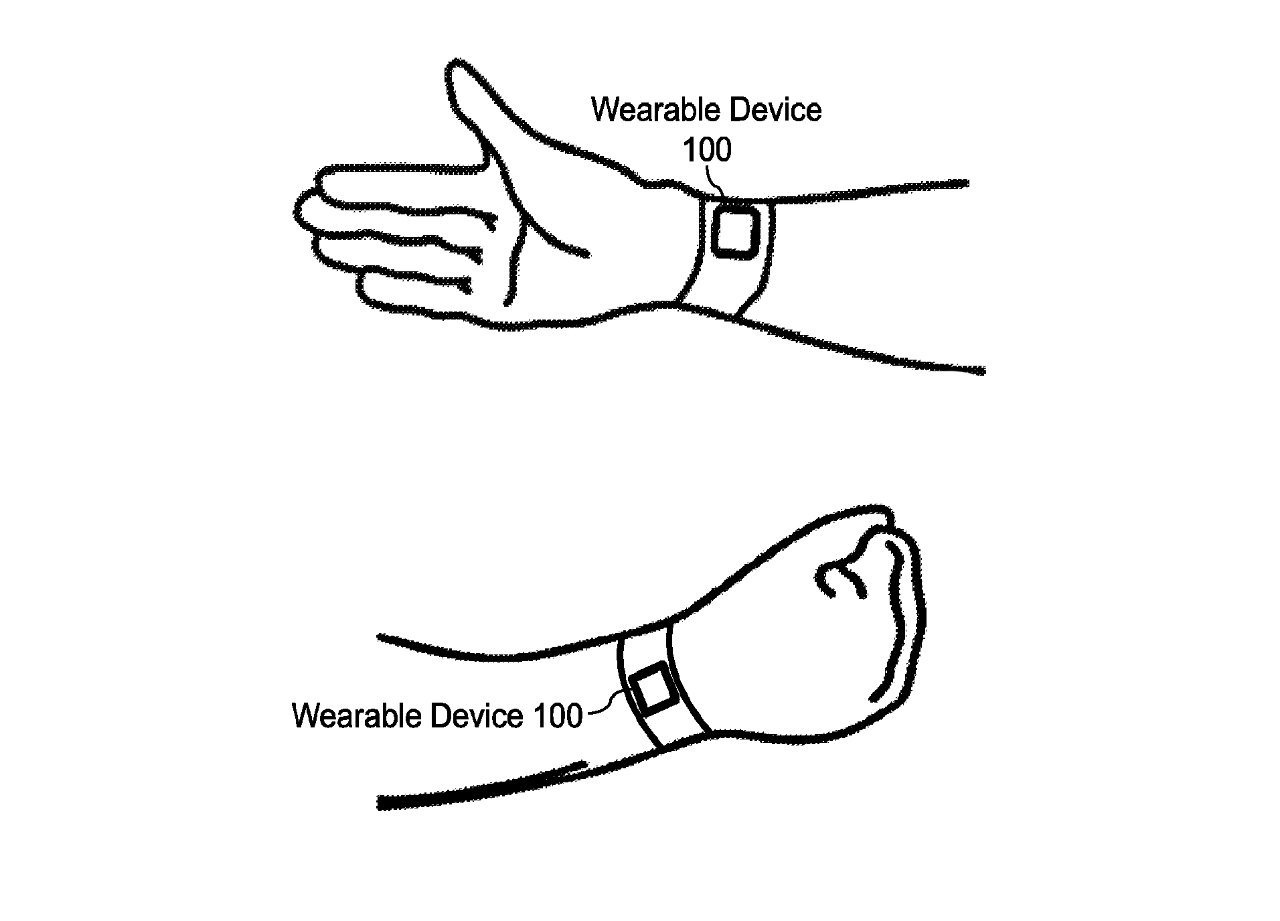

"The at least slight alteration of the wrist from having an open hand... to having a clenched fist... can cause the distance between wearable device 100 and the wearer's skin to change (e.g., increase or decrease)," explains Apple. "[An] optical sensor associated with wearable device can generate a signal that changes when the distance between the device and the wearer's skin changes."

Detail from the patent illustrating how different hand positions are recognizable by the optical sensor

Apple's patent application is less concerned with what an Apple Watch can do once a gesture is identified, though it does give examples such as "return to home screen" or "navigate forward."

In principle, the Watch could respond in whatever way Apple, or perhaps the user, decides. The application is more about proposing as many distinguishable gestures as possible, so that watchOS has many options.

That includes recognizing gestures performed over a short period of time, rather than always responding immediately to specific hand movements.

"For example, a wearer can clench the fist, hold the clenched fist for a certain amount of time, and subsequently open the hand to request a function different than one associated with clenching and unclenching the fist in rapid succession (e.g., as in the difference between a simple mouse cursor click and a double-click)," says Apple.
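The click versus double-click analogy can be sketched as a small classifier over timed clench events. This is a hypothetical illustration only: the `(clench_time, release_time)` input format, the one-second hold threshold, and the 0.4-second double-clench gap are assumptions, not values from the patent application.

```python
from typing import List, Tuple

HOLD_SECONDS = 1.0  # hypothetical: minimum duration for a "hold" gesture
DOUBLE_GAP = 0.4    # hypothetical: maximum pause between two quick clenches


def classify_clenches(events: List[Tuple[float, float]]) -> List[str]:
    """Classify (clench_time, release_time) pairs, in seconds, into commands."""
    commands = []
    i = 0
    while i < len(events):
        start, end = events[i]
        # A long clench followed by opening the hand is treated as a "hold".
        if end - start >= HOLD_SECONDS:
            commands.append("hold")
            i += 1
            continue
        # A second short clench soon after release forms a "double".
        if i + 1 < len(events):
            next_start, next_end = events[i + 1]
            if next_start - end <= DOUBLE_GAP and next_end - next_start < HOLD_SECONDS:
                commands.append("double")
                i += 2
                continue
        commands.append("single")
        i += 1
    return commands


events = [(0.0, 0.2), (0.4, 0.6), (2.0, 3.5), (5.0, 5.2)]
print(classify_clenches(events))  # ['double', 'hold', 'single']
```

Each resulting label could then be mapped to a command such as "return to home screen," which is how the same clenched-fist movement could request different functions depending on its timing.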

This patent application is credited to Ehsan Maani. Although the filing date for this particular application is May 27, 2021, Maani left Apple to work for Google in late 2014.

There is, however, a related initial filing dating to February 2014. Before leaving, Maani was a designer of Apple Watch's heart rate monitor algorithms.

Follow all the details of WWDC 2021 with the comprehensive AppleInsider coverage of the whole week-long event from June 7 through June 11, including details of all the new launches and updates.

Stay on top of all Apple news right from your HomePod. Say, "Hey, Siri, play AppleInsider," and you'll get the latest AppleInsider Podcast. Or ask your HomePod mini for "AppleInsider Daily" instead and you'll hear a fast update direct from our news team. And, if you're interested in Apple-centric home automation, say "Hey, Siri, play HomeKit Insider," and you'll be listening to our newest specialized podcast in moments.
