UK government lauds Apple's CSAM plans as it seeks to skirt end-to-end encryption

Priti Patel, Home Secretary of the UK, penned an op-ed this week highlighting a need for enhanced child protection online, and in doing so lauded Apple's now-postponed CSAM monitoring plans.

Patel's thoughts, published by The Telegraph and Britain's Home Office on Wednesday, begin with a call to action against sexual abuse of children, which is "incited, organized, and celebrated online."

In an effort to curb dissemination of child sexual abuse material and ensure public safety, Patel calls on "international partners and allies" to support the UK's approach of "holding technology companies to account and asking social media companies to put public safety before profits."

The home secretary cited Apple's CSAM monitoring plans as a novel solution to the problem.

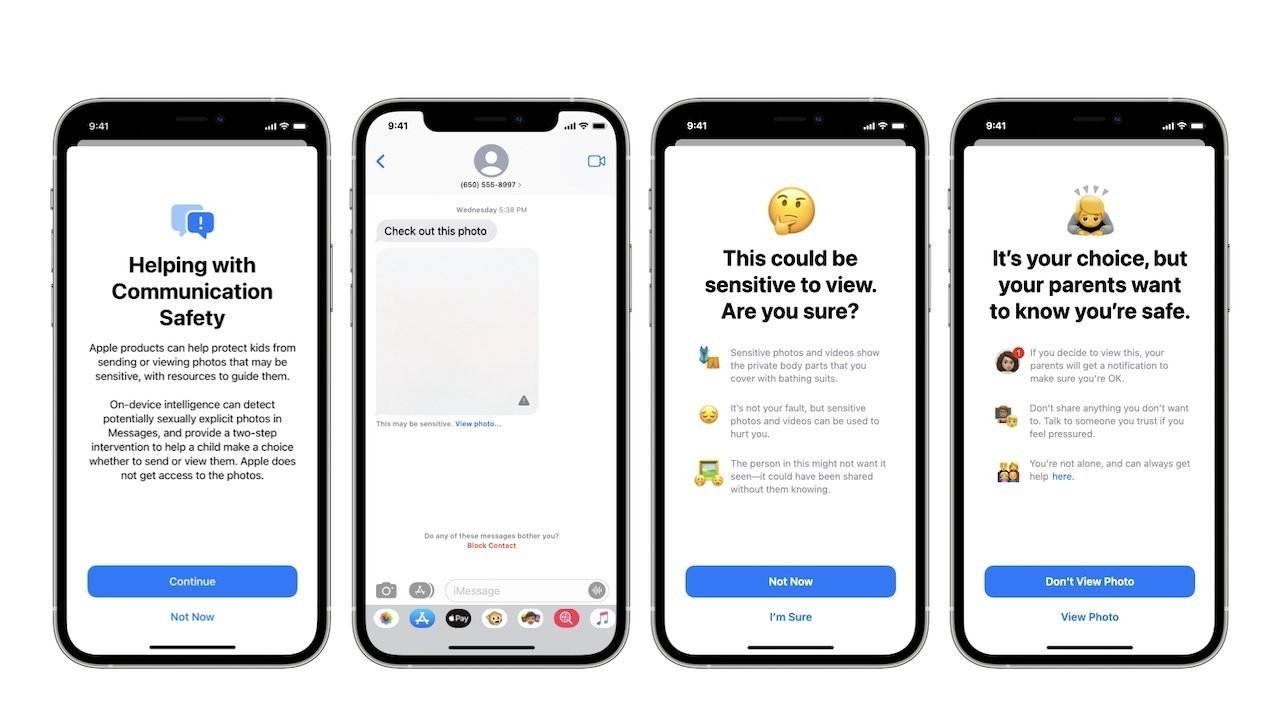

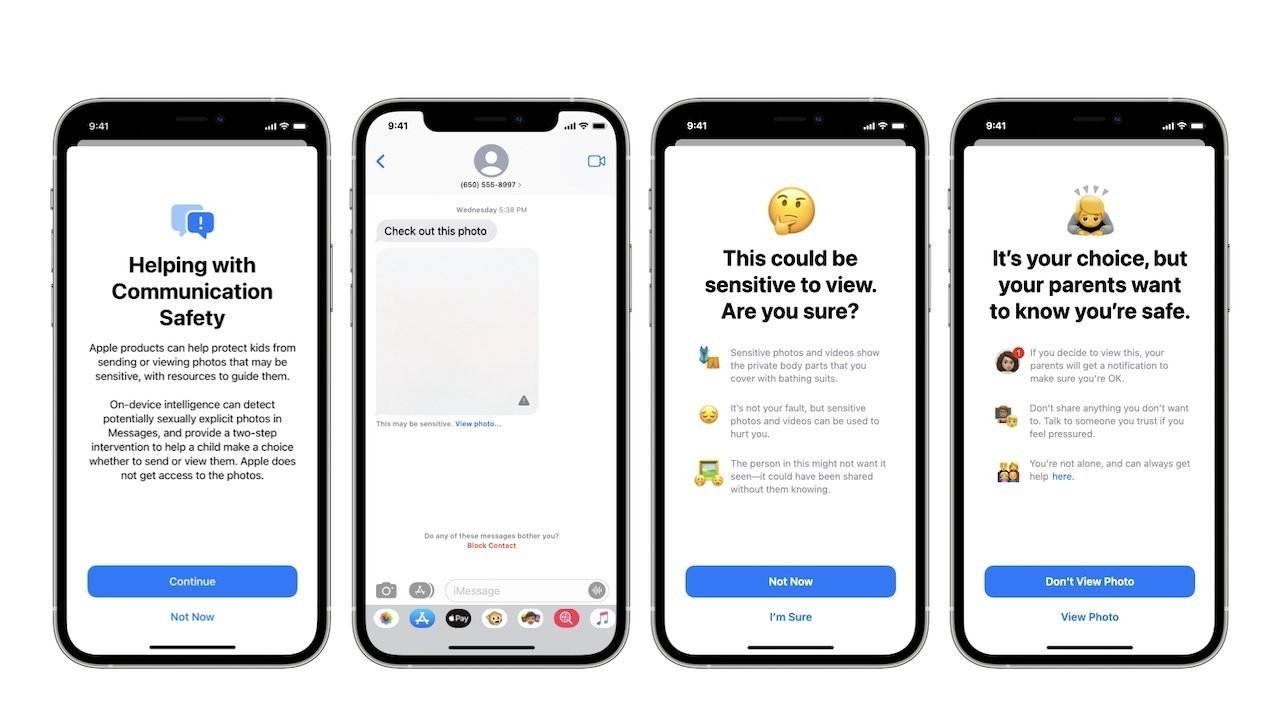

"Recently Apple have taken the first step, announcing that they are seeking new ways to prevent horrific abuse on their service," Patel writes. "Apple state their child sexual abuse filtering technology has a false positive rate of 1 in a trillion, meaning the privacy of legitimate users is protected whilst those building huge collections of extreme child sexual abuse material are caught out. They need to see though [sic] that project."

But Patel's wider push involves law enforcement access to trusted communications, a strategy that stands in stark contrast to Apple's corporate mantra of user privacy. End-to-end encryption, already in use by Apple's Messages and a variety of popular communications platforms, is specifically targeted as a specter to be feared.

Patel repeats a common refrain from governments opposed to inherently secure messaging systems, saying the technology obscures vital information that could be used in police investigations and complicates efforts to capture criminals. The government does not want to surveil its citizens, but protect them from "truly evil crimes," she says.

"The introduction of end-to-end encryption must not open the door to even greater levels of child sexual abuse - but that is the reality if plans such as those put forward by Facebook go ahead unchanged," Patel writes, referencing Facebook's plan to enable encryption technology for its Messenger product. "Hyperbolic accusations from some quarters that this is really about governments wanting to snoop and spy on innocent citizens are simply untrue. It is about keeping the most vulnerable among us safe and preventing truly evil crimes."

While it follows regional laws and regulations pertaining to legal requests for user data, Apple continues to develop software services that make it impossible for third parties to access certain information. Similar technologies have been applied to the company's hardware on the way to full stack protection.

As for Apple's CSAM initiative, the on-device CSAM monitoring solution faced stiff opposition from industry experts, privacy advocates and customers who argue the system will lead to mass surveillance. The uproar prompted Apple to postpone launch of the feature as it gathers feedback from interested parties.

Read on AppleInsider

Comments

They would first have had to get shareholders' approval for maintaining certain ethical principles no matter what the cost, for all time, and then advertise that policy widely on their investor pages, prospectuses, etc. before even entertaining the thought of CSAM scanning.

Now they and their users are already fucked: they admitted they could do it, and the pressures are already mounting. Once it is active for CSAM it will go to drugs, prostitution, political speech, traffic violations, etc.

You open Pandora’s box, you cannot control what’s coming out of it.

What isn’t irrelevant is that once the infrastructure is there, there’s no way Apple can (afford to) keep a lid on it, and it will creep into ever more areas, until it reaches spitting on the sidewalk under the guise of stopping the spread of a future pandemic.

The fact that who’s for it and who’s against it influences your stance on the matter only shows one thing: you never did, and you still don’t, understand the gravity of what this is all about, and you let yourself be baited by the “but the poor children” straw man.

Let's not try too hard to spread that around.

Can one of you please explain to me how that is not currently possible? Because I’m missing something. If desired, China could require Apple to surveil EVERYTHING on your device. There is absolutely no need for this CSAM tool to do that.

For years, Apple and every other online service have already been compelled to search through all user data stored on their servers and report illegal child pornography. Why haven’t they expanded that search into other areas? Can one of you explain that?

The current “laws” do not allow e2e encryption of stored data for that one and only reason; child pornography. This gives law enforcement [warranted] access to all your iCloud data, not just your illegal photos.

All Apple’s CSAM tools allow is that Apple can remain compliant with current laws (reporting illegal child pornography) while also offering users the ability to store the rest of their data (and photos) encrypted and out of prying eyes.

1. It may be extremely hard for you to grasp this basic point, but many people do understand this - Apple's iCloud servers are Apple's property and anything stored in iCloud by end-users is public data for all practical purposes (even though the owners of the data are the end-users). And Apple can scan data on their property for illegal content and report it to law enforcement agencies. BUT they have no business whatsoever looking into the data stored in a device which is "owned" by end-users. "Ownership of the property" is the key operative phrase here. Apple owns iCloud and they can do whatever the hell they want to do with it, as long as they "inform" end-users about it. End-users own the phones and Apple/Google/<anyone else> (at least the ones who "claim" to uphold the "privacy" of the end-users) have no business peeking into them.

2. The most important one - you mentioned "All Apple’s CSAM tools allow is that Apple can remain compliant with current laws (reporting illegal child pornography) while also offering users the ability to store the rest of their data (and photos) encrypted and out of prying eyes". This is pure SPECULATION on your part and please do NOT spread this RUMOR WITHOUT any basis again and again. Apple has NEVER mentioned that they WILL implement end-to-end encryption as soon as on-device CSAM scanning is enabled. NEVER. It is pure speculation by some of the AI forum members that Apple would do it. If you are so sure about it, can you please share Apple's official statement on this?

I can see you've extrapolated the effect of the solution to an absurd certainty of a totalitarian future as well. There's no actual evidence for any of that, you know; it's all just a bunch of ifs and maybes.

As my boss would say, “don’t come to me with problems, come to me with solutions,” but the only alternative solution I’ve heard so far is to do nothing.

A fact which also makes the comments about the U.K. becoming ‘communist/mini-China’ even more hilarious. But these are probably comments from ignorant types who probably couldn’t identify either China or the U.K. on a map, and who get worried when they occasionally cross the county line.

- Forced to resign after holding secret meetings with Israeli authorities without government approval.

- Multiple incidents of bullying staff. The last complaint of bullying cost British taxpayers hundreds of thousands of pounds to settle.

- Broke the ministerial code a second time by not declaring work outside parliament.

- Backed soccer fans who booed England players kneeling against racism (although she changed her tune when the team started winning games).

- Broke the ministerial code again by lobbying to get PPE contracts awarded to friends.

And that's before we get to just how incompetent she's been as a minister. She's hated by the left for revelling in being cruel and she's hated by the right for being completely ineffectual at stopping asylum seekers from reaching Britain. But, sure, anyone who dislikes Priti Patel is a racist and a communist.

I'm not a communist dude. Calm your crazy down.