App Privacy Report to debut in iOS 15.2 beta, code for Communication Safety appears [u]

Apple continues to roll out new features promised for inclusion in iOS 15, with the first iOS 15.2 beta version issued on Wednesday delivering a more robust app privacy tool and hinting at future implementation of an upcoming child safety feature.

Announced at this year's Worldwide Developers Conference in June, Apple's App Privacy Report provides a detailed overview of app access to user data and device sensor information. The feature adds to a growing suite of tools that present new levels of hardware and software transparency.

Located in the Privacy section of Settings, the App Privacy Report offers insight into how often apps access a user's location, photos, camera, microphone and contacts over a rolling seven-day period. Apps are listed in reverse chronological order, starting with the app that most recently accessed sensitive information or tapped into data from one of the iPhone's sensors.
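
For readers curious how such a summary might be assembled, the following is a minimal Python sketch of a rolling seven-day window with most-recent-first ordering. It is an illustration only, not Apple's implementation: the `AccessEvent` record, the `summarize_recent_access` function and the sample app names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AccessEvent:
    """Hypothetical record of one app touching one protected resource."""
    app: str
    resource: str  # e.g. "location", "photos", "camera", "microphone", "contacts"
    timestamp: datetime


def summarize_recent_access(events, now, window_days=7):
    """Return (app, access_count, last_access) rows, most recently active app first.

    Only events inside the rolling window [now - window_days, now] are counted.
    """
    cutoff = now - timedelta(days=window_days)
    summary = {}
    for event in events:
        if not (cutoff <= event.timestamp <= now):
            continue  # outside the rolling window
        count, last = summary.get(event.app, (0, event.timestamp))
        summary[event.app] = (count + 1, max(last, event.timestamp))
    rows = [(app, count, last) for app, (count, last) in summary.items()]
    # Sort by last access time, newest first.
    return sorted(rows, key=lambda row: row[2], reverse=True)


if __name__ == "__main__":
    now = datetime(2021, 10, 27, 12, 0)
    sample = [
        AccessEvent("Maps", "location", now - timedelta(hours=1)),
        AccessEvent("Camera", "camera", now - timedelta(days=2)),
        AccessEvent("Maps", "location", now - timedelta(days=3)),
        AccessEvent("OldApp", "contacts", now - timedelta(days=8)),  # too old
    ]
    for app, count, last in summarize_recent_access(sample, now):
        print(app, count, last.isoformat())
```

Events older than seven days simply drop out of the summary, which is what makes the window "rolling" rather than a fixed calendar week.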

Apple's new feature also keeps track of recent network activity, revealing domains that apps reached out to directly or through content loaded in a web view. Users can drill down to see which domains were contacted, as well as what websites were visited during the past week.

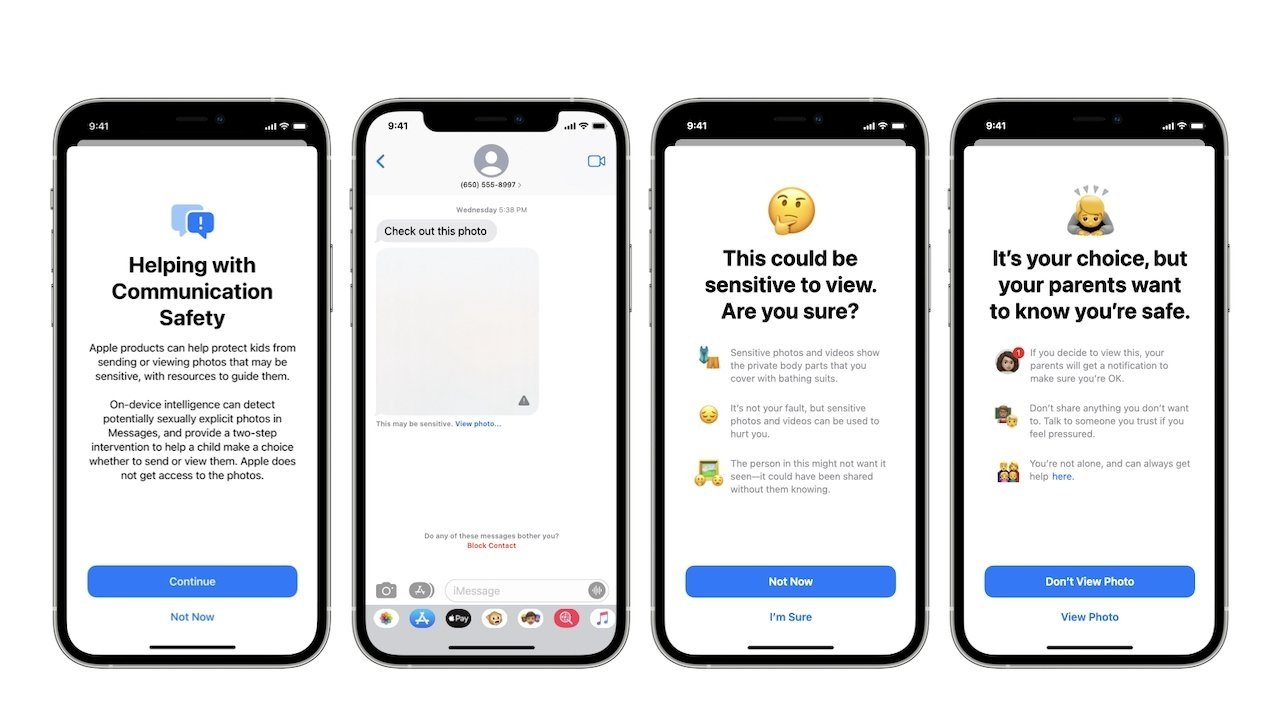

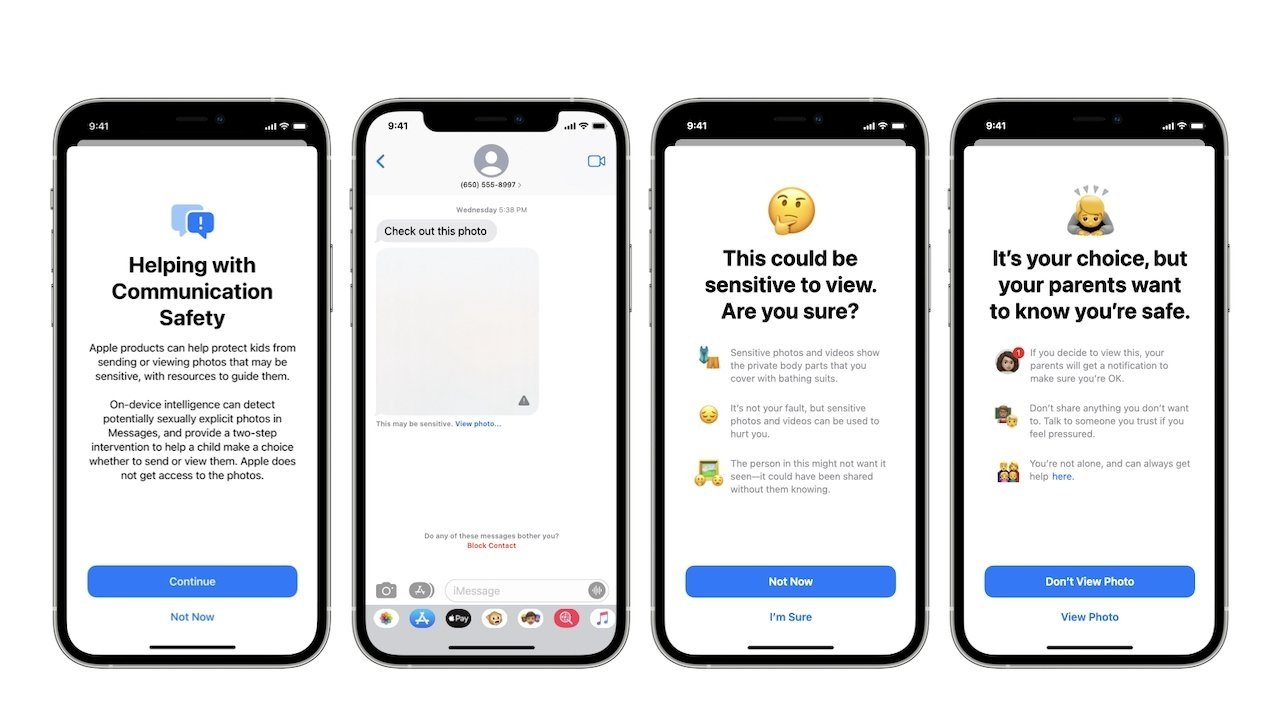

In addition to the new App Privacy Report user interface, iOS 15.2 beta includes code detailing Communication Safety affordances designed to protect children from sexually explicit images, reports MacRumors.

Built into Messages, the feature automatically obscures images deemed inappropriate by on-device machine learning algorithms. When such content is detected, children under 13 years old are informed that a message will be sent to their parents if the image is viewed. Parents of children between the ages of 13 and 17 are not notified.
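
The age rule as described in this report can be written out as a short Python sketch. It models only the behavior stated above and is not drawn from Apple's code: the `FlaggedImageOutcome` type, the `handle_image` function and the constant name are hypothetical, and the sketch assumes the recipient is a child account (the report does not describe behavior for adults).

```python
from dataclasses import dataclass

# "Children under 13", per the report; the constant name is an assumption.
PARENT_NOTIFICATION_MAX_AGE = 12


@dataclass(frozen=True)
class FlaggedImageOutcome:
    obscured_initially: bool            # image is blurred before the child chooses
    warns_of_parent_notification: bool  # child is told parents will be notified
    notifies_parents: bool              # notification actually sent


def handle_image(child_age: int, flagged: bool, viewed: bool) -> FlaggedImageOutcome:
    """Apply the reported rule to one incoming image for a child account."""
    if not flagged:
        return FlaggedImageOutcome(False, False, False)
    under_13 = child_age <= PARENT_NOTIFICATION_MAX_AGE
    return FlaggedImageOutcome(
        obscured_initially=True,
        warns_of_parent_notification=under_13,
        # Parents hear about it only if an under-13 child chooses to view.
        notifies_parents=under_13 and viewed,
    )
```

Under this reading, a 15-year-old who views a flagged image still sees it obscured at first, but no parental notification is generated.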

Messages will display a variety of alerts when images are flagged, according to code uncovered by MacRumors contributor Steve Moser:

- You are not alone and can always get help from a grownup you trust or with trained professionals.

- You can also block this person.

- You are not alone and can always get help from a grownup you trust or with trained professionals. You can also leave this conversation or block contacts.

- Talk to someone you trust if you feel uncomfortable or need help.

- This photo will not be shared with Apple, and your feedback is helpful if it was incorrectly marked as sensitive.

- Message a Grownup You Trust.

- Hey, I would like to talk with you about a conversation that is bothering me.

- Sensitive photos and videos show the private body parts that you cover with bathing suits.

- It's not your fault, but sensitive photos can be used to hurt you.

- The person in this may not have given consent to share it. How would they feel knowing other people saw it?

- The person in this might not want it seen; it could have been shared without them knowing. It can also be against the law to share.

- Sharing nudes to anyone under 18 years old can lead to legal consequences.

- If you decide to view this, your parents will get a notification to make sure you're OK.

- Don't share anything you don't want to. Talk to someone you trust if you feel pressured.

- Do you feel OK? You're not alone and can always talk to someone who's trained to help here.

- Nude photos and videos can be used to hurt people. Once something's shared, it can't be taken back.

- It's not your fault, but sensitive photos and videos can be used to hurt you.

- Even if you trust who you send this to now, they can share it forever without your consent.

- Whoever gets this can share it with anyone; it may never go away. It can also be against the law to share.

Update: Apple has informed MacRumors that Communication Safety will not debut with iOS 15.2, nor will it be released as reported.

Read on AppleInsider

Comments

https://appleprivacyletter.com/

A feature like this can open the doors to abusive requests by hostile governments, compelling Apple to flag "inappropriate" content like political cartoons, memes critical of the regime, images promoting LGBT equality or other important social issues. The "penalty" for non-compliance would be to have those devices banned for sale or for use at all within those countries.