Australia orders Apple & others to disclose anti-CSAM measures

An Australian regulator has told Apple, Meta, and Microsoft to detail their strategies for combating child abuse material, or face fines.

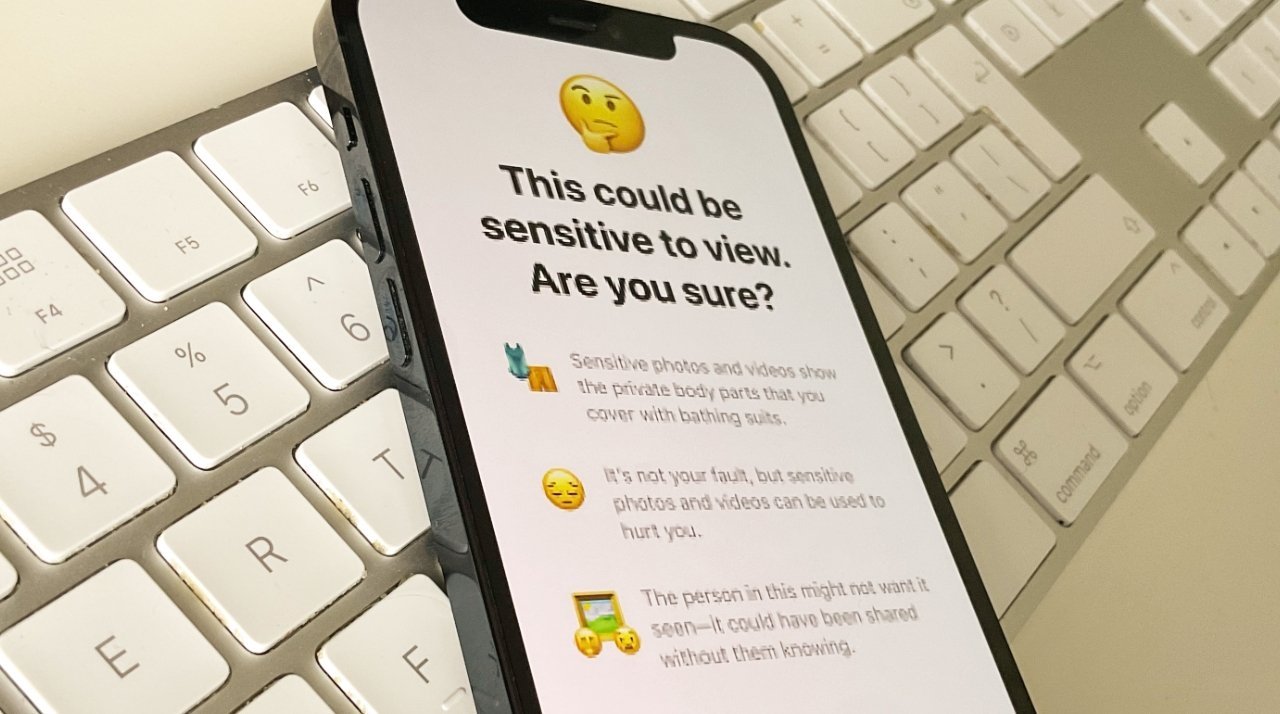

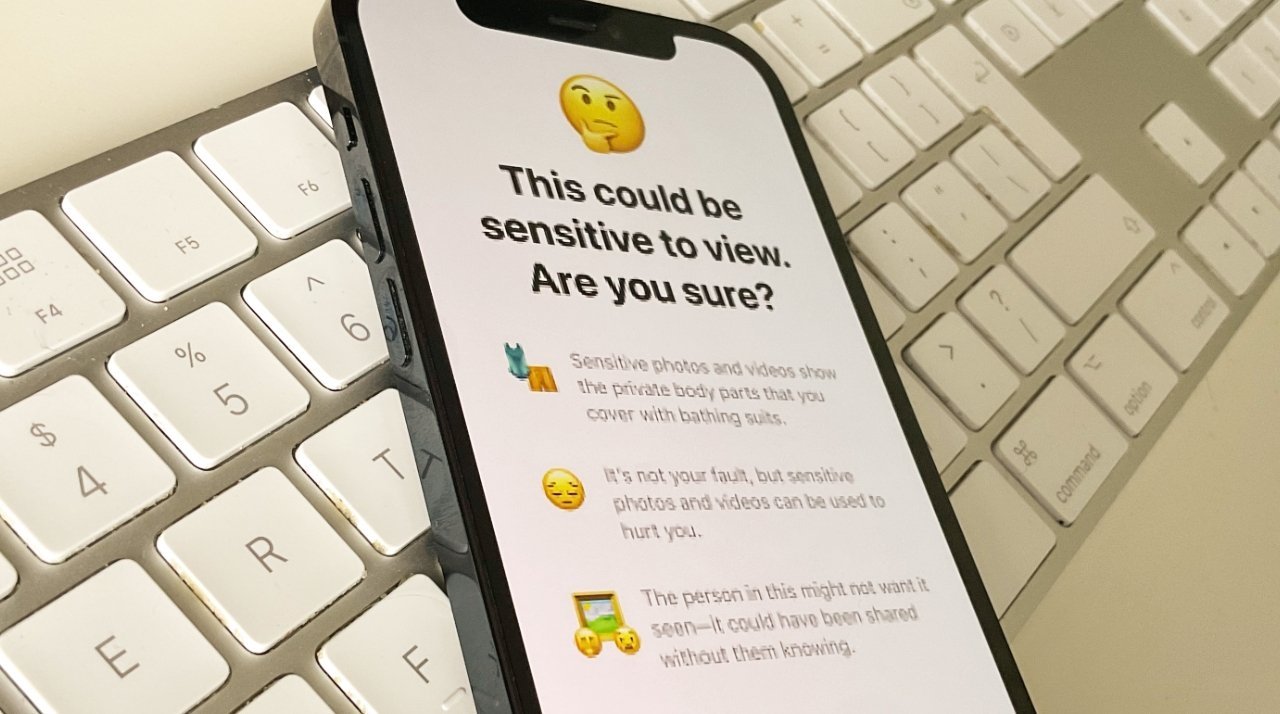

Apple famously announced measures to prevent the spread of Child Sexual Abuse Material (CSAM) in August 2021, only to face criticism over security and censorship. Apple backpedaled on its plans in September 2021, but as AppleInsider has noted, the addition of such measures is now inevitable.

According to Reuters, Australia's e-Safety Commissioner has sent what are described as legal letters to Apple and other Big Tech platforms. A spokesperson for the Commissioner said that the letters use new Australian laws to compel disclosure.

"This activity is no longer confined to hidden corners of the dark web," said commissioner Julie Inman Grant in a statement seen by Reuters, "but is prevalent on the mainstream platforms we and our children use every day."

"As more companies move towards encrypted messaging services and deploy features like livestreaming," she continued, "the fear is that this horrific material will spread unchecked on these platforms."

It's not known in what detail or form Apple, Meta, and Microsoft are now required to report on their measures. However, they have 28 days from the issuing of the letter to do so.

The Australian regulator has asked for information about strategies to detect and also to remove CSAM. If the firms do not respond within the 28 days, the regulator is empowered to fine them $383,000 for every subsequent day they do not comply.

Apple has not commented on the issue. A Meta spokesperson said the company was reviewing the letter and continued to "proactively engage with the e-Safety Commissioner on these important issues."

A Microsoft spokesperson told Reuters that the company received the letter and plans to respond within the 28 days.

Read on AppleInsider

Comments

and of course it’s australia

An author’s opinion does not mean it’s inevitable.

Apple has probably been doing server side CSAM for a while now. I see no reason to move it client side, as I’ve detailed before.

I’ve also detailed that the “smart” criminals probably already use something else to transit their materials. Using these big name services is like doing your business in broad daylight. Client side CSAM only punishes the honest and the really stupid.

Who is the criminal here?

I think this proves that the recent editorial of AI is correct, Apple’s CSAM screening program is inevitable.