The music industry wants Apple Music & Spotify to block AI music training

AI services have been training on music hosted on streaming services like Apple Music, and Universal Music Group wants it to stop.

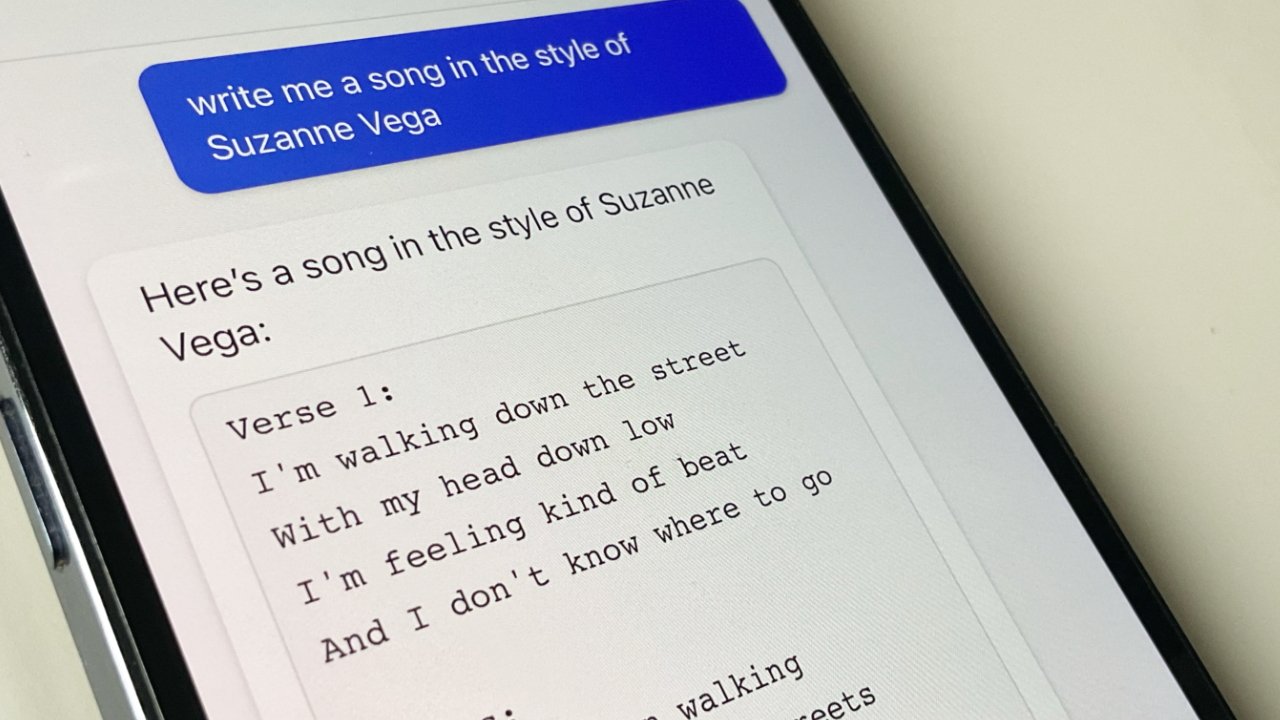

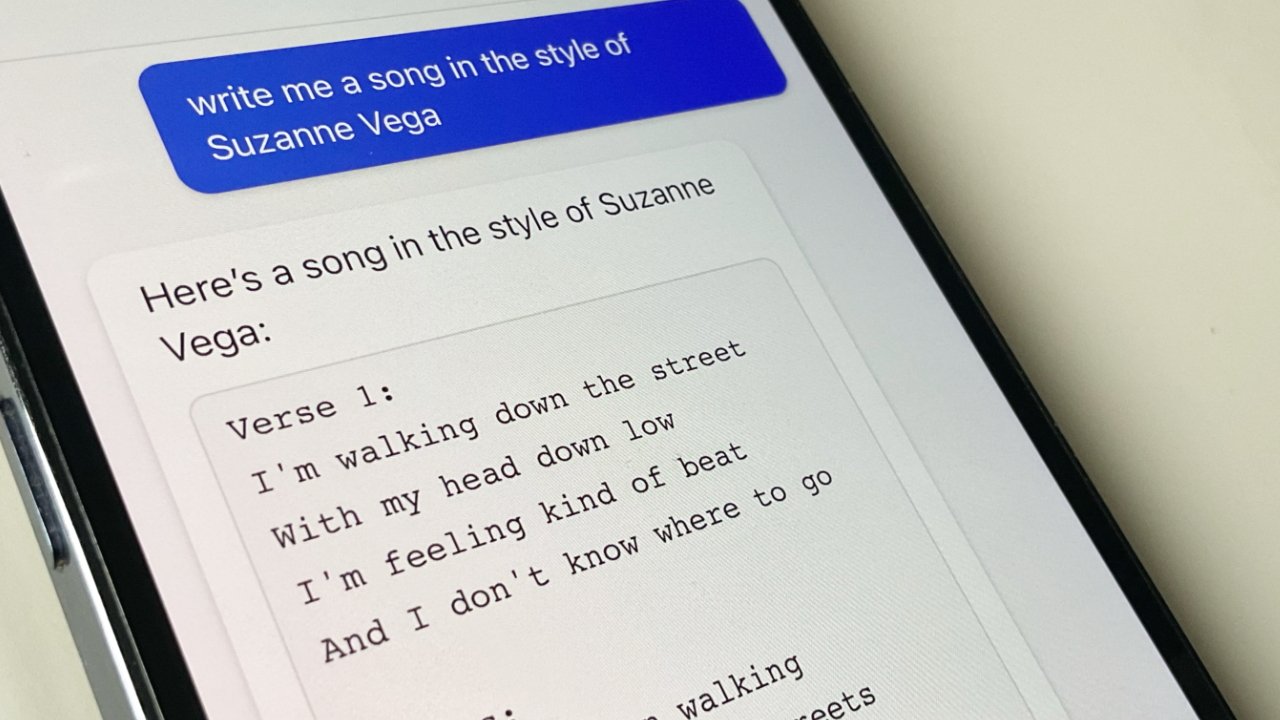

Most criticism of AI such as ChatGPT earning money off the backs of unpaid creative people has focused on text. But now, according to the Financial Times, record labels are concerned about music.

Universal Music Group (UMG), responsible for around a third of the world's music, reportedly contacted streaming services in March 2023 concerning AI. The group told the streamers that AI systems have been trained by scraping lyrics and melodies from

"We have become aware that certain AI systems might have been trained on copyrighted content," said UMG's email to streamers, "without obtaining the required consents from, or paying compensation to, the rightsholders who own or produce the content."

UMG asked the streamers to block access to their music catalog for developers using it for training. "We will not hesitate to take steps to protect our rights and those of our artists," continued the group's email.

"We have a moral and commercial responsibility to our artists to work to prevent the unauthorised use of their music and to stop platforms from ingesting content that violates the rights of artists and other creators," a UMG spokesperson told the publication. "We expect our platform partners will want to prevent their services from being used in ways that harm artists."

"This next generation of technology poses significant issues," an unnamed source told the Financial Times. "Much of [generative AI] is trained on popular music."

"You could say: compose a song that has the lyrics to be like Taylor Swift, but the vocals to be in the style of Bruno Mars, but I want the theme to be more Harry Styles," continued the source. "The output you get is due to the fact the AI has been trained on those artists' intellectual property."

Generative AI systems, such as Large Language Models (LLMs), are trained on enormous datasets. Google, for instance, reportedly trained a system called MusicLM with 280,000 hours of music.

Google has not released MusicLM publicly, though, as it found that 1% of the music it generated was identical to previous recordings.

The Financial Times says that Spotify declined to comment. Apple has not yet commented publicly, nor are we expecting it to respond to our queries on the matter.

Apple itself is reportedly working on creating music via AI. In 2022, it bought AI Music, a UK-based startup specializing in the field.

Read on AppleInsider

Comments

My nephew writes music for movies and TV. He’s very good at what he does and is paid well for it. But I can see a day when the studios tell him, “Yes, your music is fantastic, but we can get good enough music from the AI composer for a few pennies.” At that point his career will be over and he, his wife, and their two kids will be out on the street.

This is the real cost of AI moving into the arts.

Once again, the music business is a generation behind in technology and its effect on their business model. They are like a dinosaur in quicksand. A generation ago, file-sharing nearly destroyed the business, all because these companies didn't have the foresight to create a usable, simple and economical way of purchasing digital music. So, their response was to go Defcon One on not just companies but individuals (so as to make an example of them). This paved the way for Apple's iTunes Music Store, which they were also slow to embrace. Now we're in the streaming age, and the shortsighted hubris continues.

So an AI model analyzes songs on various services. But before that occurs, the AI model has to be allowed access to that material. That means a human has to allow the AI model to do that, presumably through a subscription. So, there's really no legal or moral basis to say that AI isn't allowed to listen. Moreover, it apparently hasn't occurred to the Music Dinosaurs that if someone gives AI a prompt like the one above, it means they are interested in Taylor Swift, Bruno Mars, and Harry Styles. Repeat this, and it only increases the chances of more awareness of the artists. It's something that content creators and even publishers have figured out with YouTube. Why would AI be any different?

Well, that's not necessarily true. First, it won't happen overnight. Secondly, technological change always destroys some jobs and creates others. And while AI may change the way he does his job (perhaps very significantly), it's unlikely to replace him completely. AI cannot make artistic choices. It can assist with them, but it can't make them. I do a lot of video editing with audio. AI could help me sync clips to the audio, time transitions, suggest themes, etc. But it can't pick which royalty-free music fits the voice of the video beyond generic "emotional piano music" or "country two step". Creating an emotional reaction and communicating feelings requires... feelings.

A scam? I don't think that fits at all. It may turn out to be illegal, but it's not a scam. I also don't know that the AI model requires "constant access." Once the model understands Taylor Swift's lyric style and Bruno Mars' bass lines, for example, it doesn't forget. It's not much different than a model recommending specific content based on mood, tempo, style, instrumentation, etc. Go over to Pixabay and tell me if you think all of the content is human-curated. These models need to learn (train) on styles...I'm not sure how one can legally prevent programmers from training them on streaming services.

And it won’t happen with this. Last fall I read an article where Netflix had scanned their animation back catalogue to train an AI. Then they fired their background animators and are using the AI to do the backgrounds, landscapes, room interiors and such. And the animators? Gone, no more work, they were cut loose without a second thought. Netflix saved money, and the artists DIDN’T get better jobs. Is the animation as good? No, but Netflix saved money.

A few years ago I read where studios were experimenting with computer generated scripts. Back then they needed real writers to clean them up and make them actually usable, but it was the beginning. We are nearly to the point where AI scripts will be “good enough”. Couple that with AI animation and AI music, and you won’t need any creative people. Oh, it will start with low budget, direct to disk crap aimed at children. The industry mostly doesn’t respect them as an audience anyway. But after cutting their teeth on that it will move up.

Stay tuned: in 2035, you will be able to stream a film that is all AI-created and done in CGI. No actual person below the Producer involved. No writers, musicians, animators, or actors needed. It will have cost pennies to make and will rake in the money.

Just too bad the people that used to work in that industry won’t be able to afford to see it.

https://arstechnica.com/information-technology/2023/02/man-beats-machine-at-go-in-human-victory-over-ai/

So when Google says it "trained" an "AI" with 280,000 hours of music...that just means there is a 280,000 hour music database that it has constant access to.

The fundamentals of Copyright are to ensure the evolution of technology by protecting a person or entity's right to profit from their work without undue competition from the appropriation of their IP.

The founders of AI technologies are feverishly looking for a way to monetize their work but this cannot happen at the expense of creators.

The DMCA is going to have to revise its language to encompass a process in which creators can make a proper claim if they feel like their content has been appropriated in derivative works.

Current AI pretty clearly functions by scraping vast quantities of existing human-generated work, chopping it into component parts, and then recombining those parts per user instructions. That's not artistic creation. That's just "sampling" on steroids. Unless or until there is an actual spark of innovative creation involved, the writers of AI code are simply making IP theft apps, and they should be treated as such.

I can tell you, as a musician, it is not that easy to get a good recording this way. And most will find out and abandon such a project. Sure, there will always be a nefarious few who will go to great lengths, but this is a problem we've always had and have fought rather successfully. Making piracy easier isn't something the music industry is going to support.

There is no possibility that these musicians didn't do precisely what they are accusing AI of doing.

What do you think Apple TV's Schmigadoon is doing? Though I might have expected writers to do a better job.

I agree. AI samples music. And sampling is considered copyright infringement when the original creator or owner has not consented and been paid for the samples.