Why Apple uses integrated memory in Apple Silicon -- and why it's both good and bad

Apple's Unified Memory Architecture first brought changes to the Mac with Apple Silicon M1 chips. There are clear architectural benefits for the hardware -- and it is both good and bad for consumers. Here's why.

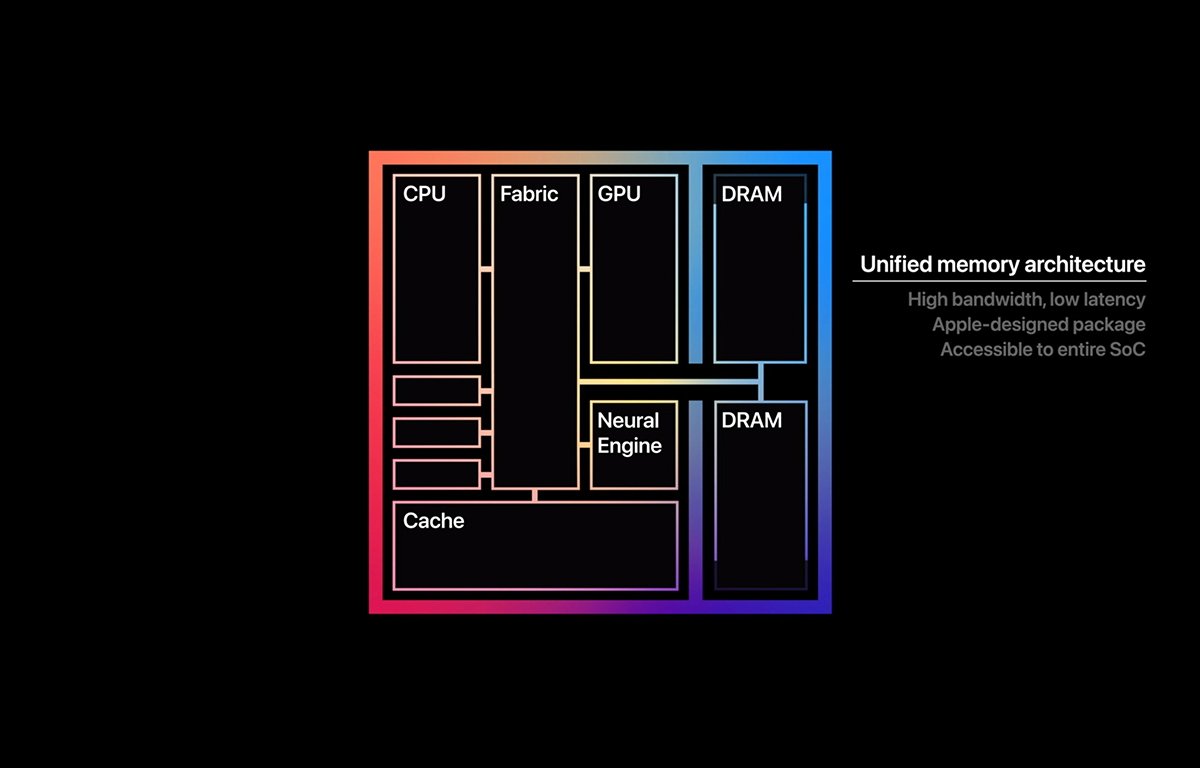

Apple's Unified Memory Architecture (UMA) was announced in June 2020 along with its new Apple Silicon CPUs. The UMA has a number of benefits compared to more traditional memory approaches and represents a revolution in both performance and size.

In traditional desktop and laptop computer design, the main system memory known as RAM sits on a system bus which is separate from the CPU and the GPU.

A memory controller is typically required to carry each request between the CPU and main system memory across that bus, and devices that move data in and out of memory get the CPU's attention with interrupts. Interrupts are hardware signals different parts of a computer use to pause what the CPU is doing while a task is handled.

Interrupts cause delays in system processing.

So for example, every time a device finishes a memory transfer or a screen refresh needs servicing, an interrupt is generated, the CPU pauses its current work, and the task completes. When the task is done, the CPU resumes general processing.

Direct Memory Access (DMA) was introduced later, but due to motherboard sizes and distances, RAM access can still be slow. DMA is a concept in which some computer subsystems can access memory independently of the CPU.

In DMA, the CPU initiates a memory transfer, then performs other work. When the DMA operation is complete, the DMA controller generates an interrupt signalling the CPU that the data is ready.
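The DMA sequence described above can be sketched as a toy simulation. This is a minimal illustration, not how any real controller is programmed: the `DmaController` class, its one-word-per-tick speed, and the `run` helper are all hypothetical names and assumptions made for the example.

```python
class DmaController:
    """Toy DMA engine (hypothetical): copies one word per tick, no CPU involved."""

    def __init__(self):
        self.src = []
        self.dst = []
        self.remaining = 0
        self.interrupt_pending = False

    def start(self, src, dst):
        # The CPU only programs the transfer; it does not copy any data itself.
        self.src, self.dst = src, dst
        self.remaining = len(src)
        # An empty transfer is complete immediately.
        self.interrupt_pending = self.remaining == 0

    def tick(self):
        # Move one word per tick; raise the completion interrupt on the last one.
        if self.remaining == 0:
            return
        index = len(self.src) - self.remaining
        self.dst[index] = self.src[index]
        self.remaining -= 1
        if self.remaining == 0:
            self.interrupt_pending = True


def run(words):
    """Simulate a CPU that keeps working while the DMA transfer is in flight."""
    source = list(words)
    dest = [None] * len(source)
    dma = DmaController()
    dma.start(source, dest)  # step 1: CPU initiates the transfer

    cpu_work_done = 0
    log = []
    while True:
        dma.tick()
        if dma.interrupt_pending:
            # Step 3: the interrupt pauses normal work to handle the finished copy.
            dma.interrupt_pending = False
            log.append("interrupt: data ready")
            break
        cpu_work_done += 1  # step 2: unrelated work done in the meantime
    return dest, cpu_work_done, log
```

Calling `run([10, 20, 30, 40])` returns the copied data, a count of the work units the simulated CPU got through while waiting, and a log holding the single completion interrupt.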

Memory transfers are but one source of interrupts in traditional computer architecture. In general, the more buses and interrupts, the more performance bottlenecks there are on a computer.

System on a chip

Graphics Processing Units (GPUs) and game consoles have long solved this problem by integrating components into single chips, which eliminates buses and interrupts. GPUs, for example, usually have their own RAM attached to the chip, which speeds up processing and allows for faster graphics.

This System on a Chip (SoC) design is the new trend in system and CPU design because it both increases speed and reduces component counts - which reduces the overall cost of products.

It also allows systems to be smaller. Smartphones have long used SoC designs to reduce size and save power, such as with Apple's own iPhone ARM SoC.

Sony's PlayStation 2 was the first consumer game console to ship with an integrated SoC called the Emotion Engine which integrated over a dozen traditional components and subsystems onto a single die.

Apple's M1 and M2 ARM-based chip designs are similar. Each is essentially an SoC design that integrates CPUs, GPUs, main RAM, and other components into a single package.

With this design, instead of the CPU having to access RAM contents across a memory bus, the RAM is connected directly to the CPU. When the CPU needs to store or retrieve data in RAM it simply goes directly to the RAM chips.

With this change, there are no more bus interrupts.

Apple's M1 integrated architecture.

This design eliminates RAM bus bottlenecks, which vastly improves performance. M1 Max, for example, provides 400GB/sec of memory throughput - approaching that of modern game consoles such as Sony's PlayStation 5.
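As a rough back-of-the-envelope check on that figure, theoretical peak bandwidth is just bus width times transfer rate. The sketch below assumes a 512-bit LPDDR5-6400 interface for M1 Max and, for comparison, a dual-channel (128-bit) DDR4-3200 desktop; neither configuration is stated in this article, and real-world throughput is lower than these theoretical peaks.

```python
def peak_bandwidth_gb_s(bus_width_bits, mega_transfers_per_sec):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_sec * 1e6 / 1e9


def seconds_to_stream(gigabytes, bandwidth_gb_s):
    """Best-case time to read a buffer of the given size once."""
    return gigabytes / bandwidth_gb_s


# Assumed configurations (not taken from the article):
M1_MAX_GB_S = peak_bandwidth_gb_s(512, 6400)     # 512-bit LPDDR5-6400
DDR4_DUAL_GB_S = peak_bandwidth_gb_s(128, 3200)  # 2 x 64-bit DDR4-3200


def compare(buffer_gb=8):
    """Return best-case streaming times for the two assumed setups."""
    return {
        "m1_max_seconds": seconds_to_stream(buffer_gb, M1_MAX_GB_S),
        "ddr4_dual_seconds": seconds_to_stream(buffer_gb, DDR4_DUAL_GB_S),
    }
```

Under these assumptions the M1 Max works out to roughly 410GB/sec, in line with the 400GB/sec quoted above, against roughly 51GB/sec for the assumed DDR4 setup.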

SoC integration is one of the main reasons the M1 and M2 series of CPUs are so fast - and why modern console game-level graphics are finally coming to the Mac.

It's why macOS finally feels snappy and responsive after feeling slightly rubbery for decades.

SoCs also vastly reduce power consumption and heat, which makes them ideal for laptops, phones, tablets, and other portable devices. Less heat also means the components last longer and suffer less material degradation over time.

Heat affects system performance over time as it slowly degrades the materials in components, which leads to slightly lower performance. This is one of the reasons why very old computers seem to "slow down" with time, and a big cause of failures.

"Heat is the enemy of electronics" as they say in the EE world.

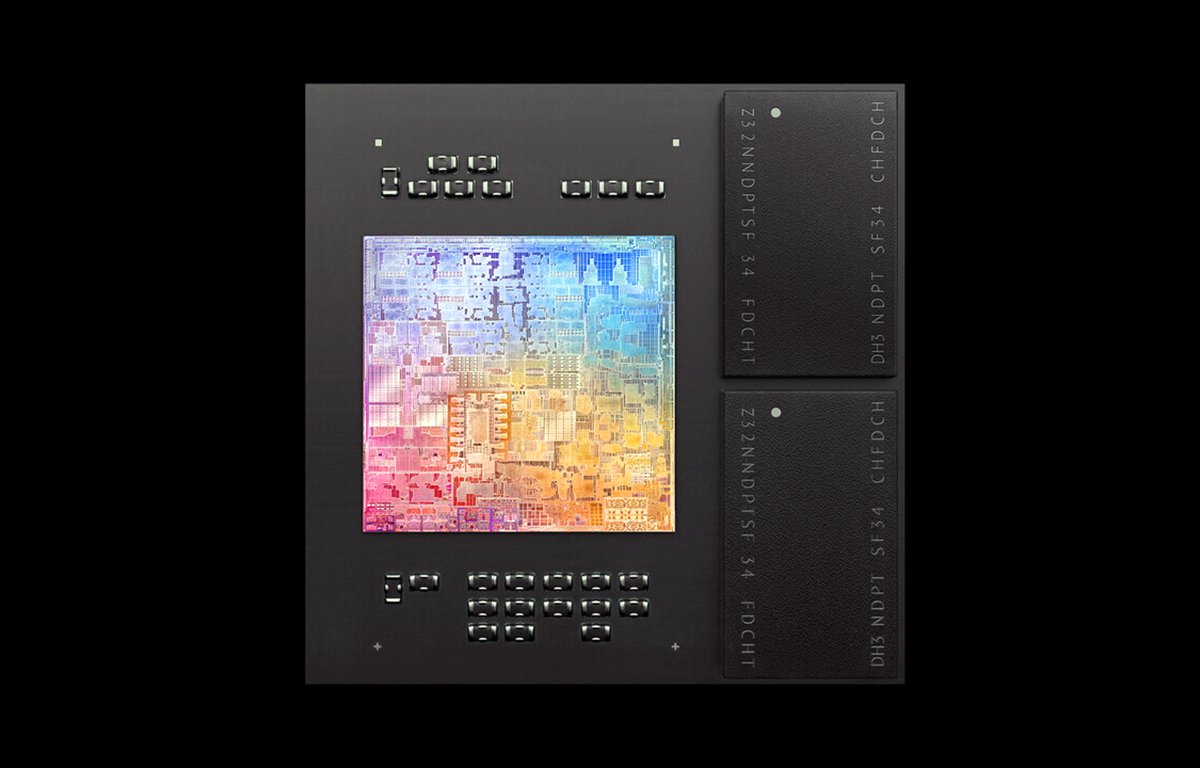

Apple's M1 CPU with integrated RAM.

The downside of integrated memory in Apple Silicon

While Apple's SoC designs have proven to be vast improvements over its traditional designs, there are some downsides.

The first and most obvious one is upgrades -- with the system RAM built into the CPU package itself, there's no way to upgrade the RAM later, except to replace the CPU - which, with modern Surface-Mount Device (SMD) soldering technology, you probably wouldn't want to do.

Earlier Mac models had banks of RAM DIMMs (Dual in-line Memory Modules) or memory "sticks" which could be swapped in and out for larger sizes to upgrade memory.

With Apple Silicon that option goes away, since the RAM chips themselves are built into the CPU package. When you buy an Apple Silicon Mac, you're stuck with whatever RAM size you initially ordered.

Another downside is that if the RAM or CPU fails, the entire thing fails. There's no replacing just one part; you have to replace it all.

Modern Mac motherboards are tiny and use mostly SMD components. In most cases, it's cheaper and faster just to replace the entire thing, or just buy a new Mac.

Another equally obvious downside to SoCs is that the use of integrated GPUs means there's no way to upgrade your Mac's graphics card later for a faster or bigger version. And with Apple dropping support for external Thunderbolt GPU expansion boxes in Apple Silicon, even external GPU expansion is no longer an option.

What all of this means, of course, is that modern Macs are becoming more and more like "appliances" than like computers as we have traditionally thought of them.

Overall, this is a good thing.

It means you're going to want to buy a new Mac every few years, but the performance improvements make that upgrade path worth it. Compared to Apple's old Intel-based traditional architecture, Apple Silicon is a complete revolution in terms of performance.

As systems get smaller and smaller, so will devices. Laptops will become thinner and lighter, and battery life will continue to improve - even as performance improves over time.

In a few years' time, there's no doubt Apple will have advanced Apple Silicon far enough that a new Mac will make the cost worth it. Time is money, and the amount of work you can get done on modern Macs using Apple Silicon far outweighs the cost of upgrading.

You can read more about the technical details of Apple Silicon on Apple's developer website.

Read on AppleInsider

Comments

It appears that PCI-Express 6 will be amongst the first to really start to usher in CXL. Anandtech has a good write-up:

https://www.anandtech.com/show/17520/compute-express-link-cxl-30-announced-doubled-speeds-and-flexible-fabrics

I've seen too much kvetching about "Who is the Mac Pro for?", which is understandable, but moving to the next generation of memory types isn't being taken lightly, and the basic question is "why should a next-generation computing platform have to choose?"

The system should be able to leverage volatile, persistent, DRAM level speeds and RAM level speeds without the application or the end user fiddling.

In 5 years you shouldn't have to ponder how much memory you need to purchase, as the science should make the question relatively obsolete. You should be able to extend the memory, albeit at perhaps slightly slower speeds.

It doesn't hurt to buy both. Don't force each to be the other.

That mindset typically carries into other areas of life too: why doesn't everyone speak the same language, worship the same god, look the same, etc, etc. They feel this need for everything to be the same, and for some reason want to force everything to be that way. I'd really like to know the reason why, because for myself, diversity is what makes life interesting. And in both nature and technology, it's proven to have great benefits for survival and progress.

Apple is not going after the low end of the market. Nor is Apple going after the ultra high end where users really do need terabytes of RAM, or multiple high performance GPUs.

Think of the car market. Most car owners stick with the original rims, suspension, radio, radiator, engine, etc., even though many cars can be upgraded with performance-boosting chips, fancier rims, even more powerful engines. Most car owners are not interested in these sorts of upgrades, and will keep the car as they bought it, until they replace it.

The Mac is not the best solution for everyone. However, Apple is trying to make it the best solution for most.

Generally socketed memory is on an external bus. This lets various peripherals directly access memory. The bus arbitration also adds overhead.

Traditional CPUs try to overcome these memory bottlenecks by using multiple levels of cache. This can provide a memory bandwidth performance boost for chunks of recently accessed memory. However, tasks that use more memory than will fit in the cache may not benefit from these techniques.
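To illustrate the cache point, here is a small sketch of a single-level, fully associative cache with least-recently-used (LRU) eviction. Both the cache model and the looping access pattern are simplifying assumptions; real CPU caches are set-associative and multi-level, and the function names are made up for the example.

```python
from collections import OrderedDict


def lru_hit_rate(accesses, capacity):
    """Fraction of accesses served from a fully associative LRU cache."""
    cache = OrderedDict()
    hits = 0
    for address in accesses:
        if address in cache:
            hits += 1
            cache.move_to_end(address)     # mark as most recently used
        else:
            cache[address] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return hits / len(accesses)


def looping_accesses(working_set, passes):
    """Touch blocks 0..working_set-1 in order, repeated `passes` times."""
    return [block for _ in range(passes) for block in range(working_set)]


# Working set fits in the cache: only the first pass misses.
fits = lru_hit_rate(looping_accesses(working_set=8, passes=10), capacity=8)

# Working set is one block too large: every access evicts the block needed next.
thrashes = lru_hit_rate(looping_accesses(working_set=9, passes=10), capacity=8)
```

In this toy model, a loop over a working set that fits gets nearly all of its accesses from cache, while a loop that is just one block too big gets none, so every access goes out to main memory.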

Apple's "System on a Chip" design really does allow much higher memory bandwidth. Socketing the memory really would reduce performance.

Yes, and it will be fun to see the other companies come up with an answer to the Apple Vision Pro (small size, soon to get smaller in time) with their traditional ways of designing a computer system. Apple is a vertical computer company; the rest, the mainstream companies that Apple is in competition with, are not.

Nope

Yeah, not a greed thing. It’s an architecture thing. One line of extremely high performing chips for their product line is just smart.

Also, when was the last time any of you commenters had an issue with modular RAM or the CPU? I'll only accept issues from less than 10-15 years ago. Both of these components perform very well, as long as you get them from reputable manufacturers. I have my daughter's original white iBook and it still runs. The battery might have been replaced but nothing else.

When you look at the speed and capability of Apple's SoC and their unified memory, it's much faster and more capable than Apple's Intel Macs. People need to be open minded with this new architecture. Also take into account the ridiculously low wattage requirements. Intel and NVIDIA devices can be used to cook steaks on.

Read Anandtech's article if anyone is interested. The gcc performance of the M1 Max is comparable (not surpassing, but close) to the 12900K, and that's with 6 fewer cores.

Oh, and of course, Apple is designing its chips with a strict thermal limit (no higher than 5W per core), whereas the 12900K is pretty much at its peak.

I’d give up RAM sticks just for that performance. If I want a module, I’ll build a PC.

That’s far from the truth, and especially not when a soldered, proprietary system is required to give a huge performance boost.

The biggest strength of Wintel is that it's standardized, but with every standard, they're limiting themselves. Intel does want something like Apple's UMA, but imagine building a PC like that. No customer will be happy.

I say that on the latest Mac I've bought myself: a 2014 5K Retina with 24GB of RAM that is on its last legs.