Apple Vision Pro needs custom-designed high-speed DRAM

To handle its multiple cameras without visible lag, Apple Vision Pro is to use a 1-gigabit DRAM chip, custom-designed to work with the headset's R1 chipset.

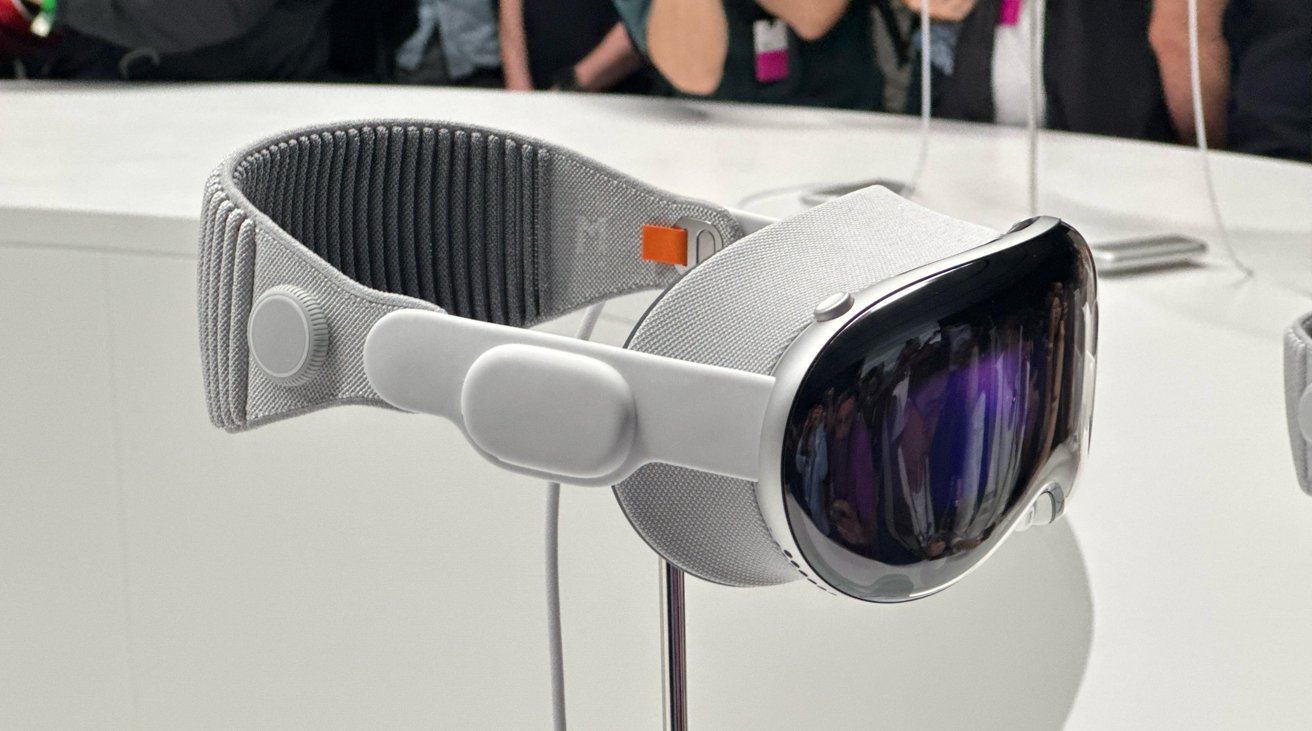

Apple Vision Pro

Apple's unveiling of the Vision Pro headset concentrated on use cases more than technical details, and little else will be known until the device goes on sale. However, Apple did say that the headset has 12 cameras, five sensors, and six microphones, with a lag of just 12 milliseconds.

Apple has said that this delay is eight times shorter than the blink of an eye. Now, according to The Korea Herald, a substantial part of that speed comes from a specially designed DRAM chip made by memory manufacturer SK Hynix.
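Taken at face value, Apple's comparison implies a particular blink duration. The short sketch below just does that arithmetic; the resulting figure of roughly 96 ms is derived from Apple's two numbers and is not a blink duration Apple itself has quoted.

```python
# Apple's stated figures: a 12 ms lag that is "eight times faster" than a blink.
LAG_MS = 12
SPEEDUP = 8

# The blink duration implied by the comparison (derived, not quoted by Apple).
implied_blink_ms = LAG_MS * SPEEDUP

print(f"Implied blink duration: {implied_blink_ms} ms")
```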

Representatives of SK Hynix told the publication only that "we cannot provide comments related to our clients." However, besides describing the speed of the DRAM design, the unnamed sources also say that SK Hynix will be the sole memory chip supplier for Apple Vision Pro.

Unnamed industry officials told The Korea Herald that the DRAM's design would help double the headset's potential data processing speed.

As well as having 1-gigabit DRAM capacity, the new design reportedly features an eightfold increase in the number of input and output pins. It's claimed that the DRAM will be attached to Apple's R1 chipset to become a single unit.
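To put the reported figures in perspective, the sketch below converts 1 gigabit into bytes and shows how peak bandwidth scales with the number of I/O pins. The report gives neither a baseline pin count nor a per-pin data rate, so the baseline here is a hypothetical one loosely modeled on a 16-bit LPDDR5 channel running at 6.4 Gbit/s per pin. It also assumes a binary gigabit (2^30 bits), as is usual for DRAM densities; with a decimal gigabit the capacity would be 125 MB instead.

```python
# Reported capacity: 1 gigabit of DRAM.
# Assumption: binary gigabit (2**30 bits), the usual convention for DRAM densities.
CAPACITY_BITS = 1 * 2**30

# bits -> bytes -> MiB
capacity_mib = CAPACITY_BITS / 8 / 2**20


def peak_bandwidth_gbs(io_pins: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s when each pin carries gbps_per_pin gigabits per second."""
    return io_pins * gbps_per_pin / 8


# HYPOTHETICAL baseline: not from the report, loosely modeled on a
# 16-bit LPDDR5 channel at 6.4 Gbit/s per pin.
BASELINE_PINS = 16
PIN_RATE_GBPS = 6.4

baseline = peak_bandwidth_gbs(BASELINE_PINS, PIN_RATE_GBPS)
# Eightfold pin count, assuming the per-pin rate stays the same.
widened = peak_bandwidth_gbs(BASELINE_PINS * 8, PIN_RATE_GBPS)

print(f"Capacity: {capacity_mib:.0f} MiB")
print(f"Baseline: {baseline:.1f} GB/s, with 8x pins: {widened:.1f} GB/s")
```

At a fixed per-pin rate, eight times the pins gives eight times the peak bandwidth. The report's claim of "double" the data processing speed is a separate, system-level figure, and the sources do not say how the two relate.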

This appears to be an evolution of the Unified Memory concept that Apple has been using in its M-series processors for some time. It's not presently clear, however, whether the DRAM associated with the R1 is also connected to the headset's M2 chip, or whether the M2 has its own RAM.

Apple's R1 chip is dedicated to processing inputs from the Vision Pro's large range of cameras and other sensors. It then works in conjunction with Apple's M2 chip, which is the headset's main processor.

SK Hynix is one of several memory manufacturers, a number of which have previously faced class-action lawsuits over alleged price fixing.

Read on AppleInsider

Comments

It's only 128 MByte of DRAM, and it sounds like it will be in a package-on-package configuration, i.e., it sits on top of the R1 chip. Double the data rate of LPDDR5? Around 100 GByte/s per channel? An 8-fold increase in pins sounds like a custom HBM-type design. The RAM amount sounds like it is used exclusively by the R1 chip.

The M2 could be interesting too. I wonder if that is going to be a PoP package as well, i.e., package-on-package, where the LPDDR sits on top of the M2 SoC like they do for A-series SoCs.

The trick is: what display tech can they use that is cheaper than micro-OLED (is "OLED on Silicon" better?) while maintaining 23 million pixels?

End of 2025 perhaps.