Child safety advocacy group launches campaign against Apple

Heat Initiative, a child safety advocacy group, is launching a multimillion-dollar campaign against Apple to pressure the company into reinstating iCloud CSAM detection.

Apple's abandoned child protection feature

Heat Initiative said it would launch the campaign against Apple after pressing the tech giant on why it had abandoned plans for on-device and iCloud Child Sexual Abuse Material (CSAM) detection tools. The launch comes after Apple gave its most detailed response yet on why it backed off its plans, citing the need to uphold user privacy.

In response to Apple, Heat Initiative has officially launched its campaign website. The advocacy group issues a statement on the front page that reads, "Child sexual abuse is stored on iCloud. Apple allows it."

"Apple's landmark announcement to detect child sexual abuse images and videos in 2021 was silently rolled back, impacting the lives of children worldwide," the statement continues. "With every day that passes, there are kids suffering because of this inaction, which is why we're calling on Apple to deliver on their commitment."

The website presents alleged case studies detailing multiple instances in which iCloud was used to store sexual abuse materials, including photos, videos, and explicit messages.

It calls on Apple to "detect, report, and remove sexual abuse images and videos from iCloud," as well as "create a robust reporting mechanism for users to report child sexual abuse images and videos to Apple."

The group provides a copy of a letter it sent directly to Tim Cook, saying that it was "shocked and discouraged by your decision to reverse course and not institute" CSAM detection measures.

It also includes a button that allows visitors to send a prewritten email demanding action from Apple to the entire Apple executive team.

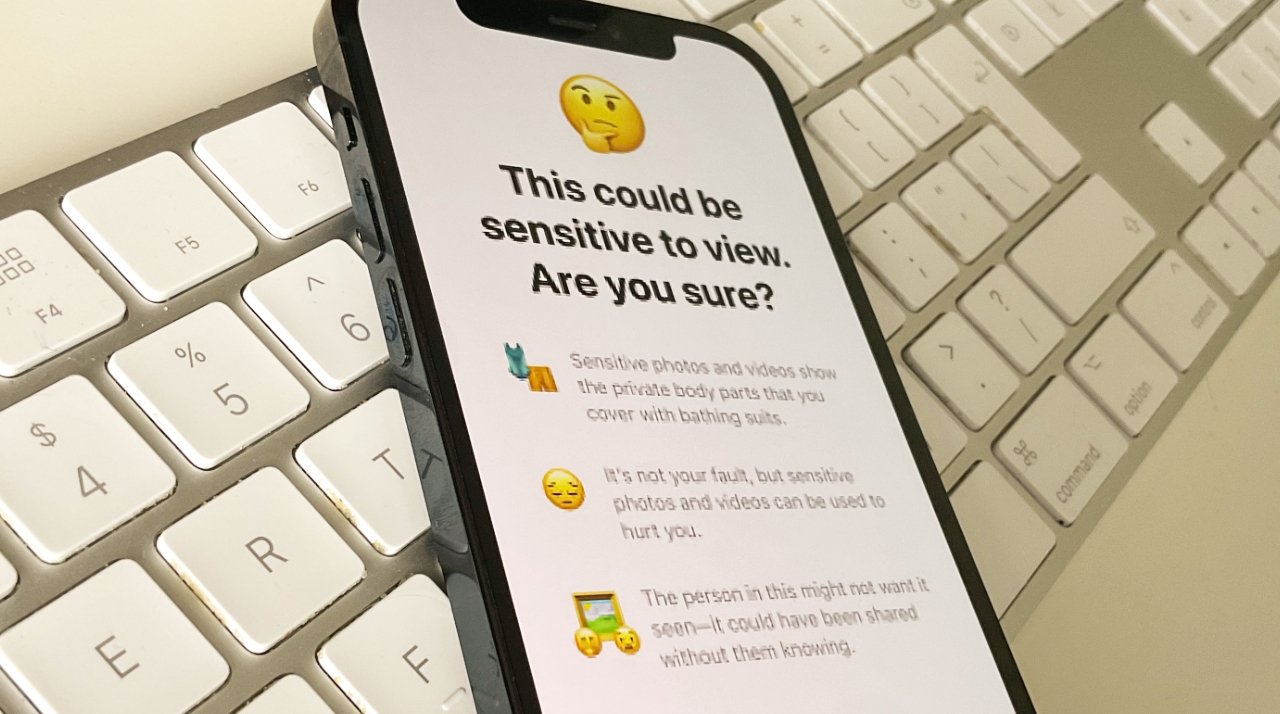

Child Sexual Abuse Material is an ongoing and severe concern that Apple attempted to address with on-device and iCloud detection tools. These controversial tools were ultimately abandoned in December 2022, leaving more controversy in their wake.

The Heat Initiative is not alone in its quest. As spotted by 9to5Mac, Christian Brothers Investment Services and Degroof Petercam are respectively filing and backing a shareholder resolution on the topic.

Letter to Tim Cook With Signatures by Mike Wuerthele on Scribd


Comments

Good luck with that. If Apple’s own engineers tried and failed, it’s very unlikely anyone else could, and certainly not this fringe outfit.

With that said, Googling "Heat Initiative" and "Sarah Gardner" yields pretty much nothing. It's like they don't even exist. Looks very shady to me. Not sure why Apple even gave such an invisible and seemingly non-existent organization the time of day. We need Apple Insider to sleuth out more details about this mysterious group that's demanding to remove YOUR privacy protections currently in place.

Also, these groups can bleat all they want, but are they going to ban Math classes in college? Anyone with a bit of math knowledge can make an encryption scheme (or simply grab one of a few hundred thousand apps out there) and encrypt the files themselves. They can put their illegal files into an encrypted archive, or into an encrypted disk image.

Would these groups like to try to ban encryption? What they are asking for goes far beyond a "slippery slope", it is willful stupidity about the way the world, and computers, work. There is no silver bullet that will stop the abuse and exploitation of children. It is a repugnant crime that has gone on throughout history.

Apple is not facilitating these crimes; people are using its services while they commit a crime.

Are we going to start ordering car companies to limit the speed a car can travel? I mean cars can go far faster than the speed limit, and it is super easy for the car to know the speed limit of the road it is on, so why don't we simply prevent cars from going faster than that? What about all the cameras in cars, should we also demand that those are networked and monitored by the car companies so that if someone injures someone with their car, they can be caught by the car company?

Never allow private companies to do the job of police. They aren't trained for it, and when they inevitably turn over a parent for taking a picture of their baby in the bath, they will get gutted by a lawsuit for defamation.

I agree that the complexities surrounding the issue mean that there is no solution that will make all sides happy but I will take issue with the crackpot extreme sensationalist approach of things like this:

"Child sexual abuse is stored on iCloud. Apple allows it"

No matter how concerned you may be with a given situation, you shouldn't be bandying claims around in that fashion.

However, unlike the other major cloud services Apple has made the bizarre decision to say that CSAM scanning shouldn't be done...despite scanning for other illegal content.

A. Content

B. Your Conduct

C. Removal of Content

Obviously Apple has to be scanning files in order to remove content. Just click on the link I provided and go to Section V and you will find highly detailed information.

So in other words, if Congress were to pass a law creating a federal agency to regulate CSAM standards, that agency would have full access, without a warrant, to the records of any company that falls under its charter as part of its regulatory examinations, and if it found anything it would automatically refer it to law enforcement.

I'll go with the 4th and say show me the search warrant. Otherwise, bug off.