How Apple is already using machine learning and AI in iOS

Apple may not be as flashy as other companies in adopting artificial intelligence features, nor does it have as much drama surrounding what it does. Still, the company already has a lot of smarts scattered throughout iOS and macOS. Here's where.

[Image: Apple's Siri]

Apple rarely goes out of its way to name-drop "artificial intelligence" or "AI," but the company isn't avoiding the technology. "Machine learning" has become Apple's catch-all term for its AI initiatives.

Apple uses artificial intelligence and machine learning in iOS and macOS in several noticeable ways.

What is machine learning?

It has been several years since Apple started using machine learning in iOS and other platforms. The first real use case was Apple's software keyboard on the iPhone.

Apple used predictive machine learning to work out which letter a user was trying to hit, which boosted typing accuracy. The algorithm also tried to predict which word the user would type next.
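Apple hasn't published how its keyboard prediction works, so the following is only a toy illustration of the general idea of next-word prediction. It is a minimal Python sketch of a bigram model, a far simpler technique than anything Apple ships, which counts which word tends to follow which and suggests the most frequent followers. The `BigramPredictor` class and its `learn` and `suggest` methods are hypothetical names made up for this example.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class BigramPredictor:
    """Hypothetical toy next-word predictor based on bigram counts.

    Illustrative only: this is NOT Apple's keyboard model, which is far
    more sophisticated.
    """

    def __init__(self) -> None:
        # following[w] counts every word observed immediately after w
        self.following: defaultdict[str, Counter] = defaultdict(Counter)

    def learn(self, text: str) -> None:
        # Record each (previous word, next word) pair in the text
        words = text.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.following[prev][nxt] += 1

    def suggest(self, prev_word: str, k: int = 3) -> list[str]:
        # Return up to k likely next words, most frequent first;
        # ties keep the order in which the words were first seen
        counts = self.following.get(prev_word.lower())
        if not counts:
            return []
        return [word for word, _ in counts.most_common(k)]


if __name__ == "__main__":
    predictor = BigramPredictor()
    predictor.learn("see you at the gym see you at lunch see you soon")
    print(predictor.suggest("you"))  # ['at', 'soon']
    print(predictor.suggest("see"))  # ['you']
```

Because the counts update every time `learn` is called, a model like this also picks up a user's own frequently typed words over time, which is the same basic intuition behind a keyboard that adapts to its owner.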

Machine learning, or ML, refers to systems that can learn and adapt without explicit instructions. It is often used to identify patterns in data and make predictions based on them.

Machine learning has become a popular subfield of artificial intelligence, and Apple has been building it into its products for several years.

Places with machine learning

In 2023, Apple is using machine learning in just about every nook and cranny of iOS. It is present in how users search for photos, interact with Siri, see suggestions for events, and much, much more.

Running machine learning on-device benefits the end user in terms of data security and privacy, because it allows Apple to keep important information on the device rather than relying on the cloud.

To accelerate machine learning and other key automated processes on the iPhone, Apple created the Neural Engine. It debuted in the A11 Bionic processor, where it helped with some camera functions as well as Face ID.

Siri

Siri isn't technically artificial intelligence, but it does rely on AI systems to function. Siri taps into an on-device deep neural network, or DNN, and machine learning to parse queries and offer responses.

[Image: Siri says hi]

Siri can handle various voice- and text-based queries, ranging from simple questions to controlling built-in apps. Users can ask Siri to play music, set a timer, check the weather, and much more.

TrueDepth camera and Face ID

Apple introduced the TrueDepth camera and Face ID with the launch of the iPhone X. The hardware system can project 30,000 infrared dots to create a depth map of the user's face. The dot projection is paired with a 2D infrared scan as well.

That information is stored on-device, and the iPhone uses machine learning and a deep neural network to analyze every scan of the user's face when they unlock their device.

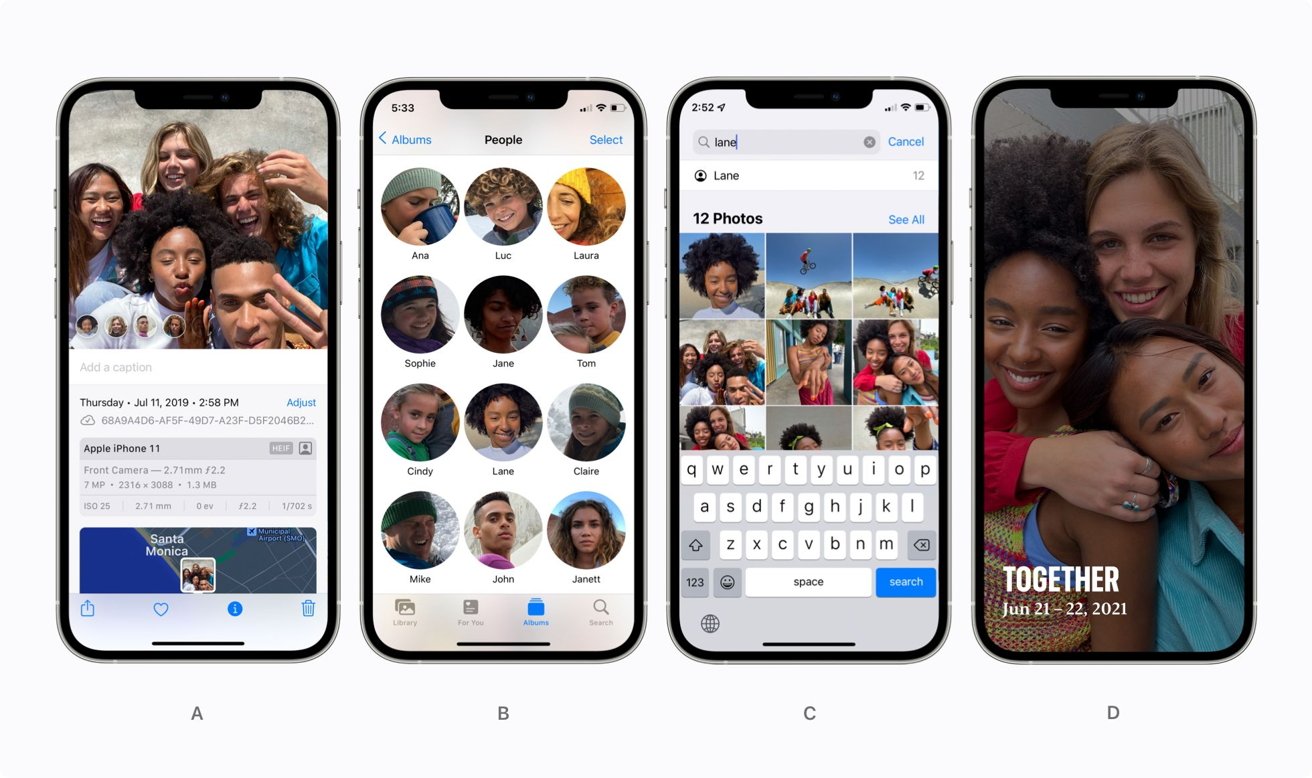

Photos

This goes beyond iOS, as the stock Photos app is available on macOS and iPadOS as well. This app uses several machine learning algorithms to help with key built-in features, including photo and video curation.

[Image: Apple's Photos app using machine learning]

Facial recognition in images is possible thanks to machine learning. The People album lets users search for identified people and gathers the images they appear in.

An on-device knowledge graph powered by machine learning can learn a person's frequently visited places, associated people, events, and more. It can use this gathered data to automatically create curated collections of photos and videos called "Memories."

The Camera app

Apple regularly works to improve the iPhone camera experience, and software and machine learning are part of how it meets that goal.

[Image: Apple's Deep Fusion optimizes for detail and low noise in photos]

The Neural Engine boosts the camera's capabilities with features like Deep Fusion, which launched with the iPhone 11 and is present in newer iPhones.

Deep Fusion is a type of neural image processing. When taking a photo, the camera captures a total of nine shots. There are two sets of four shots taken just before the shutter button is pressed, followed by one longer exposure shot when the button is pressed.

The machine learning process, powered by the Neural Engine, then kicks in to find the best possible shots. The result leans toward sharpness and color accuracy.

Portrait mode also uses machine learning. While higher-end iPhone models rely on hardware, such as additional cameras, to help separate the subject from the background, the 2020 iPhone SE relied solely on machine learning to produce a convincing portrait blur effect.

Calendar

Machine learning algorithms also help users automate everyday tasks. ML makes it possible to get smart suggestions for events the user might be interested in.

For instance, if someone sends an iMessage that includes a date, or even just suggests doing something, iOS can offer to add an event to the Calendar app. Adding it takes only a few taps, making the event easy to remember.

There are more machine learning-based features coming to iOS 17:

The stock keyboard and iOS 17

One of Apple's first uses of machine learning was the keyboard and autocorrect, and both are getting better with iOS 17. At WWDC 2023, Apple announced that the stock keyboard will now use a "transformer language model," significantly improving word prediction.

A transformer language model is a type of machine learning model, and here it improves predictive accuracy as the user types. The software keyboard also learns frequently typed words, including swear words.

The new Journal app and iOS 17

Apple introduced a brand-new Journal app when it announced iOS 17 at WWDC 2023. The app will let users reflect on past events and write as many journal entries as they want.

[Image: Apple's stock Journal app]

Apple is using machine learning to help inspire users as they add entries. These suggestions can be pulled from various sources, including the Photos app, recent activity, recent workouts, people, places, and more.

This feature is expected to arrive with the launch of iOS 17.1.

Apple will improve dictation and language translation with machine learning as well.

Notable mentions and beyond iOS

Machine learning is also present in watchOS with features that help track sleep, hand washing, heart health, and more.

As mentioned above, Apple has been using machine learning for years, which means the company has technically been using artificial intelligence for years.

People who think Apple is lagging behind Google and Microsoft are only considering ChatGPT and similar systems. In 2023, public perception of AI is dominated by Microsoft's AI-powered Bing and Google's Bard.

Apple is going to continue relying on machine learning for the foreseeable future, and it will find new ways to apply the technology to improve user-facing features.

It is also rumored that Apple is developing its own ChatGPT-like experience, which could give Siri a major boost at some point. In February 2023, Apple held a summit focused entirely on artificial intelligence, a clear sign it's not moving away from the technology.

[Image: Apple Car render]

Apple can build on systems it's introducing with iOS 17, like the transformer language model for autocorrect, and expand their functionality beyond the keyboard. Siri is just one area where Apple's continued work in machine learning could deliver user-facing value.

Apple's work in artificial intelligence is likely leading to the Apple Car. Whether or not the company actually releases a vehicle, the autonomous system designed for automobiles will need a brain.

Read on AppleInsider

Comments

Wonder when RobinHood finds a dead AI company to pump and dump.

The current "AI" fad of this era is, in my mind, analogous to what "bleeding edge" tech companies of the late 90s and early 00s thought the internet was going to become, with 3D VR-style, immersive "cyberspace" experiences, where our avatars walk around in rendered worlds (which can currently happen in gaming environments, but is not part of our normal everyday lives). Instead, our internet experiences are mediated by browsers on 2D screens. Not exactly Ready Player One, or Lawnmower Man, or Johnny Mnemonic.

The US government says this:

"The term ‘artificial intelligence’ means a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments"

That is why a ‘foldable phone’ will never see the light of day.

These companies were all founded by people with extensive experience in the segment prior to startup, and still failed. It's my belief that Apple would suffer the same fate if they "diWORSEified" into automaking. It could be significantly detrimental to the entire company if they don't cut their losses, from the enormous startup costs to the overhead and payroll costs. Better would be to license Apple's proprietary technologies to existing automakers, and charge them up the wazoo for it!

The hype about AI is overblown. When I first learned about decision trees (make a list of possible outcomes, assign them a probability and a cost, then calculate to find the "best" option) I was taught that the trick is to get the estimated probability as accurate as possible - that with experience your estimates will get more accurate. The current buzz is happening because people have figured out a way to analyse huge amounts of data and build a bunch of probability lookup tables in a short enough period of time to be feasible and at a low enough cost to be justifiable.

At the end of the day, it's all just computation. The algorithms are not too complicated but the steps are computationally intensive and to understand how it works you need to be comfortable with matrix multiplication and statistics (e.g. this YouTube Video). The thing I really struggle to wrap my head around is why the machine doesn't have to show how it arrived at an answer - all of my teachers were very particular about that part of the process.

The great futurist Isaac Asimov proposed the famous "Three Laws of Robotics," and countless writers and movie makers have demonstrated how ineffective those guardrails can be against an advanced artificial intelligence.

Search on the term "ouroboros". That creature is the apt metaphor for what you are describing, which is a logical impossibility masquerading as a logical paradox. There are certain self-referential systems or operations that are just plain impossible. For example, an ER doc suturing a laceration on his thigh is possible. But a heart transplant surgeon performing transplant surgery on himself is not.

Remember when Apple released the 5s and the world pooh-poohed it because “who needs a 64-bit processor in a phone?”.

What they failed to notice was the Camera app which could take 100 photos, process them, take the best parts of each photo, then stitch them together to make the best photos ever seen on a mobile device. All in the time it takes to go click.

That sort of processing needed a powerful processor like, say… a 64-bit processor to achieve.

Apple hasn’t looked back since.

The thing is, though, the definition of "optimum performance" was never decided by the software - it was simply following rules devised by the humans who set up the experiments. There is no agency, no creativity, just brute force iteration over every possible combination of pre-existing ideas. This is useful, but it is not intelligence.

The chance of true intelligence emerging from the approaches taken thus far is zero. All we are building is a mimicry apparatus with a dogged persistence and speed that is beyond human capacity.

The hype is completely unfounded.