Can Apple Vision Pro reinvent the computer, again?

As the universe counts down the clock to Apple's upcoming "reinvention" of augmented reality computing with its new Vision Pro early in the new year, it's useful to take a look at how successful the company has been at reinventing the computing platform in the past. It's happened more often than you might think.

Apple has, quite miraculously, delivered the technology world an extraordinary list of world-changing innovations. It has invented and perfected entirely new products and platforms that shifted what and how consumers buy, monumentally changing how the world works, and drastically altering the commercial and industrial lay of the land.

Please indulge me a moment to articulate how this all happened before, as a way to confidently predict whether it can ever happen again.

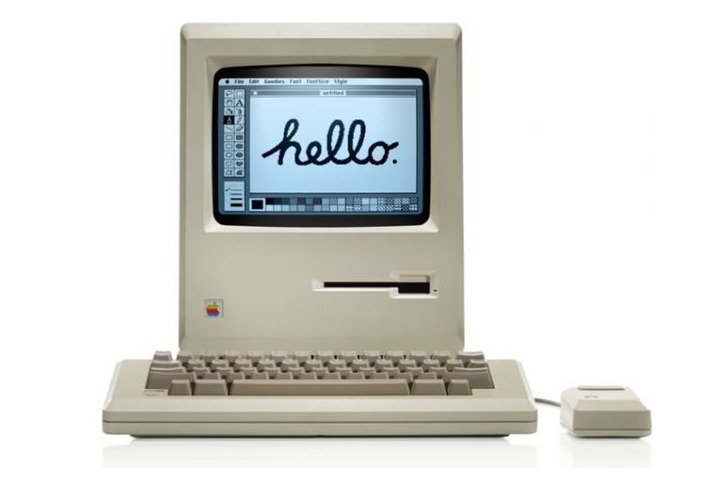

To prep yourself for Vision Pro, stop to appreciate the first Mac

The original Mac in 1984 not only changed-- across the entire industry-- how we worked with computers, but also changed how software was written. On that new Mac desktop, there was an under-appreciated innovation by Apple that decreed that all apps "must" share the same conceptual conventions, from copying and pasting to printing to saving documents.

You have to be quite advanced in age to even call to mind what a mess desktop computing was before the Mac, particularly in regard to third party computer applications -- or "computer programs," before Apple's Human Interface Guidelines writers coined the term that we today shorten to "app." The first Macintosh didn't just bring to market a new desktop appearance with a mouse and pointer; it also performed all the invisible heavy lifting to sort out how the desktop and its apps should function.

This was a sea change. Before the Mac, every function of every program on every computer needed to be learned independently-- they didn't work the same way. This benefited old app developers that made programs like Lotus and WordPerfect at the expense of everyone else, because it allowed them to create their own platforms of operation that served as difficult barriers of entry to competition.

Even Microsoft was prevented from a successful commercial launch of its own new Office apps across PCs until it first copied Apple's well thought out aspect of the consistent Mac desktop design and finally delivered this to PC users just over a decade later with Windows 95. And most of that time involved litigating its way into finding a way to appropriate Apple's work without consequence.

Microsoft took the value Apple had created and reused it to power sales of Office, eventually erasing any need for anyone to pay Apple for Mac hardware just to get the Mac-like value of its design consistency.

The original Mac certainly was for a time a moneymaker for Apple, but it ended up that Windows 95 had far more impact on the world, from users to the apps industry, because Microsoft didn't just copy Apple once. Microsoft kept adapting its Windows product line to find and attract more customers and more platform partners.

Apple lost out on the computing reinvention it had ignited because it got sidetracked in delivering things people weren't going to be paying for (like OS support for crafting fancy ligatures) while failing to control the underlying technologies powering its product and delivering the value that people would actually pay for.

By 1996, Apple was already in free fall. That's a quite rapid implosion following the broad commercial introduction of Windows 95 as a "cheaper Mac" just a year earlier.

That fall was so rapid and so dramatic that it impressed itself in the minds of PC journalists and thinkers for decades the way that the Great Depression and WWII and Vietnam and COVID-19 all seared the life experience of the generations of people who lived through them and forever scarred how they think, act, and understand the world.

Almost another decade later, Apple was still viewed by almost everyone in tech media as the "beleaguered company that had failed," which could never again catch up and, even if it did, would certainly have its crown taken away, probably again by Microsoft. All of the valuable lessons of the first Macintosh were largely erased and replaced with a pure fallacy that anything that seemed to be cheaper and perhaps more "open," in the way that the Windows PC was, would "always win."

Apple reinvented mobile computing

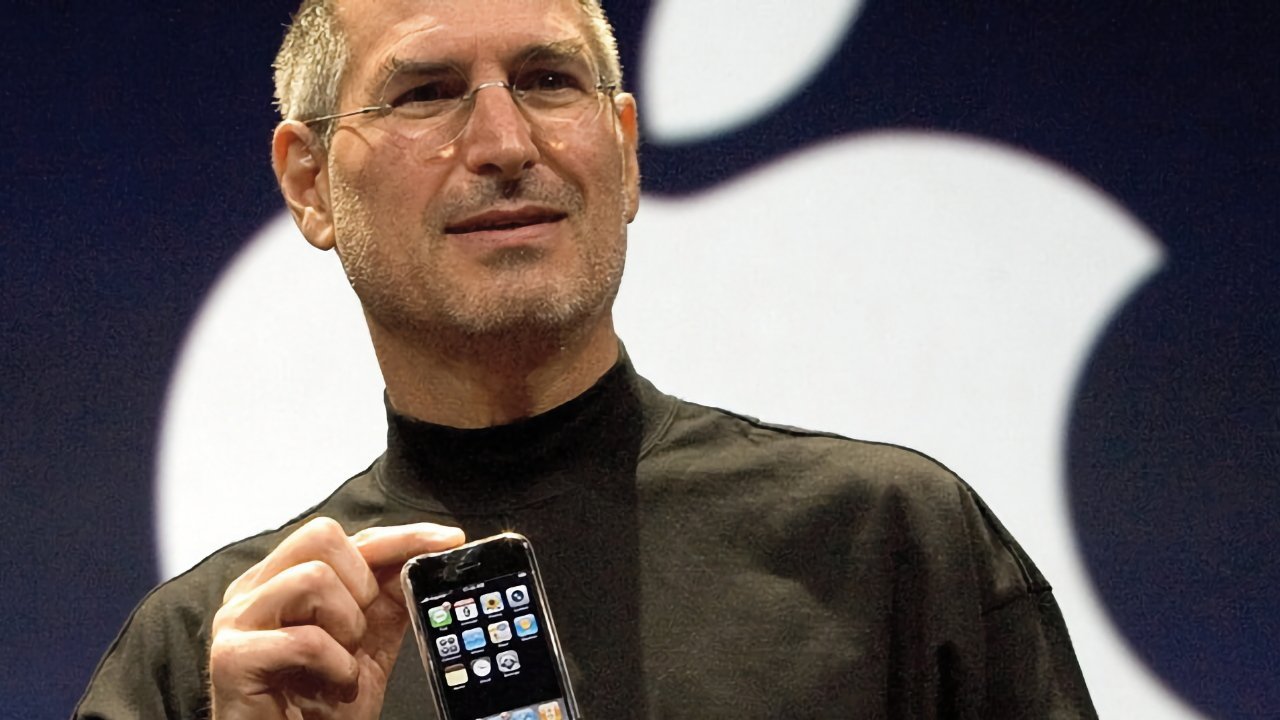

If you were alive and voraciously reading about consumer technology in 2006 (perhaps a larger part of my core audience, although still a slim subset of today's population) you can certainly recall the second "reinvention of computing" that Apple is broadly credited with. At that time, the new iPhone hadn't shipped yet, but the tech world was abuzz with rumors and analysis that suggested something big was around the corner, much the same as in today's prelude to Vision Pro.

Today, we are living in a giddy period of time comparable to early 2007, after Steve Jobs had shown off the new iPhone at the January Macworld Expo, but before it actually went on sale that summer.

We had seen marketing photos of it, we got to see the general outline of how it works, we had some understanding of the internals. But, we were also getting fed non-stop criticism and some full-on disbelief by parties representing the rival companies that would ultimately be crushed by its arrival.

Back then, it was difficult to be taken seriously in thinking that there was any chance that Apple would "walk right in" and suck all the oxygen away from the world leaders in mobile devices. That included the onetime giants Nokia, Motorola, RIM BlackBerry, Ericsson, Sony, Palm and so many more that had been the Lotus and WordPerfect of their era.

In large part, that perception was held because Apple had fallen ten years prior when Microsoft and all the hardware companies that were failing to make their own Mac competitors joined together for Windows 95 and ultimately snatched away Apple's desktop crown. Surely history would work out exactly the same this time, right? Yet as anyone who follows fashion or history knows, trends don't repeat, they rhyme.

When you're old enough to see cycles happen, you can adapt to them, just as Apple did.

Apple had already reinvented mobile computing

All those years ago, Apple's first iPhone in 2007 was greeted with some significant skepticism not only because tech writers had fresh in mind the previous vanquishing of Apple's Mac by Microsoft, but also because they had grown used to repeating the public relations narratives of another group of industry competitors: the iPod killers who never actually killed the iPod.

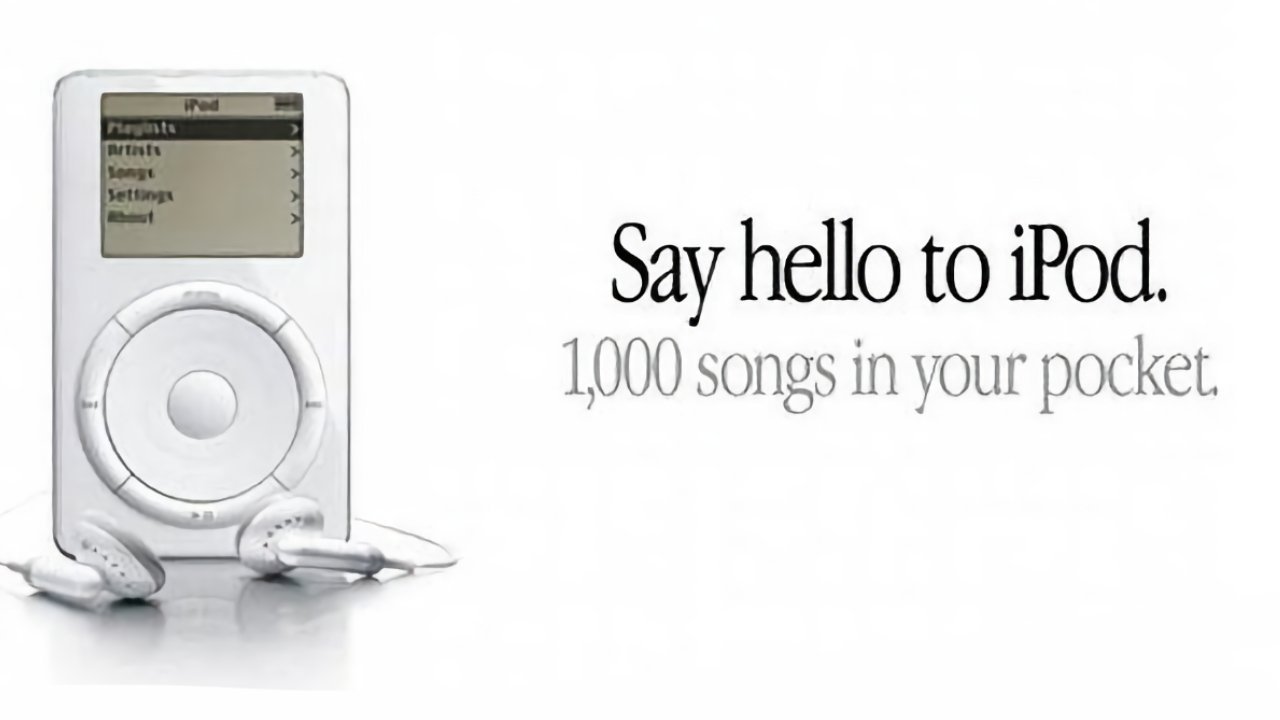

Apple's 2001-2006 iPod era had been a monumental, preliminary part of its sneaky introduction of iPhone that allowed the company to eventually (and then quite rapidly) obliterate every facet of the then-existing telecom industry and replace everything with its own design.

Some smartphone executives, like Palm's CEO, famously mused that Apple wouldn't "walk right in." Others, like Microsoft's Steve Ballmer, nervously laughed at how "expensive" iPhone was compared with what they'd been commercially struggling to offer to consumers during the pre-iPhone era.

Both also knew in the back of their minds something else had already occurred. Apple had just spent years "walking" into the consumer music industry with its unusually "expensive" iPod, and yet customers globally could barely contain themselves from buying millions of new ones every year.

Buyers were primed to demand an iPhone even before it went on sale because they'd already seen what a convenient, valuable mobile music (dare I say PDA?) device the iPod had been, consistently, year after year, following its incremental arrival as a luxuriously extravagant way to have "1000 songs in your pocket." That was something a CD Walkman couldn't do, and something that the digital music industry's heavyweights Sony and Microsoft couldn't similarly pull off despite their own unfettered access to the same core technologies Apple was using.

Apple didn't have some unique access to digital music storage, FireWire connectivity, music library software, or mobile device hardware construction that Sony and Microsoft and the other iPod killers lacked. Apple was the underdog.

Yet, Apple came out on top and turned the "MP3 player" industry upside down the same way that it had introduced the Mac, and the same way it would subsequently introduce iPhone.

For all three products, Apple first worked internally for a significant time determining how it could ship a functional device with some serious limitations, then dramatically introduced a real product while clearly communicating its value, as it worked with platform partners to help it bridge the things it couldn't do alone. It had also learned-- certainly with the return of Steve Jobs-- that every introduction also needed to be followed up with relentless advancement to keep it ahead of another Microsoft-ing.

A main reason why iPhone-era critics were so flabbergasted by the success of Apple's new phone is that they'd preached for so long that Apple was soon going to lose its iPod business that they believed it themselves as a core of their understanding of reality. But of course, competitors can't walk in and steal away your core competency when you simply transition it into another product that's even better and ever harder to copy.

Figure out what's next!

"I think if you do something and it turns out pretty good," Jobs once observed, "then you should go do something else wonderful, not dwell on it for too long. Just figure out what's next."

At first glance, that might not seem the most eloquently pithy thing a tech visionary has said.

Yet, Apple printed out those words and emblazoned them on its original campus in a prominent location outside its first theater, in a place not only easily seen by its employees, but also by investors and the tech media it would invite to see whatever it was figuring out to be next.

When Jobs returned to Apple, his first priority was to figure out what was next for the Mac, in the perilous years after Microsoft had ripped off all of its apparent value and passed that off as its own work. The pillaging of the Mac as a product and platform by Windows 95 had been devastating for Apple as a company, economically, intellectually, and reputationally.

Yet by returning to and refining the core principles of how to be an innovator, Apple not only reinvented the Mac using NeXT's software to establish a new platform that could generate revenue and get Apple back on the right track, but subsequently could branch out into music with iTunes and iPod, then into mobile phones with iPhone. Those were not simply "two new products." They were product lines that relentlessly advanced every year.

Just three years later, Apple took the technology and techniques that got it that far to introduce iPad, revolutionizing the "tablet market," as well as erasing the supposed markets imagined for netbooks, super cheap laptop PCs, Chromebooks, convertibles and folding screen phones, and so many more half-baked product concepts that flopped out into the market without being ready, without communicating clear value, without forging effective partnerships, and without being relentlessly advanced after their version 1.0.

The outside tech world has failed to grasp why Apple became so successful. Since 2010's iPad, the Microsofts and Androids of the world have desperately tried to launch volleys of fancy new smartphones and tablets with little success. Yet even as they have repeatedly failed to snatch away Apple's crown following the model of Windows 95 (and folks, that one-time intellectual shakedown occurred nearly 30 years ago), Apple has moved into building new branches.

The conspiracy to tell everyone that Apple isn't figuring out what's next

Since 2001, Apple not only vanquished every major MP3, mobile phone, and tablet maker with the arrival of iPod, iPhone and iPad across the first years of the Millennium, but has subsequently moved into wearables with Apple Watch, hearables with AirPods, whereables with AirTags, and televisisables with the new iOS-based Apple TV across the last several years.

I'll leave it as an exercise for the reader to figure out which terms I coined.

At the risk of sounding fan-boyish (a label I am not at all embarrassed or concerned about, because the shoe fits) I have to proclaim that Apple has pulverized any threat of competitive usurpers wrenching away its markets for music, phones, tablets, watches, earbuds, home devices and TV entertainment and running with an embezzled copy of its platforms and products. Not even Samsung's most eye-rolling copies have enjoyed long-lasting success.

This makes it really bizarre to try to understand why every contrarian blogger-analyst pundit in the technology world thinks that Apple "isn't innovating" but somehow the industry's commercial losers are, because they have something quirky and different, and perhaps it's cheap, or folds somehow, or can load up software in a way that makes it unsafe at any speed. Sometimes things are controversial, but sometimes it's just an ignorant conspiracy floated by people who want things to be different, either because they want to get paid to deliver some ideology or because they're so blinded by it they're volunteering for free.

We live in the best possible tech universe alternative

That being said, today's Apple is also not erecting the kind of ugly monopolistic empire of half-assery that Microsoft established in the miserable tech decade after it stole the valuable concepts of the Mac way back before most people today were even born or were at least aware of what a computer is. The U.S. median age is under 40 if you need to feel older today, but let's not dwell on that.

Today, if you want a smartphone, tablet, sliding or folding PC, headphones, watch, or TV dongle made by a company that isn't Apple, you have an exceptional range of suitable products that will delight you and which can be used without concern that Apple will somehow deprive you of access to media, apps, or services the way that Microsoft deliberately leveraged its Windows monopoly to force professionals to buy a PC and pay for Windows licensing taxes even if they just wanted to run a Linux command line.

Sure there are debatable criticisms about whether Apple should be compelled to write and maintain its own software for your oddball platform choice, or whether it should allow you the Owner to swap out a broken OEM component for some counterfeit part you sourced from the People's Republic of China at a discount, or whether Apple should be forced to build and maintain non-vetted App Store access to competitors who don't want to have to support the safe and reliable platforms they use and their customers have chosen, or whether Apple should have to implement some tight integration between its messaging platform and the competitor who claimed for decades to be "better at software" and have "more customers choosing its alternative" with a flurry of failed messaging platform flops that it couldn't deliver on its own.

But despite these ostensibly controversial beefs, the reality -- or perhaps "spectrum of indisputable facts" -- details that we are living in the best possible alternative universe of technology, where there's a great company that delivers the best stuff that's all quite expensive but worth it. And yet, there is also vibrant and significant competition allowing for fans of another variety to own a Pixel paired with a round watch and busy-box folding screen PC with a stylus marker and USB-A ports if they really want to swing that way.

And if your text bubbles are green as a consequence, deal with it. It's nothing like being forced to use Microsoft DRM or a Windows web browser, or dreadful smartphones with buttons, or be forced to use Cingular, or be tracked with surveillance advertising, or slowed to a crawl by "Defender" malware scanning.

Will the best possible alternative reality deliver the best possible immersive reality?

Now perhaps you've already made the connection, but the crux of the rhetorical question I wanted to answer here unequivocally is: what if the reinvention of computing that we anticipate to be just around the corner with Vision Pro has already been delivered, at least in principle?

What if Apple already demonstrated that it can reinvent computing and we just haven't fully appreciated it yet, because we're like frogs in the incrementally hot water waiting for things to get dangerous?

Just as incessant waves of new iPods set up the world for the revolution of iPhone-- from its core technologies to its operational handling of massive-scale component purchases orchestrated by a younger, almost unknown at the time Tim Cook, to its internal software helmed by Scott Forstall, and music platform run by Eddy Cue, its industrial design championed by Jony Ive, and its retail store development launched by Ron Johnson -- tomorrow's Vision Pro sits on the same technological foundations that have incrementally revolutionized Apple's current Macs.

The Mac just hasn't gotten much credit for re-revolutionizing the PC world and setting up Apple to obliterate computing done immersively rather than on a display screen. Yet much the same way that iPod prepared the universe for iPhone, today's Mac has shifted from being just a customized Intel PC running its own software not so long ago, to being a completely custom device with more in common with today's iOS than the original Macintosh or a Windows 95 sort of thing.

Key to this has been software transitions recently floated which uniquely bring the vastly larger platform of iOS and iPad apps to the Mac, facilitating internal cross platform developments such as Home, News, Maps, Music, TV, Podcasts and so on, on the Mac. Additionally, Apple Silicon incrementally brought tight firmware integration into MacBooks with chips that first handled more types of media encoding and authentication and security tasks, then launched to full Intel-free Application Processor SoCs (those delicious M chips) that radically changed Macs into instantly booting, snappily launching, battery sipping, super advanced machines that have left PC makers as flatfooted as yesterday's smartphone makers in 2007 or tablet manufacturers in 2010.

And just as back then, Apple's competitors and their PR staffs with their tightly integrated tech journalists keep telling us that really soon now, perhaps right around the corner, their own PCs will also have advanced "ARM chips" with integrated GPUs and AI engines that don't require huge fans and air ducts and howling vents. Someday real soon Windows will deliver Voice First or some kind of AI that will do everything for you.

Maybe it will even recognize when you've been infected with malware -- or maybe it will skip the middleman and take advantage of you with tracking and surveillance advertising in order to make itself more affordable.

Technology doesn't come first!

Tech bloggers: stop begging for titillating innovation over titivating incremental improvement! And stop asking for Apple to shoehorn the latest tech fads into its products. Jobs himself detailed why functionality came before technology.

"One of the things I've always found is that you've got to start with the customer experience and work backwards to the technology," Jobs told developers as he returned to Apple. "You can't start with the technology and try to figure out where you're going to sell it. And as we have tried to come up with a strategy and a vision for Apple, it started with 'What incredible benefits can we give to the customer? Where can we take the customer?' Not starting with 'Let's sit down with the engineers and figure out what awesome technology we have and how we're going to market that.'"

As someone who had to become an expert in decrypting the false promises of the technology industry, I can bypass having to explain why all of this is meritless, simply by pointing out how rapidly Apple has been incrementally enhancing all of its platforms, and how it's now poised to deliver the first step in immersive computing while delivering the world's most advanced computing platform that already works today.

I've been scoffed at for years for pointing out that Apple hasn't really had any real threat from competition for many years, and in hindsight it's hard to argue that it has. Yet there are enough efforts at competition-- billions thrown away from Google, Microsoft, Samsung and China's state makers-- all trying to be Apple, that it effectively keeps the company from getting too comfortably numb to the real risk of complacency.

The biggest actual risk to Apple today is that the world might collapse into total chaos and a new era of medieval war, superstition and environmental catastrophe. But short of that, we're in the best timeline we can be in for the launch of Vision Pro. And the best thing for Apple: it's so safe and functional right now that it doesn't need a lucky Hail Mary pass to save it from apparent economic disaster the way it did in 1996 or 2001 or 2006.

Vision Pro can incrementally slip out like a delicately birthed helpless child and take years to develop into an AR warrior. We can all leisurely bathe like frogs in its warming waters until it lights up and takes over the world and cooks off any existential competition to delivering a computing world that puts us in the center.

Because Apple is already really adept at doing that. When you boot up your M-series Mac you're already nearly there.

Comments

https://www.osnews.com/story/24882/the-history-of-app-and-the-demise-of-the-programmer/

I’m not familiar with the first Apple computers, maybe someone else is.

Apple certainly started using the term “app” with the iPhone and iPod Touch when they introduced the App Store.

What is missing in the editorial is that Apple is the one trying to be like MS and Google, with datacenters and AI.

Apple AI server purchases could hit $5B over two years (appleinsider.com)

Even the “Application Menu” (called this in the Mac documentation) existed in System 4.2 in 1987 and Applications have been called Applications in all Macs inside the OS GUI itself.

Even the BBC Micro and the Unix/SCO Unix systems I used (Windows 3.1 era) all called them Programs in my experience. Even the Sinclair, Commodore & Amiga systems of the 80s called them Programs.

There may be some systems that called them Applications before the Mac, but I never came across any.

The first instance I know of for the .app extension is in NeXTSTEP. The ".app" extension was used for NeXTSTEP application bundles in the late 80s. This is the primary reason why macOS, iOS, etc, applications are called "app" today, imo.

Operating systems prior to this were command line style operating systems that used extensions, and ".exe" was the dominant extension, if not the only extension, used for programs. Obviously true for MS-DOS, and also true of Atari and Amiga. Apple's DOS did not use extensions as I recall.

Mac OS X is NeXTSTEP 5, and you can call all OS X derivatives (iOS, visionOS) successors to NeXTSTEP. Basically anything using XNU (Mach+BSD) and Objective-C frameworks. Once Swift becomes the dominant language for Apple's operating systems, I think you can probably say the era of NeXTSTEP is over, and while what replaces it still uses Mach+BSD, the change to Swift represents a rather large change. You can still run original NeXTSTEP applications on macOS or iOS with little effort. Once Obj-C is deprecated, it will be the end.

The term "killer application" or "killer app" became popular sometime in the late 80s. Maybe 1990 or 1991, but definitely somewhere around there. You can hear people talking about VisiCalc as a killer app in Triumph of the Nerds, and it was probably used in the Accidental Empires book in 1992, whenever it was published.

Yoda?

Yes, there are many. I don't question that Apple popularized the term with consumers, but to claim they "coined the term" as the article does is blatantly false.