How to use the Microsoft Copilot app on macOS

AI copilots promise to ease and speed up tasks for users, making work easier in the long run. Here's how to use Microsoft's Copilot app on macOS.

Microsoft Copilot

AI chatbots give you the ability to converse with a variety of AI systems to get answers to your questions. Some chatbots are specialized, and some are generalized.

Copilot from Microsoft is a general-purpose chatbot that leverages Microsoft's Bing AI-powered search to provide you with answers to your questions.

Chatbots get their apparent expertise from Large Language Models, or LLMs: AI models trained on very large sets of text data spanning many subjects.
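Under the hood, "predicting" an answer means the model has learned from its training text which words tend to follow which. As a deliberately tiny illustration (our own toy, not how Copilot or GPT-4 actually work, since those use large neural networks), here is a bigram model in Python that counts word pairs in a made-up training text and then predicts the most likely next word:

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    followers: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = model.get(word.lower())  # .get() does not create entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy training text.
corpus = [
    "copilot answers your questions",
    "copilot answers quickly",
    "copilot writes code",
]
model = train_bigrams(corpus)
print(predict_next(model, "Copilot"))  # answers
```

"answers" wins because it follows "copilot" twice in the training text and "writes" only once. Real LLMs make the same kind of statistical guess, but over far more context than a single previous word.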

The first commercially available chatbot was Japan's BeBot - a Tokyo Station navigation assistant which we covered previously.

Microsoft's Copilot is similar, but it's more general-purpose.

With a chatbot, you type your question at its prompt, either in a chatbot app or on the web, press Enter, and it responds with an answer it predicts will fit your question.

You can then further interrogate the chatbot for more info. Conversing with a chatbot is almost like speaking with a human.

Additional features

Copilot can do much more than just chat, however, as it also features Generative AI or GenAI for creative work. You can ask it to write computer code, create images or music, organize information for you, look up travel and shopping info, or even tell you jokes.

GenAI saves you time by doing small creative and data tasks for you quickly so you don't have to.

It also works with Microsoft 365 apps such as Word, Excel, PowerPoint, and others, so you can use its AI features inside those apps.

Getting started

To use Copilot and read about it on the web, go to copilot.microsoft.com. If you choose to sign in with your Microsoft account, Copilot will store your previous sessions and learn from them so that it works better in future sessions.

If you don't want to use Safari, you can download and use the Mac version of the Microsoft Edge browser and run Copilot from there.

You can also learn more about how Copilot works and how to use it on the Microsoft Copilot support page.

There are also Windows versions of Copilot, and Microsoft now bundles Copilot into most versions of Windows 10 and 11, as well as into the Edge browser for Windows.

The web-based version of Copilot is simple and has a chat prompt at the bottom of the page along with several buttons for GenAI tasks and settings. If you give Copilot access to your computer's microphone, you can also ask it questions using voice control so you don't have to type your prompts.

You can click the New Chat button in the web-based version of Copilot to clear your current chat conversation and start over.
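Both follow-up questions and the New Chat button come down to how much of the conversation the chatbot gets to see. Here is a minimal Python sketch of that idea, assuming a hypothetical `reply_fn` in place of the real Copilot service, whose internals Microsoft doesn't document here: each question is sent along with the earlier turns, and starting a new chat simply empties that history.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# A message is a (role, text) pair, e.g. ("user", "Find flights to Tokyo").
Message = Tuple[str, str]


@dataclass
class ChatSession:
    # Hypothetical stand-in for the service that writes replies; it receives
    # the whole conversation so far, not just the latest prompt.
    reply_fn: Callable[[List[Message]], str]
    history: List[Message] = field(default_factory=list)

    def ask(self, prompt: str) -> str:
        self.history.append(("user", prompt))
        answer = self.reply_fn(self.history)
        self.history.append(("assistant", answer))
        return answer

    def new_chat(self) -> None:
        """Like the New Chat button: forget every earlier turn."""
        self.history.clear()


def echo_with_context(history: List[Message]) -> str:
    """Toy reply function that reports how many questions it can see."""
    user_turns = sum(1 for role, _ in history if role == "user")
    return f"(seen {user_turns} question(s)) {history[-1][1]}"


chat = ChatSession(reply_fn=echo_with_context)
chat.ask("Find flights to Tokyo")
print(chat.ask("Only morning ones"))  # (seen 2 question(s)) Only morning ones
chat.new_chat()
print(chat.ask("Tell me a joke"))     # (seen 1 question(s)) Tell me a joke
```

The follow-up "Only morning ones" only makes sense because the earlier question travels with it; after `new_chat()`, that context is gone.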

Installing Copilot on your Mac

There are two ways you can install Copilot on your Mac:

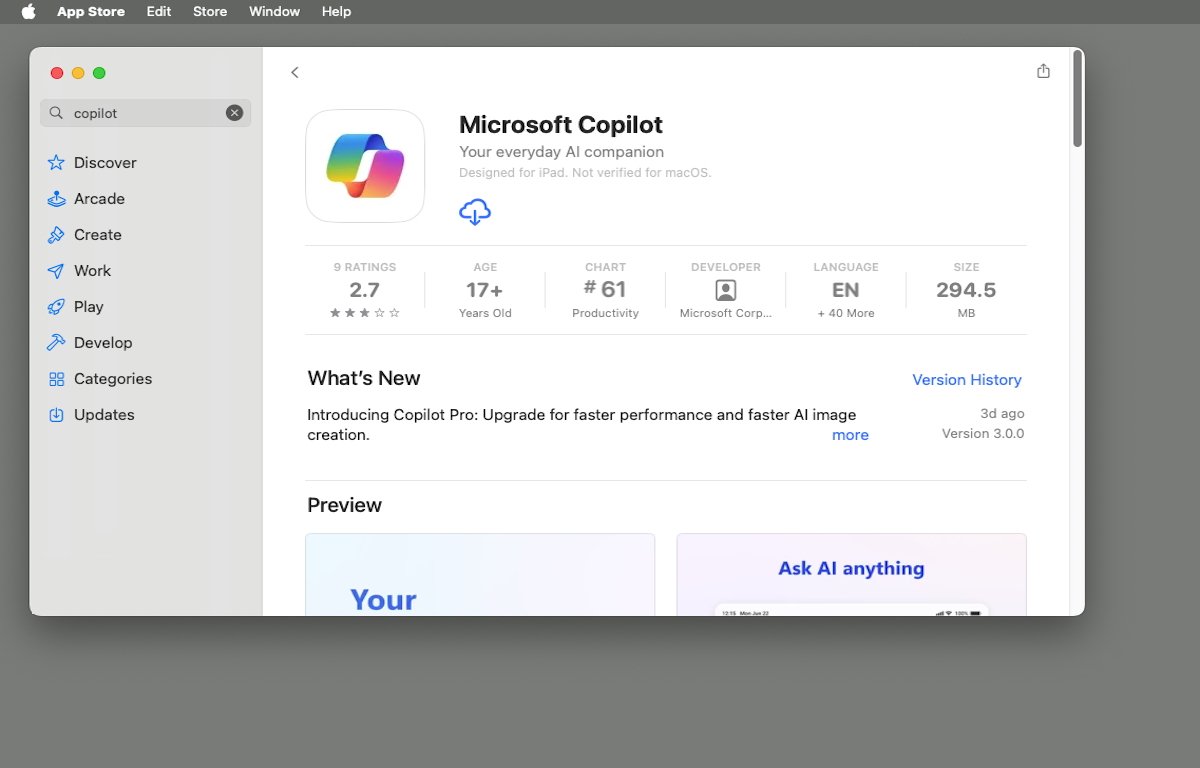

- Download it from the Mac App Store and run it in the Finder.

- Go to copilot.microsoft.com in Safari, click the Share button in the upper-right corner of your Safari window, then select Add to Dock from the share menu.

If you install Copilot from the share menu, it simply adds a web shortcut to the Microsoft Copilot page to your Mac's Dock, so that clicking it takes you to the Copilot web page.

If you download Copilot from the Mac App Store, you get a standalone Mac app. You can add it to your Dock by dragging it there from the Finder, or by double-clicking it to launch it and then choosing Options > Keep in Dock from its Dock icon. We'll get to the standalone Mac version in a moment.

Copilot on Apple's Mac App Store.

Pricing for Copilot, Pro, and 365

The basic Copilot is free, but you can get a $20-per-month Copilot Pro subscription from Microsoft, which includes:

- Priority access to GPT-4 and GPT-4 Turbo for faster performance at peak times

- Copilot in Microsoft 365 apps if you have a 365 subscription

- Faster AI image creation in Copilot Designer, with 100 boosts per day

For Microsoft 365 Business Premium and Business Standard subscribers, companies can purchase Copilot for Microsoft 365 for use in the 365 business apps for $30 per seat per month. However, once you combine the cost of the Business 365 subscription with the Copilot add-on, it may be cheaper to go with the consumer Microsoft 365 Personal and Family subscriptions, which cost $69.99 and $99.99 per year respectively.
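To compare these plans, it helps to put the monthly prices on a yearly footing. Here is a quick Python sketch using the article's figures. The five-seat team is a hypothetical example, and the cost of the underlying 365 Business subscription (not quoted here) would come on top of the business figure.

```python
# Monthly prices quoted in this article (US dollars).
COPILOT_PRO_PER_USER = 20.00
COPILOT_BUSINESS_PER_SEAT = 30.00


def annual_cost(monthly_price: float, seats: int = 1) -> float:
    """Yearly cost of a monthly per-user or per-seat price, rounded to cents."""
    return round(monthly_price * 12 * seats, 2)


team_size = 5  # hypothetical team
print(annual_cost(COPILOT_PRO_PER_USER))                        # 240.0
print(annual_cost(COPILOT_BUSINESS_PER_SEAT, seats=team_size))  # 1800.0
```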

Which plan you choose depends on which apps you use and which levels of Copilot you want for each user or worker.

There are also higher Enterprise levels. For more info on Pro and Enterprise see Bringing the full power of Copilot to more people and businesses.

The Microsoft 365 webpage also has more info on Copilot and AI.

A free AI-powered Reading Coach is available to students on Microsoft's website.

Copilot Studio

If you want to build your own custom Copilots, Microsoft has released a new tool called Microsoft Copilot Studio. Using Studio, you can design and assemble your own Copilots with custom prompts, models, topics, and agents.

With Studio, you can design Copilots tailored to specific users, guide user conversations, and decide what information the Bing backend will use to determine and provide answers to Copilot interactions.
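Copilot Studio is configured in its own visual designer, not in code, but the idea of a topic that steers a conversation can be sketched. Below is a hypothetical Python toy in which each topic has example trigger phrases, and an incoming message is routed to the topic whose phrases share the most words with it. The simple word-overlap matching is our assumption for illustration only; it is not how Studio actually matches topics.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Topic:
    name: str
    trigger_phrases: List[str]  # example phrases that should start this topic
    response: str               # what the bot says when the topic fires


def _words(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words, dropping punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def route(message: str, topics: List[Topic]) -> Optional[Topic]:
    """Return the topic whose best trigger phrase shares the most words
    with the message, or None if no trigger phrase overlaps at all."""
    words = _words(message)
    best: Optional[Topic] = None
    best_score = 0
    for topic in topics:
        score = max((len(words & _words(p)) for p in topic.trigger_phrases), default=0)
        if score > best_score:
            best, best_score = topic, score
    return best


# Hypothetical topics for a store's support bot.
topics = [
    Topic("Store hours", ["what are your opening hours", "when are you open"], "We're open 9 to 5."),
    Topic("Returns", ["how do I return an item", "refund policy"], "Returns are accepted within 30 days."),
]
print(route("When is the store open?", topics).name)  # Store hours
print(route("I want a refund", topics).name)          # Returns
print(route("Tell me a joke", topics))                # None
```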

There is even a GenAI feature in Copilot Studio which leverages AI to help build custom Copilots for you.

The Copilot app for macOS

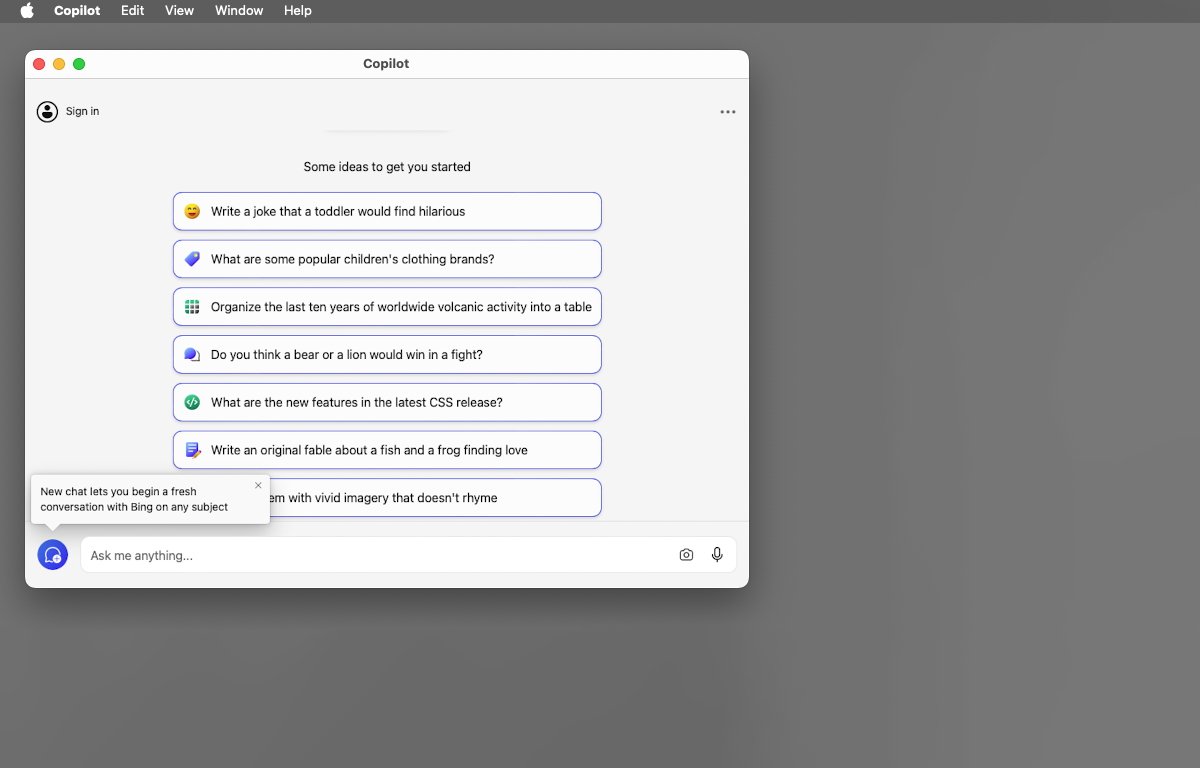

If you download Copilot from the Apple Mac App Store, you get a standalone app. Double-click it in the Finder to run it:

First run of Copilot on macOS.

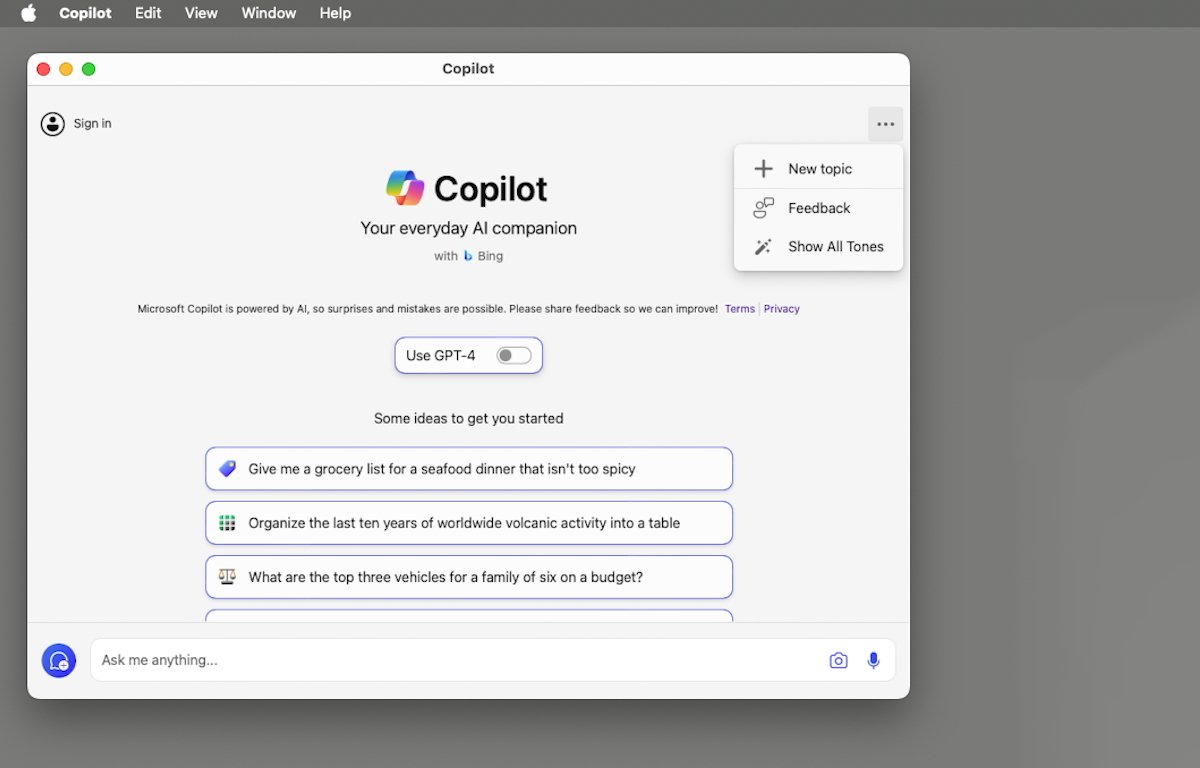

On the first run, click Continue, and you'll arrive at the main interface, which looks a lot like the Copilot web interface.

In the top left corner is a sign-in button, and in the top-right corner is a button with a menu that contains items for creating a new topic and clearing the current conversation.

The main interface also has a toggle switch which allows you to turn GPT-4 on and off.

Copilot's main window on macOS.

Once the app is initialized, the main window provides a chat prompt at the bottom with a text field, microphone and image buttons, and several predefined buttons that you can click to insert prompts.

To chat, type or speak your prompt into the text field at the bottom of the window and hit the Return key on your Mac's keyboard. Copilot will connect to the Microsoft Bing search backend and reply (usually within seconds) with a response.

Type or speak into the text prompt field, or click a predefined button.

If you want to halt Copilot's responses before it finishes, click the Stop responding button in the main window.
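Copilot's replies appear progressively, and stopping a reply keeps whatever text has already arrived. Here is a hypothetical Python sketch of that pattern, assuming the reply arrives in chunks: a generator stands in for the streamed reply, and a `should_stop` callback stands in for the Stop responding button. None of these names come from Copilot itself.

```python
from typing import Callable, Iterable, Iterator


def fake_stream(text: str) -> Iterator[str]:
    """Hypothetical stand-in for a reply that arrives one word at a time."""
    for word in text.split():
        yield word + " "


def collect_reply(chunks: Iterable[str], should_stop: Callable[[str], bool]) -> str:
    """Append chunks as they arrive; stop early if should_stop(partial) is True."""
    partial = ""
    for chunk in chunks:
        partial += chunk
        if should_stop(partial):
            break  # like clicking Stop responding: keep what has arrived so far
    return partial.strip()


reply = collect_reply(
    fake_stream("Here are three ideas for a weekend trip to the coast"),
    should_stop=lambda text: len(text.split()) >= 4,  # simulate a click after 4 words
)
print(reply)  # Here are three ideas
```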

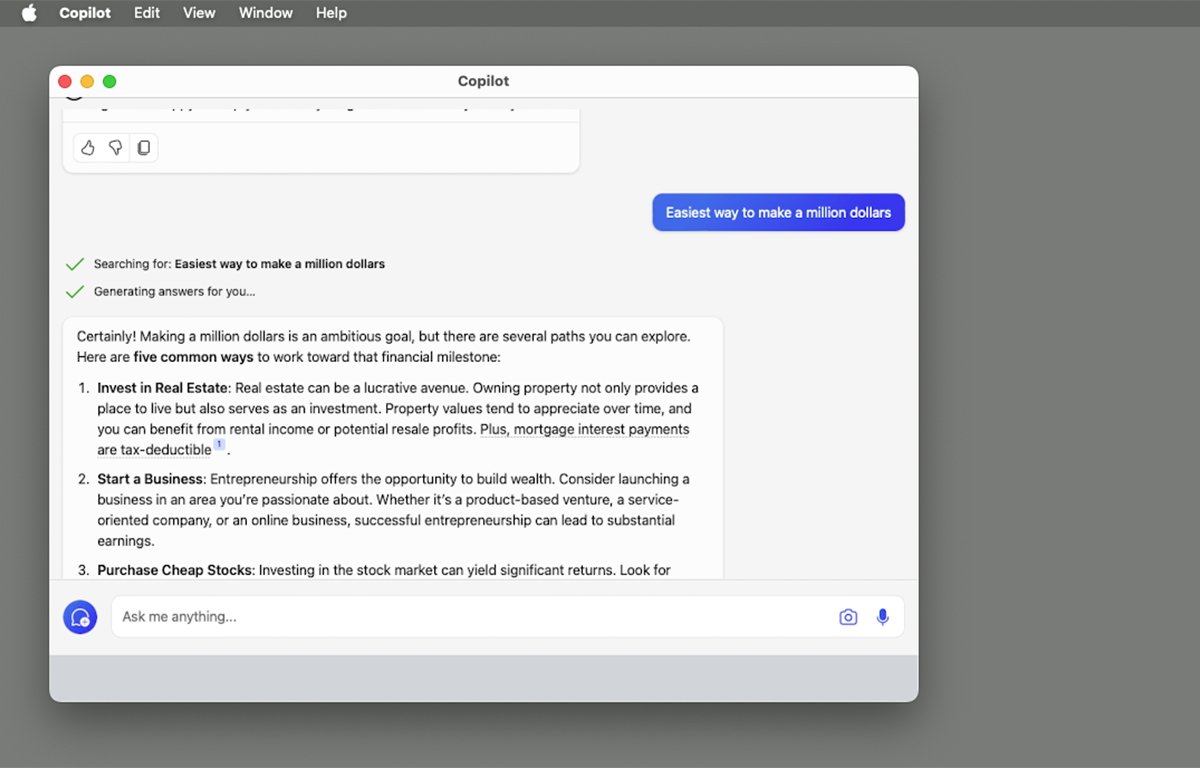

If your prompt is a question, Copilot will usually respond with a summary list of answers, or with a single answer if your question is something simple, like directions to a location or a basic fact.

If you are signed in to your Microsoft account, Copilot provides more detailed answers - and you can ask it follow-up questions to refine the answers it provides.

Copilot responds with lists of answers or single answers for simple prompts.

Chatbots and AI are still very new and we are just beginning to see what they can do. As AI learns, it gets better and faster, and as the worldwide base of knowledge for LLMs grows, chatbots and GenAI will become more and more useful, and more accurate in the future.

AI has the potential to revolutionize the way we work and interact with computers, and it will save us countless hours of time on mundane work like looking things up or obtaining simple information which is already available around the world.

In our next chatbot installment, we'll take a look at Copilot for iOS and iPadOS.

Read on AppleInsider

Comments

Save yourself and the planet some energy.

If it is the latter I'd simply suggest you give it a try and see if it can be of use to you.

I'll give you a practical example of a real world case that I've explained here before.

I was preparing someone for an important interview (in English) for a UX position. We are talking about a candidate who is considered to be at the top of the league.

Due to time constraints the candidate asked ChatGPT to draw up a list of interview questions and possible replies. Then the system was asked to go into more detail on both the questions and replies.

I was presented with the finished results and was blown away. It would have taken me hours to draw up comparable results.

To a native speaker some of the language would have sounded a bit too 'enthusiastic' but I was able to tone it down on one reading. To a non-native speaker, I doubt they would have noticed.

We have spent months in the admission process for a paper in a specialist journal and the interview process was just something that popped up along the way.

We decided the paper was more important given the timeframes involved, but we couldn't turn the interview down. ChatGPT saved us several hours of time and even more in logistics (for some things, face-to-face communication is still the best option).

This is one small example but I'm sure most users are finding it more useful than not.

https://fortune.com/crypto/2023/05/11/new-memecoin-77-million-market-cap-gpt-4/

It can write code templates for most things, including complex examples. There's a demo site here (responses are limited because it's free, so it needs refreshing):

https://gpt4free.io/chat/

It can be useful for getting started with a new development language. It can be asked the same type of questions that get asked on StackOverflow.

Example questions:

How do I set up a Rust development environment on Mac?

Write a Rust application to draw a graphic.

Write a JavaScript app to get the current weather using a web API.

Build a database schema in SQLite for a book collection.
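As an illustration of the kind of answer the last question might get, here is a small book-collection schema created with Python's built-in sqlite3 module. The table and column names are our own choices, not output from any particular chatbot.

```python
import sqlite3
from typing import Optional

SCHEMA = """
CREATE TABLE IF NOT EXISTS authors (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE IF NOT EXISTS books (
    id        INTEGER PRIMARY KEY,
    title     TEXT NOT NULL,
    isbn      TEXT UNIQUE,          -- optional, but no two books may share one
    year      INTEGER,
    author_id INTEGER NOT NULL REFERENCES authors(id)
);
CREATE INDEX IF NOT EXISTS books_author_idx ON books(author_id);
"""


def open_collection(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the tables exist."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces FKs when asked
    conn.executescript(SCHEMA)
    return conn


def add_book(conn: sqlite3.Connection, title: str, author: str,
             isbn: Optional[str] = None, year: Optional[int] = None) -> int:
    """Insert a book, creating its author row on first use; return the book id."""
    conn.execute("INSERT OR IGNORE INTO authors (name) VALUES (?)", (author,))
    (author_id,) = conn.execute(
        "SELECT id FROM authors WHERE name = ?", (author,)
    ).fetchone()
    cur = conn.execute(
        "INSERT INTO books (title, isbn, year, author_id) VALUES (?, ?, ?, ?)",
        (title, isbn, year, author_id),
    )
    conn.commit()
    return cur.lastrowid


conn = open_collection()
add_book(conn, "Dune", "Frank Herbert", year=1965)
add_book(conn, "Children of Dune", "Frank Herbert", year=1976)
titles = conn.execute(
    "SELECT b.title FROM books AS b JOIN authors AS a ON a.id = b.author_id "
    "WHERE a.name = ? ORDER BY b.year",
    ("Frank Herbert",),
).fetchall()
print(titles)  # [('Dune',), ('Children of Dune',)]
```

To keep the sketch short, each book has a single author here. A collection with co-authored books would need a separate join table linking books to authors.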

Eventually, these AI tools will be able to build full apps and games. Someone will be able to ask them to build a game like Call of Duty Mobile in Unity Engine and it will build a game foundation that would otherwise take months of work.

This will be useful for working with Swift. The Swift compiler frequently points out errors but isn't good at saying what the best practices are. The AI can also migrate code from another language.

Apple doesn't have to make a chat app but Siri would be much more useful if it did things like this. People experiment with AppleScript and Shortcuts and these could be built with voice descriptions.

Siri, build a Shortcut that gives me directions to my next Calendar event and sends a text if I'm late.

Siri, give me a list of my unread emails in order of importance.

If all you want is a list of possible questions, there are a million articles with those.

As an enterprise software engineer for Fortune 100s, I can tell you that while code generators may be useful for syntax or boilerplate, they will not be building you the types of solutions we build. Why? Coding is the easy part! Coding is NOT engineering. Engineering is solving hard problems based on constraints and compromise. The details are unique and depend entirely on your business domain’s particulars.

It’s like saying generating legal boilerplate will replace lawyers. Nope! The letters are the easy part. It’s the thought that goes into wrestling with law and legal theory that makes lawyers valuable, not the Word docs.

I think this is hard to understand for those outside engineering. No generator is going to replace the minds of people solving hard, unique problems, like John Carmack.

That’s how good it was.

The personal side is only part of an interview.

The candidate's English is very good but some 'interview polish' is always a good thing. AI took all the biggest pain points away and more importantly, it saved a huge amount of time and effort that could be re-directed elsewhere.

At the end of the day here, what really counts is your portfolio of previous work and recommendations but the interview part is something you have to go through.

Even though you can't see any benefit, I can't help but wonder what your colleagues think. Are they using it in any capacity?

This morning I read through a research paper on Large Vision-Language Models (LVLMs) and how to reduce hallucination.

The advantages of that are unquestionable. Not simply being able to recognise what is in an image (the subject) but describe objects, attributes and relations within it.

I'm thinking about what it could do for a major problem with photo management: actually finding the photo you are looking for.
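Here is a hypothetical Python sketch of how descriptions that capture objects, attributes and relations could make photos searchable. The descriptions are hand-written stand-ins for what a vision-language model might produce; no real model or Photos API is involved.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

Relation = Tuple[str, str, str]  # (subject, relation, object), e.g. ("dog", "on", "sofa")


@dataclass
class PhotoDescription:
    filename: str
    objects: Set[str]
    attributes: Dict[str, Set[str]] = field(default_factory=dict)  # object -> attributes
    relations: Set[Relation] = field(default_factory=set)


def matches(photo: PhotoDescription, obj: str,
            attribute: Optional[str] = None,
            relation: Optional[Relation] = None) -> bool:
    """True if the photo contains `obj`, plus the attribute/relation if given."""
    if obj not in photo.objects:
        return False
    if attribute is not None and attribute not in photo.attributes.get(obj, set()):
        return False
    if relation is not None and relation not in photo.relations:
        return False
    return True


def search(photos: List[PhotoDescription], obj: str,
           attribute: Optional[str] = None,
           relation: Optional[Relation] = None) -> List[str]:
    return [p.filename for p in photos if matches(p, obj, attribute, relation)]


# Hypothetical model output for three photos.
photos = [
    PhotoDescription("IMG_0001.jpg", {"bike", "fence"}, {"bike": {"red"}},
                     {("bike", "leaning on", "fence")}),
    PhotoDescription("IMG_0002.jpg", {"bike", "car"}, {"bike": {"blue"}}),
    PhotoDescription("IMG_0003.jpg", {"dog", "fence"}, {"dog": {"brown"}},
                     {("dog", "behind", "fence")}),
]
print(search(photos, "bike"))                                        # ['IMG_0001.jpg', 'IMG_0002.jpg']
print(search(photos, "bike", attribute="red"))                       # ['IMG_0001.jpg']
print(search(photos, "fence", relation=("dog", "behind", "fence")))  # ['IMG_0003.jpg']
```

Object recognition alone would return both bike photos. The attribute and relation fields are what let you find "the red one" or "the dog behind the fence".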

What's your opinion of articles like this?

https://www.neuralconcept.com/post/generative-design-the-role-of-ai-engineering-applied-use-cases

As I said, for software engineering code generators can save time, but coding is not the skill. Engineering within the constraints & compromises of your business domain is the skill.

Office's Clippy on steroids isn't going to put lawyers out of work, no matter how coherently written generated form letters are.

As for your buddy's AI interview answers -- good luck memorizing all that. Interviewing is a conversation between two humans. The candidate's personality and experiences are what we're connecting on, not some textual content.

BTW, yes, Apple is late to the game. We'll see what they show at WWDC.

I would like to add that code generation and code review apps in the form of "app wizards" and tools like Sonar have been around for a very long time. At best, these wizards and tools generated code for the skeletal framework and boilerplate pieces and parts of an application, or the application itself. Over the years, more human knowledge and best practices were baked into these tools, but that knowledge was still largely hard-coded into the generation or analysis processes for these products. But the one big thing that was always missing from these tools was any semblance of an attachment to the "business logic" portion of a software project, which is largely the whole reason for creating the product in the first place. Like you mentioned, what problem is the product solving within a specific problem domain? Things like constraints, compromises, conventions, the mathematical and physical basis that defines the problem domain itself, and even things like how the product presents itself to the user within the domain in ways that are understandable and can be acted upon.

That said, I can imagine a capability wherein the problem-domain-specific knowledge, or business logic, could at some level be captured very widely and very deeply in a form that allows for the generation of something, perhaps at a pseudocode level, that resembles actual business logic that solves one or more pieces of the solution. If a decent pseudocode-level solution can be produced, then the generation of code in your language of choice seems like the easier part of the code generation challenge. Coming up with the pseudocode seems much more difficult, because the AI has to know quite a lot about what problem the product, component, or code snippet must solve, the business requirements, functional and non-functional requirements, quality attributes, etc.

If the scope of the code generation is constrained to single functions or very detailed algorithms with very well defined requirements, including exception driven execution paths, I suppose the generated code would be a lego brick that can be stacked on top of other similar lego bricks into something that the developer could flesh out and stitch together to form the basis of an application. Maybe I'm underestimating what the AI can do.
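As a concrete example of such a "lego brick", here is a single Python function with a precise requirement and explicit error paths. ISBN-10 validation is our own choice of illustration, not something from the thread. Malformed input raises an exception, while a well-formed ISBN with the wrong check digit simply returns False.

```python
DIGITS = "0123456789"


def isbn10_is_valid(isbn: str) -> bool:
    """Check an ISBN-10 (hyphens/spaces allowed) against its check digit.

    Returns False for a well-formed ISBN with a wrong check digit, and raises
    ValueError for input that isn't shaped like an ISBN-10 at all.
    """
    chars = isbn.replace("-", "").replace(" ", "").upper()
    if len(chars) != 10:
        raise ValueError(f"expected 10 characters, got {len(chars)}")
    if any(c not in DIGITS for c in chars[:9]):
        raise ValueError("the first nine characters must be digits")
    if chars[9] not in DIGITS + "X":
        raise ValueError("the check character must be a digit or X")
    values = [int(c) for c in chars[:9]]
    values.append(10 if chars[9] == "X" else int(chars[9]))  # X stands for 10
    # Weights run 10, 9, ..., 1; a valid ISBN-10 gives a sum divisible by 11.
    total = sum(w * v for w, v in zip(range(10, 0, -1), values))
    return total % 11 == 0


print(isbn10_is_valid("0-306-40615-2"))  # True
print(isbn10_is_valid("0-306-40615-3"))  # False
```

Because the contract is this narrow, the function can be tested in isolation and stacked with other bricks. Deciding which bricks an application needs is still the engineer's job.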

The thing I don't understand at this point is how this wide & deep knowledge behind the AI/ML is captured in the first place. I don't see engineers working in for-profit companies building products for sale within the context of a specific problem domain giving away their requirements, design, and implementation code, all things that are the intellectual property of the business. Sure, open source projects, depending on the licensing model, are probably much more fertile ground for harvesting instances of implementation that can be associated with some specific requirement or business problem to be solved. Perhaps black box level behavioral analysis and reverse engineering of existing products could be done using AI and ML. Still, someone or something has to backfill specifics about what problem the existing product is trying to solve so it can match it to one or even several proven code based ways of meeting the requirements.

To add to your response, I would say that the real challenge with code generation is the "what" side of the problem, not the "how" side of the problem. In many ways it mirrors the divide between software implementation and software architecture. Software implementation is all about building things right. Software architecture is all about building the right things.

Now I’m curious whether to give this a try with an LLM tool like GPT for much of the Swift code, if that’s presently possible. But I’d guess I’d still need to partner with someone more experienced than I am to get the app App Store ready.

Marvin, do you know if any large language models have yet learned Swift? — or is that your projection of a future use-case? Thanks again.

https://twitter.com/mortenjust/status/1636001311417319426

The app design would be broken down into parts: start with an app that launches to a blank page, then build it out piece by piece using GPT as a helper.

Any reasonably useful app is going to have around 10k lines of code (around 3 months of work) or more. GPT apps will write 100 or so lines per question so it will take a while to build it out but aiming to do 100-200 lines of working code per day will get there. Use version control (git) https://git-scm.com/ https://www.gitkraken.com/ and commit changes along the way. Use Visual Studio Code https://code.visualstudio.com/ or Xcode for editing. The Xcode device simulator can test the apps on the desktop.
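The arithmetic behind that estimate is easy to check. Here is a small Python sketch, assuming roughly 21 working days per month (our assumption, not the commenter's):

```python
import math

WORKING_DAYS_PER_MONTH = 21  # assumption: about 21 working days in a month


def working_days_needed(total_lines: int, lines_per_day: int) -> int:
    """Whole working days needed to write `total_lines` at a steady daily rate."""
    return math.ceil(total_lines / lines_per_day)


for rate in (100, 150, 200):
    days = working_days_needed(10_000, rate)
    print(f"{rate} lines/day -> {days} working days, "
          f"about {days / WORKING_DAYS_PER_MONTH:.1f} months")
```

At 100, 150 and 200 lines a day, this gives 100, 67 and 50 working days, or roughly 4.8, 3.2 and 2.4 months. The "around 3 months" figure therefore corresponds to about 150 lines of working code a day.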

Swift isn't an easy language to use. The easiest is JavaScript/TypeScript, and it's possible to build iOS apps that way. Apps built with React Native will also be cross-platform:

https://blog.logrocket.com/how-to-build-ios-apps-using-react-native/

https://reactnative.dev/showcase

This isn't good for performance-intensive apps like games and media but it's fine for information-based apps.