Apple Vision Pro shows users the real world four times faster than its rivals

New benchmarks say Apple Vision Pro has by far the best system of any major headset for relaying views of a user's surroundings to them.

An iOS stopwatch superimposed onto an Apple Vision Pro

Meta's Mark Zuckerberg didn't mention this point when he reviewed the Apple Vision Pro. Both his Meta Quest 3 and Apple's headset are mixed reality (MR) devices, which means they both show the wearer a view of their surroundings, overlaid with digital content.

The process involves having cameras on the headset relay what they are seeing to the screens in front of the wearer's eyes. It's called see-through, and Apple has claimed that Apple Vision Pro does this with groundbreaking speed.

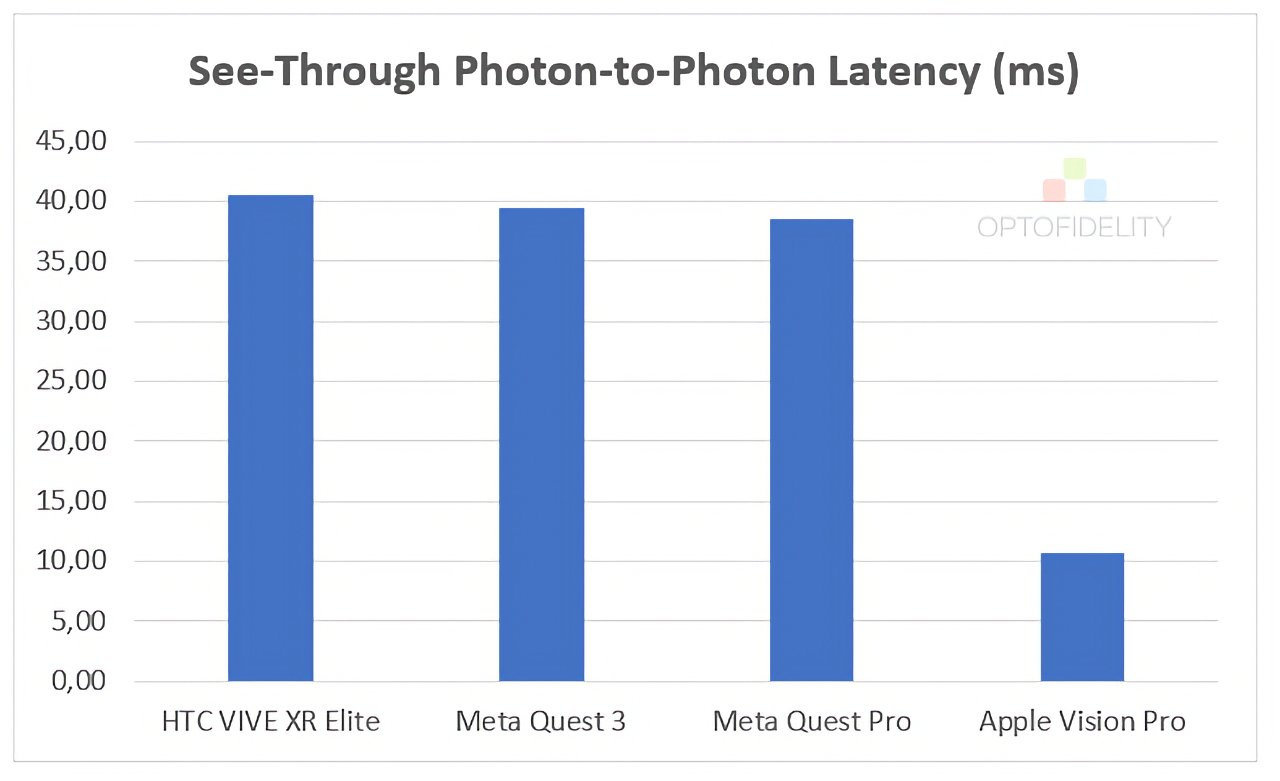

According to new benchmark results, it does. OptoFidelity, which makes testing equipment, investigated the see-through capabilities of four major MR headsets and measured what's called the photon-to-photon latency.

Photon-to-photon latency measures the time it takes for an image of the outside world to be transferred through the headset and to the user. Using its OptoFidelity Buddy Test System, the company measured the photon-to-photon latency of:

- Apple Vision Pro

- HTC VIVE XR Elite

- Meta Quest 3

- Meta Quest Pro

The three non-Apple ones came in at around 40 milliseconds. Previous tests with them reportedly saw results ranging from around 35 milliseconds to 40 milliseconds. The testers say this figure used to be considered a good standard.

How much faster Apple Vision Pro is than its rivals at see-through (source: OptoFidelity)

Apple Vision Pro's see-through latency is 11 milliseconds.

That makes it almost four times faster at relaying the real world to the headset's wearer. Its closest rival was the Quest Pro, which came out at just under 40 milliseconds.
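The "almost four times" figure can be checked with simple division. The snippet below is only a rough sketch using the rounded numbers quoted in this article (about 35 to 40 milliseconds for the rivals, 11 milliseconds for Apple Vision Pro). The function name `speedup` is made up for illustration and is not part of any OptoFidelity tooling.

```python
def speedup(rival_latency_ms: float, avp_latency_ms: float) -> float:
    """Return how many times lower the second latency is than the first."""
    return rival_latency_ms / avp_latency_ms


# Rounded figures quoted in the article, not raw benchmark data.
AVP_MS = 11.0
for rival_ms in (35.0, 40.0):
    ratio = speedup(rival_ms, AVP_MS)
    print(f"{rival_ms:.0f} ms vs {AVP_MS:.0f} ms: {ratio:.1f}x")
```

With these rounded inputs the ratio lands between roughly 3.2 and 3.6, which is consistent with "almost four times" at the 40 millisecond end.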

This does all mean that Apple is correct in saying it has groundbreaking technology in the Apple Vision Pro, at least in terms of its record-breaking latency. But even with by far the best latency of the major headsets, Apple Vision Pro does have a question mark over its intended audience.

Read on AppleInsider

Comments

And to think, this see-through photon-to-photon latency needs to drop by half to 6 ms to expand headset use cases. Crazy how much compute and lower latency this product needs to reach maturity.

10 finger typing on a virtual keyboard is going to need some bonkers hand tracking too. It may need to use a different keyboard layout to really do it.

The Apple Vision, already the best, needs an M3/R2, a faster GPU and more software optimization. In short, Apple needs one more generation, which Apple probably already has in the testing lab now due to product lead times (the M3 is already here; the only question is whether the R2 is done). No surprise that bandwidth, SoC size, battery and wattage are the holdups. Who do you think in the tech world is currently closer to solving all those challenges to stay ahead of the competition? Hint: a vertical computer company with in-house software and hardware. For example, the Apple Vision wouldn't be possible using Intel, AMD, Nvidia and Qualcomm, who in time want half your revenue for two chips.

From the link www.chioka.in/what-is-motion-to-photon-latency

“To improve motion-to-photon latency, every component in the motion-to-photon pipeline needs to be optimized. For a movement to be reflected on the screen, it involves the head mounted display, connecting cables, CPU, GPU, game engine, display technology (display refresh rate and pixel switching time).”

So, a doubling everywhere. That's 70m pixels at 180 Hz, multiple streams of 24MP cameras (?), doubling of hand tracking frame rates. That's a 10x increase in compute power needed. So, R4 level that has 10x the throughput. Also would need a variable focus lens.

One good thing to come out of the existence of Apple Vision: the communication between all Apple devices will need to go to even higher bandwidth levels. Looking forward to future M3, M4, M5, and R2 SoCs in the Apple Vision.

Another benefit is that it makes occlusion of hands possible, and it will make your hands and body feel like your actual body parts. Some basic math: if you wave your hand at 10 inches per second, and the latency from camera to display is 40 ms, your real hand will be 10 x 0.04 = 0.4 inches ahead of where it is being shown in the display. The occlusion will also lag. Everything regarding your interaction and movements will have lag between what your body thinks it is doing and what it sees it is doing.
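To put numbers on that, here is a quick sketch of the same arithmetic for both latency figures from the article. It assumes the 10 inches per second hand speed used above and a constant speed over the latency window. The function name `display_lag_inches` is just a made-up helper for illustration.

```python
def display_lag_inches(hand_speed_in_per_s: float, latency_ms: float) -> float:
    """Distance the real hand moves while one camera frame travels to the display."""
    return hand_speed_in_per_s * (latency_ms / 1000.0)


HAND_SPEED = 10.0  # inches per second, the example speed from the comment above

# Latencies are the rounded figures quoted in the article.
for label, latency_ms in (("around 40 ms headsets", 40.0), ("Apple Vision Pro", 11.0)):
    lag = display_lag_inches(HAND_SPEED, latency_ms)
    print(f"{label}: hand is shown about {lag:.2f} inches behind")
```

At 40 ms the gap is about 0.4 inches, and at 11 ms it shrinks to about 0.11 inches. Faster hand movements scale the gap up proportionally.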

So, if you were to grab something, you may tip it over instead of being able to grab it. There is motion sickness involved with this. It has ramifications in the UI as well.

So, no ramifications for you, but there are a lot of people out there. Quest headsets likely have a larger fraction of potential buyers being affected by motion sickness. AVP? Smaller fraction. I.e., more potential buyers. Obviously the price alone eliminates a lot of buyers, no gaming eliminates a lot of buyers, but they made the potential market of wearers bigger in the first place.