NVIDIA's shared VR environment technology is coming to Apple Vision Pro

A combination of technologies from NVIDIA involving Omniverse Cloud APIs will soon let enterprise developers interact with fully rendered 3D digital twins streamed to Apple Vision Pro.

NVIDIA cloud streaming brings complex digital twins to Apple Vision Pro

You may know NVIDIA for its graphics cards or game streaming service, but the company also develops applications for enterprise use. A new software framework built with Omniverse Cloud APIs lets developers send OpenUSD scenes from their applications to the NVIDIA Graphics Delivery Network, which can stream the content to Apple Vision Pro.

That was a lot of technical jargon, but it can be summed up like this: enterprise developers using NVIDIA's tools will be able to upload their work to the cloud and stream it to Apple Vision Pro without rendering it on the headset's local M2 processor. The framework also supports hybrid rendering, which combines local and remote rendering in the same app using technologies from both Apple and NVIDIA.

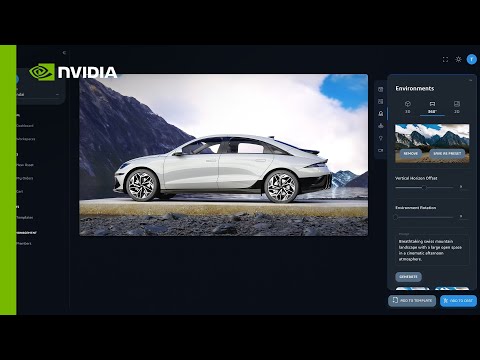

The new framework was announced during NVIDIA GTC. If you're still wondering what all of this means, CGI studio Katana showed off a demo of an Apple Vision Pro wearer using a car configurator powered by the NVIDIA cloud.

The vehicle in the video is called a digital twin. A digital twin is a virtual replica of a real-world object, such as a vehicle, built from real-world specifications and measurements so that it can be represented accurately in a simulation.

Creating a digital twin isn't as simple as rendering something like a vehicle in a video game. It requires powerful, dedicated graphics processors to handle all of the data on high-resolution displays.

"The breakthrough ultra-high-resolution displays of Apple Vision Pro, combined with photorealistic rendering of OpenUSD content streamed from NVIDIA accelerated computing, unlocks an incredible opportunity for the advancement of immersive experiences," said Mike Rockwell, vice president of the Vision Products Group at Apple. "Spatial computing will redefine how designers and developers build captivating digital content, driving a new era of creativity and engagement."

Moving a 3D concept from a 2D monitor into a spatial computing environment can open up new possibilities for interacting with data. While Apple Vision Pro can't run these models independently, NVIDIA RTX Enterprise Cloud Rendering allows digital twins to be brought to spatial environments.


Comments

“Creating a digital twin isn't as simple as rendering something like a vehicle in a video game. It requires powerful, dedicated graphics processors to handle all of the data on high-resolution displays.”

So… how is that different from a video game like Gran Turismo or Forza, which also use real-world stats and metrics to represent each car?

It’s like it’s trying to sound more than it really is.

In reality, it seems to be just another way to stream content from a render/server farm, and that’s pretty much what it is: another way for Nvidia to create business for itself.

You don’t need Apple’s cameras for the car, since it’s created and rendered elsewhere. You don’t need the Vision Pro’s processing power, since it doesn’t even touch the M2. This sounds like it would do just fine on that old Google Cardboard thing.

In the end, this isn’t about the Vision Pro or its tech at all. This is entirely an Nvidia commercial, and Nvidia’s nice words about leveraging all this amazing tech in Vision Pro are just butt-kissing to Apple so they’ll use the platform.

Meh.

Still waiting for some Apple Vision Pro-specific thing to come around and showcase why it’s the next best thing.

Race car drivers operating a digital twin while a real car speeds down the race track.

Flight simulators that are actually controlling a real jet.

Doctors performing surgery from another continent.

We've only seen the beginning.