Future Apple devices may precisely track your gestures using radar

You may soon be able to wave your hands or draw with a stylus in mid-air, with radar turning those gestures into controls for everything from your Mac to an office whiteboard.

A radar-based tracking system would allow for new types of tracking.

A new Apple patent that relies on radar transmitters and receivers could radically change how you interact with your devices and usher in new ways to control them.

Physical tracking systems, like the trackpad on a Mac or an iPhone's screen, have limitations. If you've ever attempted to use an iPhone while wearing gloves, you've likely experienced this yourself.

When you slide your finger across your iPhone screen or the trackpad on your Mac, an array of electrical sensors measures your finger position and pressure. Unfortunately, these sensors can't detect your fingers if they're encased in warm gloves.

A touch surface's accuracy depends on the number of sensors in it. The more sensing circuits there are across the surface of an iPhone, the more accurately it registers touch.

More sensors mean more accuracy, but adding sensors makes manufacturing more challenging and costly, and at a certain point, there is no room for more sensing circuits.

If you've ever used a touch-screen device that seems to react slowly or only when pressed in very specific spots, you've seen what happens when only a few sensor points are used.

Improving touch screens

ATMs and self-checkout systems are typical examples of how frustrating it can be when a device has few sensors. Getting these screens to accept your touch often requires a lot of blunt force and guesswork about where exactly to push to make a selection.

Apple's new technology would solve these problems using a small array of radar transmitters and receivers to track fingers or other objects with extreme precision.

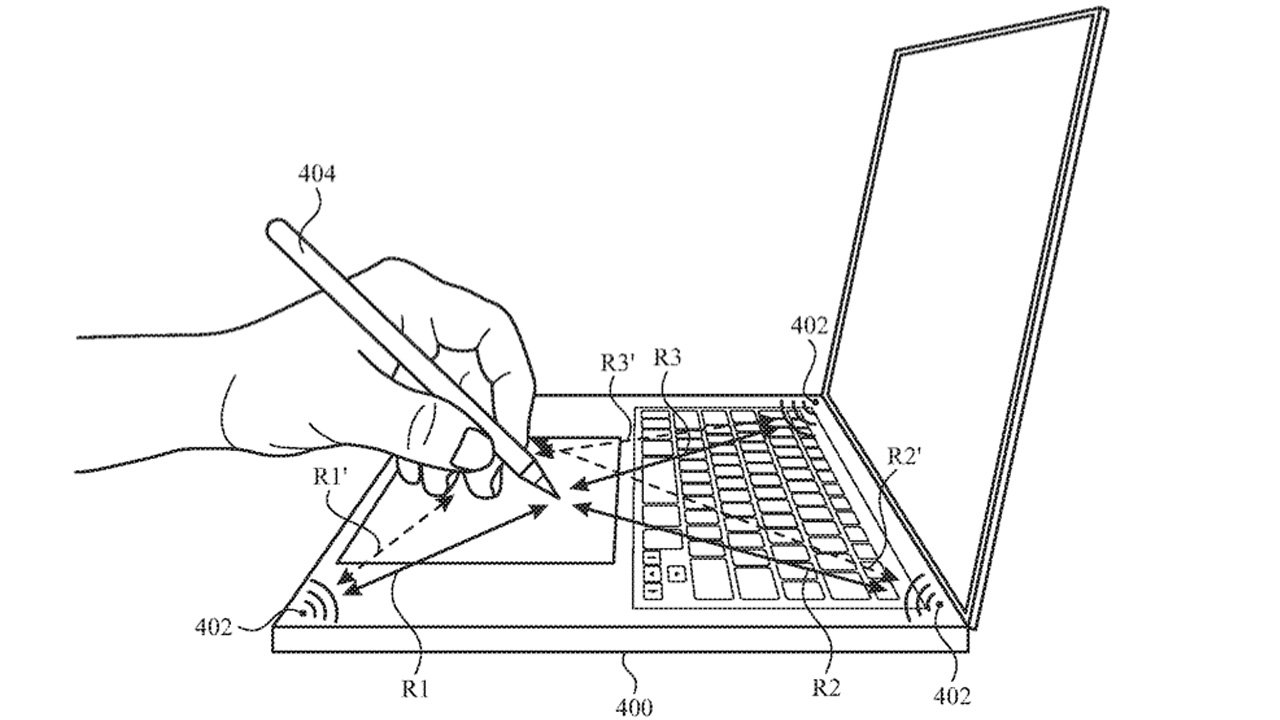

In Apple's patent, an array of radar units embedded in the case of a laptop or other device would provide pinpoint accuracy without needing any touch sensors. This system would provide more accuracy and open new ways of interacting with your devices.
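The article does not say how the patent turns radar echoes into a position. As a rough illustration only, one common way to locate an object from several distance measurements is trilateration. The Python sketch below assumes three hypothetical radar units at known positions on a laptop case, each reporting its distance to a fingertip. The function name `locate_2d`, the sensor coordinates, and the simplification to two dimensions are assumptions made for this example, not details from the patent.

```python
import math


def locate_2d(sensors, ranges):
    """Estimate (x, y) from three sensor positions and their measured ranges.

    Each sensor defines a circle of radius r around itself. Subtracting the
    first circle equation from the other two removes the squared unknowns and
    leaves a 2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = sensors
    r1, r2, r3 = ranges

    # Coefficients of the linearized system A @ [x, y] = b
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2

    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        # Sensors on a single line cannot tell which side the object is on.
        raise ValueError("sensors are collinear; position is ambiguous")

    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


# Hypothetical layout: three radar units on a laptop case, in meters.
sensors = [(0.0, 0.0), (0.3, 0.0), (0.0, 0.2)]
fingertip = (0.1, 0.15)  # made-up true position used to simulate ranges
ranges = [math.dist(s, fingertip) for s in sensors]

print(locate_2d(sensors, ranges))
```

A real system would have noisy measurements, more than three units, and a third dimension, so it would more likely use a least-squares fit than an exact solve. The sketch is only meant to show why a handful of fixed units can pin down a position without a dense grid of touch sensors.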

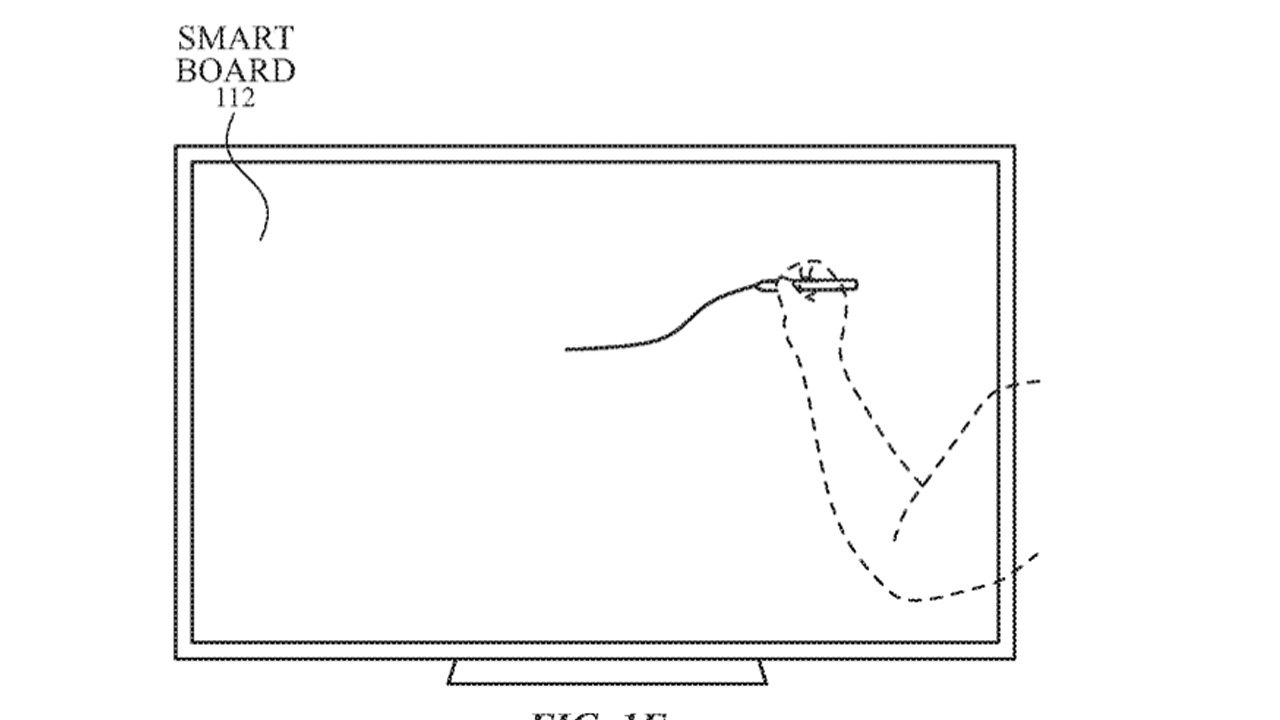

The patent shows a stylus held above a laptop trackpad and a series of radar transmitters and receivers embedded in the case tracking the movement of the virtual pen. It also shows the technology being used with a whiteboard, which suggests Apple has broader plans for the technology than just personal devices.

Tracking a hand in front of a whiteboard could be made much more accurate with radar.

Controlling a laptop with a stylus is an excellent example of how this technology could simplify the design of new input methods.

With current technology, recognizing a stylus on a trackpad would require specific circuitry in both the trackpad and the stylus. This new radar system would eliminate the need to embed those sensors in the laptop and the Pencil.

While the patent doesn't depict a VR/AR system like the Apple Vision Pro, integrating this technology into one could allow for more accurate hand tracking and improve the experience of interacting with the world.

The patent was credited to Michael Kerner, who has more than 20 years of successful patent applications in the design and manufacturing of radio-based tracking systems.

Read on AppleInsider

Comments

I do think Apple should add eye and hand tracking to all their devices, so this goes right along with that.

Apple has already had LiDAR working in the iPhone and the iPad Pro for the last four years, and now has it in the just-introduced Apple Vision Pro. If Apple decides to add radar, it will be as a supplement to what they already have, which is already far beyond Google's feeble attempts so far. Apple is able to do all the computations on the edge with the M2/R1. What can Google do with the weak Tensor and the bad onboard modem in the Pixel phone, phone home for help?

Apple solutions are working now in the real world on the edge and they are not hallucinating.

https://healthimaging.com/topics/medical-imaging/apple-vision-pro-app-turns-imaging-scans-interactive-holograms Laying the foundations of a new ecosystem that works for developers. Hard to do without the processing power of the M2/R1, and that will only get better with the M3/R2 SoCs.

https://www.siemens-healthineers.com/press/releases/cinematic-reality-applevisionpro Software ecosystem combined with Apple's in-house hardware.

https://gomeasure3d.com/blog/apple-vision-pro-3d-scanning-drive-content-creation/ Laying the foundations.

https://www.spatialpost.com/lidar-vs-radar-vs-sonar/ LiDAR wasn't put in iPhones/iPads 4-5 years ago for nothing. Apple did the groundwork years ago.

This time, it's just a keyboard over a "thicken" case. There is no mouse this time, as the lidar/radar sensors in the board can see fingers, styluses, or any pointer. Some extra smarts in the optional pencil make the input pressure sensitive for writing and drawing in front of the device.

Why even have switches in the keys? The movement of the keys would only be there for comfort while typing, as the sensors would see the impact before the switch registers. So all the swipe gestures of the iOS keyboard now just work as well.

Team the device with any AirPlay device for the screen: Vision Pro, smart TVs, smart monitors, and iPads all work out of the box. Take it with you