Activists rally at Apple Park for reinstatement of child safety features

Protesters at Apple Park are demanding that the company reinstate its recently abandoned child safety measures.

Nearly three dozen protesters gathered around Apple Park on Monday morning, carrying signs that read, "Why Won't you Delete Child Abuse?" and "Build a future without abuse."

The group, known as Heat Initiative, is demanding that the iPhone developer bring back its child sexual abuse material (CSAM) detection tool.

"We don't want to be here, but we feel like we have to," Heat Initiative CEO Sarah Gardner told Silicon Valley. "This is what it's going to take to get people's attention and get Apple to focus more on protecting children on their platform."

Today, we showed up at #WWDC, with dozens of survivors, advocates, parents, community + youth leaders and allies, calling on Apple to stop the spread of child sexual abuse on their platforms now.

We must build a future without abuse. pic.twitter.com/hky9eh3dFD (posted by @heatinitiative)

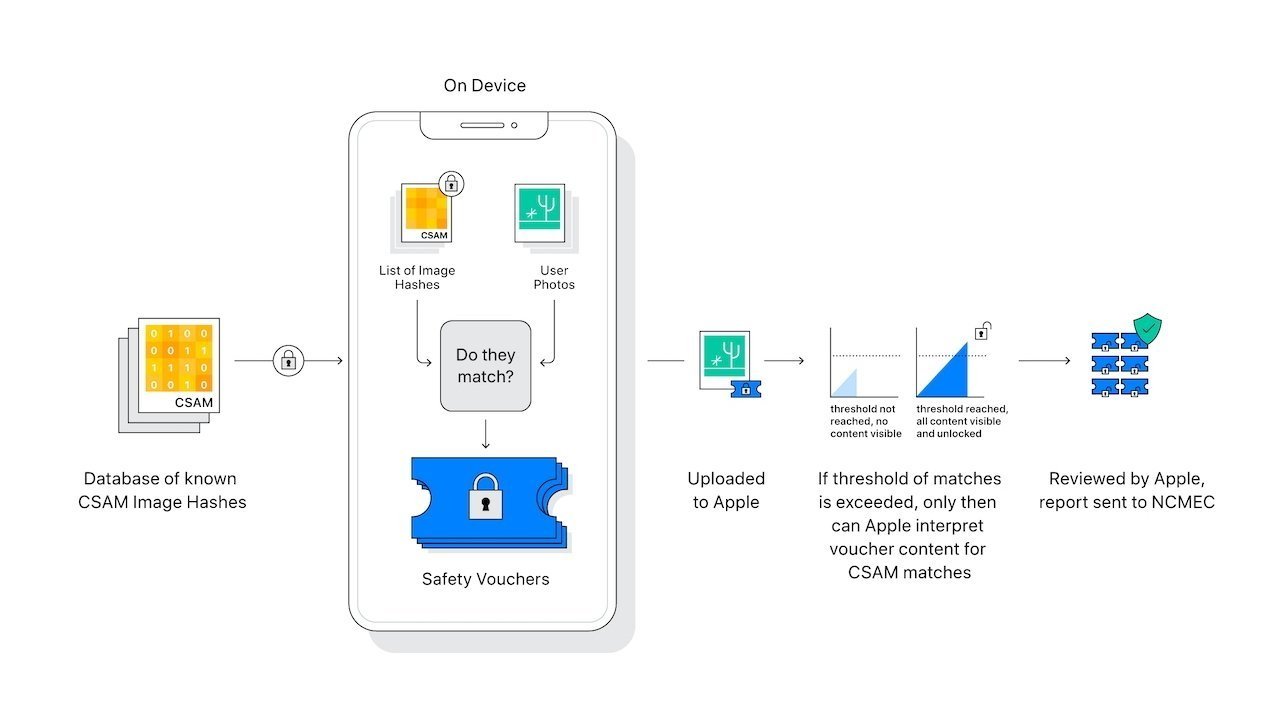

In 2021, Apple had planned to roll out a new feature capable of comparing iCloud photos to a known database of CSAM images. If a match were found, Apple would review the image and report any relevant findings to the National Center for Missing & Exploited Children (NCMEC), which serves as a reporting center for child abuse material and works with law enforcement agencies around the U.S.

After significant backlash from multiple groups, the company paused the program in mid-December of the same year, then abandoned the plan entirely the following December.

Apple's abandoned CSAM detection tool

When asked why, Apple said the program would "create new threat vectors for data thieves to find and exploit," and worried that these vectors would compromise security, a topic that Apple prides itself on taking very seriously.

While Apple never officially rolled out its CSAM detection system, it did roll out a feature that alerts children when they receive or attempt to send content containing nudity in Messages, AirDrop, FaceTime video messages and other apps.

However, protesters don't feel that this feature does enough to hold predators accountable for possessing CSAM, and would rather Apple reinstate the detection system it abandoned.

"We're trying to engage in a dialog with Apple to implement these changes," protester Christine Almadjian said on Monday. "They don't feel like these are necessary actions."

This isn't the first time that Apple has butted heads with Heat Initiative, either. In 2023, the group launched a multimillion-dollar campaign against Apple.

Read on AppleInsider

Comments

As I recall, one of the major bones of contention was that the system was going to scan photos destined for iCloud, but not yet actually there, using resources on the user's phone itself rather than iCloud resources to do the actual scanning. There were also, if I remember correctly, concerns about mistakes, as happened when a Google attempt to do the same thing flagged a file with a single character in it as problematic and locked an account.

I mean, if they are serious about trying to trample constitutional limits on the right to privacy and are pro-preemptive state searching without the presumption of innocence, they’d be much more likely to get further with those companies (who handle far more volume of cloud transportation of data than Apple anyway).

Apple wants to do the right thing, and had everything in place to do so (perhaps going one step too far) but is struggling with how to do so now without stirring up the minions.

There was some interesting technology behind it, and it would have likely been the first widespread use of fully homomorphic encryption (the ability to run operations on data without decrypting it first). They came up with a whole host of techniques like private set intersection that would have provided security guarantees in the cloud (Apple wouldn't have the ability to view your photos without meeting a threshold of positives). And even people who understood the technology compared it against some ideal system that doesn't exist, instead of viewing this as an improvement to the current "cloud provider can see 100% of the pictures you send them" system.
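For anyone curious how a "nothing is readable until a threshold is met" guarantee can work in principle, here is a minimal sketch using Shamir's secret sharing. It is a toy illustration of the general idea only, not Apple's implementation; the function names (`split_secret`, `recover_secret`), the prime modulus, and the sample numbers are all assumptions made up for this example.

```python
import secrets

# Illustrative field modulus (the Mersenne prime 2**127 - 1); an assumption for this sketch.
PRIME = 2**127 - 1


def split_secret(secret, threshold, num_shares):
    """Split `secret` into `num_shares` points on a random polynomial of
    degree threshold - 1 whose constant term is the secret."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        # Evaluate the polynomial at x with Horner's rule, modulo PRIME.
        y = 0
        for c in reversed(coeffs):
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Interpolate the polynomial at x = 0 (Lagrange) to recover the secret.
    Gives the right answer only if at least `threshold` shares are supplied."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    key = 123456789  # stands in for a decryption key
    shares = split_secret(key, threshold=3, num_shares=5)
    print(recover_secret(shares[:3]) == key)  # three shares reach the threshold
    print(recover_secret(shares[:2]) == key)  # two shares do not
```

In this picture, each positive match would release one share of a key. Below the threshold, the shares collected so far reveal nothing useful about the key; at or above it, the key can be reconstructed.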

I actually lost some respect for the EFF during this whole episode. I still think they generally do good advocacy, but in this instance they totally failed to evaluate the proposal honestly.

This part. The scanning process would have been under Apple's control. The database of image signatures was not. The probability of compromise by some, at least one, tyrannical and/or fascist government approaches closely enough to 1 as not to matter.