Apple working out how to use Mac in parallel with iPhone or iPad to process big jobs

Apple isn't content with existing distributed computing technologies, and is working on a way to network your Mac, iPhone, iPad, and maybe even the Apple Vision Pro together so that processing jobs are split dynamically according to each device's power, getting a big calculation done faster.

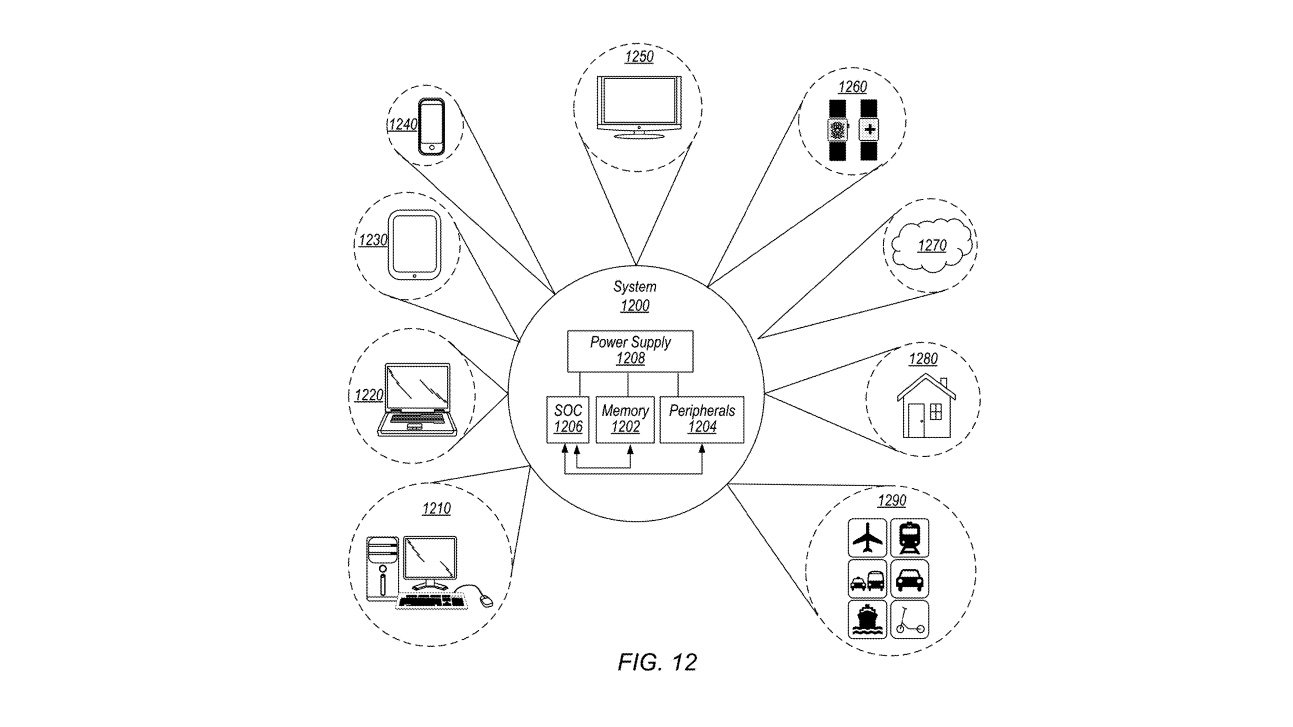

Apple's patent shows multiple devices sharing the computing workload.

In a newly published patent, Apple details how it wants to use all of your devices for computation, simultaneously and seamlessly. If the research pans out as published, this could radically improve performance for some tasks, while automatically taking into account the differing processing power of each device.

For example, an idle iPad could make a photo edit or 3D render faster. Or, a Mac could improve the experience on an Apple Vision Pro by sharing the processing of tasks.

The Apple Vision Pro, in theory, could get the biggest boost by automatically using other devices to process the enormous amounts of data involved in augmented reality. Given the processing demands of a mixed reality and augmented reality system like the Apple Vision Pro, having only a single main processor besides the motion-sensing chip could limit what the headset can do.

Processing more data more quickly can create a much more immersive experience. Sharing that processing across multiple devices could radically improve performance and enable new features.

Complex tasks such as mixed reality, video editing and rendering, special effects creation, 3D modeling, and mathematical simulations demand increasingly powerful devices.

Much of this is already addressed by distributed computing models, such as Apple's two-decade-old Xgrid. That technology was limited, though, and didn't account for the differing processing power of each computational node.

A single slow node could bog down the entire job. If the size of each job isn't weighed against the processing power of the node receiving it, that slow calculation could delay any later work that depends on its result.
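The patent doesn't publish code, but the core problem is easy to show. The minimal sketch below assumes made-up node speeds (in abstract "work units per second") and a job that finishes only when its slowest node finishes; the function names are hypothetical. It compares an even split with a split weighted by each node's speed.

```python
# Hypothetical illustration: one slow node stretches an evenly split job,
# while a split weighted by node speed lets every node finish together.
# Speeds are made-up "work units per second", not real device figures.

def makespan(shares, speeds):
    """The job is done only when the slowest node finishes its share."""
    return max(share / speed for share, speed in zip(shares, speeds))

def even_split(total, n):
    """Give every node the same amount of work."""
    return [total / n] * n

def proportional_split(total, speeds):
    """Give each node work in proportion to its speed."""
    capacity = sum(speeds)
    return [total * s / capacity for s in speeds]

speeds = [10.0, 10.0, 2.0]  # two fast nodes and one slow one (assumed)
total_work = 220.0

even = makespan(even_split(total_work, len(speeds)), speeds)
weighted = makespan(proportional_split(total_work, speeds), speeds)
print(f"even split: {even:.1f} s, weighted split: {weighted:.1f} s")
# prints "even split: 36.7 s, weighted split: 10.0 s"
```

With the even split, the slow node needs more than three times as long as the weighted split, even though the two fast nodes sit idle for most of that time.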

The technology in Apple's new patent would not only share your workload but also figure out which devices would be best to get the job done.

So, if you're exporting 4K/60 video for YouTube from Final Cut Pro on a Mac mini while playing Death Stranding on your iPhone, this system would automatically detect that the iPhone doesn't have enough spare power. It could then shift the workload to your iPad or a nearby MacBook Air, or dynamically give that iPhone a smaller share.
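How such a system might divide the export isn't spelled out here, so the following is only a sketch under stated assumptions: each device reports a hypothetical relative peak throughput and its current utilization, spare capacity is peak times the unused fraction, devices below a `min_spare` cutoff are skipped, and whole video segments are handed out with the largest-remainder method so the counts always add up. None of these names or figures come from the patent.

```python
from dataclasses import dataclass

@dataclass
class Device:
    name: str
    peak: float         # hypothetical relative throughput when idle
    utilization: float  # fraction already in use, 0.0 to 1.0

def allocate(segments, devices, min_spare=0.5):
    """Split `segments` whole work units by each device's spare capacity.

    Devices whose spare capacity is below `min_spare` get nothing.
    Largest-remainder rounding makes the counts sum exactly to `segments`.
    """
    spare = {d.name: d.peak * (1.0 - d.utilization) for d in devices}
    eligible = {n: s for n, s in spare.items() if s >= min_spare}
    total = sum(eligible.values())
    if total == 0:
        raise ValueError("no device has spare capacity")
    exact = {n: segments * s / total for n, s in eligible.items()}
    counts = {n: int(x) for n, x in exact.items()}
    leftover = segments - sum(counts.values())
    # Give the remaining units to the devices with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - counts[name], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts

devices = [
    Device("Mac mini", peak=8.0, utilization=0.25),
    Device("iPhone", peak=4.0, utilization=0.9),  # busy running a game
    Device("iPad", peak=5.0, utilization=0.0),
    Device("MacBook Air", peak=6.0, utilization=0.5),
]
print(allocate(100, devices))
# prints {'Mac mini': 43, 'iPad': 36, 'MacBook Air': 21}
```

Passing `min_spare=0.0` instead keeps the busy iPhone in the pool with a small share, matching the article's alternative of giving it a lighter load rather than none.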

One or more Apple devices would automatically handle tasks, without the user even knowing what's happening behind the curtain.

In another practical example, the Apple Vision Pro could be sped up just by having an iPhone in your pocket. In a future version of the relevant operating systems, the Apple Vision Pro could automatically send some of its processing tasks to that iPhone, boosting its speed and potentially enabling new features.

More processing power means better Apple Intelligence

This approach could benefit more than just the Apple Vision Pro or your Mac.

Apple's newly announced Apple Intelligence features will be processed on-device. If you ask Siri on your iPhone to book you a flight with your mother and send reminders to everyone, that iPhone has to do all the artificial intelligence processing.

While most of Apple's upcoming AI features can be handled on an iPhone or iPad, sometimes a task is too complex for those devices alone. As part of its Apple Intelligence initiatives, Apple plans to handle those more complex requests with a new technology called Private Cloud Compute.

Naturally, the round trip from the device to the cloud requires an Internet connection. It also adds delay while the data is sent and received. Automatically sending requests to a user's other devices would reduce the need for a cloud system and could speed up the process considerably.
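The trade-off in that paragraph can be expressed as a simple latency estimate. The sketch below is hypothetical: it assumes total time is round-trip latency plus data transfer time plus compute time, and every latency, bandwidth, and throughput figure is invented for illustration rather than measured. It picks whichever target finishes first, and drops the cloud when there is no Internet connection.

```python
from typing import NamedTuple

class Target(NamedTuple):
    name: str
    rtt_s: float            # assumed round-trip network latency, seconds
    bandwidth_mbps: float   # assumed link bandwidth, megabits per second
    gops: float             # assumed sustained compute, billions of ops per second
    needs_internet: bool = False

def estimated_time(t: Target, payload_mb: float, work_gop: float) -> float:
    """Round trip + time to move the data + time to compute."""
    transfer_s = payload_mb * 8 / t.bandwidth_mbps  # 0.0 when bandwidth is infinite
    return t.rtt_s + transfer_s + work_gop / t.gops

def pick_target(targets, payload_mb, work_gop, online=True):
    """Choose the target with the lowest estimated completion time."""
    usable = [t for t in targets if online or not t.needs_internet]
    return min(usable, key=lambda t: estimated_time(t, payload_mb, work_gop))

INF = float("inf")
targets = [
    Target("this iPhone", rtt_s=0.0, bandwidth_mbps=INF, gops=50),
    Target("nearby Mac", rtt_s=0.005, bandwidth_mbps=400, gops=200),
    Target("cloud", rtt_s=0.08, bandwidth_mbps=50, gops=2000, needs_internet=True),
]

print(pick_target(targets, payload_mb=2, work_gop=20).name)                 # nearby Mac
print(pick_target(targets, payload_mb=2, work_gop=100).name)                # cloud
print(pick_target(targets, payload_mb=2, work_gop=100, online=False).name)  # nearby Mac
```

Under these made-up numbers, a nearby Mac wins for smaller jobs because it avoids the slower Internet link, while very large jobs can still favor the cloud; offline, the nearby device is the only option that helps.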

The patent was credited to Andrew M. Havlir, Ajay Simba Modugala, and Karl D. Mann. Havlir holds multiple patents, many of which are in distributed processing and graphics processing.

Read on AppleInsider

Comments

It would even make dumb devices like Apple HomePod capable of using Apple Intelligence.

It was a great device just in its old basic form. I miss that thing.

1. In-device Compute

2. "Secure Personal Cloud Compute"

3. Secure Cloud Compute

4. Other companies

Edit: I should have said that the staggering unused capacity is more on the client side of things. Servers are typically utilized at much higher levels than clients. On the server side, too much is never enough.

Non-training AI workloads are often like this: a moderately sized model (a large part of which can often be generic and shared, then fine-tuned with relatively low-volume training data), fairly small amounts of data to be analyzed, and a whole lot of compute needed on that data. We haven't seen distributed compute in common use before because workloads haven't had the necessary compute:bandwidth ratio, but this huge wave of machine learning use has changed that, so Apple is jumping on it.
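The compute:bandwidth point can be made concrete with a back-of-the-envelope condition. The symbols are assumptions for illustration, not from the patent: $W$ is the work in operations, $D$ the bytes that must be moved, $B$ the link bandwidth in bytes per second, $L$ the round-trip latency, and $s_l$ and $s_r$ the local and remote throughput in operations per second, with $s_r > s_l$. Offloading is worth it when doing the job locally takes longer than sending it out:

```latex
\frac{W}{s_l} > L + \frac{D}{B} + \frac{W}{s_r}
\quad\Longrightarrow\quad
W\left(\frac{1}{s_l} - \frac{1}{s_r}\right) > L + \frac{D}{B}

% For large jobs, ignore L and use 1/s_l - 1/s_r = (s_r - s_l)/(s_l s_r):
\frac{W}{D} > \frac{s_l\, s_r}{B\,(s_r - s_l)}
```

In words, offloading pays off only when the job's operations per byte exceed a threshold set by the link bandwidth and the speed gap between the devices; a faster link or a larger speed gap lowers that bar.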

Apple could vary the M chips used to support different mixes of CPU/NPU/GPU work.

It sounds like what you're describing would also lend itself to a "stack-of-minis" approach to provide additional compute resources as needed, i.e., compute elasticity. At one point, before the Mac Studio came out, I really thought that Apple might do something like this with the Mx Mac mini. It sounds like Apple is proposing an architecture that would let end users set up their own ad hoc system for managing not only compute elasticity but also functional elasticity, by using a heterogeneous mix of computers. It sounds very compelling.