Child safety watchdog accuses Apple of hiding real CSAM figures

A child protection organization says it has found more cases of abuse images on Apple platforms in the UK than Apple has reported globally.

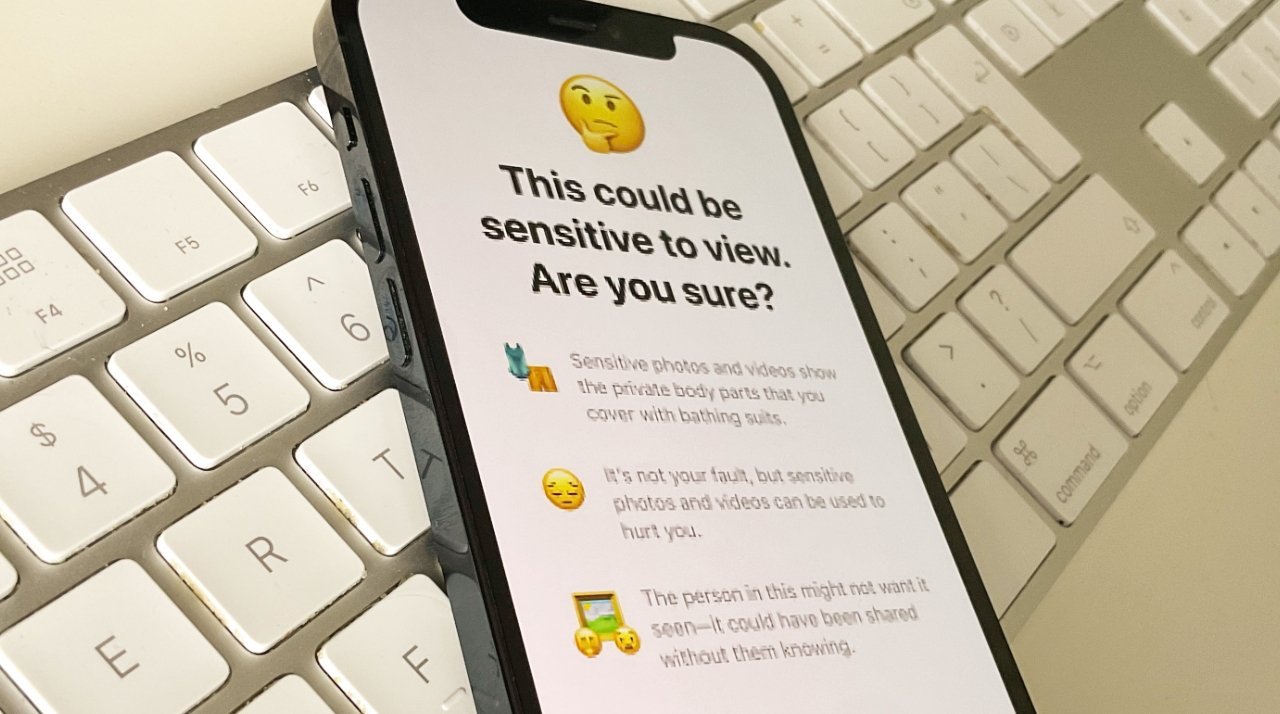

Apple cancelled its major CSAM proposals but introduced features such as automatic blocking of nudity sent to children

Apple abandoned its plans for Child Sexual Abuse Material (CSAM) detection following allegations that it would ultimately be used for surveillance of all users. The company switched to a set of features it calls Communication Safety, which includes the feature that blurs nude photos sent to children.

According to The Guardian newspaper, the UK's National Society for the Prevention of Cruelty to Children (NSPCC) says Apple is vastly undercounting incidents of CSAM in services such as iCloud, FaceTime and iMessage. All US technology firms are required to report detected cases of CSAM to the National Center for Missing & Exploited Children (NCMEC), and in 2023, Apple made 267 reports.

Those reports covered CSAM detected globally. Yet the NSPCC has independently found that Apple was implicated in 337 recorded offenses between April 2022 and March 2023, in England and Wales alone.

"There is a concerning discrepancy between the number of UK child abuse image crimes taking place on Apple's services and the almost negligible number of global reports of abuse content they make to authorities," said Richard Collard, head of child safety online policy at the NSPCC. "Apple is clearly behind many of their peers in tackling child sexual abuse when all tech firms should be investing in safety and preparing for the roll out of the Online Safety Act in the UK."

By comparison, Google reported 1,470,958 cases in 2023. In the same period, Meta reported 17,838,422 cases on Facebook and 11,430,007 on Instagram.

Apple is unable to see the contents of users' iMessages because the service is encrypted. However, the NCMEC notes that Meta's WhatsApp is also encrypted, yet Meta reported 1,389,618 suspected CSAM cases from it in 2023.

In response to the allegations, Apple reportedly referred The Guardian only to its previous statements about overall user privacy.

Some child abuse experts are also reportedly concerned about AI-generated CSAM. Apple's forthcoming Apple Intelligence will not create photorealistic images.

Read on AppleInsider

Comments

That is not an excuse to go full draconian and spy on the law abiding good people.

No reporting system is perfect. There are likely more cases than reported, just like with other evil things.

But don't try to turn that into a movement to treat the good guys like criminals, losing our freedoms, privacy, and dignity in the process.

Of note, PatentlyApple has an expanded viewpoint.

https://www.patentlyapple.com/2023/06/apple-was-once-a-leader-in-scanning-message-apps-for-child-pornographic-images-now-joins-a-group-to-protect-encryption-ov.html

A. User decides whether or not they want to use iCloud for backup

B. User decides whether or not they agree to Apple's terms of use for iCloud backup

C. User decides which apps have files uploaded to iCloud

D. Per Apple terms of use, files from those apps can be scanned for illegal content

Those steps wouldn't have changed with on-device scanning, AND the files scanned wouldn't have changed with on-device scanning either. The "controversy" surrounding that method was always inane. There was no change in the level of surveillance at all. The user always had complete control over what would be subject to scanning.

iCloud?

From a related article:

"Almost all cloud services routinely scan for the digital fingerprints of known CSAM materials in customer uploads, but Apple does not.

The company cites privacy as the reason for this, and back in 2021 announced plans for a privacy-respecting system for on-device scanning. However, these leaked ahead of time, and fallout over the potential for abuse by repressive governments... led to the company first postponing and then abandoning plans for this.

As we’ve noted at the time, an attempt to strike a balance between privacy and public responsibility ended up badly backfiring.

If Apple had instead simply carried out the same routine scanning of uploads used by other companies, there would probably have been little to no fuss. But doing so now would again turn the issue into headline news. The company really is stuck in a no-win situation here."

https://www.apple.com/legal/internet-services/icloud/

You agree that you will NOT use the Service to:

a. upload, download, post, email, transmit, store, share, import or otherwise make available any Content that is unlawful, harassing, threatening, harmful, tortious, defamatory, libelous, abusive, violent, obscene, vulgar, invasive of another’s privacy, hateful, racially or ethnically offensive, or otherwise objectionable;

----

C. Removal of Content

You acknowledge that Apple is not responsible or liable in any way for any Content provided by others and has no duty to screen such Content. However, Apple reserves the right at all times to determine whether Content is appropriate and in compliance with this Agreement, and may screen, move, refuse, modify and/or remove Content at any time, without prior notice and in its sole discretion, if such Content is found to be in violation of this Agreement or is otherwise objectionable.

The reality is that Apple's iCloud terms already give them the right to scan files. You can't use the service without agreeing to their terms. As shown above, their terms include the right to screen files for unlawful content. CSAM is unlawful. And note that they don't have to give notice to do it. They can scan for CSAM any time they choose. They don't have to make public statements about changing their position, etc.

Your idea is exactly what Apple was attempting to implement, but there was too much pushback from ignorant folks that saw "risks" that didn't exist.

Apple's approach was safe and secure, and would have been very effective. Being proactive (prevention) is always better than being reactive.