Apple accused of using privacy to excuse ignoring child abuse material on iCloud

A proposed class action suit claims that Apple is hiding behind privacy to avoid stopping the storage of child sexual abuse material on iCloud and alleged grooming over iMessage.

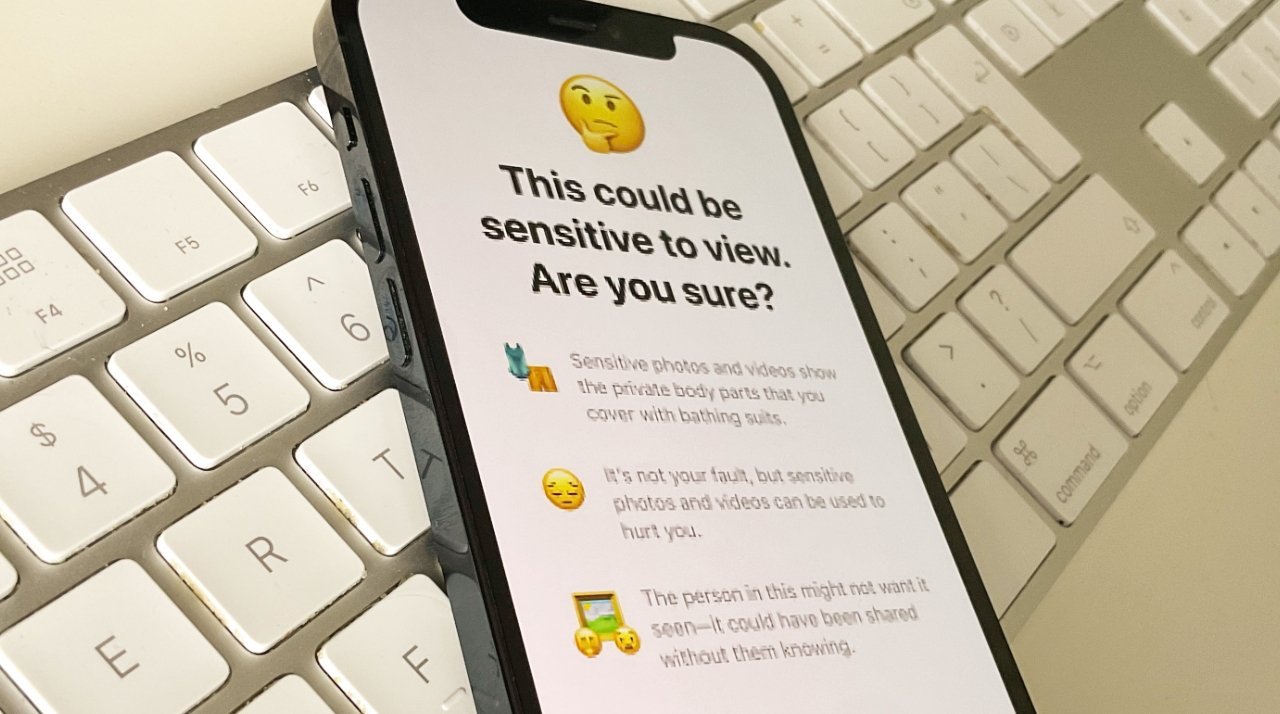

Apple cancelled its major CSAM proposals but introduced features such as automatic blocking of nudity sent to children

Following a UK organization's claims that Apple is vastly underreporting incidents of child sexual abuse material (CSAM), a new proposed class action suit says the company is "privacy-washing" its responsibilities.

A new filing with the US District Court for the Northern District of California has been brought on behalf of an unnamed 9-year-old plaintiff. The complaint, which lists her only as Jane Doe, says that she was coerced into making and uploading CSAM on her iPad.

"When 9-year-old Jane Doe received an iPad for Christmas," says the filing, "she never imagined perpetrators would use it to coerce her to produce and upload child sexual abuse material ("CSAM") to iCloud."

The suit asks for a trial by jury. Damages exceeding $5 million are sought for every person encompassed by the class action.

It also asks in part that Apple be forced to:

- Adopt measures to protect children against the storage and distribution of CSAM on iCloud

- Adopt measures to create easily accessible reporting

- Comply with quarterly third-party monitoring

"This lawsuit alleges that Apple exploits 'privacy' at its own whim," says the filing, "at times emblazoning 'privacy' on city billboards and slick commercials and at other times using privacy as a justification to look the other way while Doe and other children's privacy is utterly trampled on through the proliferation of CSAM on Apple's iCloud."

The child cited in the suit was initially contacted over Snapchat. The conversations were then moved over to iMessage before she was asked to record videos.

Snapchat is not named in the suit as a co-defendant.

Apple did attempt to introduce more stringent CSAM detection tools. But in 2022, it abandoned those following allegations that the same tools would lead to surveillance of any or all users.

Read on AppleInsider

Comments

“Apple accused of using CSAM detection to ignore privacy protection on iCloud”

If you have to know whether a piece of information is lawful or evil, you must look into it, investigate it, examine it, check it, or even compare it to some other information, and none of these activities are supposed to be related to “Privacy”. Not to mention, do we even know what or where the targeted information is? Text? Picture? Video? Voice message? When does it happen?

Scan everything related to iCloud in real time, for all users around the world? How about once a month?

I am not saying that Apple should do nothing, but what should it do, and how?

Find something inside something, but you are not allowed to touch it, or even have a look or a peek….

Even if you use a computer or AI to look for CSAM, it must go through a manual review for the final judgement before it becomes a case. What if it is a picture of someone just standing next to a child mannequin? False alarm, and then “someone's” privacy was exposed. What about a wife recording the father changing a diaper for the very first time? False alarm, but the naked baby was exposed. Then what?

Basically a bunch of politicians told Apple they’d make their lives hell if they protected kids from the politicians’ donors.