Geekbench launches new AI benchmarking tool for macOS and iOS

Following months of beta testing, the newly renamed Geekbench AI 1.0 is now available, with the aim of letting users make comparable measurements of artificial intelligence performance across iOS, macOS, and more.

Geekbench AI

Originally released in beta form under the name Geekbench ML -- for Machine Learning -- in December 2023, the tool allowed for comparisons between the Mac and the iPhone. Now as Geekbench AI, the makers claim to have radically increased the app's ability to usefully measure and gauge performance.

"With the 1.0 release, we think Geekbench Al has reached a level of dependability and reliability that allows developers to confidently integrate it into their workflows," wrote the company in a blog post, "[and] many big names like Samsung and Nvidia are already using it."

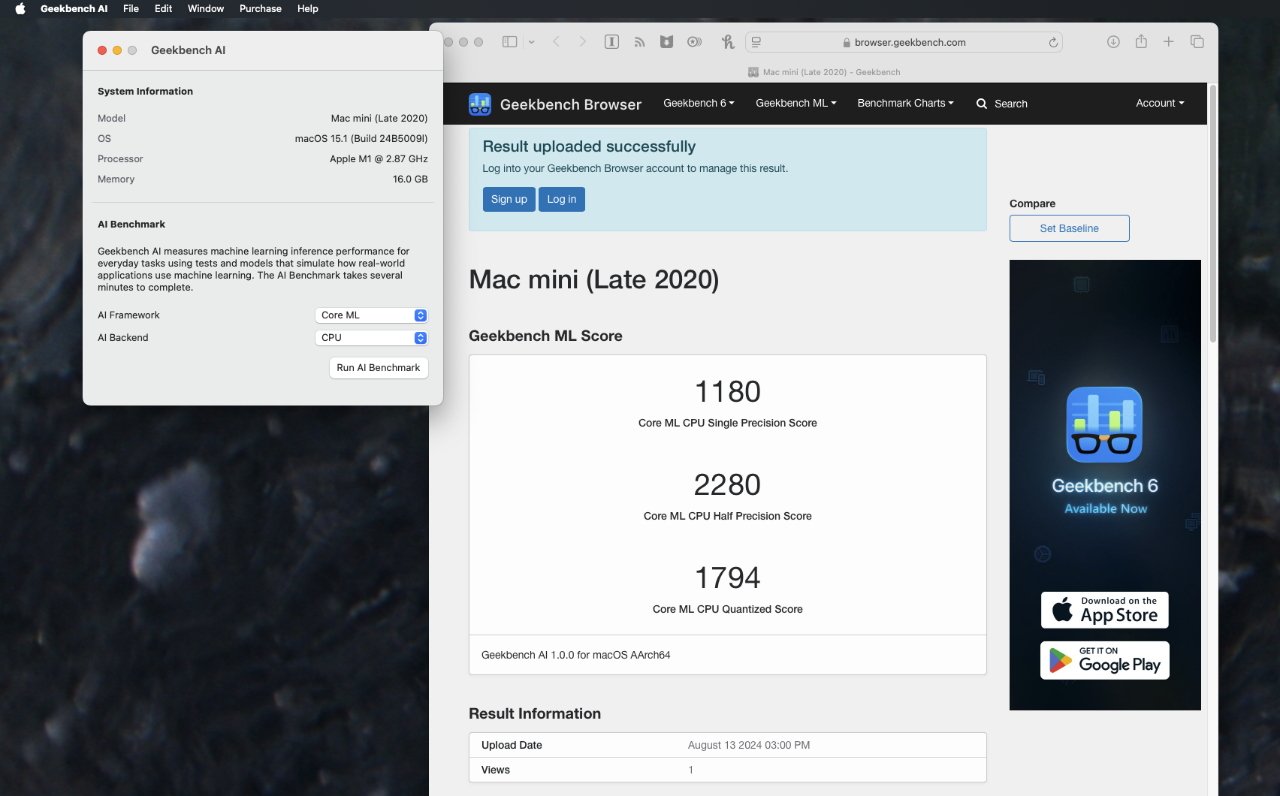

Running Geekbench AI on a Mac mini

The regular Geekbench has long provided separate scores for single-core and multi-core performance of devices. In the case of Geekbench AI, it measures against three different types of workload.

"Geekbench AI presents its summary for a range of workload tests accomplished with single-precision data, half-precision data, and quantized data," continues the company, "covering a variety used by developers in terms of both precision and purpose in AI systems."

The firm stresses that the intention is to produce comparable measurements that reflect real-world use of AI -- as wide-ranging as that is.
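To make the three data types mentioned above more concrete, here is a minimal sketch of how the same value loses accuracy as it moves from single precision to half precision to an 8-bit quantized form. It is only an illustration and is not how Geekbench AI itself works. The function names, the sample weight, and the symmetric scale of 1/127 are all assumptions chosen for the example.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a Python float in the given struct format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def quantize_int8(value: float, scale: float) -> int:
    """Map a float to a signed 8-bit integer, clamping to [-128, 127]."""
    q = round(value / scale)
    return max(-128, min(127, q))


def dequantize_int8(q: int, scale: float) -> float:
    """Recover an approximate float from the 8-bit integer."""
    return q * scale


# Hypothetical model weight, assumed to lie in [-1, 1].
weight = 0.1234567

single = round_trip(weight, "<f")  # 32-bit IEEE 754 float
half = round_trip(weight, "<e")    # 16-bit IEEE 754 float

scale = 1.0 / 127                  # assumed symmetric quantization scale
quantized = dequantize_int8(quantize_int8(weight, scale), scale)

# Absolute error introduced by each representation.
errors = {
    "single": abs(single - weight),
    "half": abs(half - weight),
    "quantized": abs(quantized - weight),
}

for name, err in errors.items():
    print(f"{name:>9}: error {err:.2e}")
```

Lower precision generally means less memory and faster arithmetic on suitable hardware, at the cost of a larger rounding error, which is one reason a benchmark might report the three cases separately.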

Geekbench AI 1.0 is available direct from the developer. As with previous Geekbench releases, the tool is free for most users, and it runs on macOS, iOS, Android and Windows.

There is a paid version called Geekbench AI Pro. It allows developers to keep their scores private instead of automatically uploading to a public site.


Comments

Happy to be corrected, but I take this to mean that Apple hardware is good for on-device inference but crappy for model training.

I wonder how much of the issue is CoreML needing more optimization versus Apple needing beefier GPUs...

The fact that current Apple hardware (which is consumer targeted) isn't optimized for model training comes as no surprise.

This just leaves many questions and perhaps some of them will be answered in the future. My hunch is that Apple will eventually provide developers some sort of access to cloud servers that have more hardware resources for model training. There's also a small possibility that Apple could release some sort of add-in-board AI accelerator for the Mac Pro that is better suited for model training.

But for sure, Apple won't prioritize model training on their regular MacBook and mobile offerings.

I ran the benchmark on one of my Windows PCs equipped with a GeForce RTX 3060 12GB graphics card. That GPU had nearly double the performance of my M2 Pro for single precision and 50% better performance at half precision (compared to the Mac's Neural Engine). Now the 3060 is a low-end card from the Ampere generation of GPUs, so I'd expect even better performance from higher-end models or those from the Ada Lovelace generation.

All that said, benchmarks are generally a poor barometer of real-world performance. Since iOS 18/Sequoia isn't even officially released, it will be some time until we see whether or not the Geekbench AI benchmark reflects actual performance in any sort of meaningful way. In the same way, there aren't many consumer AI applications running locally on typical PCs yet, so running the Geekbench benchmark on Windows PCs doesn't tell us much yet either.

So much of the usefulness is unleashed from the software (Apple considers itself a software company first) so just benchmarking hardware using synthetic tests might be extremely marginal in terms of usefulness or accuracy vis-a-vis real world usage.

https://browser.geekbench.com/ai/v1/6589

https://browser.geekbench.com/ai/v1/8699

and the M3 Max can support 128GB of memory, Ultra (M4) will likely support 256GB. M3 Max is roughly equivalent to a 4070 laptop GPU.

M2 Max to Ultra doesn't seem to scale well for the test:

https://browser.geekbench.com/ai/v1/10284

https://browser.geekbench.com/ai/v1/3502

but M4 Ultra might just use a bigger GPU that will scale better.

Super interesting point/question about a Mac Pro potentially having an Apple-designed AI board. Also interesting to consider if they'd sell that or only make it available through the cloud. It really seems like they ought to both sell an AI board for the Mac Pro AND make the same thing available through the cloud to provide the Apple ecosystem with maximum flexibility and make it as competitive as possible with other ecosystems. But we shall see...